Want to understand Docker architecture and how it works in detail? Let’s dive in!

The importance of Docker in DevOps is undeniable! Its functionality rests on a well-designed architecture. Docker is resource-efficient: instead of virtualizing an entire hardware server, it runs applications in containers that share the host's operating system kernel. All of the dependencies an application needs are bundled within its container, which makes Docker a portable way to develop and ship applications. It also makes it easy for developers to move an app between the development, testing, and production phases.

Docker has a uniform architecture that supports the modern DevOps approach to delivering software. Several components work together within the Docker platform to make this architecture function seamlessly. Before you build your applications on Docker, it is important to understand this architecture, so take a look at this detailed guide to Docker Architecture.

Learn about Docker Engine and its Components

The first step toward understanding Docker architecture is to understand Docker Engine. Its components are responsible for making the entire system work, and it is the engine that lets you develop, assemble, ship, and run applications.

The components of Docker Engine are as follows:

1. Docker Daemon

It is a background process that manages Docker containers, images, storage, volumes, and networks. The Docker daemon listens for Docker API requests and processes them.

2. Docker Engine REST API

As the name suggests, this is the API that applications use to interact with the Docker daemon. Any HTTP client can access it. In short, the REST API is how instructions reach the daemon and tell it what to do.
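To make this concrete, here is a minimal Python sketch that sends an HTTP request to the Engine API over a Unix socket using only the standard library. It assumes a Linux host where the daemon listens on the default socket `/var/run/docker.sock` and your user is allowed to access it; `GET /containers/json` is the Engine API endpoint behind `docker ps`. The names `UnixHTTPConnection` and `engine_get` are our own, not part of any Docker library.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # "localhost" only fills the Host header; socket_path decides where we connect.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def engine_get(path: str, socket_path: str = "/var/run/docker.sock"):
    """Send GET <path> to the daemon and return (HTTP status, decoded JSON body)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        return resp.status, json.loads(resp.read())
    finally:
        conn.close()
```

On a machine running Docker, `engine_get("/containers/json")` returns roughly the data that `docker ps` prints, as a list of dictionaries with keys such as `Id`, `Image`, and `Status`.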

3. Docker CLI

The Command Line Interface is a client that interacts with the Docker daemon; it is where you enter Docker commands. The Docker CLI simplifies managing your container instances, which makes it easier for developers to carry out their work.

You can run Docker on the cloud, on the desktop, and on servers. It is available for macOS, Windows, Linux distributions, Windows Server 2016, AWS, Google Cloud Platform, Azure, IBM Cloud, and others, so it fits a wide range of application development needs. But to understand what the platform has to offer, you need to study its architecture in more detail.

Breakdown of Docker Architecture

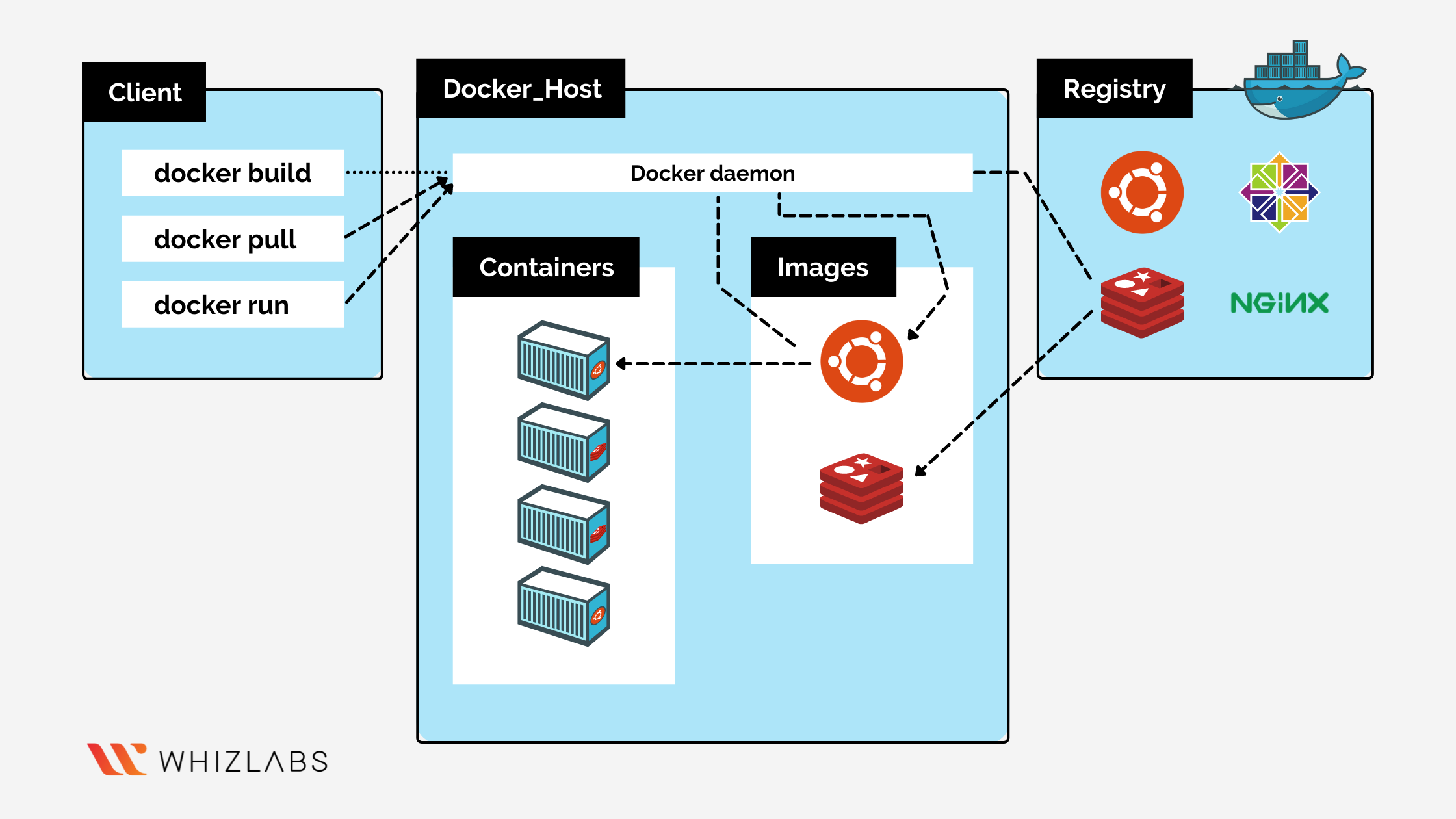

Docker uses a client-server architecture. It comprises four major components: the Docker Client, Docker Registry, Docker Host, and Docker Objects. Each component plays a specific role within the platform, and together they give developers everything they need to carry out their development projects.

1. Docker Client

The Docker Client is the component that lets developers and users interact with the Docker platform. The client can run on the same host as the Docker daemon, or it can connect to a daemon on a remote host. A single Docker client can also communicate with more than one daemon.
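How does the client decide which daemon to talk to? The Docker CLI reads the `DOCKER_HOST` environment variable and, when it is unset, falls back to the local default socket (`unix:///var/run/docker.sock` on Linux). The Python sketch below mimics that choice in simplified form; `resolve_docker_host` is a hypothetical helper, and it deliberately ignores Docker contexts, TLS settings, and the Windows named-pipe default.

```python
from typing import Mapping, Tuple
from urllib.parse import urlparse

DEFAULT_LINUX_HOST = "unix:///var/run/docker.sock"


def resolve_docker_host(env: Mapping[str, str]) -> Tuple[str, str]:
    """Return (scheme, address) of the daemon the client would contact.

    Simplified: only DOCKER_HOST and the Linux default socket are considered.
    """
    host = env.get("DOCKER_HOST") or DEFAULT_LINUX_HOST
    parsed = urlparse(host)
    if parsed.scheme == "unix":
        return "unix", parsed.path           # local daemon over a Unix socket
    if parsed.scheme in ("tcp", "ssh"):
        return parsed.scheme, parsed.netloc  # daemon reached over the network
    raise ValueError(f"unsupported DOCKER_HOST scheme: {parsed.scheme!r}")
```

For example, `resolve_docker_host(os.environ)` returns `("unix", "/var/run/docker.sock")` on a default Linux setup, and `("tcp", "192.168.1.10:2376")` once `DOCKER_HOST=tcp://192.168.1.10:2376` points the client at a remote daemon.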

The Docker client also provides the CLI (Command Line Interface), which lets developers send commands such as build, run, and stop for an application to the daemon. Another major purpose of the Docker Client is to pull images from registries and run them on the Docker host.

The most common Docker client commands are docker build, docker pull, and docker run. Whenever you execute a docker command, the client sends it to the Docker daemon, which then carries it out. Under the hood, Docker commands use the Docker API!
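The Python sketch below lists which Engine API requests a few common CLI commands correspond to. The endpoint paths come from the Docker Engine API; the mapping itself is a simplified illustration (for example, `docker run` may also pull the image and attach to the container's output), and `CLI_TO_API` and `api_calls_for` are hypothetical names.

```python
from typing import List

# Simplified mapping from CLI verbs to Docker Engine API requests.
# "{id}", "{name}" and "{tag}" are placeholders filled in by the caller.
CLI_TO_API = {
    "ps":     ["GET /containers/json"],
    "images": ["GET /images/json"],
    "pull":   ["POST /images/create?fromImage={name}&tag={tag}"],  # the CLI defaults tag to "latest"
    "build":  ["POST /build"],
    "push":   ["POST /images/{name}/push"],
    # run = create, then start; the id is the one returned by the create call
    "run":    ["POST /containers/create", "POST /containers/{id}/start"],
    "stop":   ["POST /containers/{id}/stop"],
    "rm":     ["DELETE /containers/{id}"],
}


def api_calls_for(command: str, **params: str) -> List[str]:
    """Return the API requests that `docker <command>` roughly corresponds to."""
    try:
        templates = CLI_TO_API[command]
    except KeyError:
        raise ValueError(f"no mapping for 'docker {command}'") from None
    return [t.format(**params) for t in templates]
```

For instance, `api_calls_for("run", id="abc")` yields the create request followed by `POST /containers/abc/start`, which is why a `docker run` is really two steps on the daemon side.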

2. Docker Registries

A Docker registry is where Docker images are stored. Registries can be public or private, and developers can set up their own private registries. Docker Hub is the platform's default registry: it is public, accessible to everyone, and hosts a large stock of Docker images.

The docker pull and docker run commands both need an image: docker pull fetches it from the configured registry, and docker run does the same if the image is not already available locally. When you execute the docker push command, the image is stored in the configured registry.
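Which registry is "the configured registry"? For a name without a registry host, Docker falls back to Docker Hub (`docker.io`), places single-name official images under `library/`, and assumes the tag `latest`. The sketch below applies those rules; it is a simplified reimplementation for illustration (no digests, no validation), not Docker's own parser, and `ImageRef` and `normalize` are hypothetical names.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def normalize(ref: str) -> ImageRef:
    """Expand a short image name the way Docker does (simplified: no digests)."""
    # The tag is whatever follows the last ':' *after* the last '/', so a
    # registry port such as "localhost:5000/app" is not mistaken for a tag.
    name, tag = ref, "latest"
    last_slash = ref.rfind("/")
    colon = ref.rfind(":")
    if colon > last_slash:
        name, tag = ref[:colon], ref[colon + 1:]

    first, _, rest = name.partition("/")
    # A first component containing '.' or ':' (or equal to "localhost") is a registry host.
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = "docker.io", name
        if "/" not in repository:
            repository = "library/" + repository  # official images live under library/
    return ImageRef(registry, repository, tag)
```

So `docker pull nginx` is really a pull of `docker.io/library/nginx:latest`, while `localhost:5000/team/app` goes to a private registry on port 5000.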

The Docker Registry is a highly scalable, stateless server-side application that stores and distributes Docker images. Running your own registry is useful if you want tight control over where your images are stored, and it lets you fully own your image distribution pipeline.
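A registry, whether Docker Hub or one you run yourself, serves images over the Registry HTTP API V2. The sketch below only builds the URLs for two read operations, listing a repository's tags and fetching a manifest; `registry.example.com` is a placeholder host, the function names are hypothetical, and real requests usually also need an authentication token and an `Accept` header naming the manifest media type.

```python
def tags_list_url(registry: str, repository: str) -> str:
    """URL that lists all tags of a repository (GET /v2/<name>/tags/list)."""
    return f"https://{registry}/v2/{repository}/tags/list"


def manifest_url(registry: str, repository: str, reference: str) -> str:
    """URL of a manifest (GET /v2/<name>/manifests/<reference>).

    reference is either a tag such as "1.2" or a content digest.
    """
    return f"https://{registry}/v2/{repository}/manifests/{reference}"
```

Because every image is addressed this way by repository name plus tag or digest, the registry itself can stay stateless and scale horizontally behind a load balancer.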

A registry also lets you integrate image storage and distribution into your in-house development workflow. Users who want a ready-made, zero-maintenance solution are better served by Docker Hub, a hosted registry that adds features such as organization accounts and automated builds.

3. Docker Host

The Docker Host provides the environment in which applications are run and executed. It comprises the Docker daemon, images, containers, networks, and storage. Host networking is one option on the Docker Host that behaves differently from the others: when you use the host network mode for a container, the container’s network stack is not isolated from the Docker Host.

The container also does not get its own IP address. For instance, if a container that binds to port 80 uses host networking, the application inside it is available on port 80 at the host's IP address. The host networking driver works only on Linux hosts; it is not supported on Docker Desktop for Mac and Windows, nor on Docker EE for Windows Server.
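The contrast between host networking and the default bridge can be captured in a small toy model. This is not Docker code: `reachable_at` is a hypothetical function that encodes the rules above, where a host-networked container binds directly to the host's ports, while a bridged container gets its own IP and is reachable from outside the host only through ports published with `-p HOST:CONTAINER`.

```python
from typing import Dict, Optional


def reachable_at(mode: str, host_ip: str, container_port: int,
                 published: Optional[Dict[int, int]] = None) -> Optional[str]:
    """Return the address:port an outside client would use, or None if unreachable.

    mode is "host" or "bridge"; published maps container ports to host ports,
    mirroring `-p HOST:CONTAINER`.
    """
    if mode == "host":
        # No separate network stack: the app's port *is* a host port.
        return f"{host_ip}:{container_port}"
    if mode == "bridge":
        published = published or {}
        if container_port in published:
            return f"{host_ip}:{published[container_port]}"
        # Unpublished: only reachable via the container's bridge IP (host or other containers).
        return None
    raise ValueError(f"unknown network mode: {mode!r}")
```

A web server on port 80 is therefore at `host_ip:80` under host networking, but needs something like `-p 8080:80` on a bridge network to be reached at `host_ip:8080`.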

4. Docker Objects

Docker objects are the building blocks used to assemble and run your application. Here is a brief explanation of the different Docker objects and their functions:

- Images

Docker images are read-only templates with instructions for creating containers. An image also contains metadata that describes the container's capabilities and needs. You can use images to store and ship applications.

An image can be used as-is to create a container, or you can add layers on top of it to customize and extend its configuration. You can share images with your team by storing them in a private container registry, or with the world by storing them in a public registry such as Docker Hub.

Images make it easy for developers to collaborate around Docker containers, which improves the overall developer experience. You can pull an image from a public or private registry and use it without any modifications, or modify it to suit your needs.

You can create your own images in two ways. The usual way is to write a Dockerfile containing all the instructions needed to assemble the image and then build it with docker build. Alternatively, you can start a container, make your changes inside it, and, once it works as intended, save its current state as a new image with docker commit. Either way, your custom image is created!

All of the layers in an image are read-only; when you start a container, Docker adds a thin writable layer on top of them. Remember that every time you edit a Dockerfile and rebuild the image, Docker reuses the cached layers for the unchanged instructions and rebuilds only from the first changed instruction onward.
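Build caching works instruction by instruction: a layer is reused only if its instruction and everything before it are unchanged, so an edit invalidates that layer and every layer after it. The Python sketch below models this with a hash chain. It is a simplification (the real builder also checksums the files copied by `COPY` and `ADD`), and `build` is a hypothetical function, not Docker's builder.

```python
import hashlib
from typing import Dict, List, Tuple


def build(instructions: List[str], cache: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Simulate a cached image build.

    Returns (layer_ids, statuses), where each status is "CACHED" or "BUILT".
    `cache` maps a cache key to a previously built layer id and is updated in place.
    """
    parent = ""  # the first instruction has no parent layer
    layer_ids: List[str] = []
    statuses: List[str] = []
    for instr in instructions:
        # The key depends on the parent layer *and* the instruction text,
        # so a change early on changes every key after it.
        key = hashlib.sha256((parent + "\n" + instr).encode()).hexdigest()
        if key in cache:
            statuses.append("CACHED")
        else:
            cache[key] = key[:12]  # pretend the new layer id is derived from the key
            statuses.append("BUILT")
        parent = cache[key]
        layer_ids.append(parent)
    return layer_ids, statuses
```

Rebuilding the same instruction list reports every step as `CACHED`; changing only the third of four instructions reports the first two as `CACHED` and the last two as `BUILT`. This is why Dockerfiles usually put rarely changing steps, such as installing dependencies, before frequently changing ones, such as copying source code.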

- Containers

Containers are the capsules in which applications run. A container provides an isolated environment for an application to execute in, and it is defined by its image plus the configuration options you provide when you create or start it.

A container is not tied to any particular storage option or network connection. By default, it has access only to the resources defined in its image, but you can grant it additional access, such as volumes or network connections, when you create the container.

You can also create a new image from a container's current state. Containers are more lightweight than virtual machines, so they can be spun up in a very short time and more of them fit on a single server, which results in higher server density.

You can use the CLI or the Docker API to start, stop, and delete containers. A container is a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably.
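Start, stop, and delete move a container through a small set of states. The sketch below is a toy state machine: `Container` and the `TRANSITIONS` table are our own names, and real Docker has more states (for example paused and restarting). Its rules mirror common CLI behavior, such as a running container having to be stopped before `docker rm` removes it without `--force`.

```python
# Allowed transitions: (current state, action) -> new state.
TRANSITIONS = {
    ("created", "start"): "running",
    ("running", "stop"): "exited",
    ("exited", "start"): "running",
    ("created", "rm"): "removed",
    ("exited", "rm"): "removed",
}


class Container:
    """Toy model of a container's lifecycle (not Docker's implementation)."""

    def __init__(self, image: str):
        self.image = image
        self.state = "created"  # the state `docker create` leaves a container in

    def apply(self, action: str) -> str:
        """Perform an action such as "start", "stop" or "rm" and return the new state."""
        key = (self.state, action)
        if key not in TRANSITIONS:
            raise RuntimeError(f"cannot {action} a container that is {self.state}")
        self.state = TRANSITIONS[key]
        return self.state
```

Walking one container through `start`, `stop`, and `rm` ends in `removed`, while trying `rm` straight after `start` raises an error, just as the CLI refuses to remove a running container by default.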

- Networking

Docker takes an application-driven approach to networking, offering several options while keeping the details abstracted away from developers. There are two types of Docker networks: default networks and user-defined networks.

When you install Docker, you get three default networks: none, bridge, and host. The none and host networks are part of Docker's network stack, while the default bridge network is created with an IP subnet and gateway assigned automatically.

All containers on the default bridge network can talk to one another using IP addresses. Because this approach does not scale well, developers generally avoid the default bridge; it also has limitations in service discovery and usability.
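On a typical Linux installation, the default bridge (`docker0`) uses the subnet `172.17.0.0/16` with the gateway `172.17.0.1`, although both are configurable. The sketch below uses Python's `ipaddress` module to hand out sequential addresses from that subnet while reserving the gateway, roughly what you see on a freshly started daemon; `BridgeIPAM` is a hypothetical class, not Docker's allocator.

```python
import ipaddress
from typing import Dict


class BridgeIPAM:
    """Hands out container IPs from a bridge subnet, reserving the first host for the gateway."""

    def __init__(self, subnet: str = "172.17.0.0/16"):
        self.network = ipaddress.ip_network(subnet)
        hosts = iter(self.network.hosts())  # usable addresses (no network/broadcast address)
        self.gateway = next(hosts)          # first usable address goes to the bridge itself
        self._free = hosts                  # remaining addresses, in order
        self.assigned: Dict[str, ipaddress.IPv4Address] = {}

    def allocate(self, container: str) -> ipaddress.IPv4Address:
        ip = next(self._free, None)
        if ip is None:
            raise RuntimeError(f"subnet {self.network} is exhausted")
        self.assigned[container] = ip
        return ip
```

With the defaults, the first container gets `172.17.0.2` and the next `172.17.0.3`. Containers that talk to each other must know these addresses, which can change when containers are recreated; that is part of why the default bridge is awkward at scale.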

The second type is the user-defined network, and administrators can configure as many of these as they need. Common types of user-defined networks are bridge, overlay, and Macvlan networks. The key difference between the default bridge and a user-defined bridge is name resolution: on a user-defined bridge, containers can reach one another by container name through Docker's embedded DNS, while on the default bridge they have to use IP addresses.
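One practical difference between the default bridge and a user-defined network is name resolution: on a user-defined network, Docker's embedded DNS server resolves container names, while on the default bridge a lookup by name fails and containers fall back to IP addresses. The toy model below captures just that rule; `Network` and `resolve` are hypothetical names for illustration only.

```python
from typing import Dict, Optional


class Network:
    """Toy model of name resolution on a Docker network."""

    def __init__(self, name: str, user_defined: bool):
        self.name = name
        self.user_defined = user_defined
        self.members: Dict[str, str] = {}  # container name -> IP address

    def connect(self, container: str, ip: str) -> None:
        self.members[container] = ip

    def resolve(self, target: str) -> Optional[str]:
        """What a container on this network gets when it looks up `target`."""
        if target in self.members.values():
            return target  # a plain IP address of a member always works
        if self.user_defined:
            return self.members.get(target)  # embedded DNS knows container names
        return None  # default bridge: no automatic name resolution
```

With the real CLI, the equivalent experiment is `docker network create app-net` followed by starting containers with `docker run --network app-net --name db ...`, after which other containers on `app-net` can reach the database simply as `db`.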

An overlay network lets containers running on different hosts communicate with one another. A Macvlan network, on the other hand, assigns each container its own MAC address so that it appears as a physical device on the network, which removes the bridge that sits between the container and the host in bridge and overlay networks.

- Storage

The last object is storage! You can store data in a container's writable layer, but this requires a storage driver, and the data is not persistent: it is lost when the container is removed. For persistent storage, Docker offers a few options:

- Data Volumes

It lets you create persistent storage as named volumes, list the volumes, and list the containers associated with each volume.

- Data Volume Container

A data volume container is an alternative approach in which a dedicated container hosts a volume and mounts it into other containers.

- Directory Mounts

You can mount a local directory of the host into a container. Unlike volumes, which must live inside Docker's own volumes folder, a directory mount can use any directory on the host as its source.

- Storage Plugins

This storage option lets you connect to external storage platforms.
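The volume and directory-mount options above map onto the `--mount` flag of `docker run`. The Python sketch below only assembles the argument list and does not run Docker; the volume name `app-data`, the host paths, and the `nginx` image are placeholders, and `mount_arg` and `run_command` are hypothetical helpers. On a machine with Docker installed, the resulting list could be passed to `subprocess.run`.

```python
from typing import List


def mount_arg(kind: str, source: str, target: str, readonly: bool = False) -> str:
    """Build the value of one --mount flag; kind is "volume" or "bind"."""
    if kind not in ("volume", "bind"):
        raise ValueError("kind must be 'volume' or 'bind'")
    if kind == "bind" and not source.startswith("/"):
        # Bind mounts take an absolute host path (Linux-style check only).
        raise ValueError("bind mount source must be an absolute host path")
    value = f"type={kind},source={source},target={target}"
    return (value + ",readonly") if readonly else value


def run_command(image: str, mounts: List[str]) -> List[str]:
    """Assemble a detached `docker run` argument list with the given --mount values."""
    args = ["docker", "run", "-d"]
    for m in mounts:
        args += ["--mount", m]
    return args + [image]
```

For example, mounting the named volume `app-data` at `/data` plus a read-only bind mount of `/srv/site` gives two `--mount` flags ahead of the image name. The named volume outlives the container: after `docker rm`, `docker volume ls` still lists `app-data` until you delete it with `docker volume rm`.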

Final Words

Understanding Docker architecture helps you realize its full potential for developing your organization's applications. With this article, you are now familiar with the components of Docker architecture and how they are used, and you can see why the platform is so popular among developers.

Docker simplifies infrastructure management by providing faster, lighter instances. It separates the application layer from the infrastructure layer, which improves collaboration, control, and portability in application delivery.