Docker is an open platform for developing, shipping, and running applications. It lets you separate your applications from your infrastructure so that you can deliver software quickly. Put simply, Docker is a platform for containerized applications.

A containerization platform lets developers package applications into containers. Containers simplify the delivery of distributed applications. Docker is gaining popularity among developers as more organizations shift to cloud-native or hybrid multi-cloud environments.

Before applying Docker to your own applications, it helps to understand what it is. This guide gives a brief definition of the technology and then moves on to how the platform works.

Overview of the Docker Platform

Developers can create containers without Docker, but the platform makes it much easier to build, deploy, and manage them. It is essentially a toolkit for controlling and managing containers, and it does not require complex commands.

Simple commands and some automation through an API are sufficient. Docker is also built around a collaborative culture that brings developers together, which is why Docker and its community are often described as central to modern application development.

A container is a loosely isolated environment, and that isolation is its main strength. The isolation and security allow you to run many containers at the same time on a given host. A container holds everything needed to run an application. Containers are shareable and behave the same way wherever they run. They are also lightweight, resource efficient, and good for developer productivity.

Working Purpose of Implementing Docker

The purpose of Docker is to help you manage the container lifecycle. You develop your application using containers, and the container becomes the unit for testing and distributing the application. When you are ready, you deploy the application to production. This works the same whether your infrastructure runs in a data center, with a cloud provider, or in a hybrid of the two.

Docker is used to push the application into a test environment for manual and automated tests. When developers find bugs, they can fix them at the development stage. After that, the application can be pushed from the Docker container to the production environment. Docker is also a viable alternative to hypervisor-based virtual machines, so you can use more of your compute capacity to achieve your business goals.

Docker gives developers a connected experience from code to cloud. Whatever the complexity of the app, Docker helps developers build and launch it. Docker provides a CLI-based workflow for building, running, and sharing applications, and that workflow is accessible to developers at any skill level.

You can code and test the application locally and then push it to the development and production stages with Docker. Installation comes as a single package, so you can get started within a few minutes. Docker also lets you push your application to a cloud-based registry so that you can collaborate with other team members.
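The local-to-registry workflow described above can be sketched as a short sequence of Docker CLI commands. The Python snippet below only assembles and prints those commands; it does not run them. The image name `myapp:1.0` and the registry host `registry.example.com` are hypothetical placeholders, not names from this article.

```python
import shlex


def build_and_push_commands(image, registry_repo, context="."):
    """Return the docker CLI commands for a build -> tag -> push workflow.

    `image` is the local name:tag, `registry_repo` is the name the image
    should have in the remote registry, and `context` is the build directory.
    """
    return [
        ["docker", "build", "-t", image, context],  # build the image locally
        ["docker", "tag", image, registry_repo],    # give it a registry-qualified name
        ["docker", "push", registry_repo],          # upload it to the registry
    ]


# Hypothetical names, used only for illustration.
for cmd in build_and_push_commands("myapp:1.0", "registry.example.com/team/myapp:1.0"):
    print(shlex.join(cmd))
```

Each inner list could be passed to `subprocess.run` on a machine where Docker is installed; that step is left out here so the sketch stays runnable anywhere.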


Popularity of Docker

According to published statistics, Docker has been adopted by over 11 million developers, and over 13 billion container image downloads are handled every month. The Docker platform lets you write code locally and share your work with colleagues through containers, so that they can catch up and take the work further. Containers are also a good fit for Continuous Integration and Continuous Delivery (CI/CD) workflows.

Docker is a good choice for developers who need to do more with fewer resources in small and medium deployments. It also suits high-density environments, which makes it versatile across many kinds of developers and applications. Whether you are moving your app from desktop to cloud or the other way around, Docker containers make building and sharing containerized apps straightforward.

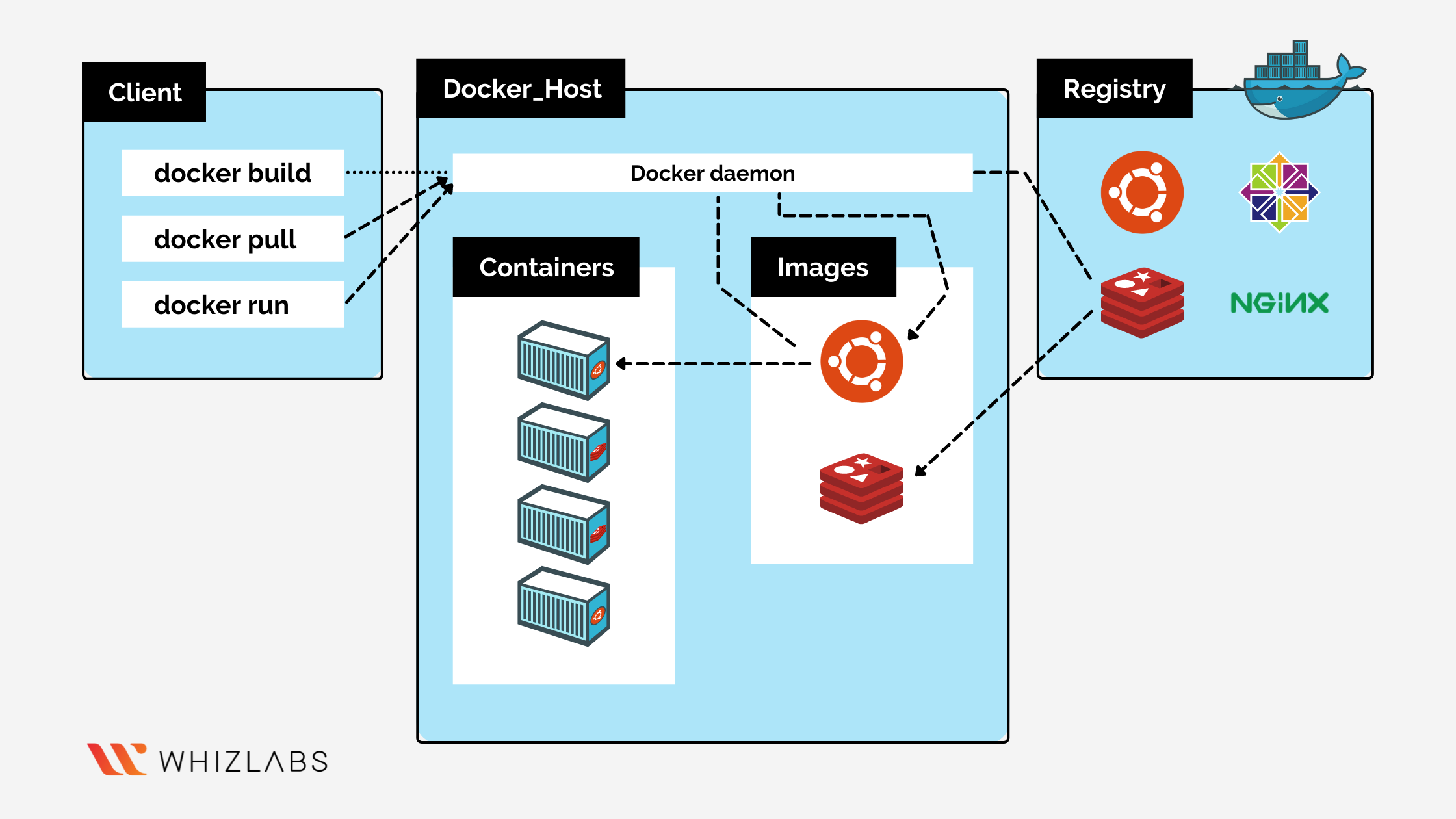

Docker Architecture

Docker uses a client-server architecture. The Docker client talks to the Docker daemon, which does the heavy lifting of building, running, and distributing Docker containers. The Docker client and daemon can run on the same system, or you can connect a Docker client to a remote Docker daemon.

The client and daemon communicate using a REST API, over UNIX sockets or a network interface. Docker Compose is another client, which lets you work with applications made up of a set of containers. Here is a brief explanation of the components of the Docker architecture.

- The Docker daemon: The Docker daemon (dockerd) listens for Docker API requests and manages Docker objects such as images, containers, networks, and volumes. A daemon can also communicate with other daemons to manage Docker services.

- Docker client: The Docker client (docker) is the main way users interact with Docker. When you use a command such as docker run, the client sends it to dockerd, which carries it out.

- Docker registries: A Docker registry stores Docker images. Docker Hub is a public registry that anyone can use, and you can also run your own private registry. When you use commands such as docker pull or docker run, the required images are pulled from your configured registry.

- Docker objects: Docker objects are the things you create and use on the platform, including images, containers, networks, volumes, and plugins.
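To make the client-daemon conversation more concrete, here is a minimal sketch of what a client request might look like at the HTTP level. It assumes the daemon listens on the UNIX socket `/var/run/docker.sock` (a common default on Linux, but your setup may differ) and uses the Docker Engine API endpoint `/containers/json`, which lists running containers. The helper names `build_request` and `send_request` are made up for this example.

```python
import socket

# Common default socket path on Linux; treat it as an assumption.
DOCKER_SOCKET = "/var/run/docker.sock"


def build_request(path):
    """Build a minimal HTTP/1.1 GET request for the Docker Engine API."""
    return (
        f"GET {path} HTTP/1.1\r\n"
        "Host: docker\r\n"
        "Connection: close\r\n"
        "\r\n"
    ).encode("ascii")


def send_request(path, socket_path=DOCKER_SOCKET):
    """Send the request over the daemon's UNIX socket and return the raw reply.

    This only works on a host where a Docker daemon is listening on
    `socket_path` and the current user may access it.
    """
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(socket_path)
        sock.sendall(build_request(path))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the daemon closed the connection
                break
            chunks.append(data)
    return b"".join(chunks)


# Show the request text without contacting any daemon.
print(build_request("/containers/json").decode("ascii"))
```

In practice the docker CLI and the official SDKs handle this exchange for you; calling `send_request("/containers/json")` by hand is only useful for seeing that the client is an ordinary REST consumer.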

Tools, Commands & Terminology Associated with Docker

To understand how Docker is used in practice, you need to know the tools and terminology associated with it. The following tools, commands, and terms are responsible for its functionality:

1. Dockerfile

Every Docker container starts with a simple text file, the Dockerfile, which contains the instructions for building a Docker container image. The Dockerfile automates image creation. It is essentially a list of command-line instructions that Docker Engine runs in order to assemble the image.
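As an illustration of what such a text file contains, the sketch below generates a small Dockerfile from Python. The instructions used (FROM, WORKDIR, COPY, RUN, CMD) are standard Dockerfile instructions; the project itself (a Python app started with `python app.py`, with dependencies listed in `requirements.txt`) is a hypothetical example, and `render_dockerfile` is a helper invented here.

```python
import json


def render_dockerfile(base_image, workdir, install_cmd, start_cmd):
    """Return Dockerfile text for a simple single-stage image.

    `start_cmd` is a list of strings; it is written in the JSON array
    ("exec") form that the CMD instruction accepts.
    """
    lines = [
        f"FROM {base_image}",             # base image to build on
        f"WORKDIR {workdir}",             # working directory inside the image
        "COPY . .",                       # copy the build context into the image
        f"RUN {install_cmd}",             # runs at build time and adds a layer
        f"CMD {json.dumps(start_cmd)}",   # default command when a container starts
    ]
    return "\n".join(lines) + "\n"


# Hypothetical project values, for illustration only.
print(render_dockerfile(
    base_image="python:3.12-slim",
    workdir="/app",
    install_cmd="pip install -r requirements.txt",
    start_cmd=["python", "app.py"],
))
```

Saving the printed text as a file named Dockerfile next to the application code is what lets docker build assemble the image step by step.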

2. Docker Images

Docker images contain executable application source code along with the tools, libraries, and dependencies the code needs to run as a container. When you run a Docker image, it becomes one instance, or multiple instances, of the container. Developers can build several Docker images from a single base image, and those images share the common parts of their stack. Images are made up of layers, and each layer corresponds to a version of the image. Whenever a developer changes the application, a new layer is added to the image.
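The layering idea can be pictured with a toy model. The sketch below is not how Docker stores or hashes layers internally; it simply treats an image as an ordered tuple of layer identifiers, where every change appends one more layer and two images built from the same base share the base layers. The `Image` class and the change descriptions are invented for this example.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Image:
    """Toy model of an image as an immutable stack of layer ids."""

    layers: tuple = ()

    def add_layer(self, change):
        """Return a new image with one extra layer describing `change`."""
        layer_id = hashlib.sha256(change.encode("utf-8")).hexdigest()[:12]
        return Image(self.layers + (layer_id,))


def shared_layers(a, b):
    """Return the leading layers that two images have in common."""
    common = []
    for left, right in zip(a.layers, b.layers):
        if left != right:
            break
        common.append(left)
    return tuple(common)


base = Image().add_layer("base OS files")
app_a = base.add_layer("install app A")
app_b = base.add_layer("install app B")

print("app A layers:", app_a.layers)
print("app B layers:", app_b.layers)
print("shared:", shared_layers(app_a, app_b))
```

Because `app_a` and `app_b` start from the same base, only their top layers differ, which is the property that lets images built from a common base share storage.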

3. Docker Containers

Docker containers are the live, running instances of Docker images. Images are read-only, whereas containers are live, ephemeral, executable content. Users can interact with containers, and administrators can adjust their settings and conditions using Docker commands.

4. Docker Hub

Docker Hub is a public repository of Docker images. It is the largest library and community for container images. It holds over 100,000 container images sourced from open-source projects, software vendors, and individual developers. It includes images produced by Docker, Inc., certified images belonging to the Docker Trusted Registry, and images from many other sources.

5. Docker Registry

Docker Registry is a scalable, open-source storage and distribution system for Docker images. It lets you track image versions in repositories, using tags to identify them.
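To show how tagging identifies an image, here is a simplified sketch that splits an image reference into registry, repository, and tag. It follows the commonly described convention that the first path component is a registry host when it contains a dot or a colon or equals `localhost`, and that a missing tag defaults to `latest`. It is an approximation: it ignores digests (`@sha256:...`) and does not add the `library/` prefix Docker uses for official images. `parse_image_reference` is a name made up for this example.

```python
def parse_image_reference(ref, default_registry="docker.io", default_tag="latest"):
    """Split an image reference into (registry, repository, tag).

    Simplified: digests and other normalization rules are not handled.
    """
    # A colon that appears after the last slash separates the name from the tag;
    # an earlier colon belongs to a registry port such as localhost:5000.
    last_slash = ref.rfind("/")
    colon = ref.rfind(":")
    if colon > last_slash:
        name, tag = ref[:colon], ref[colon + 1:]
    else:
        name, tag = ref, default_tag

    # Decide whether the first path component looks like a registry host.
    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = default_registry, name
    return registry, repository, tag


for ref in ["nginx", "nginx:1.25", "localhost:5000/team/app:1.2"]:
    print(ref, "->", parse_image_reference(ref))
```

The same repository can carry many tags, so pulling `nginx:1.25` and `nginx:latest` may or may not give you the same image depending on what the registry currently maps each tag to.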

6. Docker Run Utility

The docker run command launches a container from an image. Each container is an instance of an image, and multiple instances of the same image can run simultaneously.
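As a rough illustration of running several instances of one image, the sketch below assembles two docker run command lines that differ only in container name and published port. The flags used (`-d`, `--rm`, `--name`, `-p`) are standard docker run options; the container names, ports, and the `docker_run_command` helper are hypothetical, and nothing is executed.

```python
import shlex


def docker_run_command(image, name=None, ports=None, detach=False, remove=False):
    """Return the argument list for a `docker run` invocation.

    `ports` maps host ports to container ports, e.g. {8080: 80}.
    """
    cmd = ["docker", "run"]
    if detach:
        cmd.append("-d")        # run the container in the background
    if remove:
        cmd.append("--rm")      # delete the container when it exits
    if name:
        cmd += ["--name", name]
    for host_port, container_port in (ports or {}).items():
        cmd += ["-p", f"{host_port}:{container_port}"]
    cmd.append(image)           # the image always comes after the options
    return cmd


# Two containers from the same image, published on different host ports.
web1 = docker_run_command("nginx:latest", name="web1", ports={8080: 80}, detach=True)
web2 = docker_run_command("nginx:latest", name="web2", ports={8081: 80}, detach=True)

print(shlex.join(web1))
print(shlex.join(web2))
```

Both commands reference the same read-only image; each run creates its own container with its own writable state.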

7. Docker Desktop

Docker Desktop packages all of these components into a single desktop application. It gives developers a user-friendly interface for building and sharing containerized applications and microservices.

Security Aspects of Docker

In the early years of Docker there was significant concern about container security. Although containers offer strong logical isolation, they still share the operating system of the host. An attack on or flaw in the underlying OS can compromise every container running on it, along with authorization and access controls.

Docker has since adopted several strategies to improve security. Features such as secure node introduction, image scanning, cryptographic node identity, secure secret distribution, and cluster segmentation have been part of Docker for several years, and they give containers on the platform stronger protection.

Docker also works with container scanning tools from Aqua, NeuVector, Twistlock, and others to strengthen security. Developers have their own part to play: avoid exposing container hosts to the internet, and use container images only from reputable sources.

Alternatives to Docker tried to take the limelight by pointing out these security concerns, but Docker responded with several upgrades and security enhancements. Today Docker is regarded as a secure platform for building, deploying, and managing apps, provided it is configured and used with care.

Why are Companies Embracing the Use of Docker Containers?

Containers use a shared operating system, which makes them considerably more efficient than hypervisors. Instead of virtualizing hardware, containers sit on top of a single Linux instance. This means developers can leave behind most of the virtual machine overhead and keep only the small part that contains the application.

Docker changed how applications are delivered by making it lightweight to pack, ship, and run an application as a portable, self-sufficient container that can run virtually anywhere. If application portability is your goal, Docker containers are a good fit.

Many companies are turning to Docker containers because they are designed to fit into most DevOps toolchains. Even on its own, Docker can be used to manage development environments. Companies use Docker in their CI/CD workflows and set up local development environments that match the live server exactly. They can then run multiple environments, each with its own configuration, OS, and software.

Conclusion

The use of containers keeps growing as cloud-native development expands. Cloud-native development is now a major model for building, testing, and running software, and Docker is part of that puzzle. Docker takes on the job of moving application code between environments, such as a developer’s desktop and a server.

The use of Docker containers is increasing because the platform has shifted the attention of developers toward a more efficient way of building apps. Instead of relying on monolithic stacks, teams can use networks of microservices. If you want to streamline your app development, Docker can help.
