This article provides free AZ-204 exam questions that give you a preview of the actual exam and the pattern of questions you may face. The AZ-204: Developing Solutions for Microsoft Azure certification exam validates your ability to design, build, test, and maintain cloud applications and services on Microsoft Azure.

What skills will you gain from the AZ-204 exam on Developing Solutions for Microsoft Azure?

Earning this AZ-204 certification will give you the skills you need to design and implement solutions on Azure. This includes skills such as using Azure tools and services to provision and manage resources, and using Azure to deploy and manage applications.

The AZ-204 exam covers a wide range of topics, and to get certified you will need to demonstrate your skills in all of these areas.

The AZ-204 practice test covers all the topics you need to know for the AZ-204 exam, and it will help you gauge your readiness for the exam. Taking the practice test will also give you a good idea of what to expect on the actual exam.

Domains and Weightage

- Develop Azure compute solutions (25–30%)

- Develop for Azure storage (15–20%)

- Implement Azure security (20–25%)

- Monitor, troubleshoot, and optimize Azure solutions (15–20%)

- Connect to and consume Azure services and third-party services (15–20%)

Why do we provide free questions on AZ-204 exam on Developing Solutions for Microsoft Azure

We provide free questions on AZ-204 exam on Developing Solutions for Microsoft Azure because we want to help people prepare for this important exam. This exam is designed to validate your skills and knowledge in developing solutions for Microsoft Azure, and we want to make sure that you have the best possible chance of passing. We hope that by providing these free questions, you will feel more confident and comfortable when taking the exam.

Ok. Let’s start learning!

Domain : Develop for Azure storage

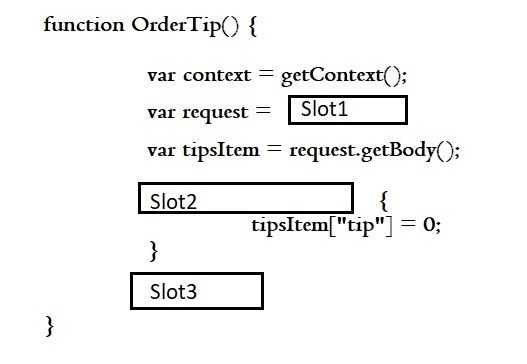

Q1 : A company currently has a web service deployed that is used to take in food orders and deliveries. The web service uses Azure Cosmos DB as the data store.

A new feature is being rolled out that allows users to set a tip amount for orders. The new feature requires each order document in Cosmos DB to have a property named Ordertip, and the property must contain a numeric value.

Some existing websites and web services may not yet have been updated to include the tip feature.

You need to complete the trigger code below for this requirement.

A. this.value();

B. this.readDocument(‘item’);

C. context.getRequest();

D. getContext().getResponse();

Correct Answer: C

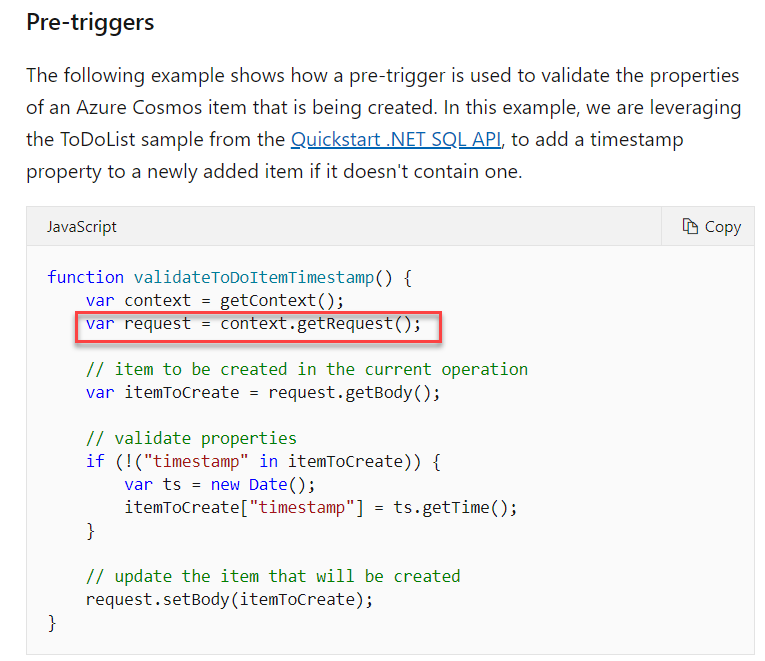

Explanation

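This is a pre-trigger: it runs before the document is written. It first gets the incoming request from the websites and applications that call the web service, reads the document from the request body, adds a tip of 0 if the property is missing, and then writes the updated document back with setBody.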

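The complete trigger code is:

```javascript
// Pre-trigger: runs before the document is created or replaced.
function OrderTip() {
  var context = getContext();
  var request = context.getRequest();

  // The document that is about to be written.
  var tipsItem = request.getBody();

  // Clients that have not been updated do not send a tip, so default it to 0.
  if (!("tip" in tipsItem)) {
    tipsItem["tip"] = 0;
  }

  // Replace the request body with the updated document.
  request.setBody(tipsItem);
}
```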

A similar example is also given in the Microsoft documentation.

Option C is the only option that retrieves the incoming request inside the trigger, so all other options are incorrect.
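To see what the pre-trigger does to incoming documents, the sketch below runs the same logic as the OrderTip trigger against a hand-written stand-in for the Cosmos DB server-side context. makeMockContext, currentContext and runTrigger are hypothetical helpers for illustration, not part of any SDK. In a real account, the trigger is registered as a Pre trigger and must be named in the request options of each create or replace call, because Cosmos DB does not run triggers automatically.

```javascript
// Hypothetical stand-in for the Cosmos DB server-side context (not the real runtime).
function makeMockContext(body) {
  const request = {
    body,
    getBody() { return this.body; },
    setBody(b) { this.body = b; },
  };
  return { getRequest() { return request; } };
}

let currentContext;
function getContext() { return currentContext; }

// Same logic as the OrderTip pre-trigger.
function OrderTip() {
  const context = getContext();
  const request = context.getRequest();
  const tipsItem = request.getBody();
  if (!("tip" in tipsItem)) {
    tipsItem["tip"] = 0; // default for clients that do not send a tip
  }
  request.setBody(tipsItem);
}

// Runs the trigger on one document and returns the body that would be written.
function runTrigger(doc) {
  currentContext = makeMockContext(doc);
  OrderTip();
  return currentContext.getRequest().getBody();
}

console.log(runTrigger({ id: "order-1" }));           // { id: 'order-1', tip: 0 }
console.log(runTrigger({ id: "order-2", tip: 2.5 })); // { id: 'order-2', tip: 2.5 }
```

Documents that already carry a tip pass through unchanged, which is what keeps older and newer clients compatible.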

For more information on using triggers for Cosmos DB, please visit the following URL: https://docs.microsoft.com/en-us/azure/cosmos-db/how-to-write-stored-procedures-triggers-udfs#triggers

Domain : Implement Azure security

Q2 : You have to develop an ASP.Net Core application. The application is used to work with blobs in an Azure storage account. The application authenticates via Azure AD credentials.

Role-based access control (RBAC) has been configured on the containers that hold the blobs, and the roles have been assigned to the users.

You have to configure the application so that the user’s permissions can be used with the Azure Blob containers.

What type of permission must be used for the Microsoft Graph API?

A. Application

B. Primary

C. Delegated

D. Secondary

Correct Answer: C

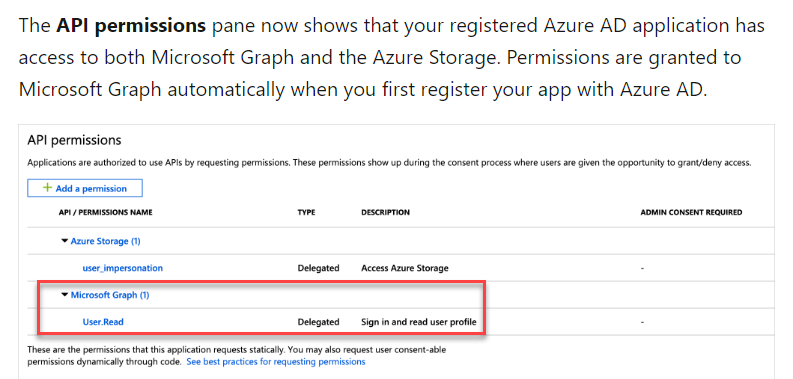

Explanation

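For the Microsoft Graph API, we need to use the Delegated permission type. Delegated permissions let the application act on behalf of the signed-in user, so the role assignments on the blob containers are evaluated against that user's identity. Application permissions, by contrast, run as the application itself with no signed-in user. This is also given in the Microsoft documentation.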

Since this is clearly given in the documentation, all other options are incorrect
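As a rough sketch of what "delegated" means on the wire, the function below builds a Microsoft identity platform authorization-code request for a delegated scope, so any token obtained acts on behalf of the signed-in user. The tenant, client ID and redirect URI are hypothetical placeholders; https://storage.azure.com/user_impersonation is the delegated Azure Storage permission, and a real ASP.NET Core app would normally let its authentication library build this request.

```javascript
// Builds an authorization URL that requests delegated (on-behalf-of-user) scopes.
// Tenant ID, client ID and redirect URI are hypothetical placeholders.
function buildAuthorizeUrl({ tenantId, clientId, redirectUri, scopes }) {
  const url = new URL(
    `https://login.microsoftonline.com/${tenantId}/oauth2/v2.0/authorize`
  );
  url.searchParams.set("client_id", clientId);
  url.searchParams.set("response_type", "code"); // authorization code flow
  url.searchParams.set("redirect_uri", redirectUri);
  url.searchParams.set("scope", scopes.join(" ")); // space-separated scopes
  return url.toString();
}

const authUrl = buildAuthorizeUrl({
  tenantId: "contoso.onmicrosoft.com",
  clientId: "00000000-0000-0000-0000-000000000000",
  redirectUri: "https://localhost:5001/signin-oidc",
  scopes: [
    "openid",
    "offline_access",
    "https://storage.azure.com/user_impersonation", // delegated Azure Storage permission
  ],
});

console.log(authUrl);
```

Because the user signs in and consents, the resulting token carries the user's identity, and Azure Storage then applies the RBAC roles assigned to that user.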

For more information on permissions for an application for accessing Azure storage, please visit the following URL: https://docs.microsoft.com/en-us/azure/storage/common/storage-auth-aad-app

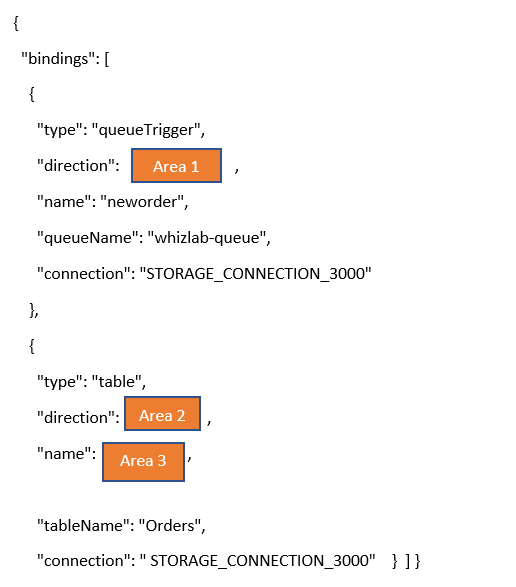

Domain : Develop Azure compute solutions

Q3 : You have to develop an Azure Function that performs the following activities:

- Read messages from an Azure Storage Queue

- Process the messages and add entities to Azure Table Storage

You have to define the correct bindings in the function.json file

A. “In”

B. “Out”

C. “Trigger”

D. “$return”

E. “$table”

Correct Answer: B

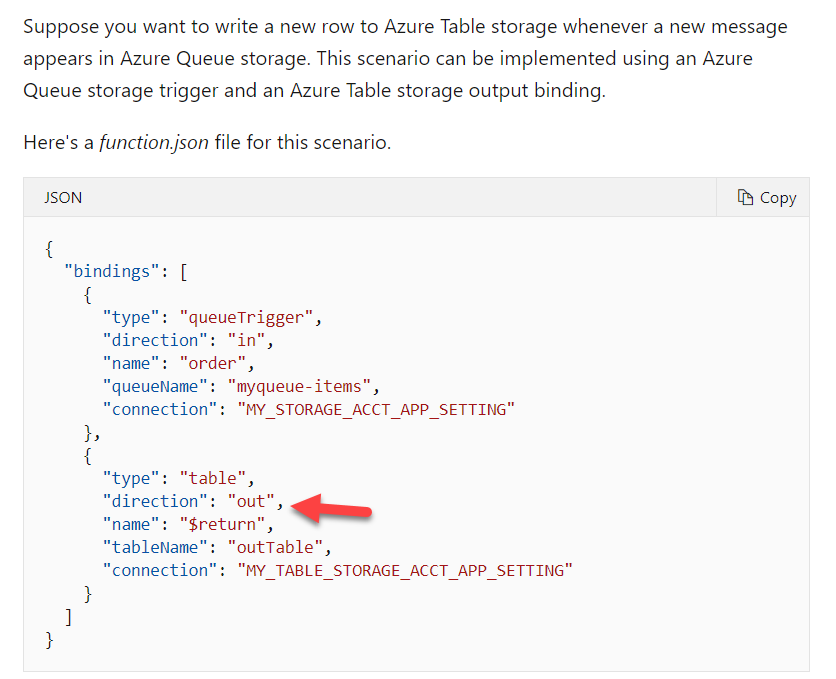

Explanation

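Because the function writes entities to Azure Table storage, the Table binding must be declared as an output binding ("direction": "out"). The queue binding is the trigger (type queueTrigger) and is an input.

An example of this is also given in the Microsoft documentation.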
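A minimal sketch of the two bindings, assuming the classic Node.js programming model (function.json plus an exported handler). The binding names, queue name, table name and entity properties are hypothetical, and function.json is written as a JavaScript object only so it can sit in the same block as the handler.

```javascript
// function.json, written as a JS object so it can be checked alongside the handler.
// Binding names, queue name and table name are hypothetical.
const functionJson = {
  bindings: [
    {
      type: "queueTrigger", // the queue message starts the function
      direction: "in",
      name: "myQueueItem",
      queueName: "orders-queue",
      connection: "AzureWebJobsStorage",
    },
    {
      type: "table", // entities are written to Table storage
      direction: "out", // output binding
      name: "tableBinding",
      tableName: "Orders",
      connection: "AzureWebJobsStorage",
    },
  ],
};

// index.js (classic Node.js model): in a real app this would be `module.exports = handler;`.
async function handler(context, myQueueItem) {
  // Assigning to context.bindings.<name> writes the entity through the output binding.
  context.bindings.tableBinding = {
    PartitionKey: "orders",
    RowKey: String(myQueueItem.id),
    Item: myQueueItem.item,
  };
}

const context = { bindings: {} }; // hypothetical stand-in for the Functions context
handler(context, { id: 42, item: "pizza" });
console.log(context.bindings.tableBinding); // { PartitionKey: 'orders', RowKey: '42', Item: 'pizza' }
```

The binding's name in function.json is what links it to context.bindings in code, so the two must match.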

For more information on function bindings, please refer to the below link: https://docs.microsoft.com/en-us/azure/azure-functions/functions-bindings-example

Domain : Develop Azure compute solutions

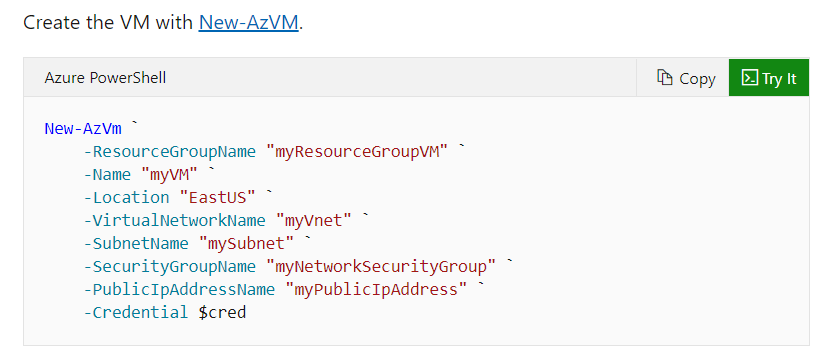

Q4 : You have to create an Azure virtual machine using a PowerShell script. Which of the following commands can be used to create the new virtual machine?

A. Create-AzVm

B. New-AzVm

C. Set-AzVm

D. Get-AzVm

Correct Answer: B

Explanation

You would use the New-AzVm command to create a new virtual machine.

This is also given in the Microsoft documentation

Since this is clearly given in the Microsoft documentation, all other options are incorrect

For more information on creating virtual machines, please refer to the below link: https://docs.microsoft.com/en-us/azure/virtual-machines/windows/tutorial-manage-vm

Domain : Develop Azure compute solutions

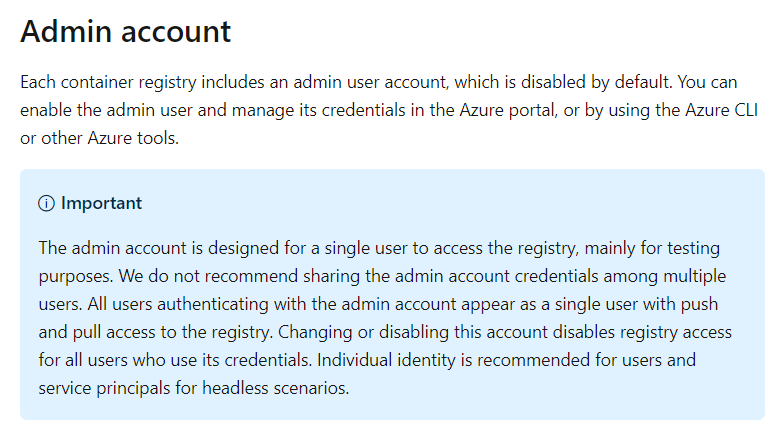

Q5 : You plan to use Azure Container Registry. You want to ensure that your application or service can use it for headless authentication. You also want to allow role-based access to the registry.

You decide to use the admin account associated with the container registry.

Would this fulfil the requirement?

A. Yes

B. No

Correct Answer: B

Explanation

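No. The admin account is designed for single-user access to the registry, mainly for testing, and it does not support role-based access. For headless authentication with role-based access, use a service principal that is assigned an Azure RBAC role on the registry.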

The Microsoft documentation mentions the following

For more information on container registry authentication, please visit the following URL: https://docs.microsoft.com/en-us/azure/container-registry/container-registry-authentication

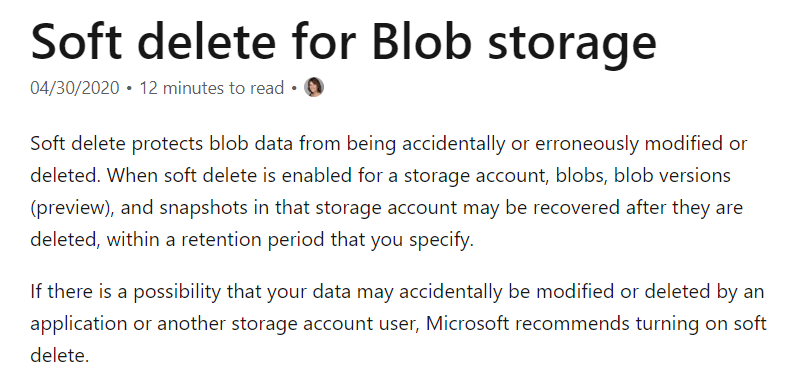

Domain : Develop for Azure storage

Q6 : You are going to create an Azure Storage account as part of your subscription. This will be a general-purpose v2 storage account. You have to meet the following requirements:

- You must be able to recover a deleted blob

- You must be able to recover a deleted blob for up to 7 days after it has been deleted.

- You need to be able to create snapshots of an existing blob in the storage account

Which of the following is a feature you have to implement on the Blobs to ensure you can recover the blob as per the specified requirements?

A. CORS

B. Soft Delete

C. Snapshots

D. Change Feed

Correct Answer: B

Explanation

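For this, you have to use the soft delete feature for Azure Blob storage. Blob soft delete keeps deleted blobs and snapshots for a retention period that you configure between 1 and 365 days, so setting the retention period to 7 days meets the requirement.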

The Microsoft documentation mentions the following

Since this is clearly mentioned in the Microsoft documentation, all other options are incorrect
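The retention rule can be sketched as a simple date comparison. This is a simplified model, not the service's implementation: a deleted blob can be restored (for example with the Undelete Blob operation) only until the configured retention period has elapsed, and the handling of the exact boundary instant here is an assumption.

```javascript
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Returns true while a soft-deleted blob is still inside its retention window.
// Boundary handling (<=) is a simplifying assumption.
function isRecoverable(deletedAt, now, retentionDays) {
  if (retentionDays < 1 || retentionDays > 365) {
    throw new RangeError("Soft-delete retention must be between 1 and 365 days");
  }
  return now.getTime() - deletedAt.getTime() <= retentionDays * MS_PER_DAY;
}

const deletedAt = new Date("2024-01-01T00:00:00Z");
console.log(isRecoverable(deletedAt, new Date("2024-01-07T12:00:00Z"), 7)); // true
console.log(isRecoverable(deletedAt, new Date("2024-01-09T00:00:00Z"), 7)); // false
```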

For more information on the Soft Delete feature, please refer to the below link: https://docs.microsoft.com/en-us/azure/storage/blobs/soft-delete-overview

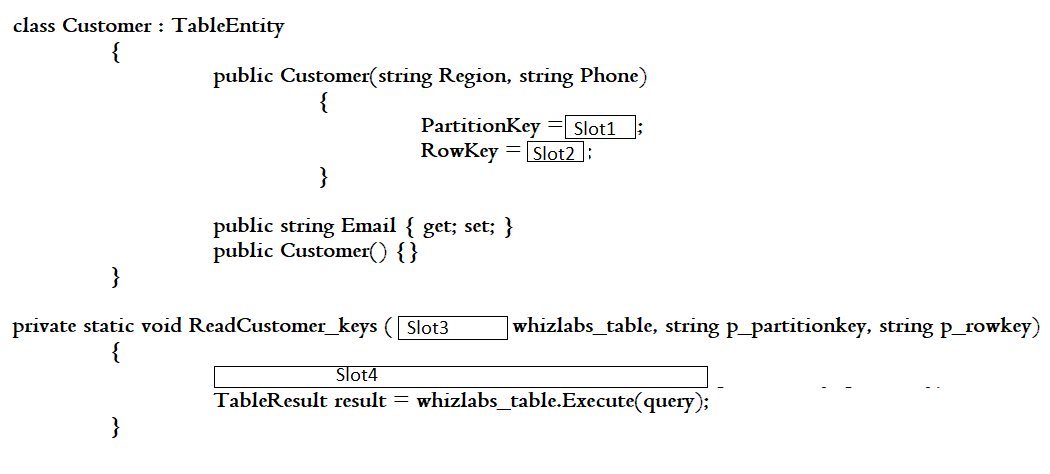

Domain : Develop for Azure storage

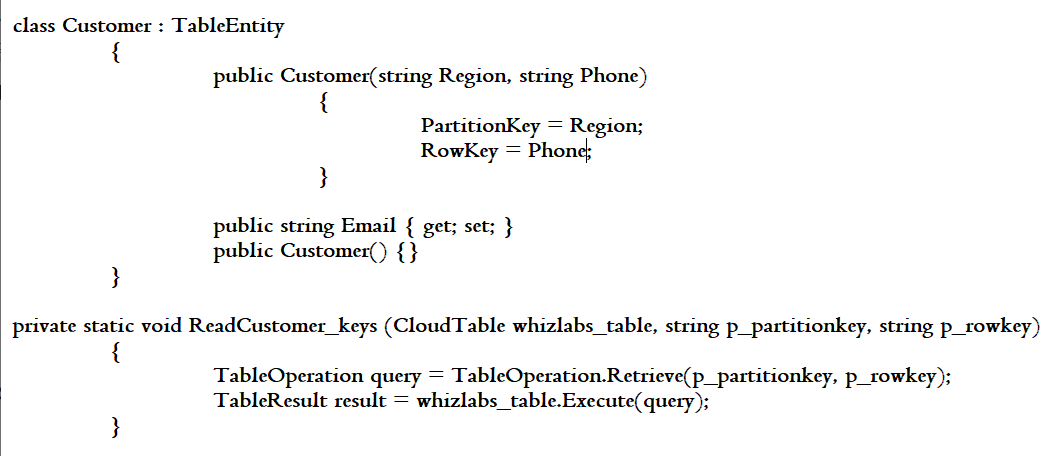

Q7 : A development team is developing an application. The application will be storing its data in Azure Cosmos DB – Table API. Below are the fields that are going to be stored in the table.

- Region

- Email address

- Phone number

Below are some key aspects of the fields:

- The Region field will be used to distribute (load balance) the data.

- Some entities may have a blank email address.

You need to complete the following code snippet, which retrieves a specific entity from the table.

A. TableEntity query=TableEntity.Retrieve(p_partitionkey,p_rowkey)

B. TableOperation query=TableOperation.Retrieve(p_partitionkey,p_rowkey)

C. TableResult query=TableQuery.Retrieve(p_partitionkey,p_rowkey)

D. TableResultSegment query=TableResult.Retrieve(p_partitionkey,p_rowkey)

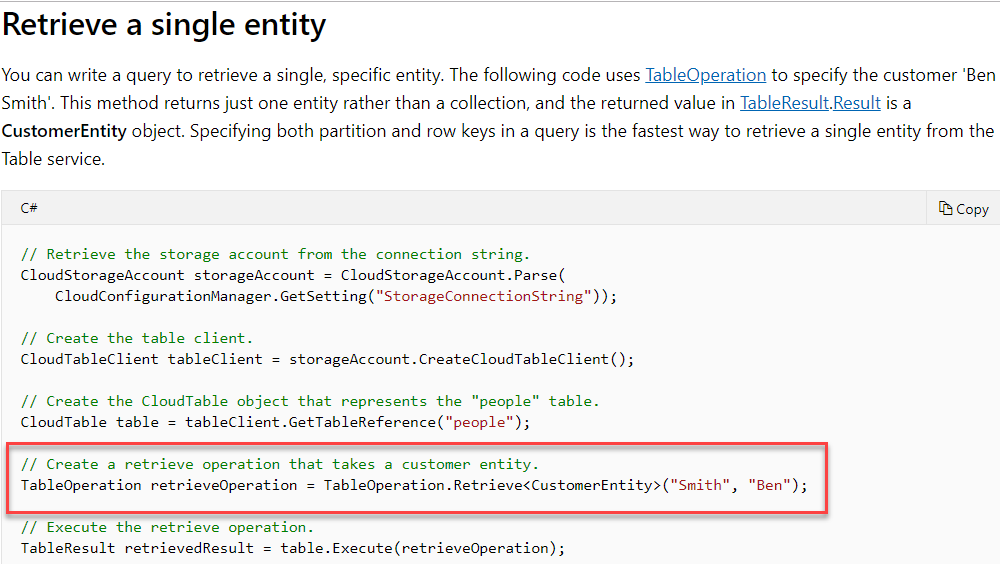

Correct Answer: B

Explanation

To retrieve a single entity by its partition key and row key, use the TableOperation.Retrieve method. The resulting TableOperation is then run with CloudTable.Execute (or ExecuteAsync), which returns a TableResult.

An example snippet of this code is given in the Microsoft documentation.

Since this is clearly given in the Microsoft documentation, all other options are incorrect
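To show why a retrieve by PartitionKey and RowKey is the cheapest way to fetch one entity, here is a toy in-memory table (not the Azure SDK) in which both keys together address exactly one entity. Using Region as the PartitionKey follows the question; using the phone number as the RowKey is a hypothetical choice for illustration.

```javascript
// Toy in-memory model of a table: PartitionKey -> (RowKey -> entity).
class ToyTable {
  constructor() {
    this.partitions = new Map();
  }

  insert(entity) {
    const { PartitionKey, RowKey } = entity;
    if (!this.partitions.has(PartitionKey)) {
      this.partitions.set(PartitionKey, new Map());
    }
    this.partitions.get(PartitionKey).set(RowKey, entity);
  }

  // Point read: two key lookups, no scan over other entities.
  retrieve(partitionKey, rowKey) {
    return this.partitions.get(partitionKey)?.get(rowKey) ?? null;
  }
}

const table = new ToyTable();
table.insert({ PartitionKey: "West", RowKey: "555-0100", Email: "" }); // blank email is fine
console.log(table.retrieve("West", "555-0100")); // { PartitionKey: 'West', RowKey: '555-0100', Email: '' }
```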

For more information on using table storage with .Net, one can go to the below link: https://docs.microsoft.com/en-us/azure/cosmos-db/table-storage-how-to-use-dotnet

Domain : Connect to and consume Azure services and third-party services

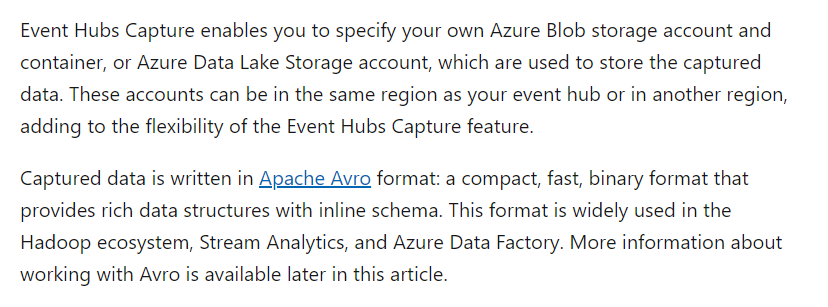

Q8 : Your team has to develop an application that will be used to capture events from multiple IoT-enabled devices. Your team plans to use Azure Event Hubs to ingest the events. As part of the requirement, you have to ensure that the events are persisted to Azure Blob Storage.

When data is persisted from Azure Event Hubs onto Azure Blob Storage, which of the following is the data format used to write the data?

A. JSON

B. Apache Avro

C. XML

D. TXT

Correct Answer: B

Explanation

Event Hubs Capture writes the captured data in Apache Avro format, a compact binary format that stores the schema together with the data.

The Microsoft documentation mentions the following

Since this is clearly mentioned in the Microsoft documentation, all other options are incorrect

For more information on Azure Event Hubs capture, please refer to the below link: https://docs.microsoft.com/en-us/azure/event-hubs/event-hubs-capture-overview

Domain : Develop Azure compute solutions

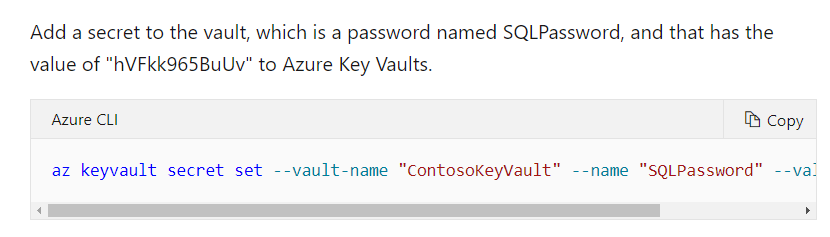

Q9 : You are developing an application that has a web and database tier. You have to store the database password as a secret in the Azure Key vault service.

You have to use Azure CLI commands to create the key vault and also create a secret in the key vault.

Which of the following commands would you use to create the secret in the key vault?

A. az create

B. az keyvault create

C. az secret create

D. az keyvault secret set

Correct Answer: D

Explanation

To create the secret in the key vault, you have to use the az keyvault secret set command.

This is also given in the Microsoft documentation.

Since this is clearly given in the Microsoft documentation, all other options are incorrect

For more information on creating the key vault, please refer to the below link: https://docs.microsoft.com/en-us/azure/key-vault/general/manage-with-cli2

Domain : Develop Azure compute solutions

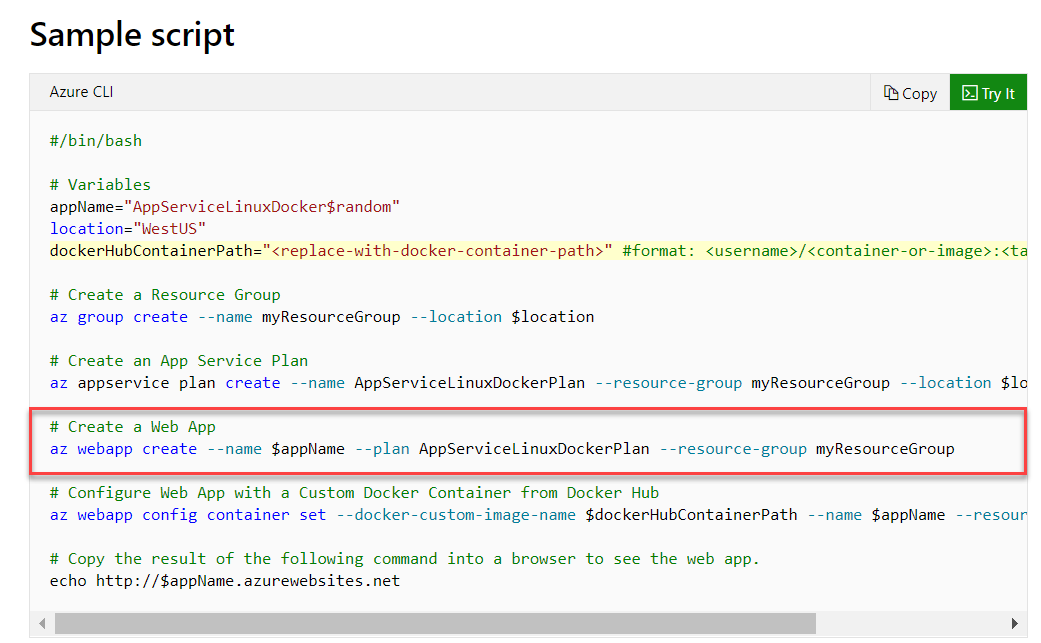

Q10 : Your company has an ASP.Net Core web application. This web application runs on Docker. The application is mapped to a domain named www.whizlabs.com.

The application needs to be hosted in Azure using the Azure Web App service and the Docker image for the web application. You also have to map a custom domain to the Azure Web App. The following variables are in place:

| Variable name | Description |
| --- | --- |
| appName | Name of the application |
| Location | Location of the resource |
| dockerHubContainerPath | Location of the Docker image |

Which of the following commands would you run to create the web app?

A. az webapp create --name $appName --plan whizlabsplan --resource-group whizlabs-rg

B. az webapp set --name $appName --plan whizlabsplan --resource-group whizlabs-rg

C. az docker create --name $appName --plan whizlabsplan --resource-group whizlabs-rg

D. az docker image create --name $appName --plan whizlabsplan --resource-group whizlabs-rg

Correct Answer: A

Explanation

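The az webapp create command creates the web app in the specified App Service plan and resource group, and the Docker image can be supplied to the same command with the --deployment-container-image-name parameter. An example of this is given in the Microsoft documentation.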

Since this is clearly given in the documentation, all other options are incorrect

For more information on creating a Linux docker web app, one can go to the below link: https://docs.microsoft.com/en-us/azure/app-service/scripts/cli-linux-docker-aspnetcore

Domain : Develop Azure compute solutions

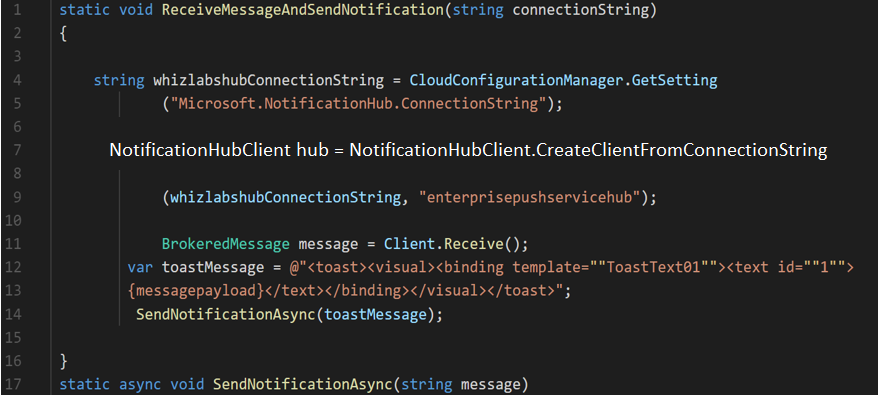

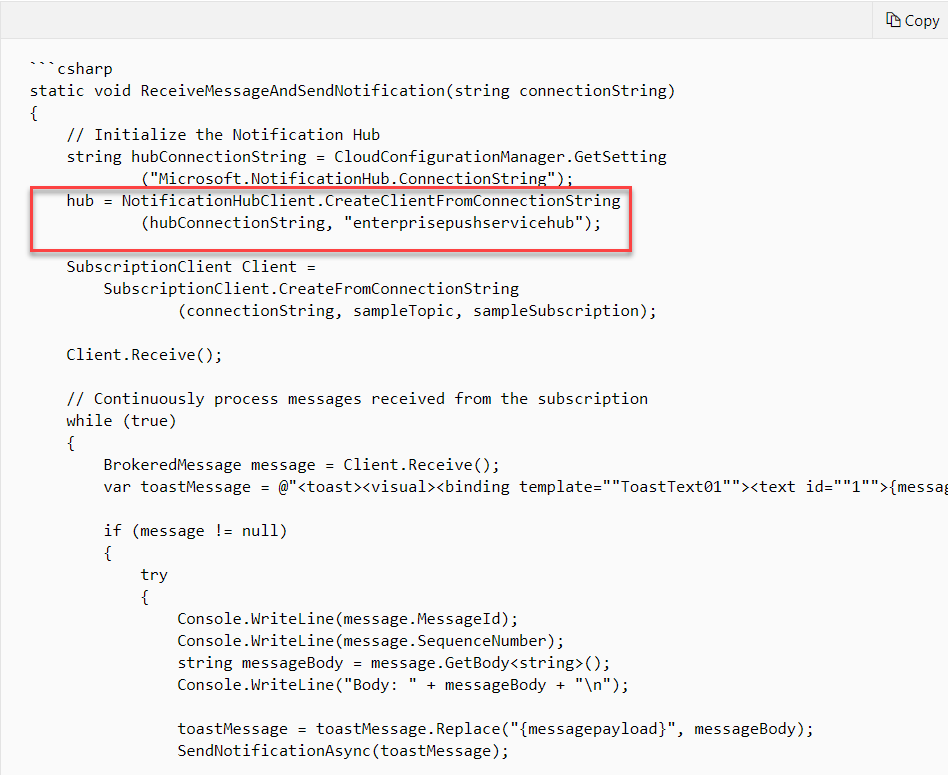

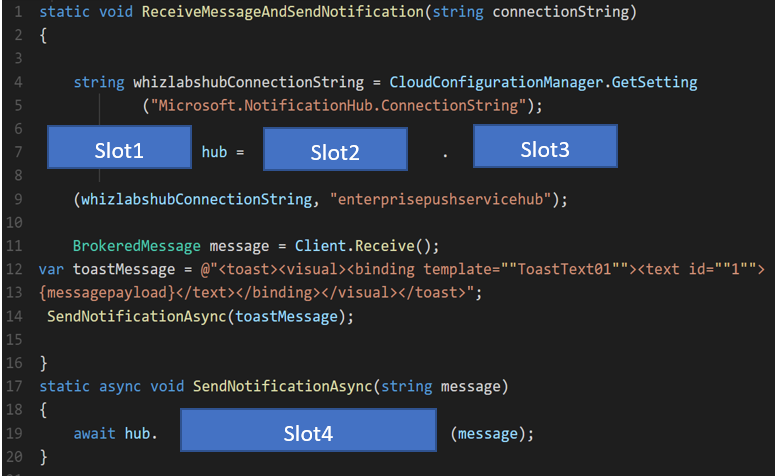

Q11 : A company is developing a shopping application for Windows devices. A notification needs to be sent to a user’s device whenever a new product is added to the application. You have to implement push notifications.

You have to complete the missing parts in the partial code segment given below

A. GetInstallation

B. CreateClientFromConnectionString

C. CreateInstallation

D. PatchInstallation

Correct Answer: B

Explanation

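The missing part is NotificationHubClient.CreateClientFromConnectionString, which creates the Notification Hubs client from the hub's connection string and name so that the notification can be sent.

An example of this is given in the Microsoft documentation.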

Since this is clearly given in the documentation, all other options are incorrect

For more information on enterprise push notification architecture, one can go to the below link: https://docs.microsoft.com/en-us/azure/notification-hubs/notification-hubs-enterprise-push-notification-architecture

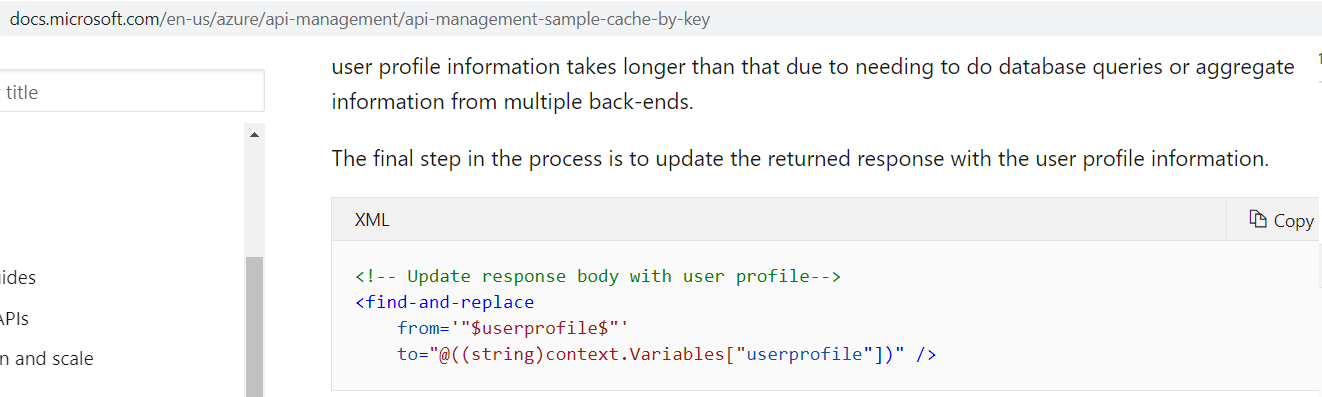

Domain : Connect to and consume Azure services and third-party services

Q12 : A company is developing an API system that will be hosted behind an Azure API Management service. You have to implement response caching. Here the user ID of the client must be detected and then the response must be cached for the given user ID.

You need to add the following policies to the policies file:

- A set-variable policy to store the detected user identity

- A cache-lookup-value policy

- A cache-store-value policy

- A find-and-replace policy to update the response body with the user profile information

In which policy section would you add the following policy?

“A find-and-replace policy to update the response body with the user profile information”

A. Inbound

B. Outbound

C. Error

D. Parameters

Correct Answer: B

Explanation

Here the development team needs to:

- implement response caching

- detect the user ID of the client

- cache the response for the given user ID

This means the policy needs both inbound and outbound configuration:

- A set-variable policy to store the detected user identity: place it in inbound

- A cache-lookup-value policy: place it in inbound

- A cache-store-value policy: place it in outbound

- A find-and-replace policy to update the response body with the user profile information: place it in outbound

Because find-and-replace modifies the response body, and the response is only available in the outbound section, Option B is the correct answer.
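The placement described in this answer can be sketched as a toy request pipeline. This is not the API Management policy engine: the x-user-id header, the cache key format and the $userprofile$ placeholder are hypothetical, and a Map stands in for the gateway cache. It shows why find-and-replace can only run in outbound: the response body does not exist until the backend has answered.

```javascript
// Toy model of an APIM-style pipeline (not the real policy engine).
function createGateway(backend, lookupProfile) {
  const cache = new Map(); // stands in for the gateway's internal cache

  return function handle(request) {
    const vars = {};

    // --- inbound ---
    // set-variable: store the detected user identity
    vars.userId = request.headers["x-user-id"];
    // cache-lookup-value: try to load this user's cached profile
    const key = `userprofile-${vars.userId}`;
    vars.userProfile = cache.get(key);

    // --- backend ---
    const response = backend(request);

    // --- outbound ---
    // cache-store-value: on a miss, cache the profile for this user ID
    if (vars.userProfile === undefined) {
      vars.userProfile = lookupProfile(vars.userId);
      cache.set(key, vars.userProfile);
    }
    // find-and-replace: needs the response body, which only exists in outbound
    response.body = response.body.split("$userprofile$").join(vars.userProfile);
    return response;
  };
}

let lookups = 0;
const gateway = createGateway(
  () => ({ body: "Hello $userprofile$" }),
  (id) => { lookups++; return `profile:${id}`; }
);
console.log(gateway({ headers: { "x-user-id": "u1" } }).body); // Hello profile:u1
gateway({ headers: { "x-user-id": "u1" } });
console.log(lookups); // 1
```

The second request for the same user is served from the cache, so the profile lookup runs only once per user ID.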

For more information on an example of managing requests with API management, one can go to the below link: https://docs.microsoft.com/en-us/azure/api-management/api-management-sample-cache-by-key

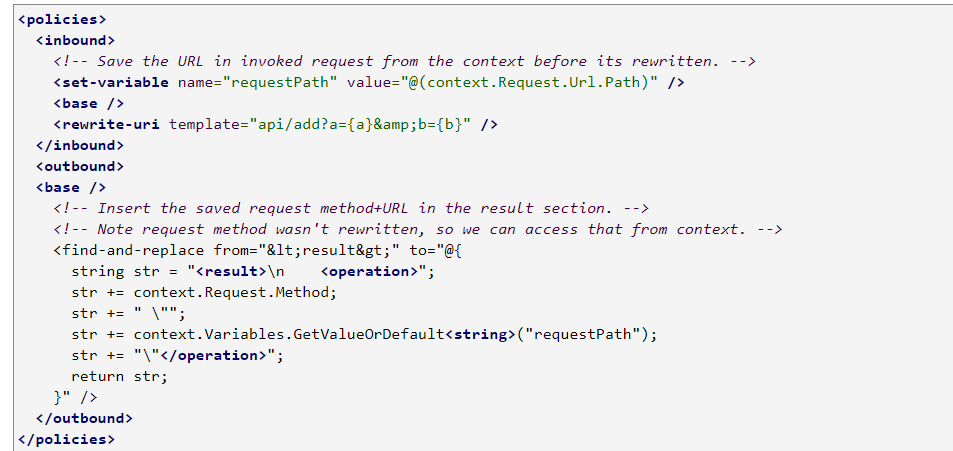

Domain : Connect to and consume Azure services and third-party services

Q13 : A company has an application that provides product data to external consultants.

Azure API Management is used to publish APIs to the consultants.

The API needs to meet the following requirements:

- Support alternative input parameters.

- Remove formatting text from responses.

- Provide additional context to back-end services.

Which type of policy would you use for the following requirement?

“Remove formatting text from responses”

A. Inbound

B. Outbound

C. Backend

D. Error

Correct Answer: B

Explanation

Removing formatting text changes the response that is returned to the consultants, so the policy belongs in the outbound section. You can use the find-and-replace transformation policy, together with policy expressions, to remove the text from the response. An example of this is given in the Microsoft documentation.

Since this is clearly mentioned, all other options are incorrect.

For more information on API Management transformation policies and policy expressions, see the following links: https://docs.microsoft.com/en-us/azure/api-management/api-management-transformation-policies, https://azure.microsoft.com/fr-fr/blog/policy-expressions-in-azure-api-management/

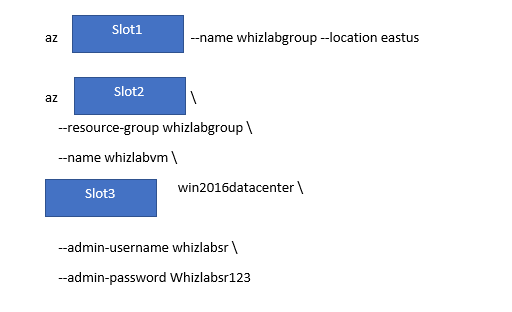

Domain : Develop Azure compute solutions

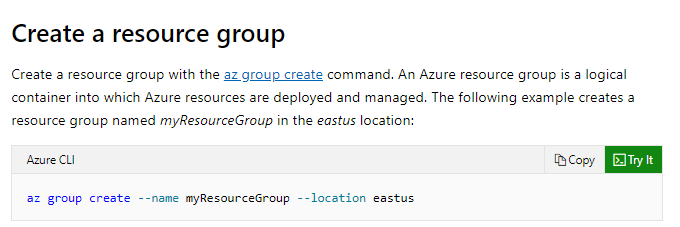

Q14 : A developer needs to run a set of Azure CLI commands to create a virtual machine. You need to complete the below set of commands.

A. vm create

B. group create

C. vm set

D. group set

Correct Answer: B

Explanation

An example of this is given in the Microsoft documentation. The first step is to ensure that you create a resource group via the az group create CLI command

Since this is clearly mentioned in the documentation, all other options are incorrect

For more information on using Azure CLI commands to create virtual machines, please visit the below URL: https://docs.microsoft.com/en-us/azure/virtual-machines/windows/quick-create-cli

Domain : Develop Azure compute solutions

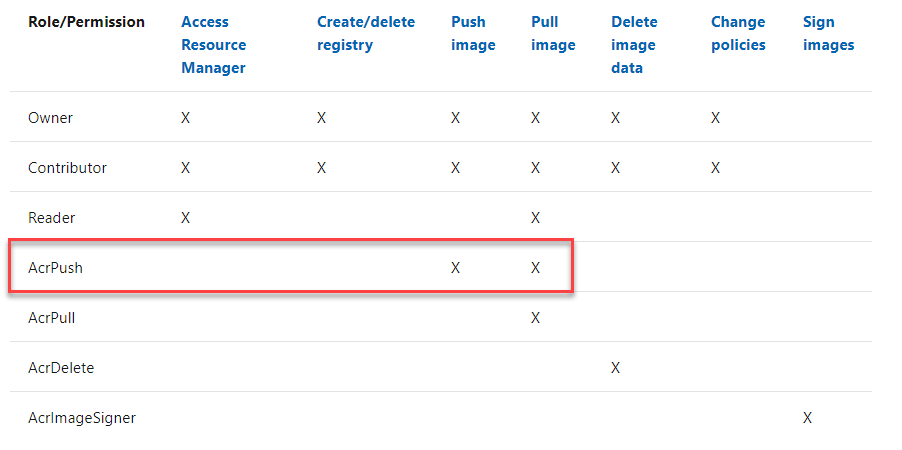

Q15 : Your team has an Azure container registry in place. You need to give a set of developers the ability to publish images to the registry, following the principle of least privilege. Which of the following roles would you assign for this purpose?

A. Owner

B. Contributor

C. AcrPush

D. AcrPull

Correct Answer: C

Explanation

You would provide the AcrPush role. The different roles are given in the Microsoft documentation

Options A and B are incorrect because these roles would give more access than what is required.

Option D is incorrect because this does not provide the ability to push images to the registry.

For more information on Azure container registry roles, please visit the following URL: https://docs.microsoft.com/en-us/azure/container-registry/container-registry-roles

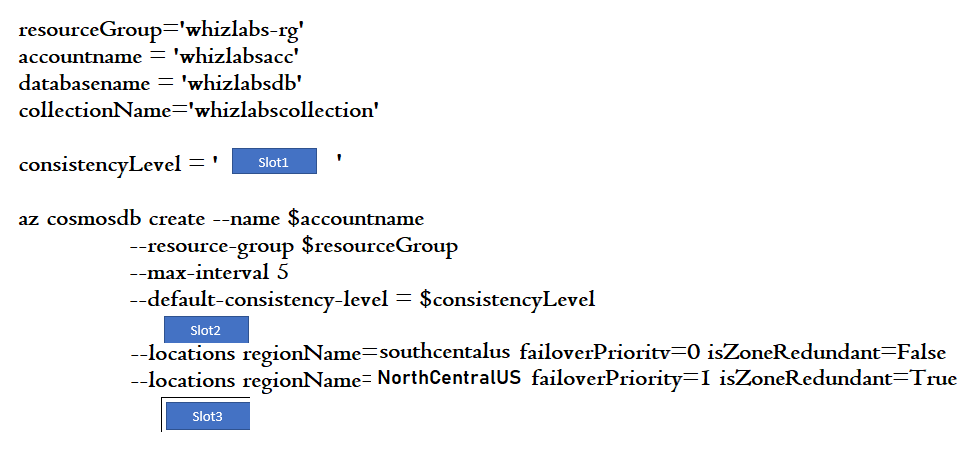

Domain : Develop for Azure storage

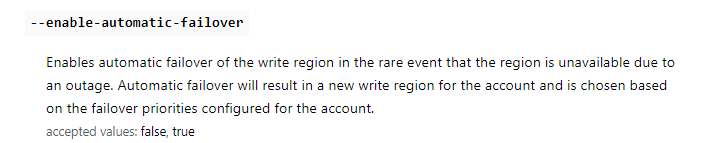

Q16 : A company is developing a system that will use Azure Cosmos DB as the underlying data store. Below are the requirements of the data store:

- Ensure at least 99.99% availability and remain available during network failures

- Accept writes from the application even in the case of network outages or any unforeseen failures

- Process data in the same sequence as the writes are made

- Allow out-of-order data within a maximum tolerance window of 5 seconds

You have to provision a Cosmos DB account – SQL API. You already have a resource group in the South Central US region.

You have to complete the below Azure CLI commands for this purpose.

A. --enable-virtual-network true

B. --enable-automatic-failover true

C. --kind 'GlobalDocumentDB'

D. --kind 'MongoDB'

Correct Answer: B

Explanation

Since we have to ensure that the data needs to be available even in the case of network outages or any unforeseen failures, we have to enable automatic failover.

The Microsoft documentation mentions the following

Option A is incorrect since there is no mention in the question of requiring the database to be part of a virtual network

Option C is incorrect since the default API chosen for the database is the SQL API

Option D is incorrect since we need to create a Cosmos DB account with the SQL API

For more information on the Cosmos DB create command, please visit the below URL: https://docs.microsoft.com/en-us/cli/azure/cosmosdb?view=azure-cli-latest#az-cosmosdb-create

Domain : Develop for Azure storage

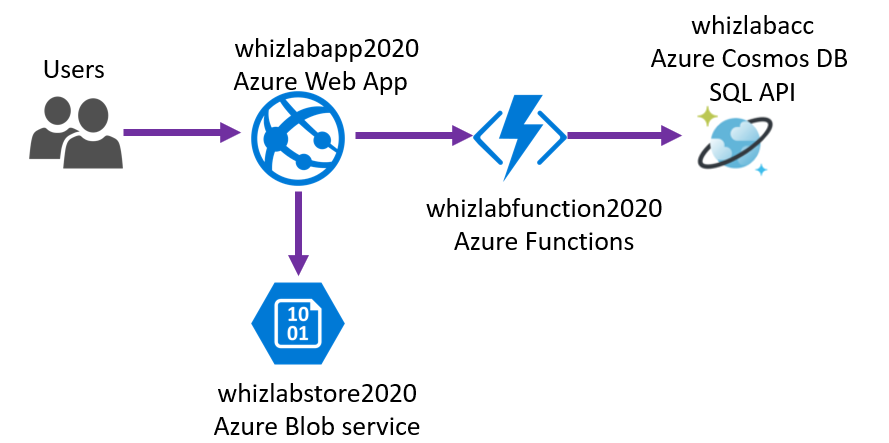

Case Study : A company is planning on deploying a system that will follow the below mentioned architecture

- Users would be accessing the web application – https://whizlab.com via the Azure Web App service.

- Users would upload images via the web application to Azure Blob storage

- The image data would be sent to an Azure Function

- The image data would then be stored along with the user data in a Cosmos DB account

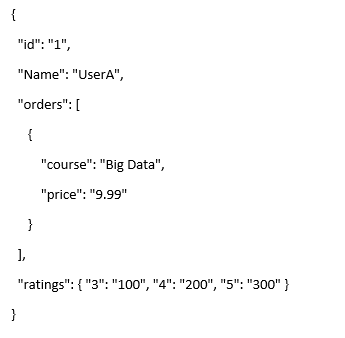

An example of an item stored in the Cosmos DB container is shown below

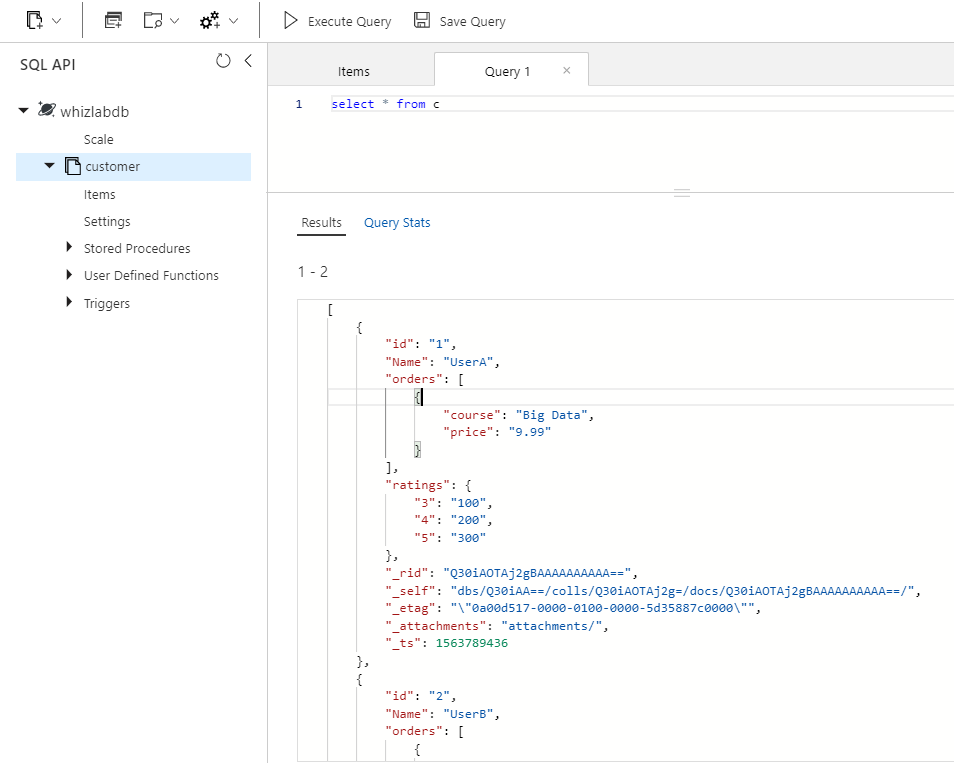

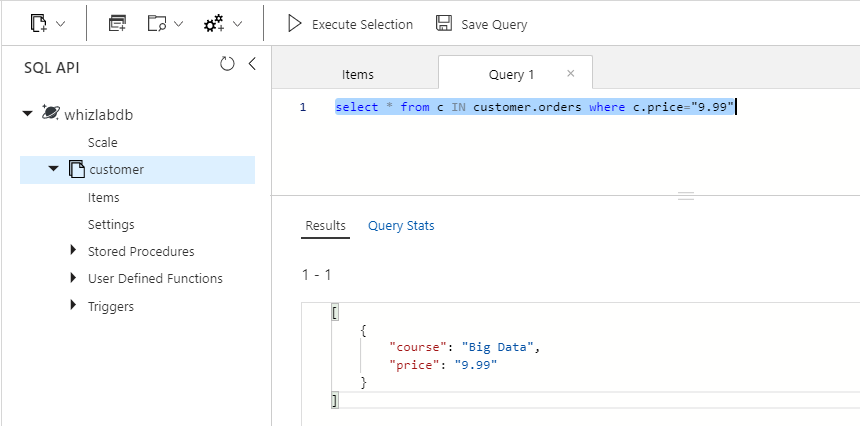

Q17 : You need to formulate a query that returns all items from the Customer container where the order price is $9.99.

A. Orders

B. Customer

C. Course

D. Customer.orders

Correct Answer: D

Explanation

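The IN keyword lets the FROM clause iterate over the elements of a JSON array, so iterating over Customer.orders returns each element of the orders array, which the WHERE clause can then filter on price.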

An example of an implementation of querying the data is given below

Data in the container

After executing the query

Since this is clear from the implementation, all other options are invalid
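The effect of iterating over an array with IN can be mimicked in plain JavaScript. The item shape from the case study image is not reproduced here, so the documents below are hypothetical; the function models a query of the form SELECT * FROM o IN Customer.orders WHERE o.price = 9.99, where each array element becomes a row and WHERE filters those rows.

```javascript
// Hypothetical customer documents (the real shape is in the case study image).
const customers = [
  { id: "c1", name: "Ana", orders: [{ course: "AZ-204", price: 9.99 }, { course: "AZ-900", price: 19.99 }] },
  { id: "c2", name: "Ben", orders: [{ course: "AZ-104", price: 29.99 }] },
];

// IN flattens each document's orders array into individual rows;
// the filter plays the role of the WHERE clause.
function ordersWithPrice(docs, price) {
  return docs.flatMap((doc) => doc.orders).filter((order) => order.price === price);
}

console.log(ordersWithPrice(customers, 9.99)); // [ { course: 'AZ-204', price: 9.99 } ]
```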

For more information on SQL queries for array objects, please visit the below URL: https://docs.microsoft.com/en-us/azure/cosmos-db/sql-query-object-array

Domain : Monitor, troubleshoot, and optimize Azure solutions

Case Study : A company is planning on deploying a system that will follow the below mentioned architecture

- Users would be accessing the web application – https://whizlab.com via the Azure Web App service.

- Users would upload images via the web application to Azure Blob storage

- The image data would be sent to an Azure Function

- The image data would then be stored along with the user data in a Cosmos DB account

An example of an item stored in the Cosmos DB container is shown below

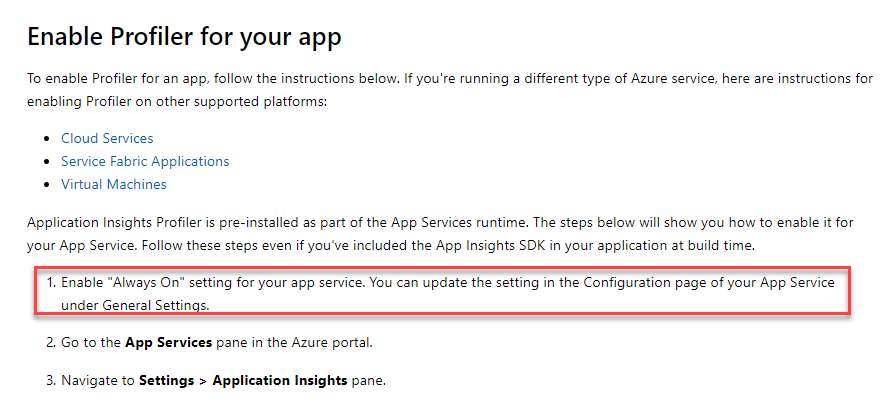

Q18 : A developer needs to enable Application Insights Profiler for the Azure Web App. Which of the following features is required to enable Application Insights Profiler for a web app?

A. CORS configuration

B. Always On setting

C. Enable Identity

D. Enable Custom domains

Correct Answer: B

Explanation

The requirement is to enable Application Insights Profiler for a web app. Profiler captures performance traces automatically, at scale, without negatively affecting end users, and is widely used for monitoring. The options are evaluated below:

A. CORS configuration

Cross-origin resource sharing (CORS) defines a way for client web applications that are loaded in one domain to interact with resources in a different domain.

Hence this is not going to help us to enable Application Insights Profiler

B. Always On setting

If a web app sits idle for too long, the system unloads it. When traffic returns, the web app has to be loaded again, which causes longer response times and higher resource utilization. Enabling the Always On setting (not available in the Free and Shared tiers) keeps the web app loaded, which translates to higher availability and faster response times.

Since the requirement is to enable ‘Application Insights Profiler’ for a web App, Enabling ‘Always On’ is the correct prerequisite.

Hence this is the correct answer. You can find more details at https://docs.microsoft.com/en-us/azure/azure-monitor/app/profiler

C. Enable Identity

A managed identity lets the web app authenticate to other Azure resources through Azure Active Directory; it is not related to Application Insights Profiler.

D. Enable Custom domains

Custom domains let users reach the web app through your own domain name instead of the default azurewebsites.net host name. This is not related to enabling Application Insights Profiler.

We have to enable the “Always On” setting.

This is also given in the Microsoft documentation

Since this is clearly given in the documentation, all other options are incorrect

For more information on enabling Application Insights for the Azure Web App, please visit the below URL: https://docs.microsoft.com/en-us/azure/azure-monitor/app/profiler

Domain : Connect to and consume Azure services and third-party services

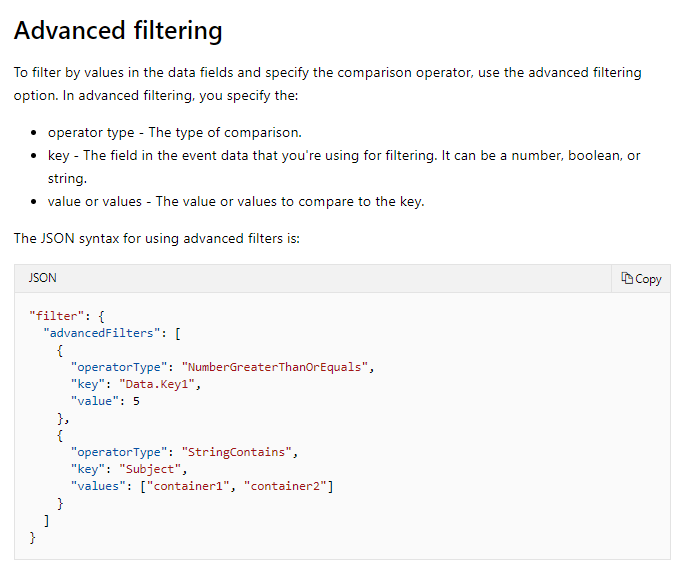

Q19 : A team has to integrate various modules of an application with the Azure Event Grid service. They have to filter the events that are sent to the various application endpoints. The message requirements for each endpoint are given below:

| Application Endpoint | Message requirement |
| --- | --- |
| EndpointA | Receives failure messages for any resources deployed to the Azure subscription |
| EndpointB | Receives messages whenever objects are added to a specific container in Azure Blob storage |
| EndpointC | Receives messages whenever a data field in the message has the value “Organization” |

Which of the following would you use as a filter option for messages that need to be sent to EndpointC?

A. Subject begins with or ends with

B. Advanced fields and operators

C. ResourceTypes

D. EventTypes

Correct Answer: B

Explanation

Because EndpointC must filter on the values of fields inside the event data, you have to choose the “Advanced fields and operators” filter option. Subject and event type filters cannot inspect values inside the event data.

The Microsoft documentation mentions the following

Since this is clearly given in the documentation, all other options are incorrect
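To make the advanced-filter idea concrete, here is a toy evaluator for a single filter (not the Event Grid service). The filter object mirrors the shape of an entry in an event subscription's advancedFilters list (operatorType, key, values); the key data.customerType is a hypothetical field name. Event Grid compares strings in advanced filters case-insensitively, and the sketch does the same.

```javascript
// One advanced filter for EndpointC; "data.customerType" is a hypothetical field.
const endpointCFilter = {
  operatorType: "StringIn",
  key: "data.customerType",
  values: ["Organization"],
};

// Resolves a dotted key such as "data.customerType" against an event.
function getByPath(obj, path) {
  return path.split(".").reduce((cur, part) => (cur == null ? undefined : cur[part]), obj);
}

// Evaluates a StringIn filter with case-insensitive comparison.
function matchesStringIn(event, filter) {
  const value = getByPath(event, filter.key);
  if (typeof value !== "string") return false;
  return filter.values.some((v) => v.toLowerCase() === value.toLowerCase());
}

const events = [
  { subject: "/customers/1", data: { customerType: "Organization" } },
  { subject: "/customers/2", data: { customerType: "Individual" } },
];
console.log(events.filter((e) => matchesStringIn(e, endpointCFilter)).map((e) => e.subject)); // [ '/customers/1' ]
```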

For more information on event filtering in Azure Event Grid, one can go to the below URL: https://docs.microsoft.com/en-us/azure/event-grid/event-filtering

Domain : Develop Azure compute solutions

Q20 : You are developing an Azure Function. This function will be using the Azure Blob storage trigger. You have to ensure the Function is triggered whenever .png files are added to a container named data.

You decide to add the following filter in the function.json file

"path": "data/png"

Does this filter criteria meet the requirement?

A. Yes

B. No

Correct Answer: B

Explanation

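No. The path data/png contains no pattern for the blob name, so it does not match arbitrary .png files added to the data container. The blob name needs a binding expression, with the .png extension as a literal suffix.

The right filter structure is as follows:

"path": "data/{name}.png"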
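A simplified, hypothetical matcher makes the difference between the two paths visible: {name} binds to any run of characters and everything else must match literally. This is an illustration of the pattern idea, not the Azure Functions runtime's matching code.

```javascript
// Compiles a blob path pattern like "data/{name}.png" into a matcher function.
function compileBlobPath(pattern) {
  const names = [];
  const source = pattern
    .split(/(\{[^}]+\})/) // keep {placeholders} as separate parts
    .map((part) => {
      const m = /^\{([^}]+)\}$/.exec(part);
      if (m) {
        names.push(m[1]);
        return "(.+)"; // placeholder: any run of characters
      }
      return part.replace(/[.*+?^${}()|[\]\\]/g, "\\$&"); // literal text, escaped
    })
    .join("");
  const re = new RegExp(`^${source}$`);

  // Returns the bound placeholder values, or null if the path does not match.
  return (blobPath) => {
    const match = re.exec(blobPath);
    if (!match) return null;
    return Object.fromEntries(names.map((n, i) => [n, match[i + 1]]));
  };
}

const match = compileBlobPath("data/{name}.png");
console.log(match("data/cat.png")); // { name: 'cat' }
console.log(match("data/cat.jpg")); // null
```

With the original pattern data/png, the same matcher accepts only the literal path data/png.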

For more information on the blob trigger, please refer to the following URL: https://docs.microsoft.com/en-us/azure/azure-functions/functions-bindings-storage-blob-trigger?tabs=csharp

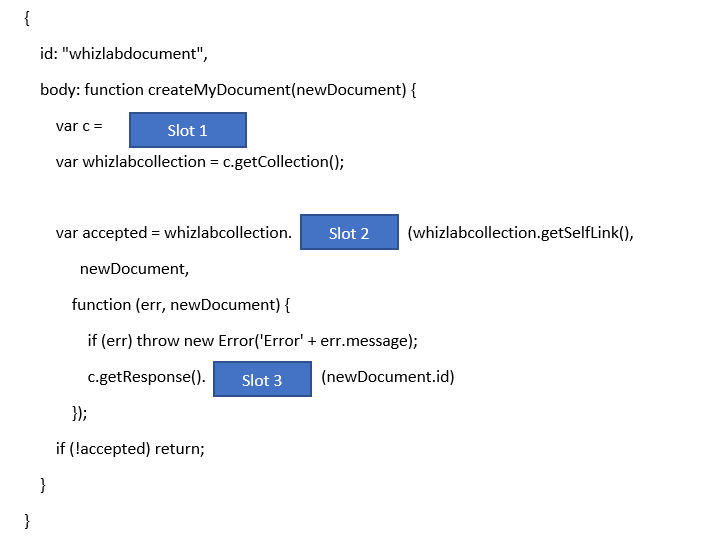

Domain : Develop for Azure storage

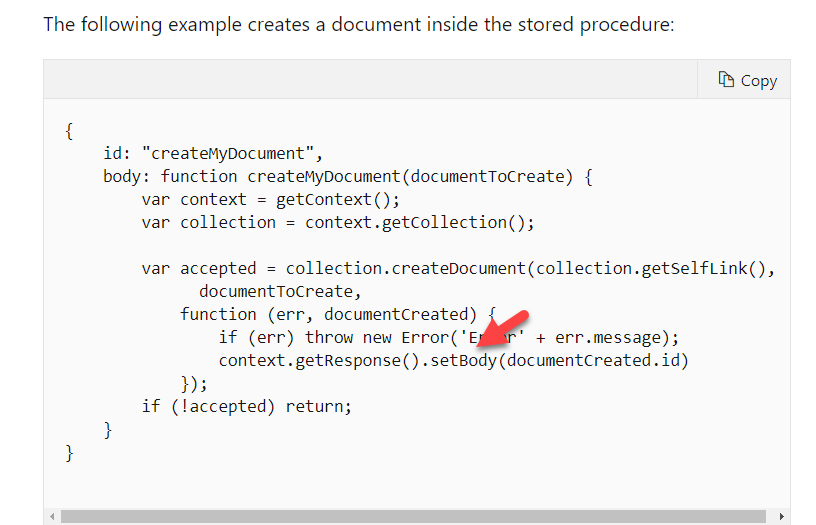

Q21 : Your team has an Azure Cosmos DB Account of the type SQL API. You have to develop a simple stored procedure that would perform the activity of adding a document to a Cosmos DB container.

You have to complete the below code snippet for this requirement

A. getBody

B. setBody

C. getDocument();

D. getContext();

E. createDocument

F. newDocument

Correct Answer: B

Explanation

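Here we have to set the body of the response to the new document's ID. The createDocument callback does this with getContext().getResponse().setBody(...) once the document has been created.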

An example of this is also given in the Microsoft documentation

Since this is clearly given in the Microsoft documentation, all other options are incorrect
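The create-and-return-ID pattern can be exercised outside Cosmos DB with hand-written stand-ins for getContext(), getCollection() and getResponse(). These mocks, storedDocs, responseBody and the generated "doc-N" IDs are hypothetical; only createItem follows the stored procedure pattern of calling createDocument and returning the new ID with setBody.

```javascript
// Hypothetical in-memory stand-ins for the Cosmos DB server-side objects.
let storedDocs = [];
let responseBody;

function getContext() {
  return {
    getCollection() {
      return {
        getSelfLink() { return "dbs/db/colls/items"; },
        createDocument(link, doc, callback) {
          const created = { ...doc, id: doc.id ?? `doc-${storedDocs.length + 1}` };
          storedDocs.push(created);
          callback(undefined, created);
          return true; // the real API reports whether the request was accepted
        },
      };
    },
    getResponse() {
      return { setBody(body) { responseBody = body; } };
    },
  };
}

// Stored procedure: create the document and return its ID in the response body.
function createItem(itemToCreate) {
  const context = getContext();
  const container = context.getCollection();
  const accepted = container.createDocument(
    container.getSelfLink(),
    itemToCreate,
    function (err, itemCreated) {
      if (err) throw new Error("Error: " + err.message);
      context.getResponse().setBody(itemCreated.id);
    }
  );
  if (!accepted) return;
}

createItem({ id: "order-42", item: "pizza" });
console.log(responseBody); // order-42
```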

For more information on using Cosmos DB stored procedures, please refer to the following URL: https://docs.microsoft.com/en-us/rest/api/cosmos-db/stored-procedures

Domain : Connect to and consume Azure services and third-party services

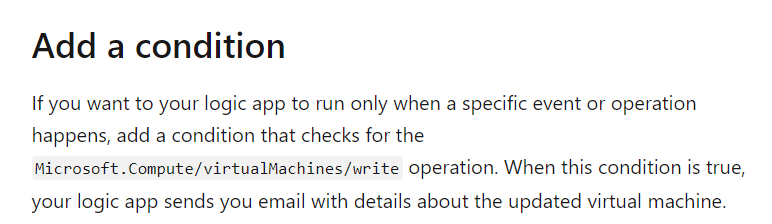

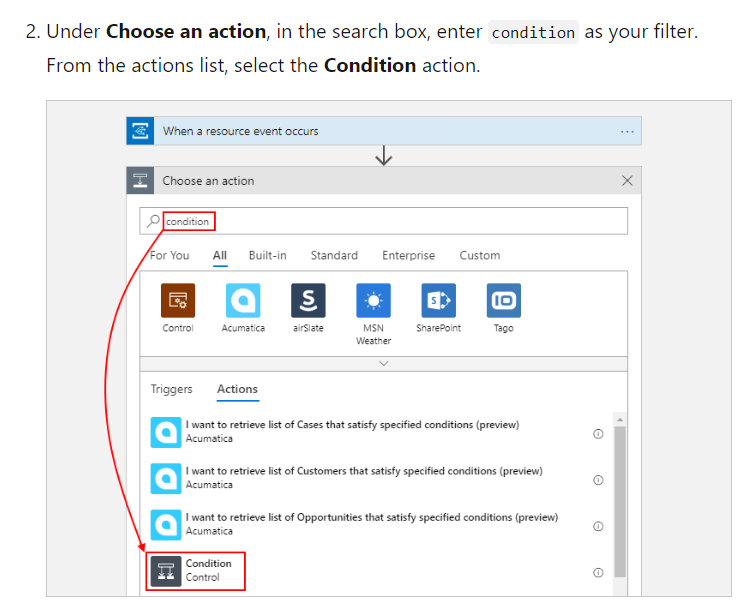

Q22 : You have to develop a workflow in the Azure Logic App service to perform the following actions

- Trigger the workflow whenever a deallocation activity occurs for a virtual machine

- Send an email to the IT Administrator with details of the activity

Which of the following would you add as a control in your workflow to perform an action if the workflow is actually triggered?

A. Resource

B. Condition

C. Check

D. Version

Correct Answer: B

Explanation

You would add a Condition control to check the details of the event.

This is also given in the Microsoft documentation as an example

Since this is clearly given in the Microsoft documentation, all other options are incorrect

For more information on the actual use case scenario example, please refer to the following URL: https://docs.microsoft.com/en-us/azure/event-grid/monitor-virtual-machine-changes-event-grid-logic-app

Domain : Monitor, troubleshoot, and optimize Azure solutions

Q23 : You have to develop an ASP.Net application. The application is an on-demand video streaming service. The application would be hosted on an Azure Web App service. To facilitate the delivery of content, you decide to use the Azure Content Delivery Network service.

An example of a URL which users would use for viewing a video is given below

All of the video content must expire from the cache after an hour. The videos of varying quality must be delivered from the closest regional point of presence.

You have to implement the right caching rules.

Which of the following would you choose as the cache expiration duration?

A. 1 second

B. 1 minute

C. 1 hour

D. 1 day

Correct Answer: C

Explanation

Since the requirement mentions that the cache content needs to expire after an hour, we have to choose the setting of 1 hour.

Since the time frame is clearly mentioned in the requirement, all other options are incorrect.

For more information on Azure CDN cache settings, please refer to the following URL: https://docs.microsoft.com/en-us/azure/cdn/cdn-caching-rules

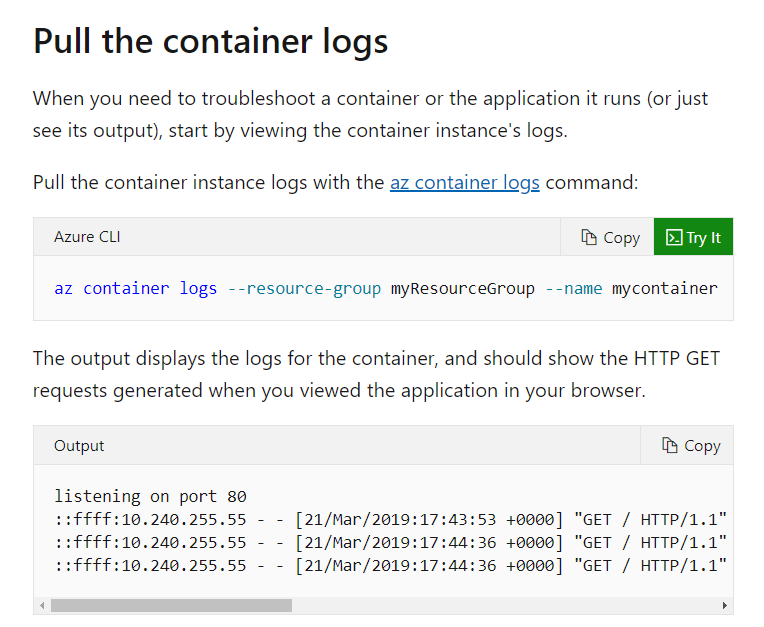

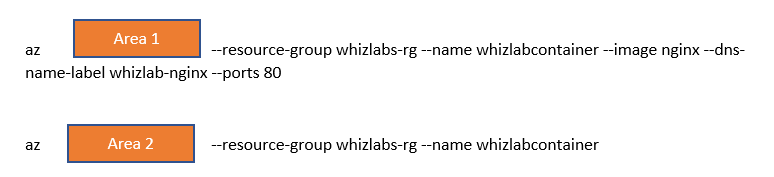

Domain : Develop Azure compute solutions

Q24 : You have to create a new Azure container instance. The container instance will be used to host an nginx container.

You also have to view the container instance’s logs.

You have to complete the below Azure CLI commands for this requirement

A. instance create

B. container create

C. instance logs

D. container logs

Correct Answer: D

Explanation

To pull the container logs, we have to use the az container logs command

This is also given in the Microsoft documentation

Since this is clearly mentioned in the Microsoft documentation, all other options are incorrect

For more information on Azure container instances, please refer to the following URL: https://docs.microsoft.com/en-us/azure/container-instances/container-instances-quickstart

Domain : Develop Azure compute solutions

Case Study : A company currently has a social networking site in place. The content for all messages is first processed before being posted on the social networking site. The content for the messages is stored in Azure Blob storage.

Application Services

The following services are used as part of the processing system

- ContentUploader – This is used to pick up the messages from Azure Blob storage.

- ContentProcessor – This service has been designed by data scientists. This service processes the messages to check for any potentially offensive or unwanted content.

The Content Uploader service makes use of Azure Functions.

The Content Processor service is designed as a container and is published using the Azure Container Service. The image for the processor service is stored in an Azure container registry named ContentRegistry.

The Content Processor service would process the messages and output a number indicating a threshold on whether the message could be posted or not.

For each message that can’t be posted, an employee of the company needs to perform a manual review of the message content.

Manual Review

For the manual review, the company employees need to first log into the ContentProcessor service by using their Azure AD credentials. The employees need to have the ContentAdmin role for a successful login attempt. When the employee successfully logs in and performs a manual review of a message, an email needs to be sent to the appropriate stakeholders.

End user agreement

All users who post messages to the platform have to accept an end user agreement. The agreement states that all messages might undergo a manual review by company employees. The volume of end user agreements is expected to be very high, around a million per hour.

Security Requirements

- All data must be encrypted at rest and in transit between services

- The storage account keys must be available only in memory and must not be available anywhere else in the system

- All container network traffic must be restricted to an Azure virtual network.

- When there is a newer version of the application, an automated process must be initiated to ensure the newer version is first verified before it is deployed to production.

Q25 : You have to view diagnostic information about the container during the startup process. Which of the following commands would you run to get the relevant information?

A. az web config

B. az container attach

C. az logs

D. az docker logs

Correct Answer: B

Explanation

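The az container attach command provides diagnostic information during container startup. Once the container has started, it streams STDOUT and STDERR to your local console, as described in the Microsoft documentation.

The other options are incorrect because none of them is an Azure CLI command for viewing container startup diagnostics.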
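If you troubleshoot from scripts, the same command can be assembled and run from Python. This is a minimal sketch that assumes the Azure CLI is installed and you have signed in with az login; build_attach_command and attach are hypothetical helpers, and the resource group and container group names are placeholders. The optional --container-name argument is only needed when the container group holds more than one container.

```python
import shlex
import subprocess
from typing import List, Optional


def build_attach_command(resource_group: str, container_group: str,
                         container_name: Optional[str] = None) -> List[str]:
    """Return the argument list for `az container attach`."""
    cmd = ["az", "container", "attach",
           "--resource-group", resource_group,
           "--name", container_group]
    # For a multi-container group, pick the container whose output you want.
    if container_name is not None:
        cmd += ["--container-name", container_name]
    return cmd


def attach(resource_group: str, container_group: str) -> int:
    """Run the command; needs the Azure CLI installed and `az login` done."""
    cmd = build_attach_command(resource_group, container_group)
    # Shows startup diagnostics, then streams STDOUT/STDERR to this console.
    return subprocess.run(cmd).returncode


if __name__ == "__main__":
    # Hypothetical names; print the command instead of running it.
    print(shlex.join(build_attach_command("content-rg", "contentprocessor")))
```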

For more information on getting container instance logs, please refer to the following URL: https://docs.microsoft.com/en-us/azure/container-instances/container-instances-get-logs

Domain: Connect to and consume Azure services and third-party services

Q26: You are developing an application that publishes events in batches by using the Azure Event Hubs client library for .NET. The events do not need to be published immediately, which allows large batches to be published efficiently. Which API class should you recommend?

A. EventHubProducerClient

B. EventHubConsumerClient

C. EventHubBufferedProducerClient

D. EventProcessorCheckpoint

Answer: C

Option C is correct:

The EventHubBufferedProducerClient does not publish immediately. Instead, it uses a deferred model in which events are collected into a buffer so that they can be batched efficiently and published when a batch is full or when the MaximumWaitTime has elapsed with no new events enqueued.
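To make the deferred model concrete, here is a small Python simulation of a buffered publisher. It is not the .NET EventHubBufferedProducerClient and does not call Azure: BufferedPublisher, send_batch, max_batch_size and max_wait_time are hypothetical names chosen to mirror the behaviour described above, and time is passed in explicitly so the flush rules are easy to follow. A batch is sent either when it fills up or when no new event has been enqueued for max_wait_time seconds.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class BufferedPublisher:
    """Toy model of deferred, buffered publishing; not the Azure SDK type."""
    send_batch: Callable[[List[str]], None]  # hypothetical transport callback
    max_batch_size: int = 3
    max_wait_time: float = 1.0               # idle seconds before a partial flush
    _buffer: List[str] = field(default_factory=list, init=False)
    _last_enqueued: float = field(default=0.0, init=False)

    def enqueue(self, event: str, now: float) -> None:
        # Enqueuing only buffers the event; nothing is sent yet.
        self._buffer.append(event)
        self._last_enqueued = now
        if len(self._buffer) >= self.max_batch_size:
            self._flush()  # batch is full: publish it

    def tick(self, now: float) -> None:
        # Called periodically: publish a partial batch once no new events
        # have been enqueued for max_wait_time seconds.
        if self._buffer and now - self._last_enqueued >= self.max_wait_time:
            self._flush()

    def _flush(self) -> None:
        batch, self._buffer = self._buffer, []
        self.send_batch(batch)


if __name__ == "__main__":
    sent: List[List[str]] = []
    pub = BufferedPublisher(send_batch=sent.append)
    for i, event in enumerate(["e1", "e2", "e3", "e4"]):
        pub.enqueue(event, now=i * 0.1)
    pub.tick(now=0.5)   # only 0.2 s idle: e4 stays buffered
    pub.tick(now=1.5)   # 1.2 s idle: e4 is published as a partial batch
    print(sent)         # [['e1', 'e2', 'e3'], ['e4']]
```

The caller never builds batches itself, which is the same division of responsibility the buffered client offers compared with EventHubProducerClient.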

Option A is incorrect:

The EventHubProducerClient publishes immediately, ensuring a deterministic outcome for each send operation, but it requires callers to take responsibility for building and managing batches. When events do not need to be published immediately, consider using the EventHubBufferedProducerClient, which builds and manages batches for you and reduces the complexity of doing so in application code.

Option B is incorrect:

The EventHubConsumerClient class is used to consume events from an Event Hub.

Option D is incorrect:

EventProcessorCheckpoint contains information that reflects the state of event processing for a given Event Hub partition. It is not used to publish events.

For more information on Azure Event Hubs, please refer to the following URL:

Domain: Connect to and consume Azure services and third-party services

Q27: You are working on an image library application. When an author uploads a new image, you need to save the raw image, convert it into various formats, and save the results to the database. You also need to be able to view the logs of failed API calls and retry them.

Which of the following solutions would you recommend?

A. Create the Azure Service Bus topics “raw-image-topic” and “image-processer-topic”.

Create a subscription for each of these topics.

Create two APIs, each subscribing to one of these subscriptions.

B. Create an Azure Service Bus topic called “image-topic” and create two subscriptions named “raw-image-sub” and “image-processer-sub”.

Create two APIs, each subscribing to one of these subscriptions.

When the publisher publishes a message to the topic, each subscriber receives its own copy and consumes it independently.

C. Create two Azure Functions APIs named “AfSaveRawImage” and “AfFormatImage”.

Call these APIs independently from the client side, passing the raw image file as the payload.

D. Create two Azure Service Bus queues, “raw-image-queue” and “format-image-queue”, and create an API for each queue to consume the messages.

Answer: B

Explanation

Option B is correct:

Azure Service Bus topics and subscriptions provide one-to-many communication in a publish/subscribe pattern. They are useful for scaling to large numbers of recipients.

Each message published to image-topic is made available to every subscription registered with it. When a publisher sends a message to image-topic, raw-image-sub and image-processer-sub each receive their own copy of the message.

The consumers of raw-image-sub and image-processer-sub run independently of one another. If a consumer fails to process a message within the maximum delivery count (10 by default), Service Bus moves the message to that subscription’s dead-letter queue, where you can inspect the failed messages and resubmit them.
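The fan-out and dead-letter behaviour can be modelled in a few lines of Python. This is an in-memory sketch, not the Azure Service Bus SDK: Topic, Subscription, process and resubmit_dead_letters are hypothetical names. Each subscription gets its own copy of a published message and its own dead-letter queue, and a message is dead-lettered after max_delivery_count failed attempts (the default of 10 mirrors Service Bus). The resubmit step is simplified; in Service Bus you would receive the message from the dead-letter subqueue and send it again.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


class Subscription:
    """Toy model of a Service Bus subscription with its own dead-letter queue."""

    def __init__(self, name: str, handler: Callable[[str], None],
                 max_delivery_count: int = 10):
        self.name = name
        self.handler = handler
        self.max_delivery_count = max_delivery_count
        self.active: Deque[str] = deque()
        self.dead_letter: Deque[str] = deque()
        self.completed: List[str] = []

    def process(self) -> None:
        while self.active:
            msg = self.active.popleft()
            # Each failed attempt counts as one delivery.
            for _ in range(self.max_delivery_count):
                try:
                    self.handler(msg)
                except Exception:
                    continue
                self.completed.append(msg)
                break
            else:
                # Max delivery count reached: park the message for inspection.
                self.dead_letter.append(msg)

    def resubmit_dead_letters(self) -> None:
        # Simplified retry: move dead-lettered messages back to this subscription.
        while self.dead_letter:
            self.active.append(self.dead_letter.popleft())


class Topic:
    def __init__(self) -> None:
        self.subscriptions: Dict[str, Subscription] = {}

    def add_subscription(self, sub: Subscription) -> None:
        self.subscriptions[sub.name] = sub

    def publish(self, msg: str) -> None:
        # Fan-out: every subscription receives its own copy of the message.
        for sub in self.subscriptions.values():
            sub.active.append(msg)


if __name__ == "__main__":
    converter_up = False

    def save_raw(msg: str) -> None:
        print("saved raw", msg)

    def format_image(msg: str) -> None:
        if not converter_up:
            raise RuntimeError("converter unavailable")
        print("formatted", msg)

    topic = Topic()
    raw = Subscription("raw-image-sub", save_raw)
    fmt = Subscription("image-processer-sub", format_image, max_delivery_count=3)
    topic.add_subscription(raw)
    topic.add_subscription(fmt)

    topic.publish("cat.png")
    raw.process()                      # succeeds independently of fmt
    fmt.process()                      # fails 3 times, then is dead-lettered
    print(list(fmt.dead_letter))       # ['cat.png']

    converter_up = True                # fix the cause, then retry
    fmt.resubmit_dead_letters()
    fmt.process()
    print(fmt.completed)               # ['cat.png']
```

Note that the failure in image-processer-sub never blocks raw-image-sub, which is the independence the explanation relies on.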

Option A is incorrect:

When you want to perform multiple actions (for example, saving the raw image file and converting the image to multiple formats) on the same data, use a single topic with multiple subscriptions, because you can easily add more subscriptions for more actions. With separate topics, the publisher would have to send the same message to each topic.

Option C is incorrect because it does not meet the requirements. When stand-alone APIs are called directly from the client, there is no built-in way to view failed calls and retry them.

Option D is incorrect because a queue allows a message to be processed by a single consumer, so the publisher would have to send the same message to both queues. When you want to perform multiple actions (for example, saving the raw image file and converting the image to multiple formats) on the same data, use a single topic with one subscription per action.

For more information on Azure Service Bus, please refer to the following URL:

Domain: Monitor, troubleshoot, and optimize Azure solutions

Q28: You are developing a solution that uses Azure Cache for Redis. None of the keys has a TTL value set, but you still need keys to be removed when the system is under memory pressure. Which eviction policy for Azure Cache for Redis would you recommend?

A. allkeys-lru

B. volatile-lfu

C. noeviction

D. volatile-random

Answer: A

Explanation

Option A is correct:

The allkeys-lru policy keeps the most recently used keys and removes the least recently used (LRU) keys, whether or not they have a TTL set.

With the default volatile-lru policy, only keys that have a TTL are eligible for eviction, so if no keys have a TTL value, the system won’t evict any keys. If you want the system to be able to evict any key under memory pressure, use the allkeys-lru policy.
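The difference between allkeys-lru and volatile-lru can be illustrated with a toy cache in Python. This is a simplified model, not Azure Cache for Redis or a Redis client API: TinyCache is a hypothetical class that counts keys instead of bytes, evicts the exact least recently used key (Redis uses an approximated LRU), and does not model key expiry itself. Under volatile-lru only keys with a TTL are candidates, so when none exist the write fails, which matches how Redis behaves when no key qualifies for eviction.

```python
from collections import OrderedDict
from typing import Dict, Optional


class TinyCache:
    """Toy model of Redis maxmemory eviction; capacity counts keys, not bytes."""

    def __init__(self, capacity: int, policy: str = "volatile-lru"):
        if policy not in ("allkeys-lru", "volatile-lru", "noeviction"):
            raise ValueError(f"unsupported policy: {policy}")
        self.capacity = capacity
        self.policy = policy
        self.data: "OrderedDict[str, str]" = OrderedDict()  # order = recency
        self.ttl: Dict[str, int] = {}  # keys that have a TTL (expiry not modeled)

    def get(self, key: str) -> Optional[str]:
        if key in self.data:
            self.data.move_to_end(key)  # mark as most recently used
            return self.data[key]
        return None

    def set(self, key: str, value: str, ttl: Optional[int] = None) -> None:
        if key not in self.data and len(self.data) >= self.capacity:
            self._evict_one()
        self.data[key] = value
        self.data.move_to_end(key)
        if ttl is not None:
            self.ttl[key] = ttl
        else:
            self.ttl.pop(key, None)  # like SET without EX, clears any TTL

    def _evict_one(self) -> None:
        if self.policy == "allkeys-lru":
            candidates = list(self.data)  # every key is eligible
        elif self.policy == "volatile-lru":
            candidates = [k for k in self.data if k in self.ttl]  # TTL keys only
        else:
            candidates = []  # noeviction
        if not candidates:
            # Nothing can be evicted, so the write is rejected.
            raise MemoryError(f"OOM: no key can be evicted under {self.policy}")
        victim = candidates[0]  # front of the OrderedDict = least recently used
        del self.data[victim]
        self.ttl.pop(victim, None)


if __name__ == "__main__":
    cache = TinyCache(capacity=2, policy="allkeys-lru")
    cache.set("a", "1")
    cache.set("b", "2")
    cache.get("a")           # "a" is now the most recently used key
    cache.set("c", "3")      # evicts "b", the least recently used key
    print(list(cache.data))  # ['a', 'c']

    strict = TinyCache(capacity=2, policy="volatile-lru")
    strict.set("a", "1")
    strict.set("b", "2")
    try:
        strict.set("c", "3")  # no key has a TTL, so nothing is evictable
    except MemoryError as err:
        print(err)
```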

Option B is incorrect:

The volatile-lfu policy removes the least frequently used keys among those that have an expiration (TTL) set. Because no keys have a TTL here, it would not evict anything.

Option C is incorrect:

With the noeviction policy, new values aren’t saved when the memory limit is reached. When a database uses replication, this applies to the primary database. No keys are removed, so the requirement is not met.

Option D is incorrect:

The volatile-random policy randomly removes keys that have an expiration set. Because no keys have a TTL here, it would not evict anything.

For more information on configuring cache and expiration policies for Azure Cache for Redis, please refer to the following URL:

Domain: Develop for Azure storage

Q29: You are developing an application that uses Azure Cosmos DB to store data. In the current implementation, you have found performance issues caused by a hot partition that prevents Azure Cosmos DB from scaling well. Which of the following caused the hot partition?

A. Querying over the non-partition key

B. Querying over the single partition key

C. Not defining any indexing policy

D. Creating partition key design that distributes requests unevenly

Answer: D

Explanation

Option D is correct:

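Hot partitions occur when the partition key distributes data or requests unevenly across logical partitions: a few partition key values receive most of the storage or traffic, so the physical partitions that hold them become bottlenecks and Azure Cosmos DB cannot scale effectively.

Instead, choose a partition key that distributes storage and throughput uniformly, typically one with a wide range of distinct values.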
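A quick simulation shows why the partition key choice, rather than the query shape, creates a hot partition. The sketch assumes a hypothetical workload in which 90% of requests come from one tenant and compares tenantId and userId (both hypothetical property names) as partition keys. Hashing with MD5 and taking a modulo is a stand-in for illustration only; Azure Cosmos DB uses its own hash function and maps hash ranges to physical partitions.

```python
import hashlib
from collections import Counter
from typing import Dict, List


def physical_partition(key_value: str, partitions: int) -> int:
    # Stable hash so results are repeatable (Python's str hash is salted per run).
    digest = hashlib.md5(key_value.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partitions


def hottest_share(requests: List[Dict[str, str]], key_path: str,
                  partitions: int = 10) -> float:
    """Fraction of all requests that land on the busiest partition."""
    load = Counter(physical_partition(r[key_path], partitions) for r in requests)
    return max(load.values()) / len(requests)


def sample_workload(n: int = 10_000) -> List[Dict[str, str]]:
    # Hypothetical skew: 90% of requests come from a single tenant.
    return [{"tenantId": "contoso" if i % 10 else f"tenant-{i % 100}",
             "userId": f"user-{i}"} for i in range(n)]


if __name__ == "__main__":
    reqs = sample_workload()
    print("tenantId:", hottest_share(reqs, "tenantId"))  # at least 0.9: hot partition
    print("userId:  ", hottest_share(reqs, "userId"))    # close to 1/10
```

With tenantId as the key, at least 90% of the traffic lands on one partition no matter how many partitions exist; with the high-cardinality userId, the load spreads roughly evenly.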

Option A is incorrect:

Querying on a property other than the partition key results in a cross-partition query, which fans out to all physical partitions. Such queries can be less efficient, but they do not cause a hot partition.

Option B is incorrect:

Querying with a single partition key value results in a single-partition query: Azure Cosmos DB can fetch the results efficiently because it knows which physical partition holds the data. This does not cause a hot partition.

Option C is incorrect:

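In Azure Cosmos DB, every container has an indexing policy that dictates how the container’s items are indexed. If you don’t define one, the default indexing policy for newly created containers indexes every property of every item. Indexing affects query performance and RU cost, not how data is distributed across partitions, so it does not cause hot partitions.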

For more information on partitioning schemes and partition keys, please refer to the following URL:

https://learn.microsoft.com/en-us/azure/cosmos-db/partitioning-overview#physical-partitions

Domain: Develop for Azure storage

Q30: You are developing an application that uses Azure Cosmos DB to store data. All documents in a container should be deleted automatically after 24 hours. Which approach would you recommend to meet this requirement?

A. Set the “TTL” property for each document in the container.

B. Set the ‘TTL’ property from ContainerProperties class at the container creation time.

C. Set the DefaultTimeToLive property from ContainerProperties class at the container creation time.

D. Create a timer trigger that runs every 24 hours and deletes the documents from the container.

Answer: C

Explanation

Option C is correct:

To set the time to live on a container, you need to provide a non-zero positive number that indicates the time period in seconds.

You set the time to live on a container by using the DefaultTimeToLive property of the ContainerProperties class; for 24 hours, that value is 86400 seconds. Individual items can override the container value with their own ttl property.
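For 24 hours, DefaultTimeToLive is 24 × 60 × 60 = 86400 seconds. The Python sketch below models how container-level and item-level TTL combine, based on the documented rules: if the container’s DefaultTimeToLive is not set, items never expire and item ttl values are ignored; -1 means items do not expire unless they set their own ttl; a positive value applies to every item that does not override it. It is a model of the rules, not the Cosmos DB SDK, and effective_ttl and is_expired are hypothetical helper names. Expiry is measured from the item’s last modification time.

```python
from typing import Optional

# 24 h x 60 min x 60 s: the value to use for DefaultTimeToLive here.
SECONDS_PER_DAY = 24 * 60 * 60  # 86400


def effective_ttl(container_default_ttl: Optional[int],
                  item_ttl: Optional[int]) -> Optional[int]:
    """Seconds an item lives after its last write, or None if it never expires."""
    if container_default_ttl is None:
        return None  # TTL off on the container: any item-level ttl is ignored
    ttl = item_ttl if item_ttl is not None else container_default_ttl
    return None if ttl == -1 else ttl  # -1 means "never expire"


def is_expired(container_default_ttl: Optional[int], item_ttl: Optional[int],
               last_modified: int, now: int) -> bool:
    ttl = effective_ttl(container_default_ttl, item_ttl)
    return ttl is not None and now - last_modified >= ttl


if __name__ == "__main__":
    written_at = 1_700_000_000  # hypothetical _ts value (epoch seconds)
    print(is_expired(SECONDS_PER_DAY, None, written_at, written_at + 3600))             # False
    print(is_expired(SECONDS_PER_DAY, None, written_at, written_at + SECONDS_PER_DAY))  # True
    print(is_expired(SECONDS_PER_DAY, -1, written_at, written_at + 10 * SECONDS_PER_DAY))  # False
```

Setting the value once on the container means no document needs a ttl field of its own; only documents that must behave differently override it.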

Option A is not the best choice. An item-level ttl value takes effect only if DefaultTimeToLive is set on the parent container, and setting it on every document means every write must include it. Setting DefaultTimeToLive once on the container applies to all items.

Option B is incorrect because the ContainerProperties class does not have a property named ‘TTL’.

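Option D is incorrect because it adds unnecessary code and operational complexity, and a job that runs once every 24 hours cannot delete each document exactly 24 hours after it was written. The DefaultTimeToLive property of the ContainerProperties class achieves the requirement declaratively.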

For more information on operations on data and Cosmos DB containers, please refer to the following URL: https://learn.microsoft.com/en-us/azure/cosmos-db/nosql/time-to-live

Summary

We hope this article has been helpful and that you now feel more confident and prepared after working through these AZ-204 exam questions and answers. To take your preparation a level further, go through the detailed practice tests on the official Whizlabs website. The AZ-204 practice tests closely resemble the actual exam, which helps build your confidence before you sit it.
