Did you come here looking for the FREE Questions and Answers on the AWS Certified Machine Learning Specialty certification? Find them here.

What to expect in the AWS Machine Learning Certification Exam?

The AWS Machine Learning Certification attests to your expertise in building, tuning, training, and deploying Machine Learning (ML) models on AWS. It helps organizations identify and develop talent with the critical skills needed to implement cloud initiatives.

Let’s Start Exploring!

Domain : Exploratory Data Analysis

Q1 : You work for a financial services firm that wishes to further enhance its fraud detection capabilities. The firm has implemented fine-grained logging for all transactions its customers make with their credit cards. The fraud prevention department would like to use this data to produce dashboards that give them insight into their customers’ transaction activity and to provide real-time fraud prediction.

You plan to build a fraud detection model using the transaction observation data with Amazon SageMaker. Each transaction observation has a date-time stamp. In its raw form, the date-time stamp is not very useful in your prediction model since it is unique. Can you make use of the date-time stamp in your fraud prediction model, and if so, how?

A. No, you cannot use the date-time stamp since this data point will never occur again. Unique features like this will not help identify patterns in your data.

B. Yes, you can use the date-time stamp data point. You can just use feature selection to deselect the date-time stamp data point, thus dropping it from the learning process.

C. Yes, you can use the date-time stamp data point. You can transform the date-time stamp into features for the hour of the day, the day of the week, and the month.

D. No, you cannot use the date-time feature since there is no way to transform it into a unique data point.

Correct Answer: C

Explanation

Option A is incorrect since you can use the date-time stamp if you use feature engineering to transform the data point into a useful form.

Option B is incorrect since this option is really just another way of ignoring, thus not using, the date-time stamp data point.

Option C is correct. You can transform the data point using feature engineering and thus gain value from it for the learning process of your model. (See the AWS Machine Learning blog post: Simplify machine learning with XGBoost and Amazon SageMaker: https://aws.amazon.com/blogs/machine-learning/simplify-machine-learning-with-xgboost-and-amazon-sagemaker/)

Option D is incorrect since we can transform the data point into separate features that represent the hour of the day, the day of the week, and the month. These features could help the model learn whether fraudulent activity tends to occur at a particular hour, day of the week, or month.
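The transformation in option C can be sketched with Python's standard `datetime` module. This is a minimal sketch, assuming ISO-formatted timestamp strings; the transaction fields and values are hypothetical. In a pandas workflow, the `.dt.hour`, `.dt.dayofweek`, and `.dt.month` accessors do the same job.

```python
from datetime import datetime


def timestamp_features(timestamp):
    """Derive hour-of-day, day-of-week, and month features from one timestamp string."""
    dt = datetime.fromisoformat(timestamp)
    return {
        "hour_of_day": dt.hour,       # 0-23
        "day_of_week": dt.weekday(),  # Monday=0 ... Sunday=6
        "month": dt.month,            # 1-12
    }


# Hypothetical transaction records; the field names are illustrative only.
transactions = [
    {"amount": 125.00, "timestamp": "2023-01-06 23:15:00"},
    {"amount": 42.50, "timestamp": "2023-03-14 08:02:00"},
    {"amount": 980.10, "timestamp": "2023-12-25 14:47:00"},
]

# Replace the unique raw timestamp with repeatable calendar features.
features = []
for txn in transactions:
    row = {key: value for key, value in txn.items() if key != "timestamp"}
    row.update(timestamp_features(txn["timestamp"]))
    features.append(row)
```

Each derived value recurs across many transactions (every Friday, every 11 p.m. hour), which is what lets a model find patterns that the unique raw timestamp cannot provide.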

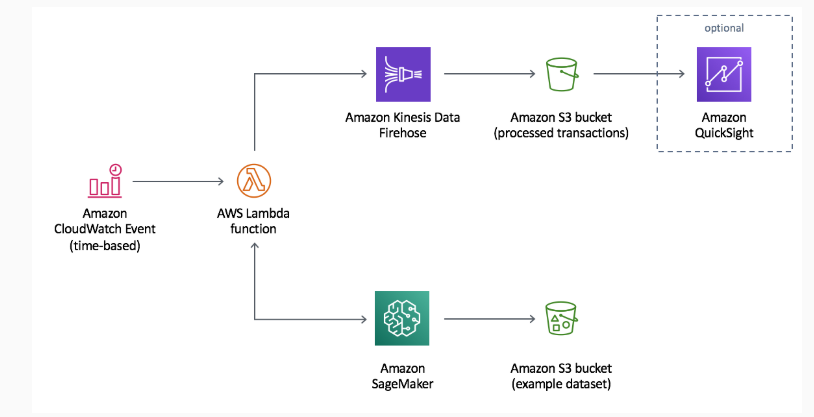

Diagram:

- Here is a screenshot from the AWS Machine Learning documentation depicting a typical fraud detection machine learning solution:

Reference: Please see the Amazon Machine Learning developer documentation: https://docs.aws.amazon.com/machine-learning/latest/dg/feature-processing.html.

Domain : Modeling

Q2 : You work for a real estate company where you are building a machine learning model to predict the prices of houses. You are using a regression decision tree. As you train your model, you see that it is overfitted to your training data, and it doesn’t generalize well to unseen data. How can you improve your situation and get better training results most efficiently?

A. Use a random forest by building multiple randomized decision trees and averaging their outputs to get the predictions of the housing prices.

B. Gather additional training data that gives a more diverse representation of the housing price data.

C. Use the “dropout” technique to penalize large weights and prevent overfitting.

D. Use feature selection to eliminate irrelevant features and iteratively train your model until you eliminate the overfitting.

Correct Answer: A

Explanation

Option A is correct because the random forest algorithm, which averages the outputs of many randomized trees, is well known to improve prediction accuracy and reduce the overfitting that occurs with a single decision tree. (See these articles comparing the decision tree and random forest algorithms: https://medium.com/datadriveninvestor/decision-tree-and-random-forest-e174686dd9eb and https://towardsdatascience.com/decision-trees-and-random-forests-df0c3123f991)

Option B is incorrect since gathering additional data will not necessarily fix the overfitting problem, especially if the additional data has the same noise level as the original data.

Option C is incorrect since, while the “dropout” technique reduces overfitting, it is used with neural networks (it randomly drops units during training rather than penalizing large weights), not with decision trees.

Option D is incorrect since it requires significantly more effort than using the random forest algorithm approach.
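To make option A concrete, here is a toy, pure-Python sketch of the random forest idea: each tree is grown on a bootstrap sample of the houses, each split considers a random subset of features, and the forest's prediction is the average across trees. The house data, depth limit, and function names are hypothetical; this is not SageMaker's or scikit-learn's implementation, and in practice you would use a library class such as scikit-learn's `RandomForestRegressor`.

```python
import random
from statistics import mean


def _sse(values):
    """Sum of squared errors around the mean (the impurity of a regression node)."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values)


def build_tree(rows, targets, max_depth, n_features, rng):
    """Grow a depth-limited regression tree; each split tries a random feature subset."""
    if max_depth == 0 or len(set(targets)) == 1:
        return mean(targets)  # leaf: predict the average price of its houses
    best = None
    for f in rng.sample(range(len(rows[0])), n_features):
        for threshold in sorted({r[f] for r in rows}):
            left = [t for r, t in zip(rows, targets) if r[f] <= threshold]
            right = [t for r, t in zip(rows, targets) if r[f] > threshold]
            if left and right:
                score = _sse(left) + _sse(right)
                if best is None or score < best[0]:
                    best = (score, f, threshold)
    if best is None:
        return mean(targets)  # no valid split among the sampled features
    _, f, threshold = best
    goes_left = [r[f] <= threshold for r in rows]

    def subset(side):
        return ([r for r, g in zip(rows, goes_left) if g == side],
                [t for t, g in zip(targets, goes_left) if g == side])

    (lr, lt), (rr, rt) = subset(True), subset(False)
    return (f, threshold,
            build_tree(lr, lt, max_depth - 1, n_features, rng),
            build_tree(rr, rt, max_depth - 1, n_features, rng))


def predict_tree(node, row):
    """Walk from the root to a leaf and return the leaf's average price."""
    while isinstance(node, tuple):
        f, threshold, left, right = node
        node = left if row[f] <= threshold else right
    return node


def random_forest(rows, targets, n_trees=25, max_depth=3, n_features=1, seed=0):
    """Train each tree on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]
        trees.append(build_tree([rows[i] for i in idx], [targets[i] for i in idx],
                                max_depth, n_features, rng))
    return trees


def predict_forest(trees, row):
    """Average the individual tree predictions."""
    return mean(predict_tree(t, row) for t in trees)


# Hypothetical houses: (hundreds of square feet, bedrooms) -> price in thousands.
houses = [(10, 2), (12, 2), (14, 3), (16, 3), (18, 4), (20, 4), (22, 5), (24, 5)]
prices = [150, 170, 205, 225, 260, 280, 315, 335]
forest = random_forest(houses, prices)
```

Because each tree sees a different bootstrap sample and different candidate features, their individual errors partly cancel when averaged, which is the variance reduction that counters a single tree's overfitting.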

Reference: Please see this overview of the random forest machine learning algorithm: https://medium.com/capital-one-tech/random-forest-algorithm-for-machine-learning-c4b2c8cc9feb

Domain : Data Engineering

Q3 : You need to use machine learning to produce real-time analysis of streaming data from IoT devices out in the field. These devices monitor oil well rigs for malfunction. Due to the safety and security nature of these IoT events, the events must be analyzed by your safety engineers in real-time. You also have an audit requirement to retain your IoT device events for 7 days since you cannot fail to process any of the events. Which approach would give you the best solution for processing your streaming data?

A. Use Amazon Kinesis Data Streams and its Kinesis Producer Library to pass your events from your producers to your Kinesis stream.

B. Use Amazon Kinesis Data Streams and its Kinesis API PutRecords call to pass your events from your producers to your Kinesis stream.

C. Use Amazon Kinesis Data Streams and its Kinesis Client Library to pass your events from your producers to your Kinesis stream.

D. Use Amazon Kinesis Data Firehose to pass your events directly to your S3 bucket where you store your machine learning data.

Correct Answer: B

Explanation

Option A is incorrect. The Amazon Kinesis Producer Library (KPL) is not well suited to real-time processing of event data since, according to the AWS developer documentation, “it can incur an additional processing delay of up to RecordMaxBufferedTime within the library”. Therefore, it is not the best choice for a real-time analytics solution. (See the AWS developer documentation titled Developing Producers Using the Amazon Kinesis Producer Library)

Option B is correct. The Amazon Kinesis Data Streams API PutRecords call is the best choice for processing in real-time since it sends its data synchronously and does not have the processing delay of the Producer Library. Therefore, it is better suited to real-time applications. (See the AWS developer documentation titled Developing Producers Using the Amazon Kinesis Data Streams API with the AWS SDK for Java)

Option C is incorrect. The Kinesis Client Library (KCL) is used to build consumer applications that read and process records from a stream. It is not used by producers to pass events into the stream, so it does not address this requirement. (See the AWS developer documentation titled Developing Consumers Using the Kinesis Client Library 1.x)

Option D is incorrect. Amazon Kinesis Data Firehose delivers your event data directly to your S3 bucket for use in your real-time analytics model. However, if delivery fails, Firehose retries for a maximum of 24 hours, which does not satisfy your 7-day retention requirement. (See the Amazon Kinesis Data Firehose FAQs)
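A minimal sketch of option B with boto3, assuming JSON-encoded events keyed by a hypothetical `device_id` field and a hypothetical stream name. It relies on the real `put_records` and `increase_stream_retention_period` Kinesis client calls; PutRecords accepts at most 500 records per request and can partially fail, so the sketch returns any failed events for the caller to retry. The default stream retention is 24 hours, so the retention helper raises it to 7 days.

```python
import json

MAX_RECORDS_PER_CALL = 500  # PutRecords limit per request


def build_put_records_batch(events, partition_key_field="device_id"):
    """Convert IoT event dicts into the Records list expected by PutRecords."""
    return [
        {
            "Data": json.dumps(event).encode("utf-8"),
            "PartitionKey": str(event[partition_key_field]),
        }
        for event in events
    ]


def failed_events(events, response):
    """PutRecords is not all-or-nothing: failed entries carry an ErrorCode."""
    return [event for event, result in zip(events, response["Records"])
            if "ErrorCode" in result]


def send_events(events, stream_name):
    """Send events synchronously; return those that must be retried."""
    import boto3  # imported here so the helpers above work without AWS credentials

    kinesis = boto3.client("kinesis")
    pending = []
    for start in range(0, len(events), MAX_RECORDS_PER_CALL):
        chunk = events[start:start + MAX_RECORDS_PER_CALL]
        response = kinesis.put_records(StreamName=stream_name,
                                       Records=build_put_records_batch(chunk))
        pending.extend(failed_events(chunk, response))
    return pending


def ensure_seven_day_retention(stream_name):
    """Raise the stream's retention period from the 24-hour default to 7 days."""
    import boto3

    boto3.client("kinesis").increase_stream_retention_period(
        StreamName=stream_name, RetentionPeriodHours=7 * 24)
```

For example, `send_events(events, "oil-rig-telemetry")` (a hypothetical stream name) would return an empty list when every record was accepted.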

Reference: Please see the Amazon Kinesis Data Streams documentation.

Domain : Data Engineering

Q4 : You work as a machine learning specialist at a marketing company. Your team has gathered market data about your users into an S3 bucket. You have been tasked to write an AWS Glue job to convert the files from JSON to a format that will be used to store Hive data. Which data format is the most efficient to convert the data for use with Hive?

A. Ion

B. grokLog

C. XML

D. ORC

Correct Answer: D

Explanation

Option A is incorrect. Currently, AWS Glue does not support ion for output. (See the AWS developer guide documentation titled Format Options for ETL Inputs and Outputs in AWS Glue)

Option B is incorrect. Currently, AWS Glue does not support grokLog for output. (See the AWS developer guide documentation titled Format Options for ETL Inputs and Outputs in AWS Glue)

Option C is incorrect. Currently, AWS Glue does not support xml for output. (See the AWS developer guide documentation titled Format Options for ETL Inputs and Outputs in AWS Glue)

Option D is correct. From the Apache Hive Language Manual: “The Optimized Row Columnar (ORC) file format provides a highly efficient way to store Hive data. It was designed to overcome the limitations of the other Hive file formats. Using ORC files improves performance when Hive is reading, writing, and processing data.” Also, AWS Glue supports orc for output. (See the Apache Hive Language Manual and the AWS developer guide documentation titled Format Options for ETL Inputs and Outputs in AWS Glue)

Reference: Please see the AWS developer guide documentation titled General Information about Programming AWS Glue ETL Scripts.

Domain : Exploratory Data Analysis

Q5 : You work as a machine learning specialist for a consulting firm where you analyze data about the firm’s consultants in preparation for using the data in your machine learning models. The features in your data include employee ID, specialty, practice, job description, billing hours, and principal. The principal attribute is represented as ‘yes’ or ‘no’, indicating whether the consultant has reached principal level. For your initial analysis, you need to identify the distribution of consultants and their billing hours for the given period. What visualization best describes this relationship?

A. Scatter plot

B. Histogram

C. Line chart

D. Box plot

E. Bubble chart

Correct Answer: B

Explanation

Option A is incorrect. You are looking for the distribution of a single dimension: the consultants’ billing hours. From the Amazon QuickSight User Guide titled Working with Visual Types in Amazon QuickSight, “A scatter chart shows multiple distributions, i.e., two or three measures for a dimension.”

Option B is correct. You are looking for the distribution of a single dimension: the consultants’ billing hours. From the Wikipedia article titled Histogram, “A histogram is an accurate representation of the distribution of numerical data. It is an estimate of the probability distribution of a continuous variable.” In this question, the continuous variable is billing hours: the hours are binned into ranges on the x-axis, and the y-axis shows the frequency, that is, the number of consultants whose billing hours fall in each range.

Option C is incorrect. From the Amazon QuickSight User Guide titled Working with Visual Types in Amazon QuickSight, “Use line charts to compare changes in measured values over a period of time.” You are looking for a distribution, not a comparison of changes over a period of time.

Option D is incorrect. From the Statistics How To article titled Types of Graphs Used in Math and Statistics, “A boxplot, also called a box and whisker plot, is a way to show the spread and centers of a data set. Measures of spread include the interquartile range and the mean of the data set. Measures of the center include the mean or average and median (the middle of a data set).” A Box Plot shows the distribution of multiple dimensions of the data. Once again, you are looking for a distribution of a single dimension, not a distribution on multiple dimensions.

Option E is incorrect. From the Wikipedia article titled Bubble Chart, “A bubble chart is a type of chart that displays three dimensions of data. Each entity with its triplet (v1, v2, v3) of associated data is plotted as a disk that expresses two of the vi values through the disk’s xy location and the third through its size.” Once again, you are looking for a distribution of a single dimension, not a distribution on three dimensions.
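The binning that a histogram performs can be sketched in a few lines of Python. The billing hours and the 20-hour bin width below are hypothetical; in practice, QuickSight or a plotting library would draw the bars.

```python
from collections import Counter


def histogram_counts(values, bin_width):
    """Count values per bin, keyed by each bin's lower edge: [edge, edge + bin_width)."""
    counts = Counter((value // bin_width) * bin_width for value in values)
    return dict(sorted(counts.items()))


# Hypothetical billing hours for ten consultants over one period.
billing_hours = [120, 135, 142, 150, 158, 160, 163, 171, 175, 190]
bin_width = 20
bins = histogram_counts(billing_hours, bin_width)

# Text rendering: each row is a billing-hour range; bar length = number of consultants.
for edge, count in bins.items():
    print(f"{edge:>3}-{edge + bin_width - 1:<3} | {'#' * count}")
```

Only one dimension (billing hours) drives the chart; the count per bin is derived, which is why a histogram fits this question better than the multi-measure charts in options A, D, and E.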

Reference: Please see the Amazon QuickSight user guide titled Working with Amazon QuickSight Visuals and the Statistics How To article titled Types of Graphs Used in Math and Statistics.

Domain : Modeling

Q6 : You work as a machine learning specialist for a robotics manufacturer where you are attempting to use unsupervised learning to train your robots to perform their prescribed tasks. You have engineered your data and produced a CSV file and placed it on S3.

Which of the following input data channel specifications are correct for your data?

A. Metadata Content-Type is identified as text/csv

B. Metadata Content-Type is identified as application/x-recordio-protobuf;boundary=1

C. Metadata Content-Type is identified as application/x-recordio-protobuf;label_size=1

D. Metadata Content-Type is identified as text/csv;label_size=0

Correct Answer: D

Explanation

Option A is incorrect. A Content-Type of text/csv without a label_size is used when your data includes a target, which must be in the first column, since the default value of label_size is 1, meaning one target column. (See the Amazon SageMaker developer guide titled Common Data Formats for Training)

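Option B is incorrect. application/x-recordio-protobuf is the RecordIO-protobuf format, not CSV, and boundary is a parameter used with multipart content types such as multipart form data, not with SageMaker training data.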

Option C is incorrect. application/x-recordio-protobuf does not describe a CSV file, and for unsupervised learning the label_size should equal 0, indicating the absence of a target. (See the Amazon SageMaker developer guide titled Common Data Formats for Training)

Option D is correct. For unsupervised learning, the label_size equals 0, indicating the absence of a target. (See the Amazon SageMaker developer guide titled Common Data Formats for Training)
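As a sketch of how option D looks in practice, the function below builds one channel of the `InputDataConfig` list accepted by the SageMaker `CreateTrainingJob` API. The S3 URI and channel name are hypothetical placeholders. With the SageMaker Python SDK, the equivalent is passing `content_type="text/csv;label_size=0"` to `sagemaker.inputs.TrainingInput`.

```python
def unsupervised_csv_channel(s3_uri, channel_name="train"):
    """Build one InputDataConfig entry for a CreateTrainingJob request."""
    return {
        "ChannelName": channel_name,
        "DataSource": {
            "S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": s3_uri,
                "S3DataDistributionType": "FullyReplicated",
            }
        },
        # label_size=0 tells the built-in algorithm there is no target column.
        "ContentType": "text/csv;label_size=0",
    }


channel = unsupervised_csv_channel("s3://example-robotics-bucket/training/")  # hypothetical bucket
```

You would pass `[channel]` as the `InputDataConfig` argument of the boto3 SageMaker client's `create_training_job` call, alongside the other required fields.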

Reference: Please see the Amazon SageMaker developer guide, specifically Common Data Formats for Built-in Algorithms and Common Data Formats for Training.

Domain : Modeling

Q7 : You work as a machine learning specialist for a marketing firm. Your firm wishes to determine which customers in a dataset of its registered users will respond to a new proposed marketing campaign. You plan to use the XGBoost algorithm on the binary classification problem. In order to find the optimal model, you plan to run many hyperparameter tuning jobs to reach the best hyperparameter values. Which of the following hyperparameters must you use in your tuning jobs if your objective is set to multi:softprob?

A. Alpha

B. Base_score

C. Eta

D. Num_round

E. Gamma

F. Num_class

Correct Answers: D and F

Explanation

Option A is incorrect. The alpha hyperparameter sets the L1 regularization term on weights. This term is optional. (See the Amazon SageMaker developer guide titled XGBoost Hyperparameters)

Option B is incorrect. The base_score hyperparameter is used to set the initial prediction score of all instances. This term is optional. (See the Amazon SageMaker developer guide titled XGBoost Hyperparameters)

Option C is incorrect. The eta hyperparameter is the step size shrinkage used in updates to prevent overfitting. This term is optional. (See the Amazon SageMaker developer guide titled XGBoost Hyperparameters)

Option D is correct. The num_round hyperparameter sets the number of boosting rounds used to train the model. This term is required. (See the Amazon SageMaker developer guide titled XGBoost Hyperparameters)

Option E is incorrect. The gamma hyperparameter is used to set the minimum loss reduction required to make a further partition on a leaf node of the tree. This term is optional. (See the Amazon SageMaker developer guide titled XGBoost Hyperparameters)

Option F is correct. The num_class hyperparameter sets the number of classes. This term is required if the objective is set to multi:softmax or multi:softprob. (See the Amazon SageMaker developer guide titled XGBoost Hyperparameters)
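The required-versus-optional rule above can be captured in a small helper. This is a hypothetical function, not part of any AWS SDK; it reflects the fact that SageMaker passes hyperparameters to training jobs as a map of strings, and it enforces that num_round is always present and num_class is present for the multi:softmax and multi:softprob objectives.

```python
MULTICLASS_OBJECTIVES = {"multi:softmax", "multi:softprob"}


def xgboost_hyperparameters(objective, num_round, num_class=None, **optional):
    """Assemble SageMaker XGBoost hyperparameters as a string-to-string map."""
    hyperparameters = {"objective": objective, "num_round": str(num_round)}  # always required
    if objective in MULTICLASS_OBJECTIVES:
        if num_class is None:
            raise ValueError(f"num_class is required when objective is {objective}")
        hyperparameters["num_class"] = str(num_class)
    # Optional settings such as eta, alpha, gamma, or base_score pass through as strings.
    hyperparameters.update({name: str(value) for name, value in optional.items()})
    return hyperparameters


# The binary campaign-response problem expressed with multi:softprob, so two classes.
hp = xgboost_hyperparameters("multi:softprob", num_round=100, num_class=2, eta=0.2)
```

Optional settings such as eta or alpha are the natural candidates for tunable ranges in your tuning jobs, while the required ones must be supplied either way.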

Reference: Please see the Amazon SageMaker developer guide titled Automatic Model Tuning and the Amazon SageMaker developer guide titled How Hyperparameter Tuning Works.

Domain : Modeling

Q8 : You work as a machine learning specialist for a financial services company. You are building a machine learning model to perform futures price prediction. You have trained your model, and you now want to evaluate it to make sure it is not overtrained and can generalize.

Which of the following techniques is the appropriate method to cross-validate your machine learning model?

A. Leave One Out Cross Validation (LOOCV)

B. K-Fold Cross Validation

C. Stratified Cross Validation

D. Time Series Cross Validation

Correct Answer: D

Explanation

Option A is incorrect. Since we are trying to validate a time series dataset, we need a method that uses a rolling origin, with days 1 through n as training data and day n+1 as test data. LOOCV holds out one observation and trains on all of the others, including observations that occur after the held-out one, so it doesn’t give us this option. (See the article K-Fold and Other Cross-Validation Techniques)

Option B is incorrect. The K-Fold cross validation technique randomizes the test dataset. We cannot randomize our test dataset since we are trying to validate a time series dataset, and randomized time series data loses its time-related value.

Option C is incorrect. We are trying to cross-validate time series data. We cannot randomize the test data because it will lose its time-related value.

Option D is correct. The Time Series Cross Validation technique is the correct choice for cross-validating a time series dataset. Time series cross validation uses forward chaining, where the origin of the forecast moves forward in time: days 1 through n are training data and day n+1 is test data.
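Forward chaining can be sketched as a generator of index splits, assuming the observations are already sorted chronologically (index 0 is the oldest, so the indices are 0-based where the text above counts days from 1). The function name and the minimum training size are illustrative; scikit-learn's `TimeSeriesSplit` provides a library version of the same expanding-window idea.

```python
def forward_chaining_splits(n_observations, min_train_size=1):
    """Yield (train_indices, test_index) pairs whose forecast origin moves forward in time."""
    for n in range(min_train_size, n_observations):
        # Train on observations 0..n-1 and test on observation n; never train on the future.
        yield list(range(n)), n


splits = list(forward_chaining_splits(5, min_train_size=2))
```

Every training window ends before its test point, which is exactly the property that random K-Fold or LOOCV splits break.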

Reference: Please see the Amazon Machine Learning developer guide titled Cross Validation, and the article K-Fold and Other Cross-Validation Techniques.

Domain : Modeling

Q9 : You work as a machine learning specialist for a state highway administration department. Your department is trying to use machine learning to help determine the make and model of cars as they pass a camera on the state highways. You need to build a machine learning model to accomplish this problem.

Which modeling approach best fits your problem?

A. Multi-Class Classification

B. Simulation-based Reinforcement Learning

C. Binary Classification

D. Heuristic Approach

Correct Answer: A

Explanation

Option A is correct. Multi-Class Classification is used when your model needs to choose from a finite set of outcomes, such as this car make and model classification image recognition problem.

Option B is incorrect. Simulation-Based Reinforcement Learning is used in problems where your model needs to learn through trial and error. An image recognition problem with a finite set of outcomes is better suited to a multi-class classification model.

Option C is incorrect. Binary Classification is the approach you use when you are trying to predict a binary outcome. This make-and-model problem would not fit a binary classification model since you must choose from a finite set of more than two classes.

Option D is incorrect. The Heuristic Approach is used when a machine learning approach is not necessary. An example is calculating the rate of acceleration of a particle through space: well-known formulas for speed, inertia, and friction can solve a problem such as this.

Reference: Please see the Amazon SageMaker developer guide titled Linear Learner Algorithm, the Amazon SageMaker developer guide titled Reinforcement Learning with Amazon SageMaker RL, the Amazon Machine Learning developer guide titled Multiclass Classification, and the article titled What is the difference between a machine learning algorithm and a heuristic, and when to use each?

Domain : Modeling

Q10 : You work as a machine learning specialist for the highway toll collection division of the regional state area. The toll collection division uses cameras to identify car license plates as the cars pass through the various toll gates on the state highways. You are on the team that is using the SageMaker Image Classification algorithm to read and classify license plates by state and then identify the actual license plate number.

Very rarely, cars pass through the toll gates with plates from foreign countries, for example, Great Britain or Mexico. The outliers must not adversely affect your model’s predictions.

Which hyperparameter should you set, and to what value, to ensure these outliers do not adversely impact your model?

A. feature_dim set to 5

B. feature_dim set to 1

C. sample_size set to 10

D. sample_size set to 100

E. learning_rate set to 0.1

F. learning_rate set to 0.75

Correct Answer: E

Explanation

Option A is incorrect. The feature_dim hyperparameter is a setting on the K-Means and K-Nearest Neighbors algorithms, not the Image Classification algorithm.

Option B is incorrect. The feature_dim hyperparameter is a setting on the K-Means and K-Nearest Neighbors algorithms, not the Image Classification algorithm.

Option C is incorrect. The sample_size hyperparameter is a setting on the K-Nearest Neighbors algorithm, not the Image Classification algorithm.

Option D is incorrect. The sample_size hyperparameter is a setting on the K-Nearest Neighbors algorithm, not the Image Classification algorithm.

Option E is correct. The learning_rate hyperparameter sets the size of each weight update during training, which governs how quickly the model adapts to new data. Valid values range from 0.0 to 1.0. Setting this hyperparameter to a low value, such as 0.1, makes the model learn more slowly, so any single outlier moves the weights less. This is what you want: your model should not be adversely impacted by outlier data.

Option F is incorrect. The learning_rate hyperparameter sets the size of each weight update during training, which governs how quickly the model adapts to new data. Valid values range from 0.0 to 1.0. Setting this hyperparameter to a high value, such as 0.75, makes the model learn more quickly but also makes it sensitive to outliers. This is not what you want: your model should not be adversely impacted by outlier data.
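A toy illustration, not the Image Classification algorithm itself: a single gradient-descent step on a squared loss for one weight shows why a smaller learning_rate limits the pull of an outlier. The starting weight of 10.0 and the outlier target of 100.0 are hypothetical.

```python
def sgd_step(weight, target, learning_rate):
    """One gradient-descent step on the squared loss 0.5 * (weight - target) ** 2."""
    gradient = weight - target
    return weight - learning_rate * gradient


# Hypothetical: the weight has settled at 10.0 on typical data,
# then a single outlier with target 100.0 arrives.
for lr in (0.1, 0.75):
    print(lr, sgd_step(10.0, 100.0, lr))
```

With learning_rate 0.1 the outlier moves the weight from 10.0 to 19.0; with 0.75 it drags the weight to 77.5, most of the way toward the outlier.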

Reference: Please see the Amazon SageMaker developer guide titled Image Classification Hyperparameters, and the Amazon SageMaker developer guide titled Use Amazon SageMaker Built-in Algorithms.

Domain : Data Engineering

Q11 : You work as a machine learning specialist at a hedge fund firm. Your firm is working on a new quant algorithm to predict when to enter and exit holdings in their portfolio. You are building a machine learning model to predict these entry and exit points in time. You have cleaned your data, and you are now ready to split the data into training and test datasets.

Which splitting technique is best suited to your model’s requirements?

A. Use k-fold cross validation to split the data.

B. Sequentially splitting the data

C. Randomly splitting the data

D. Categorically splitting the data by holding

Correct Answer: B

Explanation

Option A is incorrect. Using k-fold cross validation will randomly split your data. But you need to consider the time-series nature of your data when splitting. So randomizing the data would eliminate the time element of your observations, making the datasets unusable for predicting price changes over time.

Option B is correct. By sequentially splitting the data, you preserve the time element of your observations.

Option C is incorrect. Randomly splitting the data would eliminate the time element of your observations, making the datasets unusable for predicting price changes over time.

Option D is incorrect. If you split the data by a category such as the holding attribute, you would create imbalanced training and test datasets, since some holdings would appear only in the training dataset and others only in the test dataset.
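A sequential split (option B) can be sketched as "sort by time, then cut". The 80/20 ratio, the `day` field, and the observations are hypothetical choices for illustration.

```python
def sequential_split(records, train_fraction=0.8, time_key="day"):
    """Sort by time, then put the earliest records in training and the latest in test."""
    ordered = sorted(records, key=lambda record: record[time_key])
    cut = int(len(ordered) * train_fraction)
    return ordered[:cut], ordered[cut:]


# Hypothetical daily observations, deliberately listed out of order.
observations = [{"day": d, "close": 100 + d} for d in (5, 1, 9, 3, 7, 2, 8, 4, 10, 6)]
train, test = sequential_split(observations)
```

Unlike a random split, every test observation comes after every training observation, so the model is evaluated the way it will be used: predicting forward in time.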

Reference: Please see the Amazon Machine Learning developer guide titled Splitting Your Data.

Domain : Exploratory Data Analysis

Q12 : You work for a major banking firm as a machine learning specialist. As part of the bank’s fraud detection team, you build a machine learning model to detect fraudulent transactions. Using your training dataset, you have produced a Receiver Operating Characteristic (ROC) curve, and your model shows 99.99% accuracy. Your transaction dataset is very large, but 99.99% of the observations in your dataset represent non-fraudulent transactions. Therefore, the fraudulent observations are a minority class, and your dataset is very imbalanced.

You have the approval from your management team to produce the most accurate model possible, even if it means spending more time perfecting the model. What is the most effective technique to address the imbalance in your dataset?

A. Synthetic Minority Oversampling Technique (SMOTE) oversampling

B. Random oversampling

C. Generative Adversarial Networks (GANs) oversampling

D. Edited Nearest Neighbor undersampling

Correct Answer: C

Explanation

Option A is incorrect. The SMOTE technique creates new observations of the underrepresented class, in this case, the fraudulent observations, by interpolating between a minority observation and one of its nearest minority-class neighbors. These synthetic observations are therefore almost identical to the original fraudulent observations. This technique is fast, but the synthetic observations it produces are not as useful as the unique observations created by other oversampling techniques.

Option B is incorrect. Random oversampling uses copies of some of the minority class observations (randomly selected) to augment the minority class observation set. These observations are exact replicas of existing minority class observations, making them less effective than observations created by other techniques that produce unique synthetic observations.

Option C is correct. The Generative Adversarial Networks (GANs) technique generates unique observations that more closely resemble the real minority observations without being so similar that they are almost identical. This results in more unique observations of your minority class that improve your model’s accuracy by helping to correct the imbalance in your data.

Option D is incorrect. Using an undersampling technique would remove potentially useful majority class observations. Additionally, you would have to remove so many majority class observations to correct the imbalance that your training dataset would become useless.

Reference: Please see the Wikipedia article titled Oversampling and undersampling in data analysis, and the article titled Imbalanced data and credit card fraud.

Domain : Exploratory Data Analysis

Q13 : You work for the security department of your firm. As part of securing your firm’s email activity from phishing attacks, you need to build a machine learning model that analyzes incoming email text to find word phrases like “you’re a winner” or “click here now” to find potential phishing emails.

Which of the following text feature engineering techniques is the best solution for this task?

A. Orthogonal Sparse Bigram (OSB)

B. Term Frequency-Inverse Document Frequency (tf-idf)

C. Bag-of-Words

D. N-Gram

Correct Answer: D

Explanation

Option A is incorrect. The Orthogonal Sparse Bigram natural language processing algorithm creates groups of words and outputs the pairs of words that include the first word. You are trying to classify an email as a phishing attack by having your model learn based on the presence of multi-word phrases in the email text, not pairs of words from the email text stream using the first word as the key.

Option B is incorrect. Term Frequency-Inverse Document Frequency determines how important a word is to a document by giving more weight to words that are frequent in that document but rare across the whole collection of documents. You are trying to classify an email as a phishing attack by having your model learn based on the presence of multi-word phrases in the email text. You are not trying to determine the importance of a word or phrase in the email text.

Option C is incorrect. The Bag-of-Words natural language processing algorithm creates tokens of the input document text and outputs a statistical depiction of the text. The statistical depiction, such as a histogram, shows the count of each word in the document. You are trying to classify an email as a phishing attack by having your model learn based on the presence of multi-word phrases in the email text, not individual words.

Option D is correct. The N-Gram natural language processing algorithm is used to find multi-word phrases in the text, in this case, an email. This suits your phishing detection task since you are trying to classify an email as a phishing attack by having your model learn based on the presence of multi-word phrases.
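Word n-gram extraction can be sketched with the standard library. The phrase list and email are hypothetical, and the simple lowercase-and-split tokenizer is an assumption; a trained model would learn from n-gram features rather than match a hard-coded list, but the sketch shows why phrases like “click here now” only become visible once the text is split into 3-word n-grams.

```python
import re


def word_ngrams(text, n):
    """Return the contiguous n-word sequences (word n-grams) in the text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


# Hypothetical phrase list and email.
SUSPICIOUS_PHRASES = {"you're a winner", "click here now"}

email = "Congratulations, you're a winner! Click here now to claim your prize."
trigrams = word_ngrams(email, 3)
flagged = SUSPICIOUS_PHRASES.intersection(trigrams)
```

A bag-of-words representation of the same email would contain “click”, “here”, and “now” as separate counts, losing the phrase that signals phishing.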

Reference: Please see the article titled Introduction to Natural Language Processing for Text, and the article titled Document Classification Part 2: Text Processing (N-Gram Model & TF-IDF Model)

Domain : Data Engineering

Q14 : You work as a machine learning specialist for a media sharing service. Healthcare professionals will use the media sharing service to share images of x-rays, MRIs, and other medical imagery. The accuracy of labelling these images is of primary importance, since the labels will be used in auto-diagnostic software. As your team builds the data repository to be used by your machine learning algorithms, you need to use human labellers. You have decided to use Amazon SageMaker Ground Truth for this purpose. Since accuracy is of prime importance, you have decided to use the annotation consolidation feature of Ground Truth to ensure proper labelling of the medical images.

Which of the Ground Truth annotation consolidation functions should you use to ensure the accuracy of your labelling tasks?

A. Bounding box

B. Semantic segmentation

C. Named entity

D. Output manifest

E. Mechanical turk

Correct Answers: A and B

Explanation

Option A is correct. The bounding box consolidation function finds the most similar bounding boxes from workers and averages them, thus using the power of multiple workers to annotate your images more accurately.

Option B is correct. The semantic segmentation consolidation function fuses the pixel annotations of multiple workers and applies a smoothing function to the image, thus using the power of multiple workers to annotate your images more accurately.

Option C is incorrect. The named entity feature is used with text annotation work, not image annotation.

Option D is incorrect. The Ground Truth output manifest allows the output of a labelling job to be used as the input to a machine learning model. This feature will not help ensure the accuracy of worker annotations.

Option E is incorrect. The Ground Truth Mechanical Turk feature gives you access to a large pool of labelling workers. While increasing the number of workers at your disposal, this feature will not help ensure the accuracy of worker annotations.
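The consolidation idea behind option A can be illustrated with a simplified sketch: keep each worker’s box only if it overlaps at least one other worker’s box (measured by intersection over union, IoU), then average the surviving coordinates. This is not Ground Truth’s actual algorithm; the IoU threshold of 0.5, the function names, and the annotations are assumptions for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_w * inter_h
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - intersection)
    return intersection / union if union > 0 else 0.0


def consolidate_boxes(boxes, min_iou=0.5):
    """Average the boxes that agree with at least one other worker's box; drop the rest."""
    agreeing = [
        box for i, box in enumerate(boxes)
        if any(iou(box, other) >= min_iou for j, other in enumerate(boxes) if j != i)
    ]
    if not agreeing:
        return None  # no consensus: the image may need another round of labelling
    return tuple(sum(coords) / len(agreeing) for coords in zip(*agreeing))


# Hypothetical annotations from four workers for one object; the last box is off target.
worker_boxes = [(10, 10, 50, 50), (12, 8, 52, 48), (11, 11, 49, 51), (200, 200, 240, 240)]
consensus = consolidate_boxes(worker_boxes)
```

The outlying annotation shares no area with the others, so it is excluded and the consensus box is the average of the three agreeing workers.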

Reference: Please see the Amazon SageMaker developer guide titled Annotation Consolidation, the Amazon Machine Learning blog post titled Use the wisdom of crowds with Amazon SageMaker Ground Truth to annotate data more accurately, and the GitHub repository titled Amazon SageMaker Examples Introduction to Ground Truth Labeling Jobs.

Domain : Exploratory Data Analysis

Q15 : You work as a machine learning specialist for a city government’s shared bike program. You need to visualize the hourly bike share location predictions produced by the inference endpoint you built with the SageMaker built-in K-Means algorithm. Your inference endpoint takes IoT data from your shared bikes as they are used throughout the city. You also want to enrich your shared bike data with external data sources such as current weather and road conditions.

Which set of Amazon services would you use to create your visualization with the least amount of effort?

A. IoT Core -> IoT Analytics ->SageMaker -> QuickSight

B. IoT Core -> Kinesis Firehose -> SageMaker -> QuickSight

C. IoT Core -> Lambda -> SageMaker -> QuickSight

D. IoT Core -> IoT Greengrass -> QuickSight

Correct Answer: A

Explanation

Option A is correct. IoT Core collects data from each shared bike, and IoT Analytics retrieves the messages as the bikes stream their data. IoT Analytics also enriches the streaming data with your external data sources and sends it to your K-Means inference endpoint. QuickSight is then used to create your visualization. This approach requires the least effort mainly because of the data enrichment feature of IoT Analytics.
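To illustrate what "enrichment" means in Option A, here is a minimal sketch of a Lambda function used as an IoT Analytics pipeline activity. It assumes the activity hands the function a batch of messages as a list of dictionaries and expects a list back. The `zone` and `bike_id` fields and the `EXTERNAL_CONDITIONS` lookup are hypothetical stand-ins for your real message schema and weather/road data source.

```python
from typing import Any, Dict, List

# Hypothetical stand-in for an external weather/road-conditions source.
# In a real pipeline this would be an API call or a reference dataset lookup.
EXTERNAL_CONDITIONS: Dict[str, Dict[str, str]] = {
    "downtown": {"weather": "rain", "road": "wet"},
    "riverside": {"weather": "clear", "road": "dry"},
}


def lambda_handler(event: List[Dict[str, Any]], context: Any) -> List[Dict[str, Any]]:
    """Return each bike message merged with the current conditions for its zone.

    Messages from unknown zones pass through unchanged.
    """
    enriched = []
    for message in event:
        conditions = EXTERNAL_CONDITIONS.get(message.get("zone"), {})
        enriched.append({**message, **conditions})
    return enriched
```

Keeping the enrichment inside the pipeline means the downstream K-Means endpoint and QuickSight see already-enriched records, which is the effort saving the explanation refers to.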

Option B is incorrect. With this option, you would have to create a Lambda function to gather the data enrichment information (weather, road conditions) and enrich the data streams in your own code.

Option C is incorrect. With this option, you would also have to add code to your Lambda function to gather the data enrichment information (weather, road conditions) and enrich the data streams yourself.

Option D is incorrect. IoT Greengrass is a service that you use to run machine learning inference locally on connected devices. This approach would not integrate easily with your QuickSight visualization.

Reference: Please see the AWS IoT Analytics overview, the Amazon SageMaker developer guide titled K-Means Algorithm, the AWS Big Data blog titled Build a Visualization and Monitoring Dashboard for IoT Data with Amazon Kinesis Analytics and Amazon QuickSight, the AWS IoT Analytics User Guide titled What Is AWS IoT Analytics?, and the AWS IoT Greengrass FAQs.

Domain : Data Engineering

Q16 : You work for a logistics company that specializes in the storage, movement, and control of massive numbers of packages. You are on the machine learning team assigned to build a model that assists in controlling your company’s package logistics. Specifically, your model needs to predict the routes your package movers should take for optimal delivery and resource usage. The model requires various transformations to be performed on the data. Once your model is in production, you want to get inferences on entire datasets, and you won’t need a persistent endpoint for applications to call to get inferences.

Which type of production deployment would you use to get predictions from your model in the most expeditious manner?

A. SageMaker Hosting Services

B. SageMaker Batch Transform

C. SageMaker Containers

D. SageMaker Elastic Inference

Correct Answer: B

Explanation

Option A is incorrect. SageMaker Hosting Services is used for applications to send requests to an HTTPS endpoint to get inferences. This type of deployment is used when you need a persistent endpoint for applications to call to get inferences.

Option B is correct. SageMaker Batch Transform is used to get inferences for an entire dataset, and you don’t need a persistent endpoint for applications to call to get inferences.
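As a minimal sketch of Option B, the function below builds the request for SageMaker's CreateTransformJob API. The job name, model name, S3 URIs, and instance type are hypothetical placeholders, and it assumes the model already exists in SageMaker and the input is CSV with one record per line.

```python
from typing import Any, Dict


def build_transform_job_request(job_name: str, model_name: str,
                                input_s3_uri: str, output_s3_uri: str) -> Dict[str, Any]:
    """Build keyword arguments for a SageMaker CreateTransformJob call.

    No endpoint is involved: the job reads the whole dataset from S3, writes
    predictions back to S3, and releases its instances when it finishes.
    """
    return {
        "TransformJobName": job_name,
        "ModelName": model_name,
        "TransformInput": {
            "DataSource": {
                "S3DataSource": {"S3DataType": "S3Prefix", "S3Uri": input_s3_uri}
            },
            "ContentType": "text/csv",
            "SplitType": "Line",  # split input files into records at line boundaries
        },
        "TransformOutput": {"S3OutputPath": output_s3_uri},
        "TransformResources": {"InstanceType": "ml.m5.xlarge", "InstanceCount": 1},
    }
```

With credentials configured, the request would be submitted with `boto3.client("sagemaker").create_transform_job(**request)`.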

Option C is incorrect. SageMaker Containers is a library you can use to create your own Docker containers to deploy your models. This would not be the most expeditious option.

Option D is incorrect. SageMaker Elastic Inference is used to accelerate deep learning inference workloads. This service alone would not give you the batch transform capabilities you need.

Reference: Please see the Amazon SageMaker developer guide titled Deploy a Model on Amazon SageMaker Hosting Services, the Amazon SageMaker developer guide titled Get Inferences for an Entire Dataset with Batch Transform, the Amazon Elastic Inference developer guide titled What Is Amazon Elastic Inference?, and the Amazon SageMaker developer guide titled Amazon SageMaker Containers: a Library to Create Docker Containers.

Domain : Modeling

Q17 : You work as a machine learning specialist for an auto manufacturer that produces several car models in several product lines. Example models include an LX model, an EX model, a Sport model, etc. These models have many similarities. But of course, they also have defining differences. Each model has its own parts list entries in your company’s parts database. When ordering commodity parts for these car models from auto parts manufacturers, you want to produce the most efficient orders for each parts manufacturer by combining orders for similar parts lists. This will save your company money. You have decided to use the AWS Glue FindMatches Machine Learning Transform to find your matching parts lists.

You have created your data source file as a CSV, and you have also created the labeling file used to train your FindMatches transform. When you run your AWS Glue transform job, it fails. Which of the following could be the root of the problem?

A. The labeling file is in the CSV format.

B. The labeling file has labeling_set_id and label as its first two columns, with the remaining columns matching the schema of the parts list data to be processed.

C. Records in the labeling file that don’t have any matches have unique labels.

D. The labeling file is not encoded in UTF-8 without BOM (byte order mark).

Correct Answer: D

Explanation

Option A is incorrect. When using the AWS Glue FindMatches ML Transform, the labeling file must be in CSV format.

Option B is incorrect. When using the AWS Glue FindMatches ML Transform, the first two columns of the labeling file are required to be labeling_set_id and label. Also, the remaining columns must match the schema of the data to be processed.

Option C is incorrect. When using the AWS Glue FindMatches ML Transform, if a record doesn’t have a match, it is assigned a unique label.

Option D is correct. When using the AWS Glue FindMatches ML Transform, the labeling file must be encoded as UTF-8 without BOM.
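The encoding requirement in Option D is easy to check before uploading. Below is a minimal sketch, assuming the labeling file carries a header row. The `part_no` and `description` columns are hypothetical parts-list fields. It writes the file as plain UTF-8 (Python's `"utf-8"` codec never emits a BOM) and detects or strips a BOM that another tool may have added.

```python
import csv
import io
from typing import Dict, List

BOM = b"\xef\xbb\xbf"  # UTF-8 byte order mark


def write_labeling_file(rows: List[Dict[str, str]], data_columns: List[str]) -> bytes:
    """Serialize labeling rows as CSV bytes in UTF-8 without a BOM.

    labeling_set_id and label come first; the remaining columns must match
    the schema of the data being matched.
    """
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["labeling_set_id", "label", *data_columns])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue().encode("utf-8")  # plain "utf-8" does not prepend a BOM


def has_bom(data: bytes) -> bool:
    return data.startswith(BOM)


def strip_bom(data: bytes) -> bytes:
    """Remove a leading BOM, e.g. from a file saved with the utf-8-sig codec."""
    return data[len(BOM):] if has_bom(data) else data
```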

Reference: Please see the AWS Glue developer guide titled Machine Learning Transforms in AWS Glue.

Domain : Modeling

Q18 : You work for an Internet of Things (IoT) component manufacturer that builds servos, engines, sensors, etc. The IoT devices transmit usage and environment information back to AWS IoT Core via the MQTT protocol. You want to use a machine learning model to show how and where the use of your products is clustered in various regions around the world. This information will help your data scientists build KPI dashboards to improve your component engineering quality and performance. You have created and trained a model based on the XGBoost algorithm and deployed it to Amazon SageMaker Hosting Services. Your model receives inference requests from a Lambda function that is triggered when an IoT Core MQTT message arrives via your Kinesis Data Streams instance.

What transform steps need to be done for each inference request? Also, which steps are handled by your code versus by the inference algorithm?

A. Inference request serialization (handled by the algorithm)

B. Inference request serialization (handled by your Lambda code)

C. Inference request deserialization (handled by your Lambda code)

D. Inference request deserialization (handled by the algorithm)

E. Inference request post serialization (handled by the algorithm)

Correct Answers: B and D

Explanation

Option A is incorrect. Your Lambda code, not the algorithm, must serialize the inference request. The algorithm receives the request already in serialized form.

Option B is correct. The inference request serialization must be completed by your Lambda code.

Option C is incorrect. The algorithm, not your Lambda code, deserializes the inference request when it receives it. Your Lambda code is responsible for serializing the request.

Option D is correct. The algorithm deserializes the inference request when it receives it.
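The split of responsibilities can be sketched from the Lambda side. This minimal example assumes each Kinesis record carries a JSON IoT message with a hypothetical `features` list, and that the endpoint accepts `text/csv` input, which the built-in XGBoost algorithm supports. The Lambda code serializes. Deserializing the CSV body is left to the algorithm inside the endpoint.

```python
import base64
import json
from typing import Any, Dict, List, Sequence


def serialize_csv(features: Sequence[float]) -> str:
    """Serialize one observation as a single CSV line (no header, no label)."""
    return ",".join(str(x) for x in features)


def build_payloads(event: Dict[str, Any]) -> List[str]:
    """Turn a Kinesis-triggered Lambda event into text/csv request bodies.

    Kinesis delivers each record's data base64-encoded; "features" is a
    hypothetical field name in the IoT message.
    """
    payloads = []
    for record in event["Records"]:
        message = json.loads(base64.b64decode(record["kinesis"]["data"]))
        payloads.append(serialize_csv(message["features"]))
    return payloads


def parse_csv_response(body: bytes) -> List[float]:
    """Parse a text/csv prediction response (comma- or newline-separated)."""
    text = body.decode("utf-8").strip()
    return [float(v) for v in text.replace("\n", ",").split(",") if v]
```

Each payload would then be sent with `boto3.client("sagemaker-runtime").invoke_endpoint(EndpointName=..., ContentType="text/csv", Body=payload)`.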

Option E is incorrect. There is no inference request post serialization step in the SageMaker inference request/response process.

Reference: Please see the Amazon SageMaker developer guide titled Common Data Formats for Inference, the AWS IoT Core overview page, and the AWS IoT developer guide titled Creating an AWS Lambda Rule.

Domain : Modeling

Q19 : You are a Machine Learning Specialist on a team that is designing a system to help improve sales for your auto parts division. You have clickstream data gathered from your users’ activity on your product website. Your team has been tasked with using this large volume of clickstream data, which captures user behavior and product preferences, to build a recommendation engine similar to the Amazon.com feature that recommends products with the tagline “users who bought this item also considered these items.” Specifically, your team’s task is to predict which products a given user may like based on the similarity between that user and other users.

How should you and your team architect this solution?

A. Create a recommendation engine based on a neural combinative filtering model using TensorFlow and run it on SageMaker.

B. Create a recommendation engine based on model-based filtering using TensorFlow and run it on SageMaker.

C. Create a recommendation engine based on a neural collaborative filtering model using TensorFlow and run it on SageMaker.

D. Create a recommendation engine based on content-based filtering using TensorFlow and run it on SageMaker.

Correct Answer: C

Explanation

Option A is incorrect. There is no neural combinative filtering method used in recommendation engine models.

Option B is incorrect. The term model-based filtering is too generic. We are using a model to make our recommendations, but which type of model should we use?

Option C is correct. Collaborative filtering is the approach behind Amazon.com-style “users who bought this item also considered” recommendations, and a neural collaborative filtering model applies it by learning user and item representations from user actions. This method is well suited to environments where you have large amounts of user actions to analyze.

Option D is incorrect. Content-based filtering relies on similarities between features of items, whereas collaborative filtering relies on the preferences of other users and how they respond to similar items. Your task is based on the similarity between users, which calls for collaborative filtering.
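The user-to-user similarity idea behind collaborative filtering can be shown in a few lines. This is a classic memory-based, user-based collaborative filtering sketch with cosine similarity, not the neural collaborative filtering model from Option C (which learns embeddings in TensorFlow). The users, part names, and implicit click scores are hypothetical.

```python
from math import sqrt
from typing import Dict, List

Ratings = Dict[str, Dict[str, float]]  # user -> {item: implicit score, e.g. clicks}


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse user vectors."""
    dot = sum(a[i] * b[i] for i in set(a) & set(b))
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(user: str, ratings: Ratings, top_n: int = 2) -> List[str]:
    """Score items the user hasn't seen by similarity-weighted scores of similar users."""
    scores: Dict[str, float] = {}
    for other, items in ratings.items():
        if other == user:
            continue
        sim = cosine(ratings[user], items)
        if sim <= 0:
            continue  # ignore users with no overlap in behavior
        for item, value in items.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + sim * value
    return sorted(scores, key=lambda i: (-scores[i], i))[:top_n]
```

A user who clicked on brake pads and rotors is recommended calipers because a similar user also engaged with them, while an unrelated user's items contribute nothing.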

References: Please see the article titled Building a Recommendation Engine with Spark ML on Amazon EMR Using Zeppelin (https://noise.getoto.net/2015/11/14/building-a-recommendation-engine-with-spark-ml-on-amazon-emr-using-zeppelin-2/), the Wikipedia article titled Collaborative filtering (https://en.wikipedia.org/wiki/Collaborative_filtering), and the AWS Machine Learning blog titled Building a customized recommender system in Amazon SageMaker (https://aws.amazon.com/blogs/machine-learning/building-a-customized-recommender-system-in-amazon-sagemaker/)

Domain : Modeling

Q20 : You are part of a machine learning team in a financial services company that is building a SageMaker model to forecast stock price movements from time series data. You and your team have finished training the model, and you are now ready to load test your endpoint to find the best parameters for configuring auto scaling for your model variant. How can you most efficiently review the latency, memory utilization, and CPU utilization during the load test?

A. Stream the SageMaker model variant CloudWatch logs to ElasticSearch. Then visualize and query the log data in a Kibana dashboard.

B. Create custom CloudWatch logs containing the metrics you wish to monitor, then stream the SageMaker model variant logs to ElasticSearch and visualize/query the log data in a Kibana dashboard.

C. Create a CloudWatch dashboard to show a view of the latency, memory utilization, and CPU utilization metrics of the SageMaker model variant.

D. Query the SageMaker model variant logs on S3 using Athena and leverage QuickSight to visualize the logs.

Correct Answer: C

Explanation

Option A is incorrect. Using ElasticSearch and Kibana unnecessarily complicates the solution. A CloudWatch dashboard can show all of the metric data you need to evaluate your model variant.

Option B is incorrect. You don’t need to create custom CloudWatch logs with the metrics you wish to monitor. SageMaker publishes all of the metrics you wish to view (latency, memory utilization, and CPU utilization) to CloudWatch by default. Also, using ElasticSearch and Kibana unnecessarily complicates the solution.

Option C is correct. The simplest approach is to use a CloudWatch dashboard: SageMaker publishes all of the metrics you wish to view (latency, memory utilization, and CPU utilization) to CloudWatch by default, and a dashboard displays them together in one view.
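A minimal sketch of Option C: the function below generates a CloudWatch dashboard body with one widget per metric. It assumes the standard SageMaker endpoint metrics: `ModelLatency` in the `AWS/SageMaker` namespace and `CPUUtilization`/`MemoryUtilization` in `/aws/sagemaker/Endpoints`, all keyed by `EndpointName` and `VariantName`. The endpoint name, variant name, and region you pass in are your own.

```python
import json
from typing import Any, Dict


def dashboard_body(endpoint: str, variant: str, region: str) -> str:
    """Return a dashboard body JSON string with latency, CPU, and memory widgets."""
    dims = ["EndpointName", endpoint, "VariantName", variant]

    def widget(title: str, namespace: str, metric: str, x: int) -> Dict[str, Any]:
        # Three 8-unit-wide widgets fill one 24-unit dashboard row.
        return {
            "type": "metric",
            "x": x, "y": 0, "width": 8, "height": 6,
            "properties": {
                "title": title,
                "metrics": [[namespace, metric, *dims]],
                "stat": "Average",
                "period": 60,
                "region": region,
            },
        }

    widgets = [
        widget("Model latency (microseconds)", "AWS/SageMaker", "ModelLatency", 0),
        widget("CPU utilization (%)", "/aws/sagemaker/Endpoints", "CPUUtilization", 8),
        widget("Memory utilization (%)", "/aws/sagemaker/Endpoints", "MemoryUtilization", 16),
    ]
    return json.dumps({"widgets": widgets})
```

The body would be passed to `boto3.client("cloudwatch").put_dashboard(DashboardName=..., DashboardBody=body)` before starting the load test.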

Option D is incorrect. Using Athena and QuickSight unnecessarily complicates the solution. A CloudWatch dashboard can show all of the metric data you need to evaluate your model variant.

References: Please see the Amazon SageMaker developer guide titled Monitor Amazon SageMaker with Amazon CloudWatch (https://docs.aws.amazon.com/sagemaker/latest/dg/monitoring-cloudwatch.html), the Amazon CloudWatch user guide titled Using Amazon CloudWatch Dashboards (https://docs.aws.amazon.com/AmazonCloudWatch/latest/monitoring/CloudWatch_Dashboards.html), and the Amazon CloudWatch user guide titled Creating a CloudWatch Dashboard (https://docs.aws.amazon.com/AmazonCloudWatch/latest/monitoring/create_dashboard.html)

Domain : ML Implementation and Operations

Q21 : You work as a machine learning specialist for a financial services firm. Your firm contracts with market data generation services that deliver 5 TB of market activity record data every minute. To prepare this data for your machine learning models, your team queries the data using Athena. However, the queries perform poorly because they scan such a large volume of data. You need to find a more performant option. Which file format for your market data records on S3 will give you the best performance?

A. TSV files

B. Compressed LZO files

C. Parquet files

D. CSV files

Correct Answer: C

Explanation

Option A is incorrect. The TSV file format is row-based, with tabs separating the attributes. When Athena reads these files, it must read every full row, even when your query needs only the attribute in one column. Columnar file formats are much more efficient for queries of large datasets because Athena reads only the columns a query references. TSV files also lack the per-column statistics that let Athena skip blocks of data that don't match a query's filters.

Option B is incorrect. LZO compression reduces the amount of data Athena reads from S3, but the compressed files still store records row by row, so they do not support columnar processing. Therefore they will perform poorly compared to columnar file formats like Parquet.

Option C is correct. The Parquet file format is columnar, so Athena reads only the columns a query needs, and its per-column statistics let Athena skip data that doesn't match your filters. The other columnar file format supported by Athena is ORC. These columnar formats outperform row-based text formats such as CSV and TSV when Athena works with very large datasets.
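A toy model makes the row-versus-column difference concrete. This sketch only counts raw bytes a single-column query would touch in each layout. It ignores compression, encoding, and file metadata, so it is an illustration of the principle rather than a model of real Parquet files. The market records are made-up examples.

```python
import csv
import io
from typing import Dict, List


def bytes_scanned_row_layout(rows: List[Dict[str, str]]) -> int:
    """Row layout (CSV/TSV): every full row is read, whatever columns the query needs."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]), lineterminator="\n")
    writer.writerows(rows)
    return len(buffer.getvalue().encode("utf-8"))


def bytes_scanned_columnar(rows: List[Dict[str, str]], column: str) -> int:
    """Columnar layout: only the values of the referenced column are read."""
    return sum(len(row[column].encode("utf-8")) for row in rows)


records = [
    {"symbol": "AMZN", "price": "185.2", "venue": "NASDAQ"},
    {"symbol": "MSFT", "price": "420.1", "venue": "NASDAQ"},
]
```

For a query such as `SELECT avg(price)`, the row layout touches all 36 bytes of these two records, while the columnar layout touches only the 10 bytes of the `price` values. At 5 TB per minute, that ratio dominates query time and cost.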

Option D is incorrect. The CSV file format is row-based, with commas separating the attributes. When Athena reads these files, it must read every full row, even when your query needs only the attribute in one column (columnar processing avoids this). Columnar file formats are much more efficient for queries of large datasets. CSV files also lack the per-column statistics that let Athena skip blocks of data that don't match a query's filters.

References: Please see the Amazon Athena FAQs, particularly the question “How do I improve the performance of my query?” (https://aws.amazon.com/athena/faqs/), the AWS Big Data blog titled Top 10 Performance Tuning Tips for Amazon Athena (https://aws.amazon.com/blogs/big-data/top-10-performance-tuning-tips-for-amazon-athena/), and the Amazon Athena user guide titled Compression Formats (https://docs.aws.amazon.com/athena/latest/ug/compression-formats.html)

Domain : Modeling

Q22 : You work for a company that performs seismic research for client firms that drill for petroleum. As a machine learning specialist, you have built a series of models that classify seismic waves to determine the seismic profile of a proposed drilling site. You need to select the best model to use in production. Which metric should you use to compare and evaluate your machine learning classification models against each other?

A. Area Under the ROC Curve (AUC)

B. Mean Square Error (MSE)

C. Mean Absolute Error (MAE)

D. Recall

Correct Answer: A

Explanation

Option A is correct. The area under the Receiver Operating Characteristic (ROC) curve is the most commonly used metric to compare classification models.

Option B is incorrect. The Mean Square Error (MSE) is commonly used to measure regression error. It finds the average squared error between the predicted and actual values. It is not used to compare classification models.

Option C is incorrect. The Mean Absolute Error (MAE) is also commonly used to measure regression error. It finds the average absolute distance between the predicted and target values. It is not used to compare classification models.

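Option D is incorrect. Recall is the proportion of actual positive cases that a model correctly identifies: true positives divided by the sum of true positives and false negatives. A model can achieve high recall simply by predicting the positive class more often, so this metric alone will not allow you to make a complete assessment and comparison of your models.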
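The contrast between AUC and recall can be checked numerically. This sketch assumes a binary problem (label 1 for the seismic class of interest) and computes AUC as the probability that a randomly chosen positive scores higher than a randomly chosen negative, which equals the area under the ROC curve. The labels and scores are hypothetical.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 1/2."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def recall(labels: Sequence[int], predictions: Sequence[int]) -> float:
    """TP / (TP + FN): the share of actual positives the model caught."""
    tp = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 1)
    fn = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 0)
    return tp / (tp + fn)
```

For labels `[1, 1, 0, 0]` and scores `[0.9, 0.4, 0.6, 0.1]`, AUC is 0.75 across all thresholds. A degenerate model that predicts the positive class for every sample reaches a perfect recall of 1.0 while being useless, which is why recall alone is not enough to compare models.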

References: Please see the Towards Data Science article titled Metrics For Evaluating Machine Learning Classification Models (https://towardsdatascience.com/metrics-for-evaluating-machine-learning-classification-models-python-example-59b905e079a5), the Towards Data Science article titled How to Evaluate a Classification Machine Learning Model (https://towardsdatascience.com/how-to-evaluate-a-classification-machine-learning-model-d81901d491b1), the Machine Learning Mastery article titled Assessing and Comparing Classifier Performance with ROC Curves (https://machinelearningmastery.com/assessing-comparing-classifier-performance-roc-curves-2/), the Towards Data Science article titled 20 Popular Machine Learning Metrics. Part 1: Classification & Regression Evaluation Metrics (https://towardsdatascience.com/20-popular-machine-learning-metrics-part-1-classification-regression-evaluation-metrics-1ca3e282a2ce), the Data School article titled Simple guide to confusion matrix terminology (https://www.dataschool.io/simple-guide-to-confusion-matrix-terminology/), and the Medium article titled Precision vs. Recall (https://medium.com/@shrutisaxena0617/precision-vs-recall-386cf9f89488)

Domain : Data Engineering

Q23 : You work as a machine learning specialist for the air traffic control agency of the federal government. Your machine learning team is responsible for producing the models that process all air traffic in-flight data to produce recommended flight paths for the aircraft currently aloft. The flight paths need to consider all of the prevailing conditions (weather, other flights in the path, etc.) that may affect an aircraft’s flight path.

The data that your models need to process is massive in scale and requires large-scale data processing. How should you build the data transformation and feature engineering processing jobs so that you can process all of the flight data in real-time?

A. Run Glue ETL distributed data processing jobs to perform the transformation and feature engineering on the flight data in real-time and save the data to S3 for your model training.

B. Use Kinesis Data Firehose to perform the transformation and feature engineering on the flight data in real-time and save the data to S3 for your model training.

C. Run Apache Spark Streaming data processing jobs to perform the transformation and feature engineering on the flight data in real-time and save the data to S3 for your model training.

D. Use a Kinesis Data Analytics SQL application to perform the transformation and feature engineering on the flight data in real-time and save the data to S3 for your model training.

Correct Answer: C

Explanation

Option A is incorrect. Glue ETL jobs are designed for batch processing, so they will not work in a real-time scenario.

Option B is incorrect. Kinesis Data Firehose is a near real-time service: it buffers incoming data according to its buffer size and buffer interval settings before delivering it. Therefore it will not work in a real-time scenario.

Option C is correct. Apache Spark is an analytics engine for large-scale data processing that runs distributed data processing jobs, and Spark Streaming extends it to process live data streams. You can apply data transformations and extract features (feature engineering) using the Spark framework.

Option D is incorrect. Kinesis Data Analytics running a SQL application can’t write directly to S3. Also, Kinesis Data Analytics cannot match the large-scale data processing capacity of Apache Spark jobs.

References: Please see the Amazon SageMaker developer guide titled Data Processing with Apache Spark (https://docs.aws.amazon.com/sagemaker/latest/dg/use-spark-processing-container.html), the Amazon SageMaker Examples GitHub repository titled Distributed Data Processing using Apache Spark and SageMaker Processing (https://github.com/aws/amazon-sagemaker-examples/blob/master/sagemaker_processing/spark_distributed_data_processing/sagemaker-spark-processing.ipynb), the Amazon Kinesis Data Firehose developer guide titled Configure Settings (https://docs.aws.amazon.com/firehose/latest/dev/create-configure.html), and the Amazon Kinesis Data Analytics FAQs (https://aws.amazon.com/kinesis/data-analytics/faqs/)

Domain : Modeling

Q24 : You work as a machine learning specialist for an alternative transportation ride-share company. Your company has scooters, electric longboards, and other electric personal transportation devices in several major cities across the US. Your machine learning team has been asked to produce a machine learning model that classifies device preference by trip duration for each of the available personal transportation devices you offer in each city. You have created a model based on the SageMaker built-in K-Means algorithm. You are now using hyperparameter tuning to get the best-performing model for your problem. Which evaluation metrics and corresponding optimization direction should you choose for your automatic model tuning (a.k.a. hyperparameter tuning)?

A. msd, maximize

B. mse, minimize

C. ssd, minimize

D. f1, maximize

E. msd, minimize

Correct Answers: C and E

Explanation

Option A is incorrect. K-Means uses the msd (Mean Squared Distances) metric for model validation. However, you will want to minimize this metric.

Option B is incorrect. K-Means does not use the mse (Mean Squared Error) metric for model validation.

Option C is correct. K-Means uses the ssd (Sum of the Squared Distances) metric for model validation, and you will want to minimize this metric.

Option D is incorrect. K-Means does not use the f1 (harmonic mean of precision and recall) metric for model validation.

Option E is correct. K-Means uses the msd (Mean Squared Distances) metric for model validation, and you will want to minimize this metric.
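Both correct metrics measure how tightly points sit around their cluster centers, which is why lower is better. The sketch below computes them for hypothetical trip-duration points and centroids, and shows an objective in the shape of the `HyperParameterTuningJobObjective` structure. `test:msd` is the name the SageMaker K-Means tuning guide lists, and `test:ssd` would work the same way.

```python
from typing import List, Sequence, Tuple

Point = Sequence[float]


def squared_distance(a: Point, b: Point) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def ssd_and_msd(points: List[Point], centroids: List[Point]) -> Tuple[float, float]:
    """Assign each point to its nearest centroid; return (sum, mean) of squared distances."""
    dists = [min(squared_distance(p, c) for c in centroids) for p in points]
    ssd = sum(dists)
    return ssd, ssd / len(points)


# Objective for a tuning job on built-in K-Means: tighter clusters score lower.
TUNING_OBJECTIVE = {"Type": "Minimize", "MetricName": "test:msd"}
```

With points `(0, 0), (0, 2), (10, 10), (10, 12)` and centroids `(0, 1), (10, 11)`, every point is 1 squared unit from its center, giving an ssd of 4 and an msd of 1.0.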

References: Please see the Amazon SageMaker developer guide titled Define Metrics (https://docs.aws.amazon.com/sagemaker/latest/dg/automatic-model-tuning-define-metrics.html) and the Amazon SageMaker developer guide titled Tune a K-Means Model (https://docs.aws.amazon.com/sagemaker/latest/dg/k-means-tuning.html)

Domain : Data Engineering

Q25 : You work as a machine learning specialist for the infectious disease testing department of a national government agency. Your machine learning team is responsible for creating a machine learning model that analyzes the daily test datasets for your country and produces daily predictions of trends of disease contraction and death rates. These projections are used throughout national and international news agencies to report on the daily projections of infectious disease progression. Since your model works on huge datasets on a daily basis, which of the following statements gives an accurate description of your inference processing?

A. You have set up a persistent endpoint to get predictions from your model using SageMaker batch transform.

B. You have set up a persistent endpoint to get predictions from your model using SageMaker hosting services.

C. You don’t need a persistent endpoint. You use SageMaker batch transform to get inferences from your large datasets.

D. You don’t need a persistent endpoint. You use SageMaker hosting services to get inferences from your large datasets.

Correct Answer: C

Explanation

Option A is incorrect. SageMaker batch transform does not use a persistent endpoint. You use SageMaker batch transform to get inferences from large datasets. Also, your process runs once per day, so a persistent endpoint does not make sense.

Option B is incorrect. You are processing large datasets on a daily basis. Therefore you should use SageMaker batch transform, not SageMaker hosting services. SageMaker hosting services are used for real-time inference requests, not daily batch requests.

Option C is correct. Since you only need inferences once per day from a large dataset, you don’t need a persistent endpoint. Also, SageMaker batch transform is the best deployment option when getting inferences from an entire dataset.

Option D is incorrect. SageMaker hosting services need a persistent endpoint. Also, since you are processing large datasets on a daily basis, you should use SageMaker batch transform, not SageMaker hosting services.

References: Please see the Amazon SageMaker developer guide titled Use Batch Transform (https://docs.aws.amazon.com/sagemaker/latest/dg/batch-transform.html) and the Amazon SageMaker developer guide titled Deploy Models for Inference (https://docs.aws.amazon.com/sagemaker/latest/dg/deploy-model.html)

Summary

Hope you enjoyed practicing for the exam with us. To learn and practice more, go through our detailed exam-ready mock tests on our official website. Spending more time on preparation may help you pass the actual exam on the first attempt. All the best, and keep learning! You can also check out our AWS hands-on labs and AWS Sandbox.
