Are you looking for free questions and answers to prepare for the Snowflake Snowpro Advanced Architect exam?

Here are our newly updated 30+ Free questions on the Snowflake Snowpro Advanced Architect exam which are very similar to the practice test as well as the real exam.

Why do we provide Snowflake Snowpro Advanced Architect exam questions for free?

Snowflake Snowpro Advanced Architect exams are designed to test and recognize your skills on Snowflake architecture and can design and optimize Snowflake solutions for their organizations

We are giving it for free to help you in passing the Snowflake Snowpro Advanced Architect exam just like your colleagues. It’s a free takeaway from the Whizlabs team for Snowflake certification job seekers during this year 2022.

Snowflake Snowpro Advanced Architect Exam Questions

You can find a lot of video courses on Snowflake Snowpro Advanced Architect exam to learn the exam objectives. And now, it’s the time to test your hard-earned Snowflake Snowpro Advanced Architect skills by studying the exam simulator questions on Snowflake Snowpro Advanced Architect exam.

Our Snowflake certified experts even curated these Snowpro simulator questions carefully which are based on the latest syllabus and very relevant to the real exam. This list of free questions on Snowflake Snowpro Advanced Architect exams can help you in up-skilling the knowledge gaps.

Once you have spent some time learning these Snowflake Snowpro Advanced Architect exam questions, then you can face the real exam with more confidence and ensure passing it in your first attempt itself.

Let’s get started!

Snowflake Snowpro Advanced Architect exam questions

Domain : Snowflake Architecture

Q1 : Which of the following statements are TRUE concerning a data consumer account in Snowflake?

A. All objects in the shared database are always read-only for the consumer of the share

B. A consumer of a share does not necessarily need an account with Snowflake to be able to consume data

C. A consumer account must be in the same region and on the same cloud provider as the data provider account

D. A consumer needs to pay for the compute and storage of the shared data

E. A database ‘share’ can be ‘imported’ by at most 10 consumer accounts

Correct Answers: A and B

Explanation

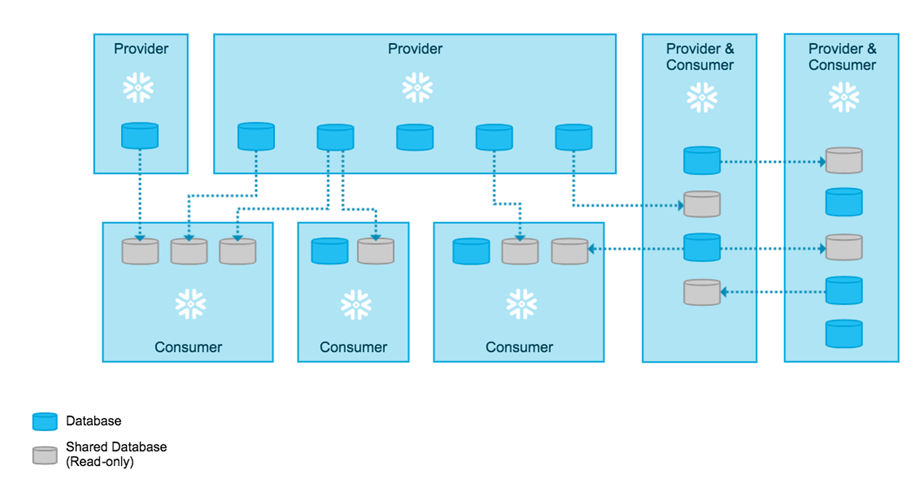

Option A is correct. A consumer can access a share only in read-only mode. The consumer cannot change information or modify/drop any object in the shared database.

Option B is correct. If a consumer of your share does not have a Snowflake account, Snowflake provides a functionality called ‘reader account’ which are special read-only Snowflake accounts created within the provider account for the sole purpose of accessing a share.

Option C is incorrect. Snowflake allows a consumer account to be located in any (supported) public cloud region/cloud provider. Snowflake data replication functionality is used to securely share data in such scenarios. Cross-region data sharing is supported for Snowflake accounts hosted on AWS, MS-Azure, or GCP.

Option D is incorrect. A data consumer doesn’t pay for the storage. The consumer only pays for the compute if the consumer is also a Snowflake account. If the consumer is not a snowflake account, the provider pays for the compute used (by the reader account).

Option E is incorrect. Snowflake does not have restrictions on the number of shares a provider can create or the number of shares that can be imported by a consumer.

Further Reading: Introduction to Secure Data Sharing – Snowflake Documentation

Domain : Snowflake Architecture

Q2 : Which of the following statements are FALSE concerning a data consumer account in Snowflake?

A. A single consumer account can contain objects from different providers

B. A consumer account can create clones of a shared database

C. A consumer account can perform time travel on a table within the shared database

D. A consumer account cannot forward (i.e. reshare) the shared databases and objects

Correct Answers: B and C

Explanation

Option A is incorrect as it is the true statement. A single consumer account can contain objects from different providers. Also, a provider account can share objects with different consumers. This architecture enables the creation of an interconnected network of data providers and consumers.

Option B is correct. Creating a clone of a shared database or any schemas/tables in the database is not supported.

Option C is correct. Time Travel on a shared database or any schemas/tables in the database is not supported.

Option D is incorrect as it is the true statement. A database created from an Inbound share cannot be shared further with other accounts. Remember that ‘You cannot share a share’.

Further Reading: Introduction to Secure Data Sharing – Snowflake Documentation

Domain : Account and Security

Q3 : Which of the following objects will NOT be cloned when cloning a schema?

A. A permanent Table

B. A temporary Table

C. A transient Table

D. An external table

E. Views

F. Internal (named) Stage

G. Stored Procedures

Correct Answers: B, D and F

Explanation

Cloning a schema clones all the contained objects in the schema, except the following object types:

- Temporary Tables

- External tables

- Internal (Snowflake) stages

Option B, D and F are appropriate choices.

Further Reading: Create <object>…CLONE – Snowflake Documentation

Domain : Snowflake Architecture

Q4 : Select appropriate database objects which can NOT be part of a direct share.

A. Tables

B. External Tables

C. External Stages

D. Secure views

E. Secure Materialized views

F. Stored Procedure

Correct Answers: C and F

Explanation

The following Snowflake database objects can be included in a share:

| Tables | Views | Other Objects |

| Table

External table |

Secure view

Secure Materialized view |

Secure UDF |

No other database object (e.g. regular views, stored procedures, tasks, streams, stages etc.) can be included in a share. Therefore Option C and Option F are appropriate answer choices.

Reference: Introduction to Secure Data Sharing

Domain : Account and Security

Q5 : To list all privileges and roles that a role named PYTHON_DEV_ROLE has, which of the following commands is the most appropriate one to use?

A. show grants

B. show grants in role PYTHON_DEV_ROLE

C. show grants of role PYTHON_DEV_ROLE

D. show grants to role PYTHON_DEV_ROLE

Correct Answer: D

Explanation

Option A is incorrect. This SQL command lists all roles granted to the current user executing this command.

Option B is incorrect. This SQL command is invalid and will result in the following compilation error.

SQL compilation error: syntax error line <m> at position <n> unexpected ‘in’.

Option C is incorrect. This SQL command lists all users and roles to which the role PYTHON_DEV_ROLE has been granted.

Option D is correct. This SQL command lists all privileges and roles granted to role PYTHON_DEV_ROLE .

➤ Exam Tip: Show grants SQL command is equivalent to Show grants to user <current_user>

Further Reading: Show Grants – Snowflake Documentation

Domain : Data Engineering

Q6 : Which of the following programming languages are supported in Snowflake to write UDFs?

A. SQL

B. Java

C. .NET

D. C++

E. JavaScript

F. Python

Correct Answers: A, B, E and F

Explanation

Options A, B, E and F are correct. UDFs allow developers to extend the Snowflake to perform the operations that are not natively available through built-in functions. UDFs (together with Stored Procedures) enable database-level programming in Snowflake.

At present, Snowflake supports four programming languages to write UDFs: SQL, Java, Javascript, and Python.

Further Reading: Supported Programming Languages for Creating UDFs

Domain : Data Engineering

Q7 : In which of the following languages, can you write a stored procedure in Snowflake?

A. SQL

B. Java

C. Scala

D. JavaScript

E. Python

F. Snowflake Scripting

Correct Answers: B, C, D, E and F

Explanation

Stored procedures in Snowflake allow a developer to write business logic at the database level by using procedural programming methods. Stored procedures can be written in one of the following languages:

- Java (using Snowpark)

- JavaScript

- Python (using Snowpark)

- Scala (using Snowpark)

- Snowflake Scripting

Options B, C, D, E and F are correct.

Further Reading: Overview of Stored Procedures

Domain : Snowflake Architecture

Q8 : Which REST API endpoints are supported by SnowPipe?

A. SnowPipe does not support REST

B. ‘insertFiles’ endpoint. Provides a POST method. Informs Snowflake about the files to be ingested into a table

C. ‘insertTuples’ endpoint. Provides a POST method. Informs Snowflake about the tuples which need to be ingested from a specific file

D. ‘insertReport’ endpoint. Provides GET method. Fetches a report of ingested files via ‘insertFiles’ whose contents were recently added to a table

E. ‘loadHistoryScan’ endpoint. Provides GET method. Fetches a report of ingested files whose contents have been added to a table between two points in time

Correct Answers: B, D and E

Explanation

Option A is incorrect. SnowPipe provides public REST endpoints to load data and retrieve load history reports.

Option B is correct. ‘insertFiles’ is a valid REST endpoint. This endpoint exposes a POST method. Calling this API informs Snowflake about the files to be ingested into a table. A successful response from this endpoint means that Snowflake has recorded the list of files to be added to the table.

Option C is incorrect. ‘insertTuples’ is not a valid REST endpoint.

Option D is correct. ‘insertReport’ is a valid REST endpoint. This endpoint exposes a GET method. Calling this API retrieves a report of the files whose content has been recently ingested into a table.

Option E is correct. ‘loadHistoryScan’ is a valid REST endpoint. This endpoint exposes a GET method. Calling this API retrieves a report of the files whose content has been recently ingested into a table. The difference between ‘insertReport’ and ‘loadHistoryScan’’ is that the latter retrieves the history between two points in time.

Further Reading: Snowflake REST API – Snowflake Documentation

Domain : Snowflake Architecture

Q9 : To access a database created from a share, the role must be granted which of the following privileges?

A. GRANT REFERENCES PRIVILEGE

B. GRANT IMPORTED PRIVILEGE

C. GRANT USAGE PRIVILEGE

D. GRANT SELECT PRIVILEGE

E. GRANT USAGE_REFERENCES PRIVILEGE

Correct Answer: B

Explanation

Option B is correct. On a database created from a share, one can only use GRANT IMPORTED PRIVILEGES and REVOKE IMPORTED PRIVILEGES to grant/revoke access. No other privileges can be granted on a shared object..

➤ Practical Info: By default, all inbound shares can be accessible by the ACCOUNTADMIN role. The ACCOUNTADMIN role will need to create a database from the share and grant access to the users using GRANT IMPORTED PRIVILEGES.

Further Reading: Granting Privileges on Shared Database

Domain : Account and Security

Q10 : When a database is cloned, which privileges of the (original) database are replicated in the cloned database?

A. ALL privileges associated with the original database and the child objects

B. Child object-level privileges only

C. Database level privileges only

D. Future privileges only

Correct Answer: B

Explanation

When cloning a database or a schema, the cloned object replicates all granted privileges on the child objects. Option B is correct.

For example, assume a database D1 which has a schema S1 and a table T1. A role R1 has usage privilege on D1 and S1 and selects privilege on T1.

Now the database D1 is cloned to create a new (cloned) database object D1_CLONE. Resultantly, the role R1 will NOT have a usage privilege on D1_CLONE but will have a usage privilege on D1_CLONE.S1 and a select privilege on D1_CLONE.S1.T1.

Further Reading: Access Control Privileges on Cloned Objects

Domain : Snowflake Architecture

Q11 : You are working as a Snowflake architect for an FMCG company. The company produces a variety of Ayurveda products. The products are sold through a network of 500+ B2B retailers (online and offline). The company and its retailers are using Snowflake.

Each retailer sends weekly POS (Point of Sale) information to the company. The company collates, aggregates, and reports the sales data to the senior management. The aggregated data is further analyzed by the business analysts and anonymized sales insights are shared with the select set of retailers that have subscribed to the insights.

Given this scenario, what would be the BEST architecture that you (as a Snowflake Architect) would recommend keeping in mind the security, robustness and resilience of your design?

A. Set up a data exchange. Invite B2B retailers as providers and consumers. Have them create and publish a data listing of their respective POS information. Secure the POS listing to allow access to the company’s Snowflake account as a consumer. Have the company publish the aggregated data to exchange through shares with the B2B retailers. Secure the data to allow access only to the B2B retailers subscribing to the insights

B. Have the B2B retailers send their data over using Email, FTP, etc. channels into the company’s Snowflake staging area. From there, use SnowPipe to ingest the data into the Snowflake tables. Have the company send the aggregated data back to the B2B retailers using a direct data share

C. Have the B2B retailers share their POS data with the company using direct data sharing. Have the company send the aggregated data back to the B2B retailers using a direct data share

D. Have the B2B retailers share their POS data with the company using direct data sharing. Have the company publish the data as a personalized listing in the public marketplace where the B2B retailers can request access

Correct Answer: A

Explanation

Option A is correct. Data Exchange is a data-sharing product offered by Snowflake that is specifically designed to make data sharing seamless across your ecosystem of suppliers, partners, and customers, as well as business units at your own company. It allows you to control who can join, publish, consume, and access data. You can combine the power of Data Exchange with Secure Views that include the logic to limit the data access to specific consumer accounts (e.g. id = current_account()) and share the secure view with all consumers.

Option B is incorrect. Although feasible, this is not the BEST design solution. Given that both – the company and its B2B retailer network – are using Snowflake, sharing data using data exchange is a more efficient and robust approach. It is more efficient compared to the email/FTP option because setting up a data exchange is fast and shared data is available instantaneously. This reduces the manual effort of emailing and FTPing every time. It is more robust because you are not introducing an additional point of failure (Email/FTP etc.) in your architecture. It is also more secure as Snowflake’s secure views provide mechanisms to share data with specific consumer accounts.

Option C is incorrect. Although feasible, this is also not the BEST architecture.

Given that there are 500+ B2B retailers. Setting up individual data shares for each retailer is effort-intensive and has significant maintenance overheads.

Option D is incorrect. Although feasible, this is also not the BEST architecture. Snowflake Marketplace’s offering is geared to sharing data with third parties and the world at large. Snowflake Data Exchange makes more sense for data sharing within a closed group of business partners and data consumers (as in this case).

Further Reading: Data Sharing Product Offerings in Snowflake

Domain : Snowflake Architecture

Q12 : As a data provider, a role owns the objects contained in a share but does not own the share itself. In this situation, how can the data provider remove the object from the share?

A. Revoking usage and/or select privileges from the share owner role

B. Revoking usage and/or select privileges from the share owner role with CASCADE option

C. User must request the share owner to remove the object from the share

D. Revoking usage and/or select privileges from the share owner role with RESTRICT option

Correct Answer: B

Explanation

Option B is correct. If the data provider role owns the objects that are included in the share but does not own the share itself (share is owned by some other non-ACCOUNTADMIN role), the data provider can remove objects from the share by revoking the ‘USAGE or SELECT privilege with CASCADE’ option on the objects.

Syntax:

REVOKE USAGE ON <schema_name> FROM ROLE <role_name> CASCADE

REVOKE SELECT ON <table_name> FROM ROLE <role_name> CASCADE

Further Reading: Enabling non-ACCOUNTADMIN roles to Perform Data Sharing Tasks

Domain : Snowflake Architecture

Q13 : Determine the below statements as TRUE/FALSE:

A. It is possible for Data consumers to enable change tracking on a share by creating streams in their own databases created from a share

B. A new object created in a database that is already included in a share is automatically available to consumers of the share

C. If a non-ACCOUNTADMIN role has the privilege to create a share (CRATE SHARE privilege) and has the USAGE privilege on a database DB1, it can add DB1 into the share

D. SIMULATED_DATA_SHARING_CONSUMER session parameter can be used to simulate the access to a share by a specific consumer account

A. T, F, F, T

B. T, F, T, F

C. F, T, F, F

D. F, T, T, T

Correct Answer: A

Explanation

Option A is TRUE. It is possible to create streams on shared objects (secure views or tables) to track DML changes made in those objects. This is akin to creating and using streams on “local” objects.

Option B is FALSE. A new object created in a database that is part of a share will not become automatically available to the consumers of the share. The owner of the share must explicitly add the new object to the share.

Option C is FALSE. To add an object to a share, the non-ACCOUNTADMIN role must have the following privileges:

- OWNERSHIP of the share, and

- OWNERSHIP or USAGE/SELECT WITH GRANT OPTION on each of the objects to be added in the share:

In the scenario given in the question, the roles do not have USAGE WITH GRANT OPTION on DB1 and therefore the grant operation will fail.

Option D is TRUE. SIMULATED_DATA_SHARING_CONSUMER session parameter can be set to the name of the consumer account. This will simulate the access for that consumer account within the provider account itself. This allows the data provider to test and ensure the data is properly filtered by account in the secure view.

Further Reading: Using Secure Objects to Control Data Access — Snowflake Documentation

Domain : Account and Security

Q14 : A user can change object parameters using which of the following roles?

A. ACCOUNTADMIN, SECURITYADMIN, USER with PRIVILEGE

B. ACCOUNTADMIN, USER with PRIVILEGE

C. SECURITYADMIN, USER with PRIVILEGE

D. ACCOUNTADMIN, SECURITYADMIN

Correct Answer: B

Explanation

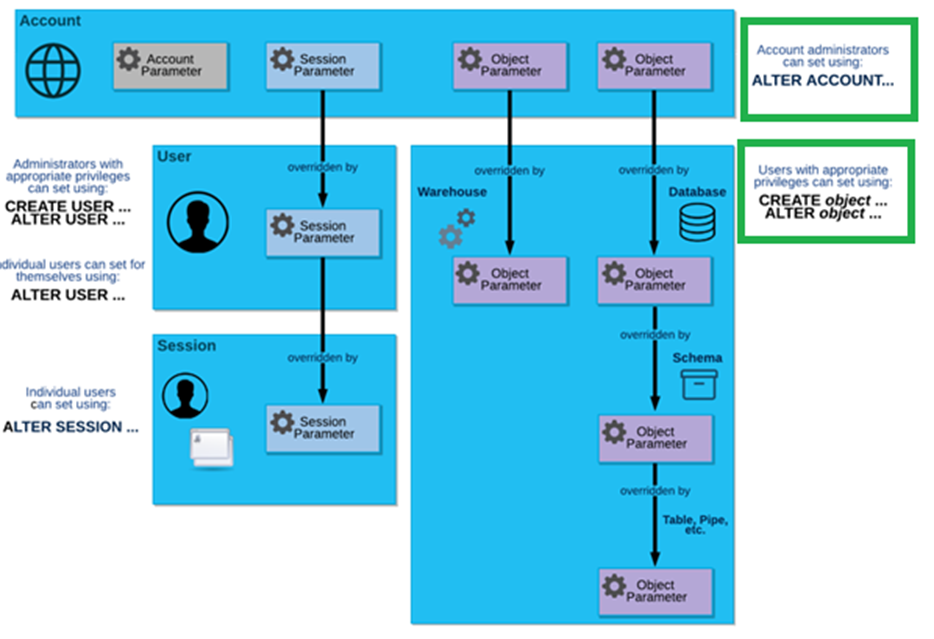

Snowflake parameters are name-value pairs that control the behaviour of your account, user sessions, and objects. There are 3 types of parameters in Snowflake:

- Account Parameters

- Session Parameters

- Object Parameters

The following diagram shows these parameter types and how the parameters can be overridden at each level:

Image source: Snowflake documentation (Parameters — Snowflake Documentation)

The top blue box in the diagram (Account box) shows all three types of parameters. This indicates that account administrators (i.e. users with ACCOUNTADMIN role) can change all three types of parameters.

The blue box on the right side shows that object parameters (warehouse level or database level) can be overridden by users with CREATE OBJECT or ALTER OBJECT privileges.

These two scenarios are depicted in the boxes with green borders.

Hence, we can understand from this discussion that account administrators (i.e. ACCOUNTADMIN role), and individual users with privileges at object level can set/override object parameters. Therefore Option B is correct.

Further Reading: Parameters — Snowflake Documentation

Domain : Account and Security

Q15 : A user can change session parameters using which of the following roles:

A. ACCOUNTADMIN, SECURITYADMIN, USER with PRIVILEGE

B. ACCOUNTADMIN, USER with PRIVILEGE

C. SECURITYADMIN, USER with PRIVILEGE

D. ACCOUNTADMIN, SECURITYADMIN

Correct Answer: A

Explanation

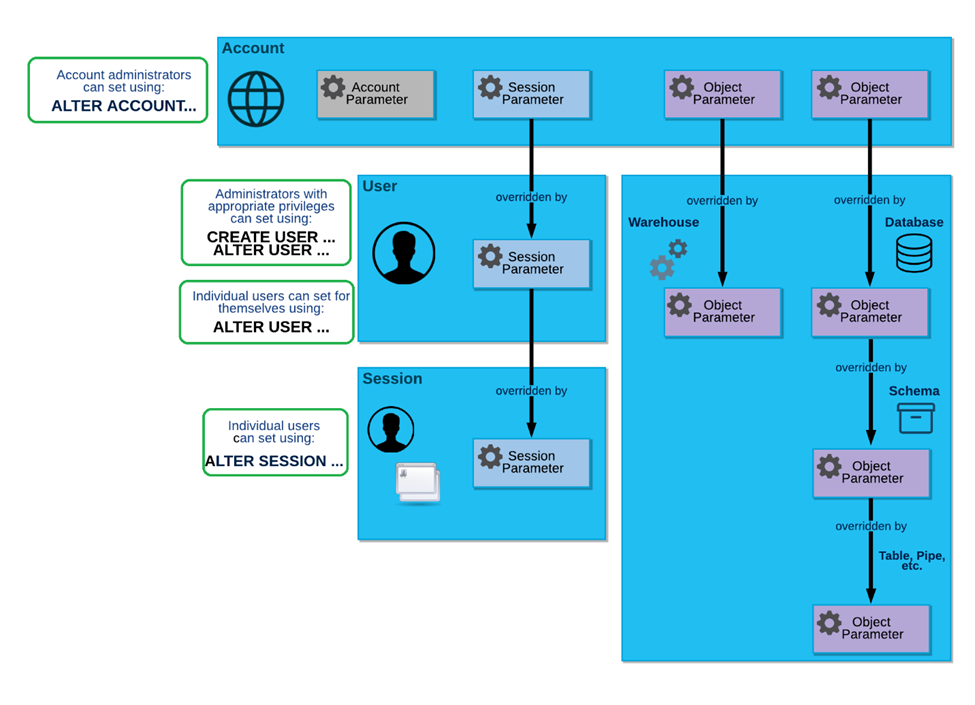

Snowflake parameters are name-value pairs that control the behaviour of your account, user sessions, and objects. There are 3 types of parameters in Snowflake:

- Account Parameters

- Session Parameters

- Object Parameters

The following diagram shows the relationship between these parameter types and how the parameters can be overridden at each level:

Image source: Snowflake documentation (Parameters — Snowflake Documentation)

The top blue box in the diagram (Account box) shows all three types of parameters. This indicates that account administrators (i.e. users with ACCOUNTADMIN role) can change all three types of parameters.

The blue box on the middle-left side shows those session parameters can be overridden by users with CREATE USER or ALTER USER privileges. These privileges are granted to the SECURITYADMIN role.

The blue box on the bottom-left side shows those session parameters can be overridden by users with ALTER SESSION privilege. This privilege is available to logged-in users.

These three scenarios are depicted in boxes with green borders.

Hence, we can understand from this discussion that, account administrators (i.e. ACCOUNTADMIN role), administrators with CREATE USER and ALTER USER privilege (typically SECURITYADMIN role) and individual users with ALTER SESSION privilege can set/override session parameters. Therefore Option A is correct.

Further Reading: Parameters — Snowflake Documentation

Domain : Data Engineering

Q16 : A table is created and 9 rows are inserted as given below:

create or replace table null_count_test(col1 integer, col2 integer);

insert into null_count_test(col1, col2) values

(null, null), — two all NULL values

(null, null),

(null, 1), — one NULL value

(1, null), — one NULL value

(1, 1),

(2, 2),

(3, 3),

(4, 4),

(5, 5);

What would be the output of the following query?

select count(*), count (nct.*), count(col1), count(distinct col1), count(distinct col1, col2), approx_count_distinct(*) from null_count_test nct;

A. Syntax error: Invalid function approx_count_distinct(*)

B. 8, 5, 6, 6, 6, 5

C. 9, 5, 6, 6, 6, 7

D. 9, 5, 6, 5, 5, 5

Correct Answer: D

Explanation

- count (*) returns a total number of records which is 9 in this table.

- count (alias.*) returns the total number of rows that do not contain any null value in any of the columns which is ‘5’ in this case.

- count() function when used with a column name returns the count of non-null records for the specified column. Therefore count (col1) returns 6.

- count(distinct col1) returns the count of rows that contain only unique and non-null values for the specified column. Therefore count (distinct col1) returns 5.

- count (distinct col1, col2) returns a count of rows that contain only unique combinations for the specified columns where none of the two columns should have null values. Therefore count (distinct col1, col2) returns 5.

- approx_count_distinct(*) returns the same output as count (distinct col1, col2,….) except that it is an approximation. Therefore, approx_count_distinct(*) returns 5.

➽ Exam Tip

approx_count_distinct() uses HyperLogLog algorithm to estimate the cardinality. This function can be used when you just need an approximation of the cardinality (and not a precise value). This function is cheaper to execute than count(distinct).

Further Readings: COUNT() function – Snowflake Documentation, COUNT(), DISTINCT and NULL – Snowflake knowledge base article

Domain : Performance Optimization

Q17 : Select the best practices of adding Search Optimization service to a table.

A. It is best to register all tables in a schema to search optimization

B. Before adding search optimization to a large table, get an estimate of costs involved

C. Batching of DML operations on the table

D. The table should not be clustered or the clustering key is different from the columns frequently used in queries

E. At most one of the columns in the table used in the filter operation has at least 100k-200k distinct values

Correct Answers: B, C and D

Explanation

Option A is incorrect. Search optimization is a table-level property. Search optimization service consumes storage and compute credits. For every table you register to the service, the credit consumption would increase. Therefore it is recommended to add search optimization to only a few tables initially and monitor the costs and benefits before registering more tables to the service.

Option B is correct. It is best to get an estimate of the costs involved and weigh that against the optimization benefits before enabling search optimization. To estimate the cost of adding search optimization to a table, Snowflake provides a function named SYSTEM$ESTIMATE_SEARCH_OPTIMIZATION_COSTS function.

Option C is correct. Snowflake recommends batching of DML operations on the table to reduce the cost of the search optimization service.

Option D is correct. Search optimization delivers the best results when the table registered to the service is not clustered or the clustering key is different from the columns frequently used in the query.

Option E is incorrect. Search optimization service is most useful when the columns used in the filter operation has at least 100k-200k distinct values. At most means 1 or less than 1 whereas at least means 1 or more than 1.

Further Reading: Using Search Optimization Service – Snowflake Documentation

Domain : Performance Optimization

Q18 : Which of the following SQL removes search optimization services from a table?

A. ALTER TABLE [IF EXISTS] <table_name> drop SEARCH OPTIMIZATION;

B. ALTER TABLE [IF EXISTS] <table_name> disable SEARCH OPTIMIZATION;

C. ALTER TABLE [IF EXISTS] <table_name> remove SEARCH OPTIMIZATION;

D. ALTER TABLE [IF EXISTS] <table_name> delete SEARCH OPTIMIZATION;

E. ALTER TABLE [IF EXISTS] <table_name> stop SEARCH OPTIMIZATION;

Correct Answer: A

Explanation

Option A is correct. To remove search optimization property from a table, you use the DROP command.

ALTER TABLE [IF EXISTS] <table_name> drop SEARCH OPTIMIZATION;

Note that To add or remove search optimization for a table, you must have the following privileges:

- You must have OWNERSHIP privilege on the table.

- You must have ADD SEARCH OPTIMIZATION privilege on the schema that contains the table.

➽ Exam Tip –

Kindly note that point-b in the explanation. To add or remove search optimization property on a table, you need ADD SEARCH OPTIMIZATION on the schema where the table resides.

Further Reading: Using Search Optimization Service – Snowflake Documentation

Domain : Performance Optimization

Q19 : A table is created with biometric data of the citizens of Genovia (as given below). There are 230 million records inserted in this table.

Create table people_master (

UniqueID number(11),

FirstName varchar(50),

LastName varchar(50),

DoB timestamp, — format is DDMMYYYY hh:mi:ss

Address varchar(200),

City varchar(50),

State char(2),

Current_Country char(2), –populated for non-resident- citizen

PassportNo string);

Following are some of the observations on the query patterns of this table.

A. UniqueID, FirstName, LastName, and DOB are frequently retrieved columns (i.e. used in SELECT clause) and City is frequently used for sorting data.

B. The table is queried based on State and Birth Year for demographic categorization (WHERE clause)

C. The table is heavily joined with another table called TRAVEL_LOG with the join column being PassportNo.

D. The data is frequently grouped by the Current_Country column

You, as a Snowflake Architect, are asked to review the clustering status and recommend reclustering (if required). How would you approach this problem?

A. Check SYSTEM$CLUSTERING_DEPTH. The smaller the number, the better clustered the table is

B. Select UniqueID, FirstName, LastName, and DOB are preferred candidates for a clustering key over PassportNo column

C. Select State, Birth Year would be an appropriate combination for the clustering key.

D. Do not use DOB in the clustering key

E. Once a clustering key is chosen, carry out reclustering using alter table <table name> recluster

Correct Answers: A and C

Option A is correct. SYSTEM$CLUSTERING_DEPTH computes the average depth of the table according to the specified clustering key. The smaller the average depth, the better clustered the table is with regards to the specified columns.

Option B is incorrect. The columns used in the SELECT clause are not helpful in a clustering key. Instead, you should choose the join column (PassportNo) as it is an excellent candidate for a clustering key to speed up join queries.

Option C is correct. Columns used in the WHERE clauses are worthy candidates to be in the clustering key.

Option D is incorrect. If you have queries that filter the data based on date criteria, you can use the date column in the clustering key.

However, you will need to check the cardinality of the date column before using it in the clustering key. Using expressions may help reduce cardinality. For example, you can use the SUBSTR expression to get a relevant portion of a string column for use in the clustering key.

Option E is incorrect. Reclustering happens automatically once a clustering key is defined for a table. Manual reclustering is not required.

Further Reading: Clustering Keys and Clustered Tables – Snowflake Documentation

Domain : Accounts and Security

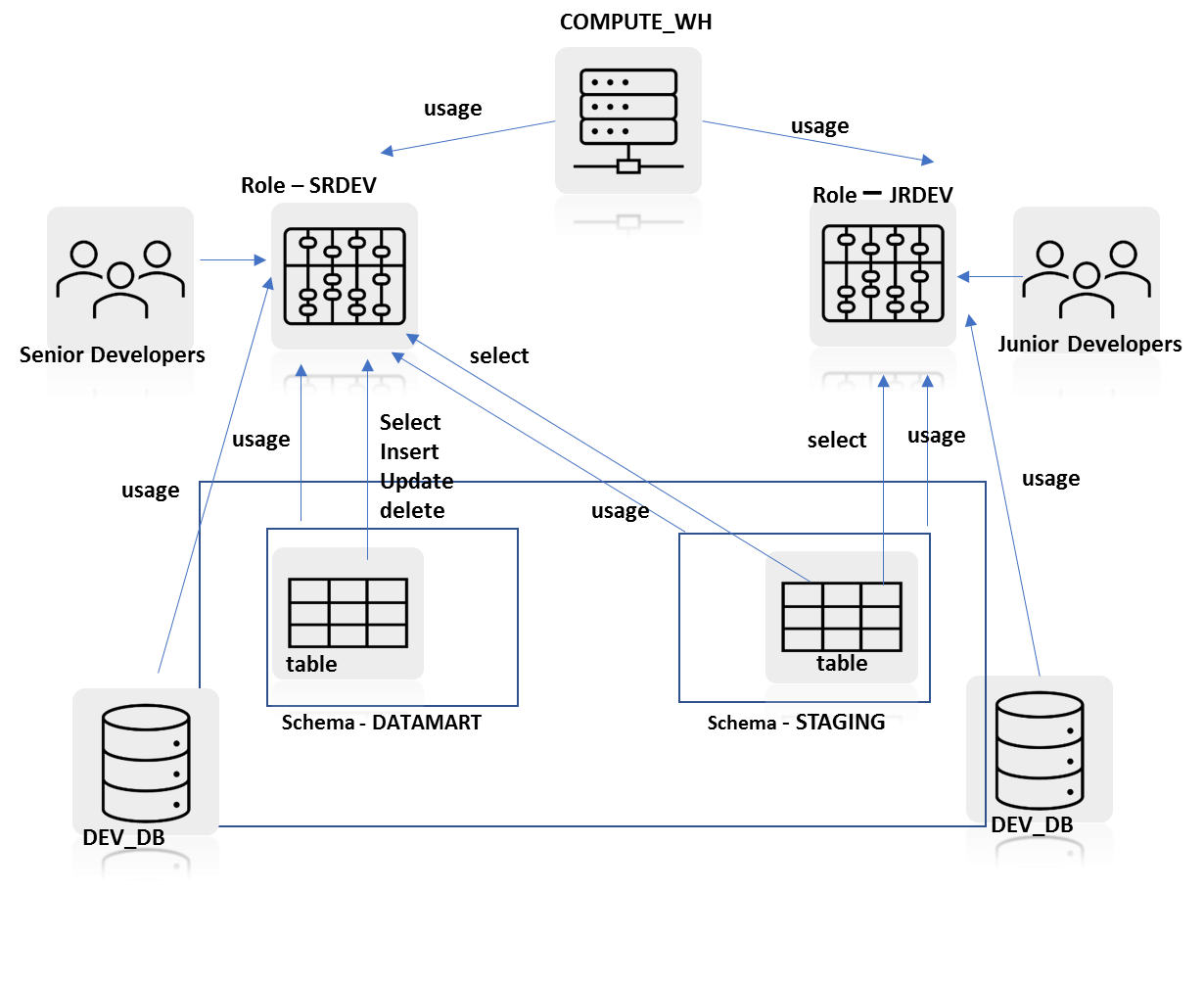

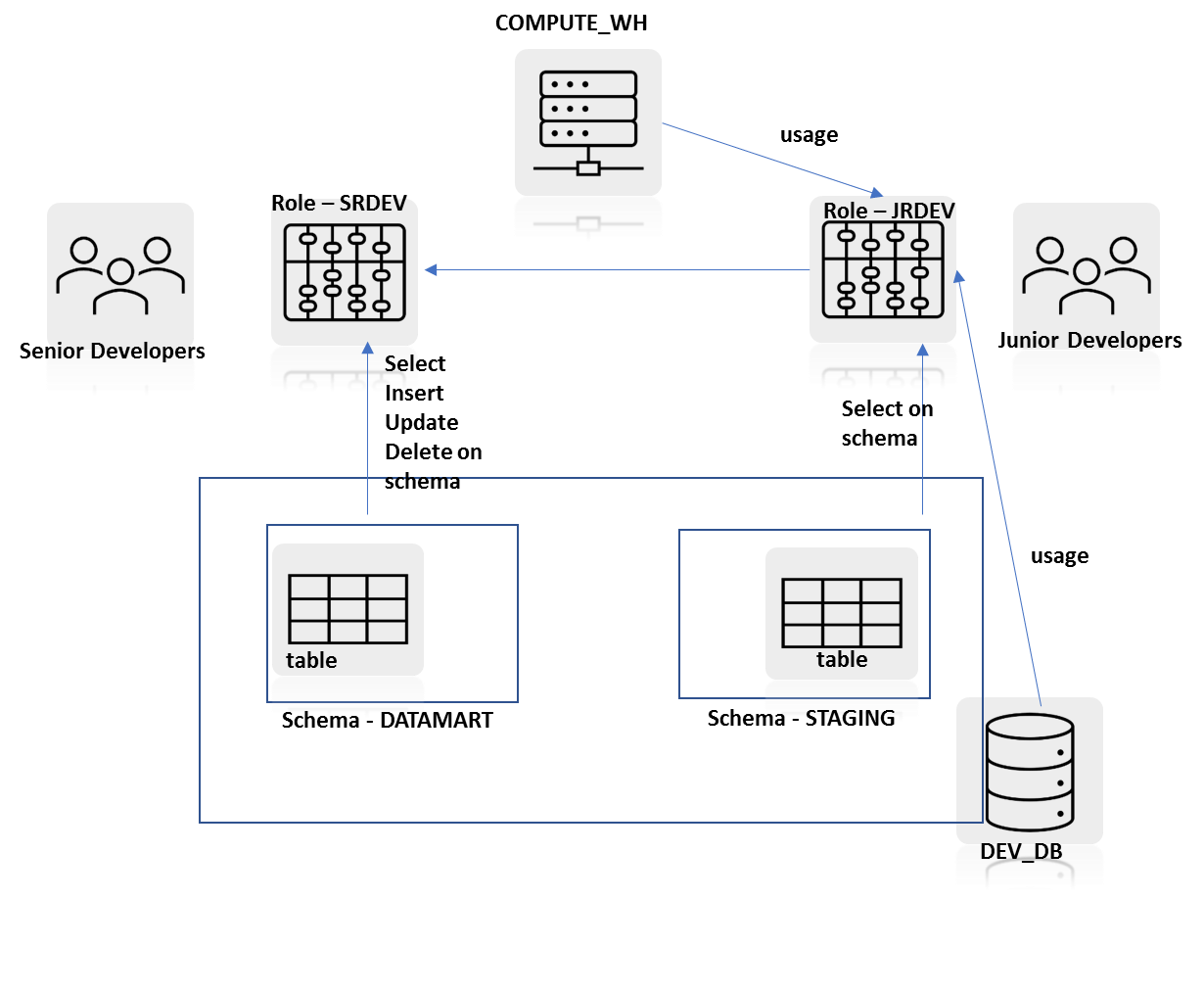

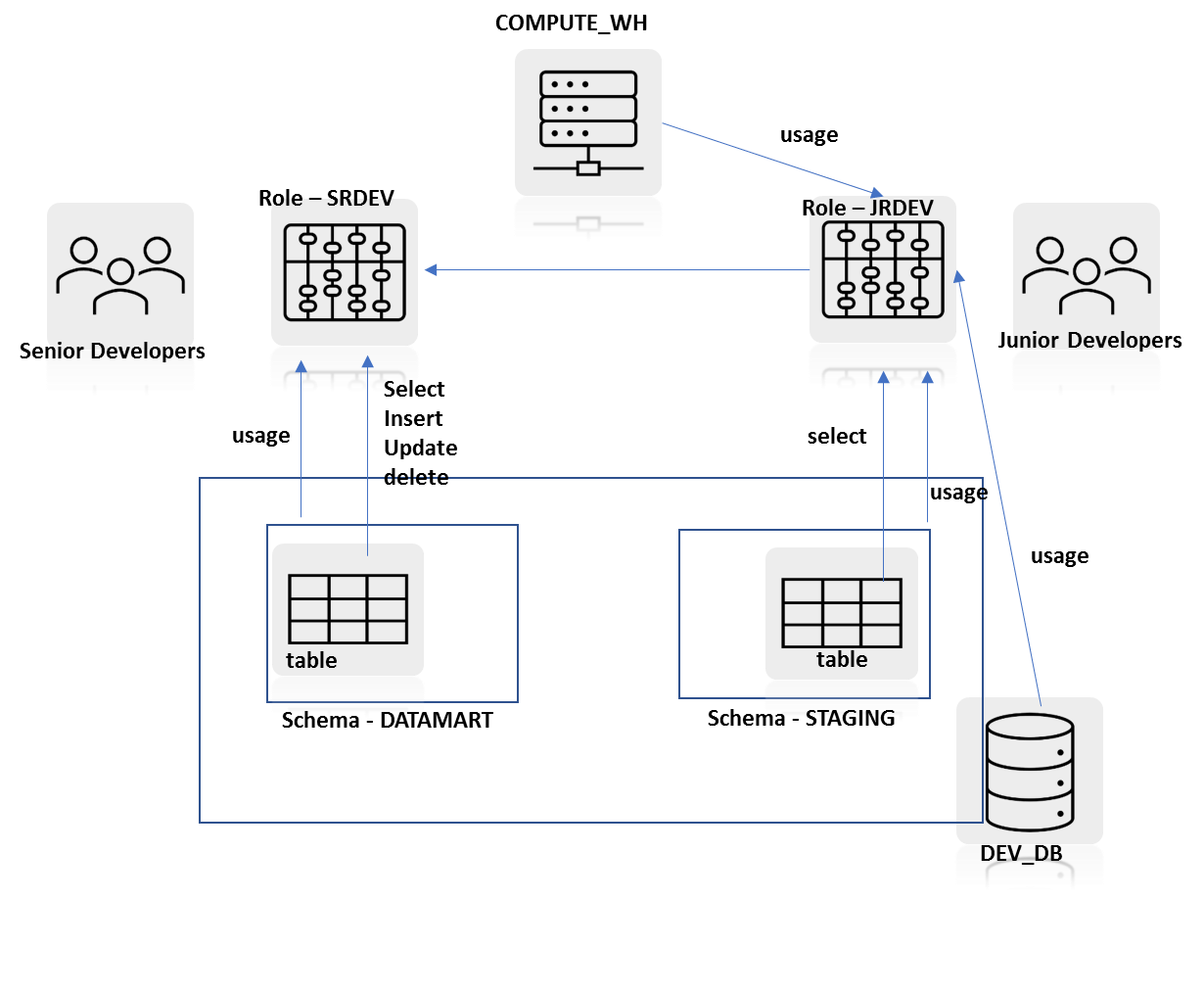

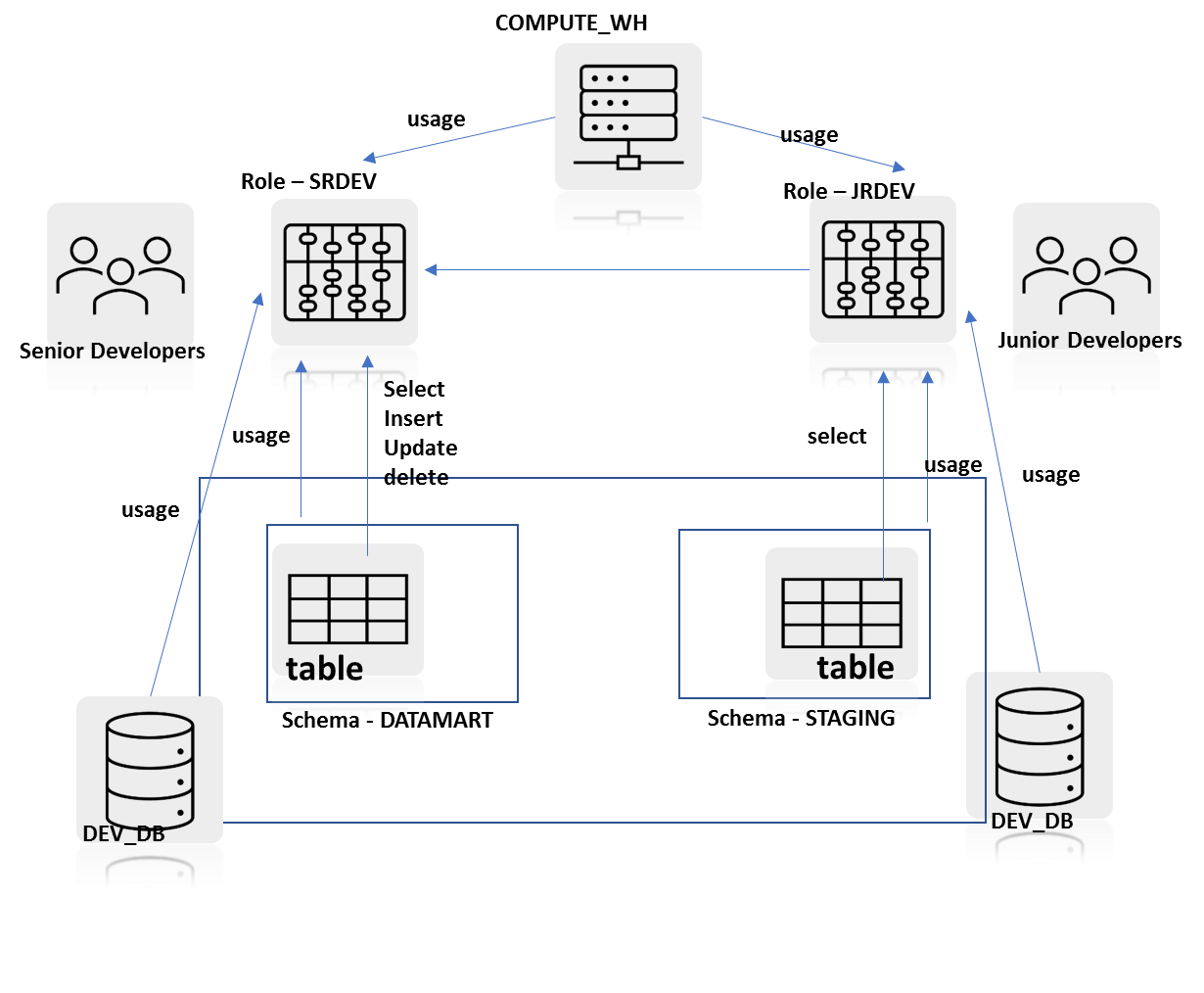

Q20 : A database DEV_DB has two schemas. STAGING schema and DATAMART schema. There is a warehouse named COMPUTE_WH of size M. There are two groups of developers in the project – junior developers and senior developers. There are two functional roles associated with these developers – JR_DEV and SR_DEV.

Following are the security design requirements:

A. Junior developers require read-only access to all objects within the DATAMART schema.

B. Senior developers require DML access on STAGING schema and read-only access to DATAMART schema.

C. Both groups require access to a virtual warehouse for computational requirements.

Which of the following 4 diagrams BEST represents the RBAC design you will recommend?

Option A: Diagram A

Option B: Diagram B

Option C: Diagram C

Option D: Diagram D

Correct Answer: C

Explanation

This question tests your understanding of three key principles of access control in Snowflake

- Principle of least privilege – this principle states that a role should be assigned the least required privileges to perform the activities.

- Aligning privileges with business functions – Snowflake recommends creating a combination of object access roles with different permissions on objects and assigning them as appropriate to functional roles:

- Using role hierarchy and privilege inheritance to build an access control model – This refers to granting lower-level functional roles to higher-level functional roles in a parent-child relationship where the parent roles map to business functions that should subsume the permissions of the child roles.

Applying these rules to the given scenario in question, you would need to

- create a role combination of object access roles i.e. JRDEV AND SRDEV to mirror functional roles played by senior developers and junior developers.

- assign the least set of privileges to both roles necessary to carry out their tasks

- create role hierarchy between JRDEV and SRDEV and have privilege inheritance provide additional privileges that SRDEV needs on the DATAMART schema and USAGE privilege on the warehouse

In diagram C, this model is implemented. You can see that there’s a role hierarchy setup between STDEV as the parent role and JRDEV as child role. Consequently, SRDEV inherits the USAGE privilege on the warehouse and the database and also inherits USAGE privilege on STAGING schema and read-only privilege i.e. SELECT privilege on STAGING schema tables.

Now it needs additional DML operations privileges on the DATAMART schema which is directly assigned to the SRDEV role. As this object access model satisfies all three key principles of the object access in Snowflake, this is the BEST design.

Therefore Option C is the correct answer.

In diagram A, you can observe that SRDEV role gets USAGE privilege on the warehouse and the database, usage privilege on STAGING schema, and select privilege on STAGING schema tables directly assigned. This model does not take advantage of role hierarchy and privilege inheritance.

Therefore Option A is incorrect.

In diagram B, you can observe that JRDEV role gets SELECT privilege on the STAGING schema. This is an invalid privilege. One can assign USAGE privilege on the schema and SELECT privilege on the tables of that schema.

Therefore Option B is incorrect.

In diagram D, you can observe that the role hierarchy is set up but the SRDEV role has duplicated privileges assigned to it through the direct assignment and through privilege inheritance. There are clear redundancies in this object access model.

Therefore Option D is incorrect.

Further Reading: Aligning object access with business functions – Snowflake Documentation

Domain : Data Engineering

Q21 : Which of the following are TRUE statements with respect to the Snowflake Connector for Kafka?

A. The Kafka connector relies on both – key pair authentication and username/password authentication

B. It is recommended to run your Kafka Connect instance in the same cloud provider region as your Snowflake account

C. Kafka connect cluster is a separate cluster from the Kafka cluster

D. The typical pattern with Kafka connect cluster in Snowflake is that one topic supplies messages (rows) for many Snowflake tables and many Kafka topics supply messages to one Snowflake table (i.e. many-to-many relationship)

Correct Answers: B and C

Explanation

Option A is incorrect. The Kafka connector relies on key pair authentication rather than basic authentication (i.e. username and password).

Option B is correct. While it is not mandatory, it is highly recommended that to improve throughput, Kafka connect instance should be in the same cloud provider and the same region as your Snowflake account

Option C is correct. A Kafka Connect cluster is a separate cluster from the Kafka cluster. A Kafka cluster hosts queues that connect the publisher to the subscriber. The Kafka Connect cluster supports running and scaling out connectors that connect Kafka with external systems.

Option D is incorrect. The typical pattern with the Kafka connect cluster in Snowflake is that one topic supplies messages (rows) for one Snowflake table.

Further Reading: Installing and Configuring the Kafka Connector – Snowflake Documentation

Domain : Snowflake Architecture

Q22 : Which of the following are TRUE statements with respect to database replication in Snowflake?

A. It works across regions of the same cloud provider. Cross-cloud provider data sharing is not supported

B. It is available in Snowflake Business Critical editions and above

C. It is supported for databases only. Other types of objects in an account are not supported

D. A database created from a share cannot be replicated

Correct Answers: C and D

Explanation

Option A is incorrect. Database replication is supported from any (supported) cloud region to any other (supported) cloud region AS WELL AS from any cloud provider (AWS, Azure, GCP) to any other cloud provider (AWS, Azure, GCP).

Option B is incorrect. Replication is available for all Snowflake editions. However, database failover/failback (a related feature) is available for the Business critical edition onwards.

Option C is correct. Currently, Snowflake has announced replication support for databases only (data and DDL). Other types of objects in an account cannot be replicated, including Users, Roles, Warehouses, Resource monitors, and Shares.

Option D is correct. A database created from a share cannot be replicated.

Further Reading: Introduction to Database Replication – Snowflake Documentation

Domain : Snowflake Architecture

Q23 : Which of the following are TRUE statements with respect to database replication in Snowflake?

A. Data transfer costs are an additional cost component of database replication in addition to the compute and storage costs

B. Data replication, if enabled, is billed at fixed monthly billing irrespective of the amount of data replicated

C. Replication fails if the primary database contains an external table

D. Privileges given to the primary database objects are replicated to a secondary database

Correct Answers: A and C

Explanation

Option A is correct. Database replication involves transferring data from one cloud region to another cloud region or across cloud providers. This is known as data egress. Cloud providers charge for data egress. To recover this expense, Snowflake charges back the customer for data transfer. This is charged on a per-byte basis. The rate depends on the region where your primary database is hosted.

Option B is incorrect. Data replication bills are not based on a fixed charge. Instead, the cost is variable and depends upon the amount of data replicated and the frequency of synchronization between primary and secondary.

Option C is correct. Replication operation fails if there’s an external table present in the primary database. To workaround this limitation, Snowflake suggests creating external tables outside the replicated database.

Option D is incorrect. When a database is replicated, the privileges associated with the replicated objects are NOT copied over to the secondary database. They have to be granted explicitly.

➽ Exam Tip – Snowflake provides the following ways to view the actual replication costs incurred.

-

REPLICATION_USAGE_HISTORY table function (in the Snowflake Information_schema)

-

REPLICATION_USAGE_HISTORY View view (in Account Usage)

Further Readings: Understanding costs model for replication – Snowflake Documentation, Database replication considerations – Snowflake Documentation

Domain : Data Engineering

Q24 : Which of the following is NOT a valid choice for ON_ERROR while loading data into Snowflake using COPY INTO <table> command?

A. ON_ERROR = CONTINUE

B. ON_ERROR = ABORT_STATEMENT

C. ON_ERROR = SKIP_FILE_<num>

D. ON_ERROR = ROLLBACK

Correct Answer: D

Explanation

Option A is incorrect. ON_ERROR = CONTINUE is a valid copy option while loading data into Snowflake. This option instructs Snowflake to continue loading the data even if an error is encountered while copying.

Option B is incorrect. ON_ERROR = ABORT_STATEMENT is a valid copy option while loading data into Snowflake. This option instructs Snowflake to abort the loading operation if any error is encountered while copying.

Option C is incorrect. ON_ERROR = SKIP_FILE_<num> is a valid copy option while loading data into Snowflake. This option instructs Snowflake to skip the loading from a file if the number of errors encountered is greater or equal to the number specified in <num>.

Option D is correct. ON_ERROR = ROLLBACK is invalid. In order to perform a rollback, we need to use ABORT_STATEMENT.

➽ Exam Tip –

Note the default options given below for the ON_ERROR clause.

Bulk loading using COPY ABORT_STATEMENT

Snowpipe SKIP_FILE

Further Reading: Copy Options in COPY INTO <table> – Snowflake Documentation

Domain : Performance Optimization

Q25 : Which of the following is TRUE about the Search Optimization service in Snowflake?

A. You must have ADD SEARCH OPTIMIZATION privilege on the table which you want to register for search optimization

B. A search access path becomes invalid if you add, drop, or rename a column

C. Search optimization service does not support materialized views

D. Search optimization service does not support VARCHAR and TIMESTAMP data types

Correct Answer: C

Explanation

Option A is incorrect. You must have ADD SEARCH OPTIMIZATION privilege on the schema that contains the table that you want to register for search optimization.

Option B is incorrect. A search access path remains valid if you add, drop, or rename a column. Search optimization background service automatically updates the search access path when you add, drop or rename a column.

Option C is correct. Search optimization service does not support materialized views and external tables.

Option D is incorrect. Search optimization service supports VARCHAR and TIMESTAMP data types.

Further Reading: Using Search Optimization Service – Snowflake Documentation

Domain : Data Engineering

Q 26: Row-level security within a Snowflake account in Snowflake can be implemented using… (Select TWO)

A. Masking policy

B. Row access policy

C. Tri-secret Secure

D. Secure data sharing

E. Secure views

Correct Answer – B and E

Explanation:

Option A is incorrect. Masking policy is a column-level security object (and not a row-level security object)

Option B is correct. Snowflake enterprise edition and above supports a schema-level object called row access policy that helps you control whether a given row in a table or a view shows up in the query result. The policy is evaluated at query runtime and the objects that pass the access test defined in the policy will be visible to the user. It is important to remember that this policy also includes the object owner who normally has full access to the underlying data.

Option C is incorrect. Tri-secret secure is a data encryption mechanism that combines the customer-managed key with a Snowflake-managed key to create a composite master key.

Option D is incorrect. Secure Data Sharing enables sharing selected objects in a database in your account with other Snowflake accounts. It does not aid in controlling row-level security in your account.

Option E is correct. You can use Snowflake context functions CURRENT_ROLE() and CURRENT_USER() in the secure view query to implement row-level security.

See an example below:

create or replace secure view movies_for_you as

select * from movies_table m where upper(m.role_name) = current_role();

Here, the secure view returns the list of movies that the logged-in is allowed to see by using the CURRENT_ROLE() context function to filter the movies list.

Reference:

Understanding row access policies in Snowflake

Working with Secure Views — Snowflake Documentation

Domain : Data Engineering

Q 27: Which of the following statements are TRUE concerning Secure View objects? (Select TWO)

- The following statement will change a secure view into a standard view.

ALTER VIEW <view name> UNSET SECURE;

- A secure view may have slower performance compared to a standard view.

- To add a view in your outbound share, it has to be created as a secure view

- A materialized view cannot be created as a secure view.

Correct Answer : A and B

Explanation:

When you create a secure view, Snowflake makes sure that a) the view definition is not visible to the users of the view and b) the users have no access to underlying data of the base tables. This feature is the differentiating feature of a secure view.

Option A is correct. A secure view can be converted to a regular view and vice versa using ALTER VIEW command as given below:

— to convert a regular view into a secure view

ALTER VIEW <name> SET SECURE

— to convert a secure view into a regular view

ALTER VIEW <name> UNSET SECURE

Option B is correct. When you create a secure view, Snowflake bypasses certain query optimizations. These optimizations are available for other regular views. As a result, a secure view has slower performance compared to a regular view.

Option C is incorrect. To add a view in an outbound share, you may create the view as a materialized view or a secure view.

The following Snowflake database objects can be included in a share:

| Tables | Views | Other Objects |

| Table

External table |

Secure view

Materialized view |

Secure UDF |

No other database objects (e.g. regular views, stored procedures, tasks, streams, etc.) can be included in a share

Option D is incorrect. A materialized view can be created as a secure view.

Reference:

Working with Secure Views — Snowflake Documentation

Domain : Data Engineering

Q 28 : An external table ext_weather_source is created in Snowflake. This table points to a data lake created on Amazon S3. The following 7 events occur in the data lake in sequence.

- File 1 with 7 rows added.

- A stream object ext_weather_stream is created on the external table.

- File 2 with 4 rows added.

- File 3 with 3 rows added.

- File 2 deleted.

- File 3 is appended with 2 more rows.

- ext_weather _source table is refreshed manually

What will be the output of the following queries based on the above sequence?

Select count(1) from ext_weather_source;

Select count(1) from ext_weather_stream;

A. 12, 9

B. 16, 9

C. 9, 16

D. 9, 12

Correct Answer : A

Explanation:

An external table in Snowflake is a reference to your data files in external cloud storage (e.g. data lake). Each external table has at least three columns as shown below:

External table structure :

| METADATA$FILENAME | A pseudocolumn column that identifies the name of each data file in the datalake, including its path. |

| METADATA$FILE_ROW_NUMBER | A pseudocolumn that shows the row number for each record in the data file. |

| VALUE | A VARIANT column that represents a single row in the data file. |

The external table needs to be refreshed either automatically or manually. When you refresh an external table, Snowflake synchronizes the metadata with the latest set of files in the external stage and data within these files, i.e.:

- New files in the path are added to the table metadata

- Changes to files in the path are updated in the table metadata.

- Files no longer in the path are removed from the table metadata.

In the given sequence of events in the datalake, (after the manual refresh) the external table ext_weather_source will have 7 rows for File 1, 0 rows for File 2, and 5 rows for File 3. This will make a total of 12 rows in the external table.

Snowflake supports insert-only stream objects on external tables. These objects record insertions only. They do not record deletions. Append operations are treated as new file insertions.

In the given question, the stream object is created in the 2nd step after adding 7 rows in File 1. Therefore this stream object starts recording any changes to the external table after the 1st step. The ext_weather_stream will record 4 rows inserted in File 2 and 5 rows inserted in File 3 for a total row count of 9.

Based on the above discussion, we can see that Option A is correct.

Reference:

Introduction to external tables

Insert-only streams on external table

Domain : Data Engineering

Q 29 : Which of the following statements are TRUE concerning cancellation of a long-running query in Snowflake? (select all that apply)

- It is not possible to selectively cancel long-running queries in Snowflake without aborting the session.

- Only SYSADMIN or a higher role has the privileges to cancel a long-running query using SnowSQL.

- The recommended way for a user to cancel a long-running query is to use the application interface (e.g. Snowflake web interface) or the cancellation API provided by the Snowflake ODBC or JDBC driver.

- A long-running query will be auto-canceled by default by Snowflake after 2 days

Correct Answer : C and D

Explanation:

Option A is incorrect. It is possible for a user to cancel a specific long-running query without aborting the session.

Option B is incorrect. Snowflake provides two SnowSQL functions for canceling long-running queries. Any user can use these functions provided by Snowflake to cancel their own long-running queries. These functions are:

- SYSTEM$CANCEL_ALL_QUERIES

- SYSTEM$CANCEL_QUERY

Option C is correct. The recommended way for a user to cancel a long-running query is to use the application interface (e.g. Snowflake web interface) or the cancellation API provided by the Snowflake ODBC or JDBC driver.

Option D is correct. Snowflake provides a parameter STATEMENT_TIMEOUT_IN_SECONDS at an account, session, object, or warehouse level. The value of this parameter controls how long a query may run before being automatically timed out by Snowflake. The default value is for this parameter is 2 days (though it can be modified to a lower value)

Reference:

Cancelling a long-running query

Domain : Performance and Optimization

Q 30 : A table is created in the Snowflake database as below:

CREATE TABLE “AGENTS”

(

“AGENT_CODE” CHAR(6) NOT NULL PRIMARY KEY,

“AGENT_NAME” CHAR(40),

“WORKING_AREA” CHAR(35),

“COMMISSION_PERCENT” NUMBER(10,2),

“CONTACT_NO” CHAR(15),

“COUNTRY_CODE” VARCHAR2(25)

);

There are 2150 agent records inserted into this table.

Next, the following query is executed on this table.

Select * from agents;

Which of the following queries can be completed subsequently without the use of a virtual warehouse? (Select TWO)

A. Select * from agents;

B. select * from agents limit 100;

C. Select count(1) from agents;

D. Select country_code, count(1) from agents group by country_code;

Correct Answer – A and C

Explanation:

Option A is correct. The exact same query is already issued once earlier by the same user role. Therefore Snowflake will be able to retrieve this information from the results cache. Hence, a virtual warehouse will not be used.

Option B is incorrect. This query will require the use of a virtual warehouse to limit the resultset count to the first 100 rows.

Option C is correct. The count aggregate function that returns the total number of rows in a table is a metadata-only operation and will not require the use of a virtual warehouse or local cache.

Option D is incorrect. In this query, the agent data is to be grouped by country code. This requires additional processing and will need a virtual warehouse.

Domain : Data Engineering

Q 31: Which of the following are TRUE statements with respect to the Snowflake Connector for Kafka? (Select TWO)

A. The Kafka connector relies on both – key pair authentication and username/password authentication.

B. It is recommended to run your Kafka Connect instance in the same cloud provider region as your Snowflake account.

C. Kafka connect cluster is a separate cluster from the Kafka cluster

D. The typical pattern with Kafka connect cluster in Snowflake is that one topic supplies messages (rows) for many Snowflake tables and many Kafka topics supply messages to one Snowflake table (i.e. many-to-many relationship).

Correct Answer – B, C

Explanation:

Option A is incorrect. The Kafka connector relies on key pair authentication rather than basic authentication (i.e. username and password).

Option B is correct. While it is not mandatory, it is highly recommended that to improve throughput, Kafka connect instance should be in the same cloud provider and the same region as your Snowflake account

Option C is correct. A Kafka Connect cluster is a separate cluster from the Kafka cluster. A Kafka cluster hosts queues that connect the publisher to the subscriber. The Kafka Connect cluster supports running and scaling out connectors that connect Kafka with external systems.

Option D is incorrect. The typical pattern with the Kafka connect cluster in Snowflake is that one topic supplies messages (rows) for one Snowflake table.

Further Reading:

Installing and Configuring the Kafka Connector – Snowflake Documentation

Domain: Snowflake Architecture

Q 32 : Which of the following are TRUE statements with respect to database replication in Snowflake? (Select TWO)

A. It works across regions of the same cloud provider. Cross-cloud provider data sharing is not supported.

B. It is available in Snowflake Business Critical editions and above.

C. It is supported for databases only. Other types of objects in an account are not supported.

D. A database created from a share cannot be replicated

Correct Answer – C and D

Explanation:

Option A is incorrect. Database replication is supported from any (supported) cloud region to any other (supported) cloud region AS WELL AS from any cloud provider (AWS, Azure, GCP) to any other cloud provider (AWS, Azure, GCP).

Option B is incorrect. Replication is available for all Snowflake editions. However, database failover/failback (a related feature) is available for the Business critical edition onwards.

Option C is correct. Currently, Snowflake has announced replication support for databases only (data and DDL). Other types of objects in an account cannot be replicated, including Users, Roles, Warehouses, Resource monitors, and Shares.

Option D is correct. A database created from a share cannot be replicated.

Further Reading:

Introduction to Database Replication – Snowflake Documentation

Domain: Snowflake Architecture

Q 33: Which of the following are TRUE statements with respect to database replication in Snowflake? (Select TWO)

A. Data transfer costs are an additional cost component of database replication in addition to the compute and storage costs.

B. Data replication, if enabled, is billed at fixed monthly billing irrespective of the amount of data replicated.

C. Replication fails if the primary database contains an external table.

D. Privileges given to the primary database objects are replicated to a secondary database.

Correct Answer – A and C

Explanation:

Option A is correct. Database replication involves transferring data from one cloud region to another cloud region or across cloud providers. This is known as data egress. Cloud providers charge for data egress. To recover this expense, Snowflake charges back the customer for data transfer. This is charged on a per-byte basis. The rate depends on the region where your primary database is hosted.

Option B is incorrect. Data replication bills are not based on a fixed charge. Instead, the cost is variable and depends upon the amount of data replicated and the frequency of synchronization between primary and secondary.

Option C is correct. Replication operation fails if there’s an external table present in the primary database. To workaround this limitation, Snowflake suggests creating external tables outside the replicated database.

Option D is incorrect. When a database is replicated, the privileges associated with the replicated objects are NOT copied over to the secondary database. They have to be granted explicitly.

➽ Exam Tip – Snowflake provides the following ways to view the actual replication costs incurred.

- REPLICATION_USAGE_HISTORY table function (in the Snowflake Information_schema)

- REPLICATION_USAGE_HISTORY View view (in Account Usage)

Further Reading:

Understanding costs model for replication – Snowflake Documentation

Database replication considerations – Snowflake Documentation

Domain: Data Engineering

Q 34 : Which of the following is NOT a valid choice for ON_ERROR while loading data into Snowflake using COPY INTO <table> command?

A. ON_ERROR = CONTINUE

B. ON_ERROR = ABORT_STATEMENT

C. ON_ERROR = SKIP_FILE_<num>

D. ON_ERROR = ROLLBACK

Correct Answer – D

Explanation:

Option A is incorrect. ON_ERROR = CONTINUE is a valid copy option while loading data into Snowflake. This option instructs Snowflake to continue loading the data even if an error is encountered while copying.

Option B is incorrect. ON_ERROR = ABORT_STATEMENT is a valid copy option while loading data into Snowflake. This option instructs Snowflake to abort the loading operation if any error is encountered while copying.

Option C is incorrect. ON_ERROR = SKIP_FILE_<num> is a valid copy option while loading data into Snowflake. This option instructs Snowflake to skip the loading from a file if the number of errors encountered is greater or equal to the number specified in <num>.

Option D is correct. ON_ERROR = ROLLBACK is invalid. In order to perform a rollback, we need to use ABORT_STATEMENT.

➽ Exam Tip –

Note the default options given below for the ON_ERROR clause.

Bulk loading using COPY ABORT_STATEMENT

Snowpipe SKIP_FILE

Further Reading:

Copy Options in COPY INTO <table> – Snowflake Documentation

Domain: Performance Optimization

Q 35 : Which of the following is TRUE about the Search Optimization service in Snowflake?

A. You must have ADD SEARCH OPTIMIZATION privilege on the table which you want to register for search optimization.

B. A search access path becomes invalid if you add, drop, or rename a column.

C. Search optimization service does not support materialized views.

D. Search optimization service does not support VARCHAR and TIMESTAMP data types.

Correct Answer – C

Explanation:

Option A is incorrect. You must have ADD SEARCH OPTIMIZATION privilege on the schema that contains the table that you want to register for search optimization.

Option B is incorrect. A search access path remains valid if you add, drop, or rename a column. Search optimization background service automatically updates the search access path when you add, drop or rename a column.

Option C is correct. Search optimization service does not support materialized views and external tables.

Option D is incorrect. Search optimization service supports VARCHAR and TIMESTAMP data types.

Further Reading:

Using Search Optimization Service – Snowflake Documentation

Summary

We hope the above list of questions on Snowflake Snowpro Advanced Architect exams are helpful for you. The Snowflake SnowPro Advanced Architect Certification is the highest level of certification offered by Snowflake which is intended for experienced professionals.

It is strongly recommended to ensure that we have covered all the objectives of the certification exam, so that you can pass the exam at ease and in your first attempt. Hence, keep practicing until you are confident to take the real exams. You can also try Whizlabs newly updated practice test on Snowflake Snowpro Advanced Architect exam.

- Top 25 DevSecOps Interview Question and Answers for 2024 - March 1, 2023

- How to prepare for VMware Certified Technical Associate [VCTA-DCV] Certification? - February 14, 2023

- Top 20 Cloud Influencers in 2024 - January 31, 2023

- 25 Free Question on SC-100: Microsoft Cybersecurity Architect - January 27, 2023

- Preparation Guide on MS-101: Microsoft 365 Mobility and Security - December 26, 2022

- Exam tips to prepare for Certified Kubernetes Administrator: CKA Exam - November 24, 2022

- Top Hands-On Labs To Prepare For AWS Certified Cloud Practitioner Certification - October 27, 2022

- Why do you need to upskill your teams with the Azure AI fundamentals? - October 11, 2022