Are you looking for the Google Cloud Professional Data Engineer Exam Questions? The questions and answers provided here test and enhance your knowledge of the exam objectives.

A professional Data Engineer collects, transforms, and publishes data, thereby enabling data-driven decision making. Earning the Google Cloud Certified Professional Data Engineer certification may help you pursue a better career in the Google Cloud ecosystem. To pass the actual exam, you need to spend time learning and re-learning through multiple practice tests.

Let’s start learning!

Domain: Design Data Processing Systems

Q1 : A company is migrating its current infrastructure from on-premises to Google Cloud. It stores over 280TB of data on its on-premises HDFS servers. You are tasked with moving the data from HDFS to Google Storage in a secure and efficient manner. Which of the following approaches is best to fulfill this task?

A. Install Google Storage gsutil tool on servers and copy the data from HDFS to Google Storage.

B. Use Cloud Data Transfer Service to migrate the data to Google Storage.

C. Import the data from HDFS to BigQuery. Then, export the data to Google Storage in AVRO format.

D. Use Transfer Appliance Service to migrate the data to Google Storage.

Correct Answer: D

Explanation :

Storage Transfer Service allows you to quickly import ONLINE data into Cloud Storage. You can also set up a repeating schedule for transferring data, as well as transfer data within Cloud Storage, from one bucket to another.

Transfer Appliance is an OFFLINE secure, high capacity storage server that you set up in your datacenter. You fill it with data and ship it to an ingest location where the data is uploaded to Google Cloud Storage.

Moving 280TB online would occupy the network connection for a long time, so shipping the data offline on a Transfer Appliance is the more efficient choice. So, answer D is the correct one, while B is incorrect.

Answer A is incorrect: The gsutil tool is good for programmatic usage by developers and may be useful to copy and move megabytes or gigabytes of data. It is not so practical for terabytes of data. Its reliability also depends on the machine’s connectivity with Google Cloud for the whole duration of the transfer.

Answer C is incorrect: To load data into BigQuery, you first need to move it to Google Storage. This approach does not help, because the main challenge is moving the data from HDFS to Google Storage, and BigQuery won’t help solve it.
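One way to see why an offline appliance is attractive at this scale is to estimate how long an online transfer would take. The sketch below is only a back-of-the-envelope calculation: the link speeds and the 80% efficiency factor are assumptions for illustration, not figures from the question.

```python
def transfer_days(size_tb: float, bandwidth_mbps: float, efficiency: float = 0.8) -> float:
    """Estimate the days needed to move size_tb terabytes over a network link.

    bandwidth_mbps is the nominal link speed in megabits per second.
    efficiency is an assumed fraction of that speed that is actually sustained.
    """
    size_bits = size_tb * 1e12 * 8                      # decimal terabytes -> bits
    effective_bps = bandwidth_mbps * 1e6 * efficiency   # usable bits per second
    seconds = size_bits / effective_bps
    return seconds / 86_400                             # 86,400 seconds in a day


# Hypothetical link speeds, applied to the 280TB from the scenario.
for mbps in (100, 1_000, 10_000):
    print(f"{mbps:>6} Mbps: {transfer_days(280, mbps):.1f} days")
```

Under these assumptions, a 1 Gbps link needs roughly a month of continuous transfer for 280TB, which is the kind of estimate that pushes the decision toward an offline transfer.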

References: Google Cloud Storage Transfer Service: https://cloud.google.com/storage-transfer/docs/, Google Appliance Transfer Service: https://cloud.google.com/transfer-appliance/, Migrate HDFS to Google Storage: https://cloud.google.com/solutions/migration/hadoop/hadoop-gcp-migration-data

Domain: Design Data Processing Systems

Q2 : You have a Dataflow pipeline to run and process a set of data files received from a client, for transformation and loading into a data warehouse. This pipeline should run each morning so that metrics can be ready when stakeholders need the latest stats based on data sent the day before. Which tool should you use?

A. Cloud Functions

B. Compute Engine

C. Kubernetes Engine

D. Cloud Scheduler

Correct Answer: D

Explanation :

The question asks for the service that can be used to trigger a Dataflow pipeline on a schedule.

A: Cloud Functions. Cloud Functions can be written in Node.js, Python, Go, Java, .NET, Ruby, and PHP, and are executed in language-specific runtimes. HTTP functions are invoked by standard HTTP requests, which wait for the response and support common HTTP request methods such as GET, PUT, POST, DELETE and OPTIONS. A function runs in response to a request or event and does not provide a schedule on its own. Hence this is not a correct solution.

B: Compute Engine. This is a VM service and not a service to schedule a Dataflow pipeline. Hence this is not a correct solution.

C: Kubernetes Engine. This runs containerized applications on a cluster built on a master and node model. It is not a scheduling service, and hence not a correct solution.

D: Cloud Scheduler. Cloud Scheduler is a fully managed enterprise-grade cron job scheduler. It allows you to schedule virtually any job, including batch, big data jobs, cloud infrastructure operations, and more. You can automate everything, including retries in case of failure, to reduce manual toil and intervention. Hence this is a correct solution.

Reference: Cloud Scheduler: https://cloud.google.com/scheduler/

Domain: Build and Operationalize Data Processing Systems

Q3 : A pharmaceutical factory has over 100,000 different sensors generating JSON-format events every 10 seconds to be collected. You need to gather the event data for sensor & time series analysis.

Which database is best used to collect event data?

A. Google Storage

B. Cloud Spanner

C. Big Table

D. Datastore

Correct Answer: C

Explanation :

Cloud Bigtable is a petabyte-scale, fully managed NoSQL database service for large analytical and operational workloads. Its high write throughput makes it a good fit for time-series data such as sensor events.

Answer A is incorrect: Data stored in Google Storage needs further processing, using tools such as Apache Hive or Presto, before it is suitable for time-series analysis.

Answer B is incorrect: Cloud Spanner is a relational database service. It is not recommended for JSON-format data that may have a changing structure.

Answer D is incorrect: Datastore can be a potential choice since it’s a NoSQL database. The issue is, Datastore is not built for storing and reading huge data volumes as in this scenario. Datastore is designed for web applications of small scale.

Reference: Big Table vs. Datastore: https://stackoverflow.com/questions/30085326/google-cloud-bigtable-vs-google-cloud-datastore

Domain: Operationalize Machine Learning Models

Q4 : A financial services firm providing products such as credit cards and bank loans receives thousands of online applications from clients applying for its products. Because it takes a lot of effort to scan all applications and check whether they meet the minimum requirements for the products applied for, the firm wants to build a machine learning model that takes application fields like annual income, marital status, date of birth, occupation and other attributes as input and finds out if the applicant is qualified for the product the client applies for.

Which machine learning technique will help build such a model?

A. Regression

B. Classification

C. Clustering

D. Reinforcement learning

Correct Answer: B

Explanation :

A regression problem is a problem whose output variable is a continuous value. Problems that predict variables such as weights, prices or age are considered regression problems.

A classification problem is a problem whose output variable is a category. Examples of classification problems are finding a passenger’s nationality, detecting whether a patient has a disease, or deciding whether an applicant is qualified for a job interview.

Regression and classification are supervised learning problems. This means the machine learns from past experience by being trained on a labeled data set. A training set is a set of rows with input and output parameters. The machine learns from the training set and improves its parameters for better detection.

Clustering is an unsupervised learning method. Unsupervised learning finds relationships in input data without labeled output. The purpose is to find meaningful structure among inputs with similar features and group them. Clustering groups data points that share similarities and separates dissimilar points into other groups. Examples of clustering applications are customer segmentation (new, frequent, loyal, ..), grouping city land by value, and detecting anomalies in network traffic.

Reinforcement learning is a technique in which an agent takes actions, without a training set, to reach the highest possible reward. The agent learns by trial and error and decides what to do to perform a given task without supervision. The environment penalizes the agent for a wrong action and rewards it for achieving the task. Examples of reinforcement learning are asking an agent to play a maze game and reach the exit with traps along the way, or making an agent play and win a racing video game.

From the explanation above, we can see that the scenario, which is finding out whether a client is qualified for a product, is a classification problem: the output is a category (qualified or not qualified). So, answer B is correct.

Domain: Operationalize Machine Learning Models

Q5 : Data scientists are testing a TensorFlow model on Google Cloud using four NVIDIA Tesla P100 GPUs. After experimenting with several use cases, they decide to scale up by using a different machine type for testing. As a data engineer, you are responsible for helping choose the right machine type to reach better model performance. What should you do?

A. Use a TPU machine type to test the TensorFlow model.

B. Scale up machine type by using NVIDIA Tesla V100 GPUs.

C. Use 8 NVIDIA Tesla K80 GPUs instead of the current 4 P100 GPUs.

D. Increase number of Tesla P100 GPUs used until test results return satisfactory performance.

Correct Answer: A

Explanation :

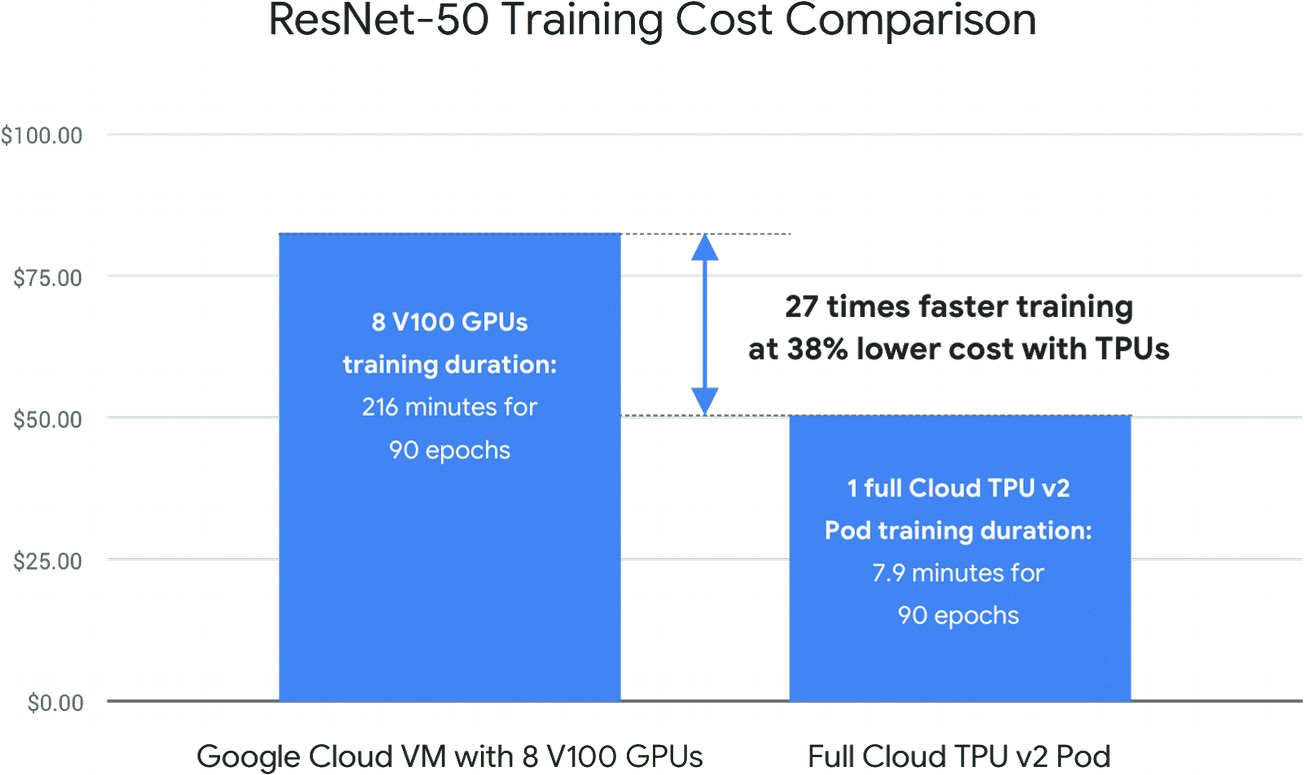

Google built the Tensor Processing Unit (TPU) in order to make it possible for data scientists to achieve business and research breakthroughs ranging from network security to medical diagnoses. Cloud TPU is the custom-designed machine learning ASIC that powers Google products like Translate, Photos, Search, Assistant, and Gmail.

So, for this scenario, a TPU machine type is the recommended choice for building TensorFlow models.

References: TPU Machine Type: https://cloud.google.com/tpu/

GPU Machine Type: https://cloud.google.com/gpu/

https://cloud.google.com/blog/products/ai-machine-learning/what-makes-tpus-fine-tuned-for-deep-learning

Using GPUs for training models in the cloud: https://cloud.google.com/ml-engine/docs/tensorflow/using-gpus

Domain: Operationalize Machine Learning Models

Q6 : The data scientists at your company have built a machine learning neural network model using TensorFlow. After several tests on the model, the team decides the model is ready to be deployed for production use. Which of the following services would you use to host the model on Google Cloud?

A. Google Kubernetes Engine

B. Google ML Deep Learning VM

C. Google Container Registry

D. Google Machine Learning Model

Correct Answer: D

Explanation :

Google Kubernetes Engine is a managed, production-ready environment for deploying containerized applications. It brings Google’s latest innovations in developer productivity, resource efficiency, automated operations, and open-source flexibility to accelerate your time to market.

Answer A is incorrect: GKE is a service to deploy and scale Docker containers in the cloud. To use it, you would need to build a Docker image for your model, which is not recommended for this scenario.

Answer B is incorrect: Google ML Deep Learning VM is a service that offers pre-configured virtual machines for deep learning applications. It is not used to deploy ML models to production.

Answer C is incorrect: Google Container Registry is a service to store, manage, and secure your Docker container images. It is not for deploying machine learning models.

Cloud Machine Learning Engine is a managed service that lets developers and data scientists build and run superior machine learning models in production. Cloud ML Engine offers training and prediction services, which can be used together or individually.

Answer D is correct: Google ML Model, hosted on Cloud Machine Learning Engine, is the service to use to deploy your machine learning models.

References: Google Kubernetes Engine: https://cloud.google.com/kubernetes-engine/, Google Machine Learning Engine: https://cloud.google.com/ml-engine/, Google ML Deep Learning VM: https://cloud.google.com/deep-learning-vm/

Domain: Ensure Solution Quality

Q7 : You launched a Dataproc cluster to perform some Apache Spark jobs. You are looking for a method to securely transfer web traffic data between your machine’s web browser and Dataproc cluster.

How can you achieve this?

A. FTP connection

B. SSH tunnel

C. VPN connection

D. Incognito mode

Correct Answer: B

Explanation :

Some of the core open source components included with Google Cloud Dataproc clusters, such as Apache Hadoop and Apache Spark, provide web interfaces. These interfaces can be used to manage and monitor cluster resources and facilities, such as the YARN resource manager, the Hadoop Distributed File System (HDFS), MapReduce, and Spark. Other components or applications that you install on your cluster may also provide web interfaces.

It is recommended to create an SSH tunnel for a secure connection between your web browser and Dataproc’s master node. SSH tunnel supports traffic proxying using the SOCKS protocol. To configure your browser to use the proxy, start a new browser session with proxy server parameters.
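The two steps described above (open a SOCKS tunnel, then start a browser that uses it) can be sketched as command lines assembled in Python. This is a minimal sketch under assumptions: the cluster, project and zone names are hypothetical, the master node is assumed to be named after the cluster with an `-m` suffix, and port 1080 is an arbitrary local port. The commands are only built and printed here, not executed.

```python
import shlex


def ssh_tunnel_command(cluster: str, project: str, zone: str, port: int = 1080) -> list:
    """Build a gcloud command that opens a SOCKS proxy to the cluster's master node."""
    master = f"{cluster}-m"  # assumed naming convention for the master node
    return [
        "gcloud", "compute", "ssh", master,
        f"--project={project}",
        f"--zone={zone}",
        "--",            # everything after this is passed to ssh itself
        "-D", str(port), # dynamic (SOCKS) port forwarding on localhost
        "-N",            # do not open a remote shell, only forward traffic
    ]


def browser_proxy_args(port: int = 1080) -> list:
    """Browser flag that routes traffic through the local SOCKS proxy."""
    return [f"--proxy-server=socks5://localhost:{port}"]


# Hypothetical names, for illustration only.
tunnel = ssh_tunnel_command("my-cluster", "my-project", "us-central1-a")
print(shlex.join(tunnel))
print(shlex.join(browser_proxy_args()))
```

Once the tunnel is running, a browser session started with the proxy flag reaches the cluster’s web interfaces through the encrypted SSH connection rather than over an open port.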

Reference: Dataproc – Cluster Web Interfaces: https://cloud.google.com/dataproc/docs/concepts/accessing/cluster-web-interfaces#connecting_to_the_web_interfaces

Domain: Design Data Processing Systems

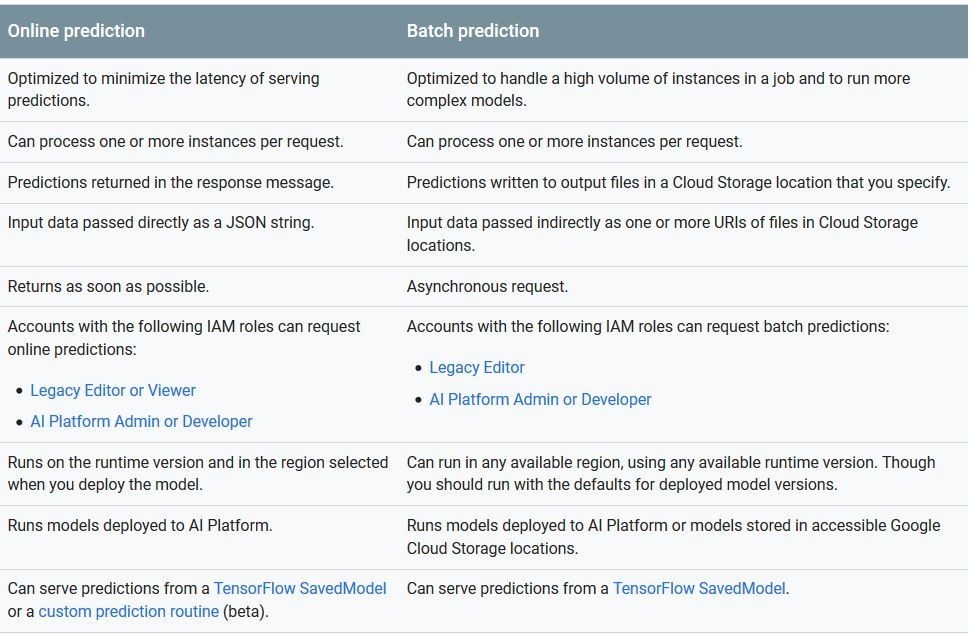

Q8 : You have deployed a TensorFlow machine learning model using Cloud Machine Learning Engine. The model should be able to handle a high volume of instances in a job and run complex models. The model should also write the output to Google Storage.

Which of the following approaches is recommended?

A. Use online prediction when using the model. Batch prediction supports asynchronous requests.

B. Use batch prediction when using the model. Batch prediction supports asynchronous requests.

C. Use batch prediction when using the model to return the results as soon as possible.

D. Use online prediction when using the model to return the results as soon as possible.

Correct Answer: B

Explanation :

Batch prediction can handle a high volume of instances in a job and run complex models. It also writes the output to a specified Google Storage location.

Answers A & D are incorrect: Online prediction is not designed to handle a high volume of instances per job and doesn’t write output to Google Storage.

Answer C is incorrect: Batch prediction doesn’t return the output as soon as possible; it processes requests asynchronously.

Reference: Online vs. Batch Prediction: https://cloud.google.com/ml-engine/docs/tensorflow/online-vs-batch-prediction

Domain: Design Data Processing Systems

Q9 : A company uses Airflow to orchestrate its data pipelines and DAGs (Directed Acyclic Graphs), installed and maintained on-premises by the DevOps team. The company wants to migrate the data pipelines managed in Airflow to Google Cloud. It is looking for a migration method that makes the DAGs available without extra code modifications, so the data pipelines can run as soon as they are migrated. Which service should you use?

A. App Engine

B. Cloud Function

C. Dataflow

D. Cloud Composer

Correct Answer: D

Explanation :

Cloud Composer is a fully managed workflow orchestration service built on Apache Airflow. Because it runs Airflow, existing DAGs can generally be moved to it with little or no code modification. Cloud Composer is built specifically to schedule and monitor workflows and take required actions. For example, you can use Cloud Composer to orchestrate a Dataflow pipeline and create a custom sensor that detects changes to a file and then triggers the Dataflow pipeline to run again.

Reference: Cloud Composer: https://cloud.google.com/composer/

Domain: Build and Operationalize Data Processing Systems

Q10 : An air-quality research facility monitors the quality of the air and raises alerts about possible high air pollution in a region. The facility receives event data from 25,000 sensors every 60 seconds. Event data is then used for time-series analysis per region. Cloud experts suggested using BigTable for storing event data.

How would you design the row key for each event in BigTable?

A. Use event’s timestamp as row key.

B. Use combination of sensor ID with timestamp as sensorID-timestamp.

C. Use combination of sensor ID with timestamp as timestamp-sensorID.

D. Use sensor ID as row key.

Correct Answer: B

Explanation :

Storing time-series data in Cloud Bigtable is a natural fit. Cloud Bigtable stores data as unstructured columns in rows; each row has a row key, and row keys are sorted lexicographically.

For time series, you should generally use tall and narrow tables. This is for two reasons:

- Storing one event per row makes it easier to run queries against your data.

- Storing many events per row makes it more likely that the total row size will exceed the recommended maximum.

When Cloud Bigtable stores rows, it sorts them by row key in lexicographic order. There is effectively a single index per table, which is the row key. Queries that access a single row, or a contiguous range of rows, execute quickly and efficiently. All other queries result in a full table scan, which will be far, far slower. A full table scan is exactly what it sounds like—every row of your table is examined in turn.

For Cloud Bigtable, where you could be storing many petabytes of data in a single table, the performance of a full table scan will only get worse as your system grows.

Choosing a row key that facilitates common queries is of paramount importance to the overall performance of the system. Enumerate your queries, put them in order of importance, and then design row keys that work for those queries.

From the description, you need to combine both sensor ID and timestamp in order to fetch data you want fast. So, answers A & D are incorrect.

If you start the row key with the timestamp, the most recent data will always be inserted at the bottom of the table, since rows are sorted in lexicographic order, so all writes land on the same part of the table. Starting the row key with the sensor ID keeps all of a sensor’s events together and distributes writes among nodes. So, answer B is correct and answer C is incorrect.
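The effect of a sensorID-timestamp key on ordering can be illustrated without a Bigtable client at all, because only lexicographic sorting matters. The sketch below is an assumption-laden illustration: the `#` separator, the zero-padded sensor IDs and the fixed-width UTC timestamp format are choices made for this example, and a plain sorted list stands in for the table.

```python
from datetime import datetime, timezone


def row_key(sensor_id: str, event_time: datetime) -> str:
    """Build a sensorID-first row key with a fixed-width UTC timestamp."""
    stamp = event_time.astimezone(timezone.utc).strftime("%Y%m%d%H%M%S")
    return f"{sensor_id}#{stamp}"


def scan_prefix(sorted_keys: list, sensor_id: str) -> list:
    """Return the contiguous run of keys that belong to one sensor."""
    prefix = f"{sensor_id}#"
    return [key for key in sorted_keys if key.startswith(prefix)]


# Hypothetical events arriving interleaved from two sensors.
events = [
    ("sensor-00002", datetime(2024, 1, 1, 0, 1, tzinfo=timezone.utc)),
    ("sensor-00001", datetime(2024, 1, 1, 0, 1, tzinfo=timezone.utc)),
    ("sensor-00002", datetime(2024, 1, 1, 0, 0, tzinfo=timezone.utc)),
    ("sensor-00001", datetime(2024, 1, 1, 0, 0, tzinfo=timezone.utc)),
]

# Lexicographic sorting groups each sensor's events and orders them by time.
sorted_keys = sorted(row_key(sensor, when) for sensor, when in events)
for key in sorted_keys:
    print(key)
```

Because every key for one sensor shares a prefix, reading a sensor’s history is a contiguous range read, while writes from different sensors arriving at the same moment fall in different parts of the key space.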

Reference: BigTable – Schema Design for Time Series Data: https://cloud.google.com/bigtable/docs/schema-design-time-series

Domain: Build and Operationalize Data Processing Systems

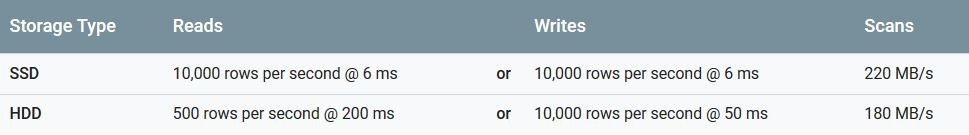

Q11 : Your company hosts a gaming app which reaches over 30,000 players in a single minute. The app generates event data including information about players’ state, score, location coordinates and other stats. You need to find a storage solution which supports high read/write throughput with very low latency, not exceeding 10 milliseconds, to ensure a quality experience for the players.

Which of the following is the best option for this scenario?

A. Cloud Spanner

B. BigQuery

C. BigTable

D. Datastore

Correct Answer: C

Explanation :

Cloud BigTable is a petabyte-scale, fully managed NoSQL database service for large analytical and operational workloads. Under a typical workload, Cloud BigTable delivers highly predictable performance. When everything is running smoothly, the performance each node in the Cloud Bigtable cluster can achieve depends on which type of storage the cluster uses (see the reference below).

Answer A is incorrect: Cloud Spanner does not guarantee the same performance and low latency as BigTable.

Answer B is incorrect: While BigQuery is a potential choice, it doesn’t provide throughput as high or latency as low as BigTable.

Answer D is incorrect: Datastore can be a potential choice since it’s a NoSQL database. The issue is, Datastore is not built for storing and reading huge data volumes as in this scenario. Datastore is designed for web applications of small scale.

Reference: Understanding BigTable Performance: https://cloud.google.com/bigtable/docs/performance

Domain: Operationalize Machine Learning Models

Q12 : An online learning platform wants to generate captions for its videos. The platform offers around 2,500 courses with topics about business, finance, cooking, development & science. The platform allows content in different languages such as French, German, Turkish and Thai. Captioning all available courses would be very difficult for a single team, so they are looking for an approach that helps with such a massive job.

Which Google Cloud product would you suggest they use?

A. Cloud Speech-to-Text.

B. Cloud Natural Language.

C. Machine Learning Engine.

D. AutoML Vision API.

Correct Answer: A

Explanation :

Answer A is correct: Cloud Speech-to-Text is a service that can generate captions for videos by detecting the speaker’s language and transcribing the speech.

Answer B is incorrect: Cloud Natural Language is a service that derives insights from unstructured text, revealing the meaning of documents and categorizing articles. It won’t help in extracting captions from videos.

Answer C is incorrect: Machine Learning Engine is a managed service that lets developers and scientists build their own models and run them in production. This means you would have to build your own model to generate text from videos, which needs much effort and experience. So, it’s not a practical solution for this scenario.

Answer D is incorrect: AutoML Vision API is a service to recognize and derive insights from images by either using pre-trained models or training a custom model based on a set of photographs.

References: Google NLP: https://cloud.google.com/natural-language/, Google Machine Learning Engine: https://cloud.google.com/ml-engine/, Google Vision API: https://cloud.google.com/vision, Google Speech-to-Text API: https://cloud.google.com/speech-to-text/

Domain: Operationalize Machine Learning Models

Q13 : You are building a machine learning model to solve a binary classification problem. The model is going to predict the likelihood of a customer to be using a fraudulent credit card when purchasing online.

Only a very small fraction of purchase transactions are proved to be fraudulent; more than 99% of the purchase transactions are valid.

You want to make sure the machine learning model is able to identify the fraudulent transactions. What is the technique to examine the effectiveness of the model?

A. Gradient Descent

B. Recall

C. Feature engineering

D. Precision

Correct Answer: B

Explanation :

Precision is the fraction of the transactions the model flags as positive (fraudulent) that are actually positive: true positives / (true positives + false positives).

Recall is the fraction of the actual positives (fraudulent transactions) that the model correctly identifies: true positives / (true positives + false negatives).

Gradient Descent is an optimization algorithm to find the minimal value of a function. Gradient descent is used to minimize the RMSE or another cost function.

Feature Engineering is the process of deciding which data is important for the model.

From the description, answers A & C are incorrect, since neither measures how effective a model is. It leaves us with B & D.

The scenario says that only a very small fraction of transactions are fraudulent, so there are far more negatives than positives, and the goal is to make sure the model identifies the fraudulent ones. A model that misses fraudulent transactions produces false negatives, which lower recall. Hence, to examine the effectiveness of the model, you should use recall.
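A small worked example may help show why recall, rather than overall accuracy, is the informative number when positives are rare. It uses the standard definitions, precision = TP / (TP + FP) and recall = TP / (TP + FN). The transaction counts below are invented for illustration and are not taken from the question.

```python
def precision(tp: int, fp: int) -> float:
    """Share of flagged transactions that really are fraudulent."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Share of fraudulent transactions that the model actually catches."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Share of all transactions that are classified correctly."""
    return (tp + tn) / (tp + tn + fp + fn)


# Hypothetical data set: 10,000 transactions, 50 of them fraudulent.
# Model A labels every transaction as valid.
model_a = {"tp": 0, "tn": 9950, "fp": 0, "fn": 50}
# Model B catches 40 of the 50 frauds at the cost of 60 false alarms.
model_b = {"tp": 40, "tn": 9890, "fp": 60, "fn": 10}

for name, m in (("A", model_a), ("B", model_b)):
    print(
        name,
        f"accuracy={accuracy(**m):.3f}",
        f"precision={precision(m['tp'], m['fp']):.2f}",
        f"recall={recall(m['tp'], m['fn']):.2f}",
    )
```

In this made-up example model A has the higher accuracy even though it never detects fraud, and only recall exposes that it misses every fraudulent transaction.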

References: Precision & Recall: https://developers.google.com/machine-learning/crash-course/classification/precision-and-recall, Gradient Descent: https://en.wikipedia.org/wiki/Gradient_descent, Feature Engineering: https://cloud.google.com/ml-engine/docs/tensorflow/data-prep

Domain: Operationalize Machine Learning Models

Q14 : You want to launch a Cloud Machine Learning Engine cluster to deploy a deep neural network model built with TensorFlow by the data scientists of your company. Reviewing the standard tiers offered by Google ML Engine, you could not find a tier that suits the data scientists’ requirements for the cluster. Google allows you to specify a custom cluster specification.

Which of the following specifications are you allowed to set?

A. workerCount

B. parameterServerCount

C. masterCount

D. workerMemory

Correct Answers: A and B

Explanation :

The Custom tier is not a set tier, but rather enables you to use your own cluster specification. When you use this tier, set values to configure your processing cluster according to these guidelines:

- You must set TrainingInput.masterType to specify the type of machine to use for your master node. This is the only required setting. See the machine types described below.

- You may set TrainingInput.workerCount to specify the number of workers to use. If you specify one or more workers, you must also set TrainingInput.workerType to specify the type of machine to use for your worker nodes.

- You may set TrainingInput.parameterServerCount to specify the number of parameter servers to use. If you specify one or more parameter servers, you must also set TrainingInput.parameterServerType to specify the type of machine to use for your parameter servers.

From the explanation, the specifications among the answers that can be set are workerCount & parameterServerCount.
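The three guidelines above amount to a small set of validation rules, which can be sketched as a check over a plain dictionary. This is not the service’s own validation: the `validate_custom_tier` helper is hypothetical, and the machine type strings are illustrative values that should be checked against the referenced machine types page.

```python
def validate_custom_tier(training_input: dict) -> list:
    """Return a list of problems found in a custom-tier cluster specification."""
    problems = []

    # masterType is the only setting that is always required.
    if not training_input.get("masterType"):
        problems.append("masterType is required")

    # Each optional count needs a matching machine type once it is 1 or more.
    for count_key, type_key in (
        ("workerCount", "workerType"),
        ("parameterServerCount", "parameterServerType"),
    ):
        if training_input.get(count_key, 0) >= 1 and not training_input.get(type_key):
            problems.append(f"{type_key} is required when {count_key} >= 1")

    return problems


# Illustrative specifications; the machine type names are assumptions.
good_spec = {
    "masterType": "complex_model_m",
    "workerCount": 4,
    "workerType": "complex_model_m",
    "parameterServerCount": 2,
    "parameterServerType": "large_model",
}
bad_spec = {"masterType": "complex_model_m", "workerCount": 4}

print(validate_custom_tier(good_spec))
print(validate_custom_tier(bad_spec))
```

Note that the dictionary has no key for a master count or for worker memory, which matches the answer: those are not settings the custom tier exposes in the guidelines quoted above.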

Reference: Specifying Machine Types or Scale Tiers: https://cloud.google.com/ml-engine/docs/tensorflow/machine-types

Domain: Design Data Processing Systems

Q15 : Your company is using multiple Google Cloud projects. Since maintaining and managing bills for these projects is becoming very complicated with time, management decided to unify the company’s projects into one and migrate all existing resources to a single project.

One of the projects to be migrated contains several Google Storage buckets with the total estimated file size of 25TB. This data is required to be moved to the newly created project. You need to find a secure and efficient method to migrate data. What Google Cloud product is best for this task?

A. gsutil command

B. Storage Transfer Service

C. Appliance Transfer Service

D. Dataproc

Correct Answer: B

Explanation :

Storage Transfer Service allows you to quickly import ONLINE data into Cloud Storage. You can also set up a repeating schedule for transferring data, as well as to transfer data within Cloud Storage, from one bucket to another.

Transfer Appliance is an OFFLINE, secure, high capacity storage server that you set up in your data center. You fill it with data and ship it to an ingest location where the data is uploaded to Google Cloud Storage.

So, option B is correct, while option C is incorrect.

Option A is incorrect: The gsutil tool is good for programmatic usage by developers and may be useful to copy and move megabytes/gigabytes of data. It’s not so practical for Terabytes of data. It’s also not a reliable data transfer technique as it is related to the machine’s connectivity with Google Cloud.

Option D is incorrect: Dataproc may help by reading from the source buckets and writing into the destination buckets, but this requires the data in the source buckets to be usable by Hadoop/Apache tools (partitioned, optimized file formats such as ORC, ..).

References: Google Cloud Storage Transfer Service: https://cloud.google.com/storage-transfer/docs/, Google Appliance Transfer Service: https://cloud.google.com/transfer-appliance/

Domain: Design Data Processing Systems

Q16 : Your company signed a contract with a retail chain store to handle its data processing applications and tech stack. One of the several applications to be implemented is an ETL pipeline that ingests the chain store’s daily purchase transaction logs, which are processed and stored for analysis and reporting and used to visualize the chain’s purchase details for senior management.

Daily transaction logs will be available at 2 am when the day is over and logs are exported to a Google Storage bucket partitioned by date in format (yyyy-mm-dd). The Dataflow pipeline should run every day at 3:00 am to ingest and process the logs. Which of the following Google products would help?

A. Cloud Function

B. Compute Engine

C. Cloud Scheduler

D. Kubernetes Engine

Correct Answer: C

Explanation :

Cloud Scheduler is a fully managed enterprise-grade cron job scheduler. It allows you to schedule virtually any job, including batch, big data jobs, cloud infrastructure operations, and more. You can automate everything, including retries in case of failure to reduce manual toil and intervention. Cloud Scheduler even acts as a single pane of glass, allowing you to manage all

Reference: Cloud Scheduler: https://cloud.google.com/scheduler/
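To make the daily 3:00 am run concrete, the sketch below pairs a cron expression in the usual five-field unix-cron form with a helper that works out which date partition a given run should read. The bucket name and the `previous_day_prefix` helper are hypothetical, and the exact path layout inside the bucket is an assumption based on the yyyy-mm-dd partitioning described in the question.

```python
from datetime import date, timedelta

# Five-field cron expression: minute 0, hour 3, every day of every month.
DAILY_3AM_CRON = "0 3 * * *"


def previous_day_prefix(run_date: date, bucket: str = "example-transaction-logs") -> str:
    """Return the bucket prefix holding the logs for the day before run_date.

    The 3:00 am run processes the day that has just ended, whose logs were
    exported at 2 am into a partition named yyyy-mm-dd.
    """
    log_date = run_date - timedelta(days=1)
    return f"gs://{bucket}/{log_date.isoformat()}/"


print(DAILY_3AM_CRON)
print(previous_day_prefix(date(2024, 3, 1)))
```

A scheduled job could pass a prefix like this to the pipeline as its input location, so each morning’s run reads only the partition exported an hour earlier.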

Domain: Build and Operationalize Data Processing Systems

Q17 : You receive payment transaction logs from e-wallet apps. The transaction logs have a dynamic structure which differs depending on the e-wallet app they are received from. Logs must be stored for further security analysis. The transaction logs are critical, and the data storage is expected to deliver high performance so that the required security metrics can be queried and updated in near-real time. Which of the following approaches should you use?

A. Use BigTable as a database with HDD storage to store system logs.

B. Use BigTable as a database with SSD storage to store system logs.

C. Use Datastore as a database to store system logs.

D. Use Firebase as a database to store system logs.

Correct Answer: B

Explanation :

When you create a Cloud Bigtable instance, you choose whether its clusters store data on solid-state drives (SSD) or hard disk drives (HDD):

- SSD is significantly faster and has more predictable performance than HDD.

- HDD throughput is much more limited than SSD throughput. In a cluster that uses HDD storage, it’s easy to reach the maximum throughput before CPU usage reaches 100%. To increase throughput, you must add more nodes, but the cost of the additional nodes can easily exceed your savings from using HDD storage. SSD storage does not have this limitation because it offers much more throughput per node.

- Individual row reads on HDD are very slow. Because of disk seek time, HDD storage supports only 5% of the read rows per second of SSD storage.

- The cost savings from HDD are minimal, relative to the cost of the nodes in your Cloud Bigtable cluster, unless you’re storing very large amounts of data.

Because the scenario requires high query performance in near-real time, SSD storage is the appropriate choice. So, answer B is correct.

References: Choosing Between SSD and HDD Storage: https://cloud.google.com/bigtable/docs/choosing-ssd-hdd, Querying Cloud Bigtable Data: https://cloud.google.com/bigquery/external-data-bigtable

Domain: Operationalize Machine Learning Models

Q18 : You have over 2,000 video clips with dialog scenes and you need to transcribe the dialog to text. Since transcribing this many clips can be time-consuming, you want to find a product in Google Cloud which can do this instead. Which of the following is best for this scenario?

A. AutoML Vision API.

B. Cloud Natural Language.

C. Machine Learning Engine.

D. Cloud Speech-to-Text.

Correct Answer: D

Explanation :

Cloud Speech-to-Text is a service that can generate captions for videos by detecting the speaker’s language and transcribing the speech. Google Cloud Speech-to-Text enables developers to convert audio to text by applying powerful neural network models in an easy-to-use API. The API recognizes 120 languages and variants to support your global user base. You can enable voice command-and-control, transcribe audio from call centers, and more. It can process real-time streaming or prerecorded audio, using Google’s machine learning technology.

Option A is incorrect: AutoML Vision API is a service to recognize and derive insights from images by either using pre-trained models or training a custom model based on a set of photographs.

Option B is incorrect: Cloud Natural Language is a service used to derive insights from unstructured text, revealing the meaning of documents and categorizing articles. It won’t help in extracting captions from videos.

Option C is incorrect: Machine Learning Engine is a managed service that allows developers and scientists to build their own models and run them in production. This means you have to build your own model to generate text from videos which needs much effort and experience to build such a model. So, it’s not a practical solution for this scenario.

References: Google Speech-to-Text API: https://cloud.google.com/speech-to-text/, Google NLP: https://cloud.google.com/natural-language/, Google Machine Learning Engine: https://cloud.google.com/ml-engine/, Google Vision API: https://cloud.google.com/vision

Domain: Operationalize Machine Learning Models

Q19 : You are building a model using TensorFlow. After training, the results show that the model returns 73% true positives. When you tested the model with a set derived from real data, you noticed that true positives decreased to 65%. You need to tune the model for better prediction. What would you do?

A. Increase feature parameters

B. Increase regularization

C. Decrease feature parameters

D. Decrease regularization

Correct Answers: B and C

Explanation :

Overfitting happens when a model performs well on the training set, producing only a small error, but gives wrong output on the test set. This happens because the model picks up features specific to the training set instead of the general patterns in the data.

To solve overfitting, the following would help in improving the model’s quality:

- Increase the number of examples. The more data a model is trained on, the more use cases it sees and the better its predictions become.

- Tune hyperparameters related to the number and size of hidden layers (for neural networks), and apply regularization, which means using techniques that make your model simpler, such as dropout to remove neurons during training or adding “penalty” terms to the cost function.

- Remove irrelevant features. Feature engineering is a wide subject, and feature selection is a critical part of building and training a model. Some algorithms have built-in feature selection, but in some cases data scientists need to manually select or remove features for debugging and finding the best model output.

From this brief explanation, to solve the overfitting problem in the scenario, you need to:

- Increase the training set.

- Decrease feature parameters.

- Increase regularization.
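The effect of adding a “penalty” term to the cost function can be sketched with a one-feature least-squares model. This is a minimal illustration and not TensorFlow code: the function name `ridge_weight`, the toy data, and the penalty strengths are all assumptions chosen so the arithmetic is easy to follow.

```python
def ridge_weight(xs, ys, lam):
    """Closed-form weight for one-feature least squares with an L2 penalty.

    Minimizes sum((w * x - y) ** 2) + lam * w ** 2,
    which gives w = sum(x * y) / (sum(x * x) + lam).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


# Toy data (hypothetical): y is exactly 2 * x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w_plain = ridge_weight(xs, ys, lam=0.0)   # no regularization
w_reg = ridge_weight(xs, ys, lam=14.0)    # stronger penalty shrinks the weight

print(w_plain, w_reg)
```

A larger penalty pulls the weight toward zero, which makes the model simpler and less able to memorize quirks of the training set.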

Reference: Building a serverless Machine learning model: https://cloud.google.com/solutions/building-a-serverless-ml-model

Domain: Operationalize Machine Learning Models

Q20 : You are building a machine learning model using TensorFlow. The model aims to predict the location, approximate time, and Richter scale magnitude of the next earthquake based on data records since 1913. The model needs tuning over a number of training epochs to reach higher accuracy. Which of the following variables are used for hyperparameter tuning?

A. Number of features

B. Number of hidden layers

C. Number of nodes in hidden layers

D. Weight values

Correct Answers: B and C

Explanation :

Hyperparameters are the variables that govern the training process itself. For example, part of setting up a deep neural network is deciding how many hidden layers of nodes to use between the input layer and the output layer, and how many nodes each layer should use. These variables are not directly related to the training data but these are configuration variables. Note that parameters change during a training job, while hyperparameters are usually constant during a job.

Option A is incorrect: The number of features is set by feature engineering, not hyperparameter tuning.

Option D is incorrect: Weight values are set while training the model.
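To make the distinction concrete, the sketch below shows how two hyperparameters (number of hidden layers and nodes per layer) fix the shape of a dense network before training starts, while the weights are simply the values that fill those shapes during training. The helper names and the example sizes are hypothetical, and this is plain Python and not TensorFlow code.

```python
def layer_shapes(num_features, hidden_layers, nodes_per_layer, num_outputs):
    """Return (inputs, outputs) pairs for each dense layer of the network."""
    sizes = [num_features] + [nodes_per_layer] * hidden_layers + [num_outputs]
    return list(zip(sizes[:-1], sizes[1:]))


def count_trainable_parameters(shapes):
    """Each dense layer has inputs * outputs weights plus one bias per output."""
    return sum(inputs * outputs + outputs for inputs, outputs in shapes)


# Hyperparameters: chosen before training and constant during a job.
shapes = layer_shapes(num_features=4, hidden_layers=2, nodes_per_layer=8, num_outputs=3)

# Parameters: the weight values that training will adjust.
total_parameters = count_trainable_parameters(shapes)

print(shapes, total_parameters)
```

Changing `hidden_layers` or `nodes_per_layer` changes how many weights exist at all, which is why they are tuned outside the training loop.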

Reference: Hyperparameter Tuning: https://cloud.google.com/ml-engine/docs/tensorflow/hyperparameter-tuning-overview

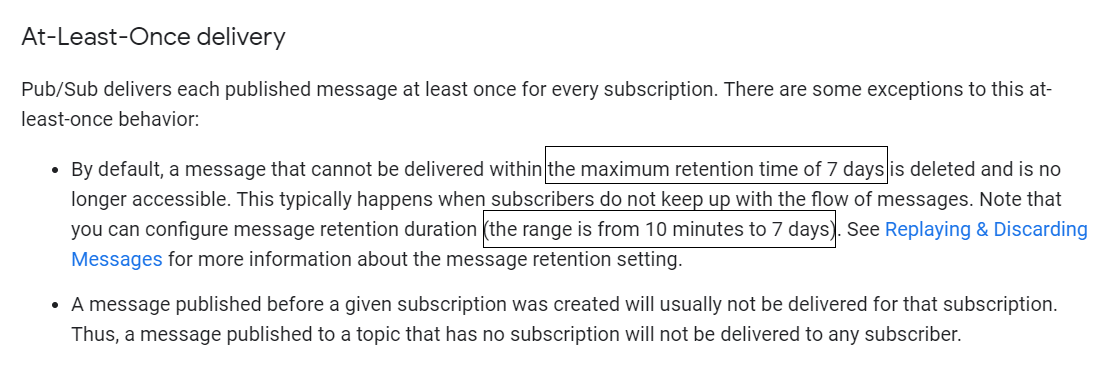

Q21 : Choose all the statements that are correct for Cloud Pub/Sub.

A. Cloud Pub/Sub has a default retention period of 7 days.

B. The retention period for Cloud Pub/Sub is configurable and can be set to a maximum of 28 days and a minimum of 10 minutes.

C. The retention period for Cloud Pub/Sub is configurable and can be set to a maximum of 7 days and a minimum of 10 minutes.

D. The retention period for Cloud Pub/Sub is not configurable and is set to 7 days by default.

Correct Answers: A and C

Explanation :

Option A is correct. Cloud Pub/Sub has a default retention period of 7 days.

Option B is incorrect. The Cloud Pub/Sub retention period is configurable, but only up to a maximum of 7 days, with a minimum of 10 minutes.

Option C is correct. The Cloud Pub/Sub retention period is configurable and can be set to a maximum of 7 days and a minimum of 10 minutes.

Option D is incorrect. The Cloud Pub/Sub retention period is configurable.

Q22 : You receive bank transaction data through Cloud Pub/Sub and you need to analyze the data using Cloud Dataflow. The transactions are in the format below:

2INDEL3465, JACK, 34627,DOLLAR,20191205234251000,D

1USCHG5627, SAM, 1276, DOLLAR, 20191205234252562,C

Currently the requirement is to extract customer name from the transaction and store the results in an output PCollection. Select the operation which is best suited for this processing.

A. Regex.find

B. ParDo

C. Extract

D. Transform

Correct Answer: B

Explanation :

Option A is incorrect. Regex.find outputs the regex group for each line that matches the regex. In this case, we need the customer name to be extracted and placed into another PCollection for further processing.

Option B is correct. ParDo helps in extracting parts of elements. It can also be used for filtering a dataset: ParDo considers each element in a PCollection and either outputs that element to a new collection or discards it.

Option C is incorrect. There is no Extract operation in Cloud Dataflow.

Option D is incorrect. A transform is a step in your pipeline that represents a data processing operation, so it is a general concept and not a specific operation.
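The per-element logic that such a ParDo would run can be sketched in plain Python. The function name `extract_customer_name` is hypothetical, and the list comprehension is only a stand-in for applying the function to a PCollection; the sketch assumes the customer name is always the second comma-separated field, as in the sample records.

```python
def extract_customer_name(record):
    """Yield the customer name (second comma-separated field) of one transaction.

    Written as a generator so it emits zero or one output per input element,
    the same per-element shape a ParDo processing function has.
    """
    fields = [field.strip() for field in record.split(",")]
    if len(fields) >= 2 and fields[1]:
        yield fields[1]


transactions = [
    "2INDEL3465, JACK, 34627,DOLLAR,20191205234251000,D",
    "1USCHG5627, SAM, 1276, DOLLAR, 20191205234252562,C",
]

# Plain-Python stand-in for applying the function to every element.
customer_names = [
    name for record in transactions for name in extract_customer_name(record)
]
print(customer_names)
```

In the Apache Beam Python SDK, a generator like this could be applied with `beam.FlatMap(extract_customer_name)`, or the same logic could live in the `process` method of a `beam.DoFn` passed to `beam.ParDo`.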

Q23 : You have some on-premises Hadoop jobs that the company’s management has decided to move to Google Cloud Dataproc. A few of the jobs will keep running on the on-premises Hadoop cluster, while the others will run on Cloud Dataproc. You need to orchestrate these jobs and add the required dependencies between your on-premises and Dataproc jobs. However, the company doesn’t want any vendor lock-in and may move to AWS in the future. The orchestration framework must be able to accommodate these future changes. Select the best option provided by GCP with very little overhead.

A. Cloud Composer

B. Apache Airflow

C. Apache Oozie

D. Cloud Scheduler

Correct Answer: A

Explanation :

Option A is correct. Cloud Composer allows you to pull workflows together from wherever they live, supporting a fully-functioning and connected cloud environment. Since Cloud Composer is built on Apache Airflow – an open-source technology – it provides freedom from vendor lock-in as well as integration with a wide variety of platforms. Cloud Composer can also connect to on-premises databases; for details, refer to this link: https://www.progress.com/tutorials/cloud-and-hybrid/connect-to-on-premises-databases-from-google-composer

Option B is incorrect. The question asks for a service provided by GCP, and Cloud Composer is the managed service built on top of Apache Airflow, so Cloud Composer is the correct answer.

Option C is incorrect. Orchestration with Apache Oozie does not support connecting to on-premises and Dataproc jobs at the same time. Also, Oozie can only be run with Hadoop, and there is no managed service for Oozie in GCP.

Option D is incorrect. Cloud Scheduler is a fully managed cron job scheduler. This scenario needs an orchestration framework that handles dependencies between jobs, so Cloud Scheduler is not the correct answer.

Domain: Design Data Processing Systems

Q24 : A regional auto dealership is migrating its business applications to Google Cloud. The CTO has asked the data engineer to identify the possible ways to ingest data into BigQuery. Which of the following apply?

A. Batch Ingestion & Streaming Ingestion

B. Data Transfer Service

C. Query Materialization

D. Partner Integrations

E. All of the above

Correct Answer: E

Explanation :

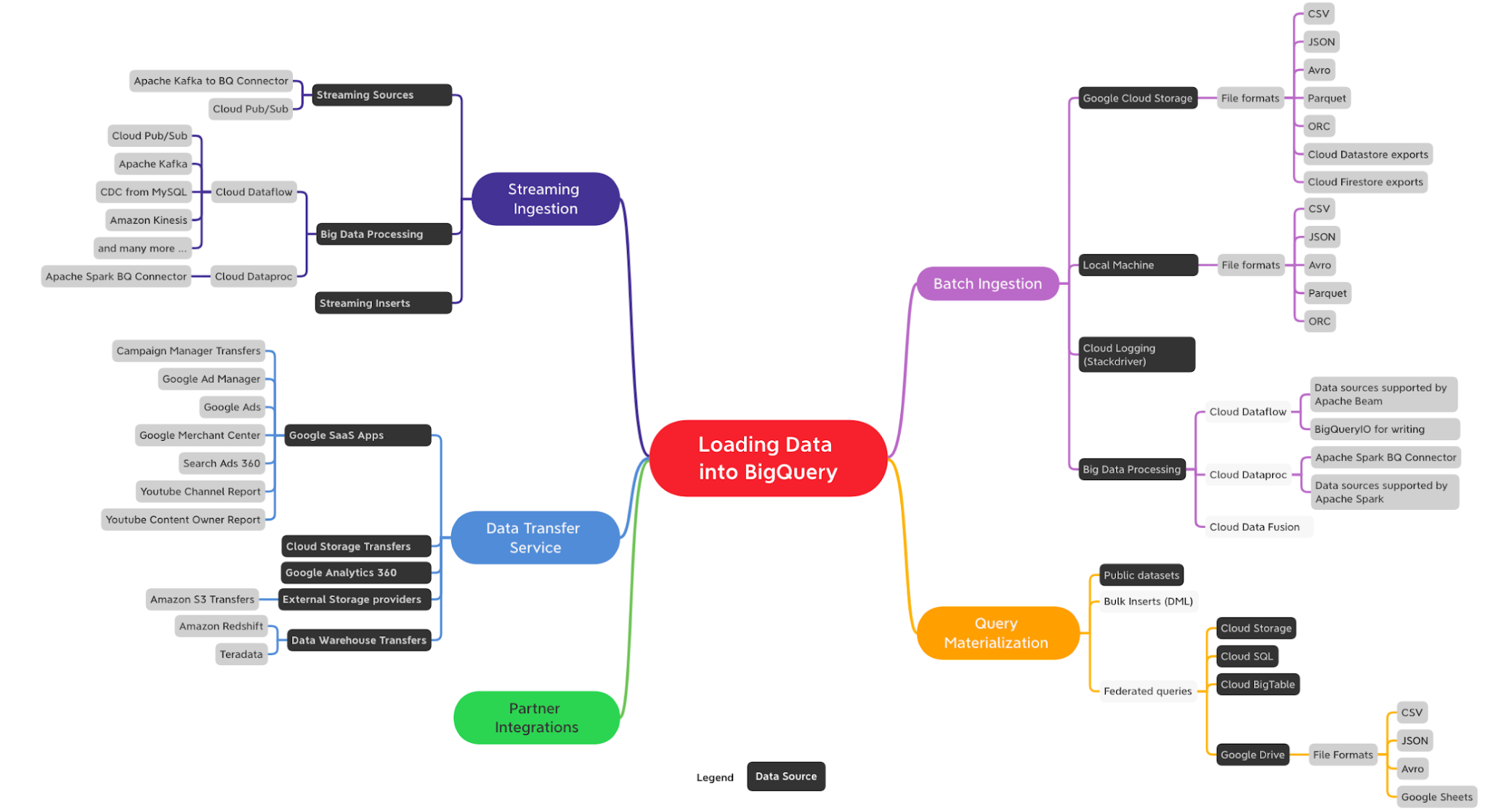

Option E is CORRECT because all of these are possible ways to load data into BigQuery. Let’s go through them one by one –

Batch Ingestion – This involves ingesting large, bounded datasets that don’t have to be processed in real-time.

To implement batch ingestion, one can use Google Cloud Storage, Cloud Dataflow, Cloud Dataproc, Cloud Data Fusion etc.

Stream Ingestion – This involves ingesting large, unbounded data that is processed in real-time.

To implement Stream Ingestion, one can use Apache Kafka to BQ connector, Cloud Pub/Sub, Cloud Dataflow, Cloud Dataproc etc.

Data Transfer Service (DTS) – This is a fully managed service that loads data from external cloud storage providers such as Amazon S3, from Google SaaS applications such as Google Ads, and from data warehouse technologies such as Teradata.

Partner Integrations – These are the data integration alternatives from Google Cloud Partners, including Confluent, Informatica, SnapLogic, Talend and many more.

Query Materialization – This is the best way to simplify extract, transform and load patterns in BigQuery. Using federated queries in BigQuery, one can persist analysis results in BigQuery to derive insights.

The attached image shows details of all the possible ways to ingest data into BigQuery.

Domain: Design Data Processing Systems

Q25 : Your organization is looking for a fully managed, cloud-native data integration service. Their engineers are very familiar with CDAP (Cask Data Application Platform).

Which managed service in Google Cloud would you recommend?

A. Cloud Dataproc

B. Cloud Composer

C. Cloud Dataflow

D. Cloud Data Fusion

Correct Answer: D

Explanation :

Option D is CORRECT because Cloud Data Fusion is built with an open-source core (CDAP) for pipeline portability.

This also provides end-to-end data lineage for root cause analysis.

Option A is incorrect because Cloud Dataproc is a fully managed and highly scalable service for running Apache Flink, Apache Spark, and other applications.

Option B is incorrect because Cloud Composer is a fully managed data-workflow orchestration service.

Option C is incorrect because Cloud Dataflow is a fully managed streaming analytics service.

For more information on Google Cloud Data Fusion, please visit the URL below: https://cloud.google.com/data-fusion

Domain: Maintaining and Automating Data Workloads

Q26: A company needs to process large volumes of data for business-critical processes while minimizing costs. They are considering using Dataproc for their data processing needs. What should they consider when deciding between persistent or job-based data clusters in Dataproc?

A) Persistent clusters offer fixed capacity and are suitable for continuous, long-running processes, while job-based clusters are more cost-effective for short-lived, ad-hoc tasks.

B) Job-based clusters provide greater flexibility and cost efficiency for intermittent workloads, while persistent clusters are ideal for predictable, ongoing data processing tasks.

C) Persistent clusters are recommended for batch processing tasks due to their ability to scale resources dynamically, while job-based clusters are better suited for interactive query jobs.

D) Job-based clusters offer better fault tolerance and error recovery mechanisms, making them suitable for mission-critical data processes, while persistent clusters are more cost-effective for one-time data transformations.

Answer: B

Explanation:

Option B is CORRECT as job-based clusters in Dataproc provide flexibility and cost efficiency for intermittent workloads by provisioning resources only when needed, while persistent clusters are better suited for predictable, ongoing data processing tasks where resources are continuously required.

Option A is incorrect because persistent clusters may not be the most cost-effective option for short-lived, ad-hoc tasks due to their continuous resource allocation.

Option C is incorrect because persistent clusters are not specifically recommended for batch processing tasks, and job-based clusters can also handle batch processing effectively.

Option D is incorrect because fault tolerance and error recovery mechanisms are not distinguishing factors between persistent and job-based clusters in Dataproc. Both types of clusters offer fault tolerance capabilities.
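The cost argument for intermittent workloads comes down to simple arithmetic: a persistent cluster is billed for every hour it exists, while a job-based cluster is billed only while the job runs. The sketch below uses a made-up hourly rate and a made-up job duration purely for illustration; neither is a real Dataproc price.

```python
def monthly_cost(hourly_rate, hours_running):
    """Cost of keeping a cluster up for the given number of hours."""
    return hourly_rate * hours_running


HOURLY_RATE = 2.0        # hypothetical cluster cost per hour
DAYS_IN_MONTH = 30
JOB_HOURS_PER_DAY = 3    # hypothetical: the workload only needs 3 hours a day

# Persistent cluster: up around the clock, whether or not jobs are running.
persistent_cost = monthly_cost(HOURLY_RATE, 24 * DAYS_IN_MONTH)

# Job-based cluster: created for each run and deleted afterwards.
job_based_cost = monthly_cost(HOURLY_RATE, JOB_HOURS_PER_DAY * DAYS_IN_MONTH)

print(persistent_cost, job_based_cost)
```

As the daily job hours approach 24, the two figures converge, which is why persistent clusters make sense for predictable, ongoing processing.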

Reference Link: https://cloud.google.com/dataproc/docs/concepts/compute/clusters-datalab-flex

Domain: Maintaining and Automating Data Workloads

Q27: An organization wants to automate its data processing workflows using Cloud Composer. They need to schedule jobs in a repeatable manner to ensure the timely execution of critical tasks. What approach should they take to achieve this goal effectively?

A) Utilize Cloud Functions to trigger workflows based on predefined schedules and dependencies.

B) Implement directed acyclic graphs (DAGs) in Cloud Composer to define workflow dependencies and schedule job execution.

C) Use Cloud Scheduler to define and manage job schedules, and then trigger workflow execution in Cloud Composer.

D) Leverage Cloud Tasks to create and manage task queues for scheduling and orchestrating data processing jobs in Cloud Composer.

Answer: B

Explanation:

Option B is CORRECT as directed acyclic graphs (DAGs) in Cloud Composer allow organizations to define workflow dependencies and schedule job execution in a repeatable and reliable manner, ensuring timely execution of critical tasks.

Option A is incorrect because while Cloud Functions can trigger workflows based on schedules and dependencies, they do not provide the same level of orchestration and scheduling capabilities as Cloud Composer DAGs.

Option C is incorrect because Cloud Scheduler is primarily used for managing job schedules, but it does not offer workflow orchestration capabilities like Cloud Composer DAGs.

Option D is incorrect because Cloud Tasks is more suitable for managing task queues and asynchronous task execution, rather than scheduling and orchestrating data processing workflows.
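The core idea of a DAG, that each task runs only after the tasks it depends on, can be sketched with Python's standard-library `graphlib` module. This is not Airflow or Cloud Composer code, and the task names are hypothetical; it only illustrates how declared dependencies determine a valid execution order.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical workflow: each key maps to the set of tasks it depends on.
dependencies = {
    "transform": {"extract_sales", "extract_inventory"},
    "load_warehouse": {"transform"},
    "notify": {"load_warehouse"},
}

# static_order() yields the tasks so that every dependency comes first.
execution_order = list(TopologicalSorter(dependencies).static_order())
print(execution_order)
```

The two extract tasks have no dependency on each other, so either may come first; an orchestrator is free to run such tasks in parallel.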

Reference Link: https://cloud.google.com/composer/docs/concepts/dags

Domain: Maintaining and Automating Data Workloads

Q28: Your organization needs to organize data workloads based on business requirements, considering factors like flexibility, capacity, and pricing models. Which pricing model offers fixed capacity and is best suited for predictable, steady workloads?

A) Flex slot pricing

B) On-demand pricing

C) Flat-rate slot pricing

D) Pay-as-you-go pricing

Answer: C

Explanation:

Option C is CORRECT as flat-rate slot pricing offers fixed capacity for predictable workloads. It allows users to pay a flat rate for a set number of slots, regardless of usage, providing stability in pricing for steady workloads.

Option A is incorrect because flex slot pricing offers flexibility and dynamically allocates slots based on demand, making it suitable for fluctuating workloads.

Option B is incorrect because on-demand pricing charges users based on usage, without any fixed commitments, making it suitable for sporadic or unpredictable workloads.

Option D is incorrect because pay-as-you-go pricing is similar to on-demand pricing and charges users based on actual usage, without fixed commitments.

Reference Link: https://cloud.google.com/bigquery/pricing#flat-rate-pricing

Domain: Maintaining and Automating Data Workloads

Q29: Your organization needs to ensure the observability of data processes, monitor planned usage, and troubleshoot error messages and billing issues effectively. Which Google Cloud service provides centralized logging and monitoring capabilities for data processes?

A) Google Cloud Monitoring

B) Google Cloud Logging

C) Google Cloud Trace

D) Google Cloud Audit Logging

Answer: A

Explanation:

Option A is CORRECT as Google Cloud Monitoring provides centralized logging and monitoring capabilities for Google Cloud services, including data processes such as Dataproc and Dataflow. It enables users to collect, view, and analyze metrics, logs, and other monitoring data across their Google Cloud environment.

Option B is incorrect because while Google Cloud Logging offers centralized logging capabilities, it does not provide comprehensive monitoring functionalities for data processes such as planned usage monitoring and error troubleshooting.

Option C is incorrect because Google Cloud Trace is primarily focused on distributed application tracing, providing insights into application performance, rather than centralized logging and monitoring for data processes.

Option D is incorrect because Google Cloud Audit Logging is specifically designed for tracking and logging user access and system activity within Google Cloud Platform services, not for monitoring data processes.

Reference Link: https://cloud.google.com/monitoring

Domain: Maintaining and Automating Data Workloads

Q30: Your organization operates critical data processes in a Google Cloud environment. You need to decide between persistent or job-based data clusters for processing large-scale data workloads efficiently. What should you consider when making this decision?

A) Evaluate the cost-effectiveness of persistent clusters based on long-term utilization trends.

B) Implement job-based clusters to ensure scalability and resource optimization for irregular workloads.

C) Analyze the availability of resources in persistent clusters to ensure uninterrupted data processing.

D) Use persistent clusters to avoid the overhead of cluster initialization and termination.

Answer: B

Explanation:

Option B is CORRECT as implementing job-based clusters allows for scalability and resource optimization, especially for irregular workloads, ensuring efficient utilization of resources without incurring unnecessary costs.

Option A is incorrect because while evaluating the cost-effectiveness of persistent clusters is important, it may not address the scalability and optimization needs for sporadic workloads.

Option C is incorrect because analyzing resource availability in persistent clusters may ensure uninterrupted processing but may not offer the flexibility needed for varying workloads.

Option D is incorrect because opting for persistent clusters to avoid initialization and termination overhead may lead to underutilization of resources and increased costs for sporadic workloads.

Reference Link: https://cloud.google.com/dataproc/docs/concepts/compute

Domain: Preparing and Using Data for Analysis

Q31: A prominent e-commerce platform is revamping its data visualization dashboard to display real-time sales analytics. The dashboard is expected to handle a substantial number of concurrent users and deliver quick visualizations with minimal latency. Which approach should they take to optimize the dashboard’s performance?

- A) Employ BigQuery BI Engine with precomputed materialized views.

- B) Implement BigQuery BI Engine with virtualized logical views.

- C) Utilize BigQuery BI Engine with real-time streaming data integration.

- D) Integrate BigQuery BI Engine with access-controlled authorized views.

Answer: A

Explanation:

Option A is CORRECT because leveraging BigQuery BI Engine with precomputed materialized views allows for quick access to aggregated data, resulting in faster visualization rendering and minimal latency, which aligns with the requirements of the e-commerce platform’s data visualization dashboard.

Option B is incorrect because virtualized logical views may introduce additional processing overhead and latency, which could impact the performance of the dashboard, especially under high concurrent user loads.

Option C is incorrect because while real-time streaming data integration can provide up-to-date insights, it may not offer the same level of performance optimization as precomputed materialized views for handling large volumes of concurrent user requests.

Option D is incorrect because access-controlled authorized views focus on security rather than performance optimization for data visualization dashboards.

References: https://cloud.google.com/bigquery/docs/materialized-views-intro

Domain: Preparing and Using Data for Analysis

Q32: Your organization aims to consolidate and analyze a vast amount of genomic sequencing data, totaling over 20 TB, from various sources. The data must be stored in new tables for further query and analytics, accessible via SQL, with a low-maintenance architecture. What is the most cost-effective solution to support data analytics for such large datasets?

- A) Implement Cloud SQL, organize the data into tables, and utilize JOIN operations in SQL queries to retrieve data.

- B) Use BigQuery as a data warehouse solution, configuring output destinations for caching large query results.

- C) Deploy a MySQL cluster on a Compute Engine managed instance group for scalability and SQL access.

- D) Utilize Cloud Spanner to replicate the data across regions and normalize it in a series of tables.

Answer: B

Explanation:

Option B is CORRECT because using BigQuery as a data warehouse solution offers scalability, low maintenance, and efficient handling of large datasets for data analytics. Setting output destinations for caching large query results ensures quick access to previously processed data, aligning with the organization’s requirements.

Option A is incorrect because while Cloud SQL offers SQL access and table organization, it may not be as scalable and cost-effective for analyzing large datasets compared to BigQuery.

Option C is incorrect because managing a MySQL cluster on Compute Engine instances may require more maintenance and management overhead, and it may not provide the same level of scalability and cost-effectiveness as BigQuery for analyzing large datasets.

Option D is incorrect because while Cloud Spanner offers scalability and replication features, it may not be optimized for data analytics and querying large datasets compared to BigQuery.

References: https://cloud.google.com/bigquery

Domain: Preparing and Using Data for Analysis

Q33: Your organization utilizes a dataset in BigQuery for extensive analysis. Now, you intend to grant access to the same dataset for third-party companies while keeping data sharing costs low and ensuring data currency. Which solution should you choose?

- A) Utilize Analytics Hub to manage data access and provide third-party companies with access to the dataset.

- B) Implement Cloud Scheduler to regularly export the data to Cloud Storage and grant third-party companies access to the bucket.

- C) Create a separate dataset in BigQuery containing the relevant data for sharing and grant access to third-party companies for the new dataset.

- D) Develop a Dataflow job to periodically read the data and write it to the appropriate BigQuery dataset or Cloud Storage bucket for third-party usage.

Answer: A

Explanation:

Option A is CORRECT because leveraging Analytics Hub allows centralized management of data access, ensuring security and control while providing third-party companies with access to the dataset. This solution helps maintain low data-sharing costs and ensures data currency.

Option B is incorrect because while using Cloud Scheduler for data export may provide regular updates, it may not offer the same level of control and access management as Analytics Hub for third-party companies.

Option C is incorrect because creating a separate dataset could lead to redundancy and increased management overhead. It may also not provide the necessary control and access management features offered by Analytics Hub.

Option D is incorrect because although Dataflow can automate data movement, it may not be the most efficient solution for managing data access and ensuring currency for third-party companies.

References: https://cloud.google.com/analytics-hub

Domain: Preparing and Using Data for Analysis

Q34: Your team is developing an application on Google Cloud aimed at automatically generating subject labels for users’ blog posts. Due to competitive pressure and limited developer resources, you need to implement this feature quickly, with no prior experience in machine learning. What approach should you take?

- A) Integrate the Cloud Natural Language API into your application and process the generated Entity Analysis results as labels.

- B) Utilize the Cloud Natural Language API within your application and process the generated Sentiment Analysis as labels.

- C) Develop and train a text classification model using TensorFlow, deploy it using Cloud Machine Learning Engine, and call the model from your application to process the results as labels.

- D) Create and train a text classification model using TensorFlow, deploy it using a Kubernetes Engine cluster, and call the model from your application to process the results as labels.

Answer: A

Explanation:

Option A is CORRECT because leveraging the Cloud Natural Language API allows for quick implementation of subject labels without the need for machine learning expertise. By processing the generated Entity Analysis results, the application can efficiently generate subject labels for blog posts.

Option B is incorrect because while Sentiment Analysis could provide insights into the sentiment of blog posts, it may not be suitable for generating subject labels.

Option C is incorrect because building and deploying a custom text classification model using TensorFlow and Cloud Machine Learning Engine would require significant time and expertise, which contradicts the requirement for a quick implementation.

Option D is incorrect because deploying a TensorFlow model using a Kubernetes Engine cluster would also require considerable effort and resources, making it unsuitable for the scenario described.
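Turning Entity Analysis results into labels could look roughly like the sketch below. It assumes the results have already been fetched and simplified into dictionaries with `name` and `salience` keys (field names modeled on the API's entity output); the function name, thresholds, and sample data are hypothetical and hand-written, not real API output.

```python
def labels_from_entities(entities, max_labels=3, min_salience=0.1):
    """Pick the most salient, de-duplicated entity names as subject labels."""
    ranked = sorted(entities, key=lambda entity: entity["salience"], reverse=True)
    labels = []
    for entity in ranked:
        name = entity["name"].lower()
        # Skip weakly related entities and case-insensitive duplicates.
        if entity["salience"] >= min_salience and name not in labels:
            labels.append(name)
    return labels[:max_labels]


# Hand-written sample shaped like simplified entity results.
sample_entities = [
    {"name": "BigQuery", "salience": 0.55},
    {"name": "Dataflow", "salience": 0.25},
    {"name": "bigquery", "salience": 0.12},
    {"name": "weekend", "salience": 0.03},
]

labels = labels_from_entities(sample_entities)
print(labels)
```

The salience cutoff is a design choice: a higher value gives fewer, more focused labels, while a lower value admits entities that are only mentioned in passing.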

References: https://cloud.google.com/natural-language

Domain: Preparing and Using Data for Analysis

Q35: Your company has onboarded a new data scientist who needs to conduct complex analyses across extensive datasets stored in Google Cloud Storage and a Cassandra cluster on Google Compute Engine. The data scientist aims to create labeled datasets for machine learning projects and perform visualization tasks. However, she finds her current laptop insufficient for these tasks, causing significant slowdowns. What solution should you provide to facilitate her work?

- A) Install a local instance of Jupyter Notebook on the data scientist’s laptop.

- B) Grant the data scientist access to Google Cloud Shell for performing analyses and visualization tasks.

- C) Deploy a visualization tool on a virtual machine (VM) hosted on Google Compute Engine for the data scientist’s use.

- D) Deploy Google Cloud Datalab to a virtual machine (VM) on Google Compute Engine to enable the data scientist to perform analyses and visualizations efficiently.

Answer: D

Explanation:

Option D is CORRECT because Google Cloud Datalab provides a powerful and interactive toolset tailored for data exploration, analysis, and visualization. Deploying Datalab to a virtual machine (VM) on Google Compute Engine ensures that the data scientist has access to the necessary computational resources and tools to handle large datasets efficiently.

Option A is incorrect because installing a local instance of Jupyter Notebook on the data scientist’s laptop may not address the performance limitations caused by the large datasets.

Option B is incorrect because while Google Cloud Shell provides command-line access to Google Cloud Platform resources, it may not offer the computational power required for handling extensive datasets and performing complex analyses.

Option C is incorrect because hosting a visualization tool on a VM on Google Compute Engine does not address the data scientist’s need for a comprehensive data exploration and analysis environment.

References: https://cloud.google.com/datalab

Summary

We hope you were able to get insights on the certification and feel a little more confident now. For more explanations and practice, follow our exam-ready Practice Tests. Keep learning until you feel confident enough to take the actual exam. Preparation is the key to passing the Google Cloud Professional Data Engineer Certification Exam.
