A Kubernetes cluster is a group of computing resources, such as servers or virtual machines, managed by the Kubernetes container orchestration system. Kubernetes is an open-source platform that helps you deploy and scale application containers and provides APIs for managing those containers across environments.

This blog will guide you through what you need to know about a Kubernetes cluster: its components, features, how it works, its benefits, how you can learn to work with Kubernetes clusters, and more.

For those preparing for the CKA certification exam, understanding the Kubernetes Cluster is essential.

Let’s dive in!

What is a Kubernetes Cluster?

A Kubernetes cluster is a set of nodes that run containerized apps. Containerization packages an application together with its dependencies and essential services, offering a lighter and more adaptable alternative to virtual machines.

As a result, containerized applications are also easier to develop, migrate, and monitor.

Additionally, these clusters empower containers to operate seamlessly across diverse environments, spanning virtual, physical, cloud-based, and on-premises setups.

Unlike virtual machines, containers do not each bundle a full operating system. They share the host operating system's kernel, which keeps them lightweight and lets them run on any host that provides a compatible kernel and container runtime.

Each Kubernetes cluster includes a master node (which hosts the control plane) and one or more worker nodes, each of which is either a physical machine or a virtual instance.

- Master Node: It governs the status of the cluster, including running applications and their attached container images. It initiates task assignments and manages various processes such as scheduling, scaling applications, maintaining cluster state, and implementing updates.

- Worker nodes: They execute the applications assigned by the master node. They are part of a unified system and can be virtual machines or physical computers. A Kubernetes cluster requires at least one master node and one worker node to operate. In production and staging environments, the cluster typically spans multiple worker nodes, while for testing purposes, all components may reside on a single physical or virtual node.

Kubernetes also introduces the concept of namespaces, which help you organize multiple virtual clusters within a single physical cluster. Namespaces facilitate resource allocation among different teams via resource quotas, making them invaluable for complex projects or scenarios involving multiple teams.
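To make the namespace and quota idea concrete, here is a minimal sketch that builds a Namespace and a matching ResourceQuota manifest as plain Python dictionaries and prints them as JSON, which `kubectl apply -f` accepts alongside YAML. The team name, quota name, and limit values are illustrative assumptions, not recommendations.

```python
import json


def namespace_with_quota(team: str, cpu: str, memory: str, max_pods: int) -> list:
    """Build a Namespace and a ResourceQuota manifest for one team.

    The team name and all limits are hypothetical example values.
    """
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": team},
    }
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{team}-quota", "namespace": team},
        "spec": {
            # Caps on the total resources all pods in the namespace may request.
            "hard": {
                "requests.cpu": cpu,
                "requests.memory": memory,
                "pods": str(max_pods),
            }
        },
    }
    return [namespace, quota]


manifests = namespace_with_quota("team-a", cpu="4", memory="8Gi", max_pods=20)
for manifest in manifests:
    print(json.dumps(manifest, indent=2))
```

Each printed document could be saved to its own file and applied to a cluster; a second team would simply get its own namespace and quota pair.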

What is Kubernetes Cluster Management?

With modern cloud-native applications constantly evolving, Kubernetes environments are becoming more distributed. They extend across various locations, including multiple on-premises data centers, public clouds, and the edge.

Organizations that run Kubernetes at scale or in production usually end up managing multiple clusters. These clusters serve distinct purposes, spanning development, testing, and production environments, and need effective management across diverse settings. Kubernetes cluster management encompasses the practices through which an IT team oversees such a collection of Kubernetes clusters.

Additionally, Kubernetes provides built-in tools to monitor and help maintain the cluster’s health and performance. Meanwhile, external and third-party tools and platforms also provide advanced monitoring, logging, and alerting.

Moreover, you can scale applications up or down based on demand, either manually or automatically, using the Horizontal Pod Autoscaler (HPA). You can sustain your application’s performance over time by keeping it secure and updated. Kubernetes also supports rolling updates of your application with zero downtime when you update the Deployment configuration.
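As a rough illustration of automatic scaling, the Kubernetes documentation describes the core HPA calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that formula and clamps the result to minimum and maximum replica bounds. It deliberately leaves out the tolerance band and stabilization behavior of the real autoscaler, and the function name and default bounds are assumptions made for this example.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Simplified HPA-style calculation (no tolerance or stabilization)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


# 4 pods at 90% average CPU with a 60% target: scale out.
print(desired_replicas(4, current_metric=90.0, target_metric=60.0))   # 6
# 3 pods at 50% with a 100% target: scale in.
print(desired_replicas(3, current_metric=50.0, target_metric=100.0))  # 2
# The upper bound caps very large results.
print(desired_replicas(8, current_metric=200.0, target_metric=50.0))  # 10
```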

Components of a Kubernetes Cluster

A Kubernetes cluster has four main components.

Control Plane

The control plane serves as the backbone of Kubernetes, facilitating the abstraction and ensuring that the configurations defined for the cluster are seamlessly executed.

Alongside the kube-controller-manager, which runs the controllers responsible for cluster operations, the control plane includes vital components such as kube-apiserver, which exposes the Kubernetes API, and kube-scheduler, which assigns newly created pods to nodes according to resource availability and configuration settings.
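To give a feel for what assigning pods to nodes involves, here is a toy sketch of a filter-then-score placement decision. The real kube-scheduler also works in filtering and scoring phases but applies many more rules; the `Node` class, the `pick_node` function, and the scoring rule (prefer the node with the most CPU left over) are simplifications invented for this example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millis: int   # unallocated CPU, in millicores
    free_memory_mib: int   # unallocated memory, in MiB


def pick_node(nodes: List[Node], cpu_millis: int, memory_mib: int) -> Optional[Node]:
    """Toy placement: filter nodes that fit, then score the survivors."""
    # Filter: keep only nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if n.free_cpu_millis >= cpu_millis and n.free_memory_mib >= memory_mib
    ]
    if not feasible:
        return None  # the pod would stay pending
    # Score: prefer the node with the most CPU remaining after placement.
    return max(feasible, key=lambda n: n.free_cpu_millis - cpu_millis)


nodes = [
    Node("node-a", free_cpu_millis=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millis=2000, free_memory_mib=4096),
    Node("node-c", free_cpu_millis=4000, free_memory_mib=512),
]
chosen = pick_node(nodes, cpu_millis=1000, memory_mib=1024)
print(chosen.name if chosen else "unschedulable")
```

Here node-a lacks CPU and node-c lacks memory, so only node-b passes the filter step.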

Workloads

Workloads refer to the applications operated by Kubernetes. They range from a single component to multiple discrete components working in sync. Within a Kubernetes cluster, workloads run inside one or more pods.

Pods

A Kubernetes pod comprises one or more containers that share storage and network resources. Each pod within the cluster includes a spec detailing the container execution parameters.
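As an illustration, the sketch below builds a pod spec with two containers that share one `emptyDir` volume and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The pod name, container names, image tags, and mount paths are assumptions chosen for the example.

```python
import json

# A two-container pod: a web server and a sidecar that reads its logs.
# All names, images, and paths here are illustrative.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-sidecar", "labels": {"app": "web"}},
    "spec": {
        # One scratch volume that lives as long as the pod does.
        "volumes": [{"name": "shared-logs", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "ports": [{"containerPort": 80}],
                "volumeMounts": [
                    {"name": "shared-logs", "mountPath": "/var/log/nginx"}
                ],
            },
            {
                "name": "log-reader",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "tail -F /logs/access.log"],
                "volumeMounts": [
                    {"name": "shared-logs", "mountPath": "/logs"}
                ],
            },
        ],
    },
}

print(json.dumps(pod, indent=2))
```

Both containers mount the same volume, so files written by one are visible to the other, and they also share the pod's network address.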

Nodes

Nodes represent the tangible resources—like CPU and RAM—where workloads are executed. These resources can originate from various sources, including virtual machines, on-premises physical servers, or cloud infrastructure.

Irrespective of the underlying source, nodes serve as the foundational elements representing resources within a Kubernetes cluster.

Apart from this, nodes have several other components to hold the Kubernetes cluster together.

Components of Nodes

Kubelet

Operating as an agent on every worker node, Kubelet oversees the execution of containers within pods. It continually reports the node’s status and the running pods to the control plane, ensuring synchronization with the desired application state via communication with the API server.

Kube-proxy

Deployed on each worker node, Kube-proxy serves as a network proxy. It facilitates service abstraction by managing network rules and directing traffic accurately to the designated pods, ensuring seamless communication within the cluster.
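To illustrate how the "designated pods" for a service are determined, here is a small sketch of label-selector matching. In a real cluster the control plane computes a service's endpoints and kube-proxy turns them into network rules on each node; the functions, pod records, and IP addresses below are hypothetical and only model the matching step.

```python
from typing import Dict, List


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """True when every key/value pair in the selector appears in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def backends(selector: Dict[str, str], pods: List[dict]) -> List[str]:
    """Return the IPs of ready pods whose labels satisfy the selector."""
    if not selector:
        # A service without a selector gets no automatically managed endpoints.
        return []
    return [p["ip"] for p in pods if p["ready"] and matches(selector, p["labels"])]


pods = [
    {"ip": "10.0.0.1", "ready": True, "labels": {"app": "web", "tier": "frontend"}},
    {"ip": "10.0.0.2", "ready": True, "labels": {"app": "db"}},
    {"ip": "10.0.0.3", "ready": True, "labels": {"app": "web"}},
    {"ip": "10.0.0.4", "ready": False, "labels": {"app": "web"}},
]

print(backends({"app": "web"}, pods))
```

Only the ready pods labeled `app: web` are returned, so traffic for the service would be spread across those two addresses.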

Container runtime

Responsible for executing containers, the container runtime software (such as Docker, containerd, or CRI-O) plays a crucial role in Kubernetes. The Container Runtime Interface (CRI) enables the platform to support various container runtimes, allowing flexibility in runtime selection based on specific requirements and preferences.

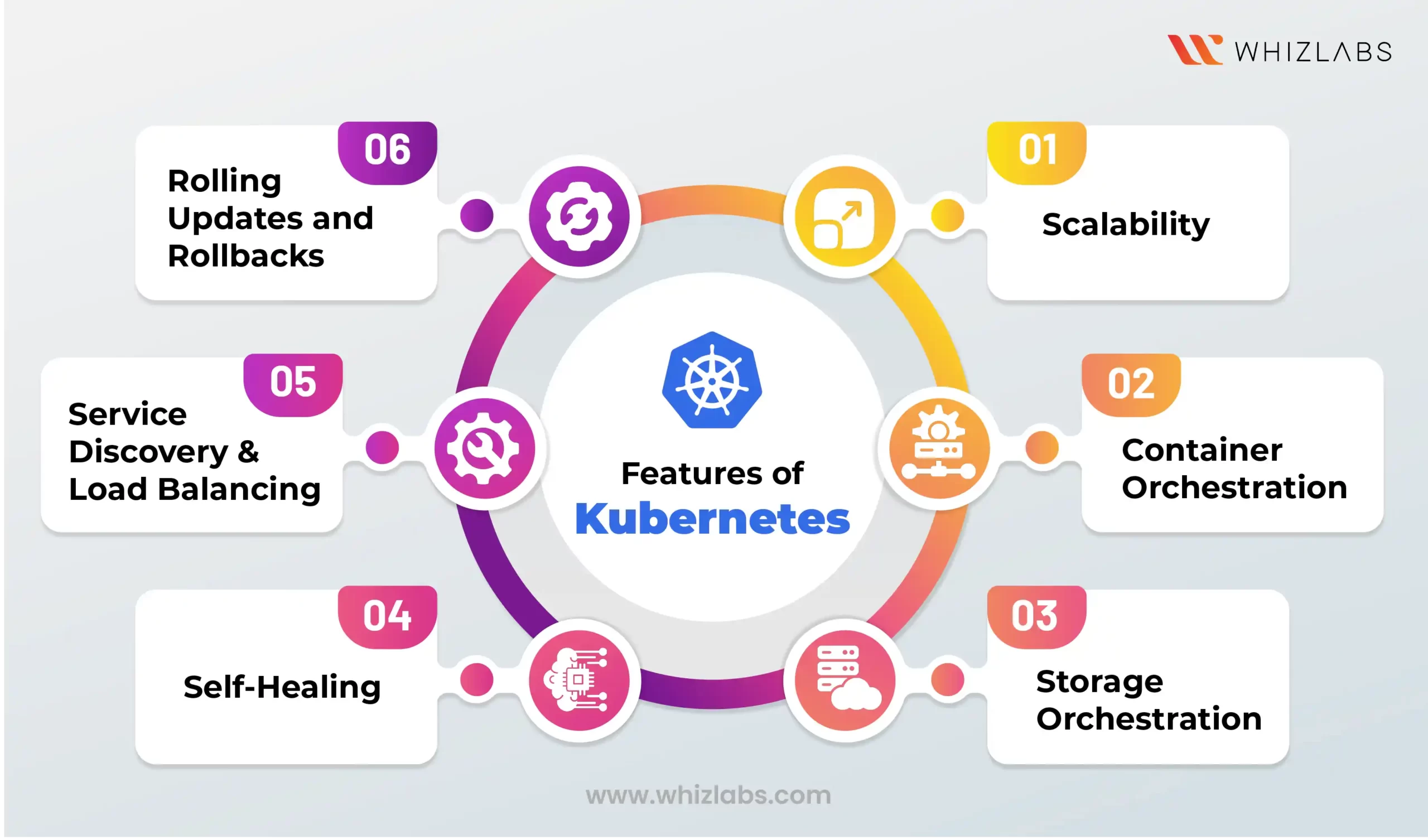

Features of Kubernetes

Kubernetes offers an array of essential features for deploying containerized applications, including:

- Scalability

Kubernetes excels at scaling workloads, whether large or small. It supports single-node clusters for local development or production-grade clusters spanning thousands of nodes across multiple data centers. While scalability limits exist, most Kubernetes use cases fall well within these constraints.

- Container Orchestration

Kubernetes automates the provisioning, deployment, and scaling of containerized applications, a process known as container orchestration. This feature streamlines the management of container deployments, eliminating the complexities of manual orchestration, especially at scale.

- Storage Orchestration

For stateful applications requiring persistent data storage, Kubernetes offers storage orchestration. It automatically connects containers to the appropriate storage infrastructure, which simplifies tasks such as writing logs or managing databases.

- Self-Healing

Kubernetes incorporates self-healing mechanisms to recover from failures autonomously. It automatically restarts failed containers or Pods and redistributes workloads from unreachable nodes to healthy ones, reducing the need for human intervention in routine failure scenarios.

- Service Discovery and Load Balancing

Kubernetes simplifies service discovery and load balancing by automatically exposing applications as network services and distributing traffic between them. This eliminates the need for manual configuration of network addresses and rules, making it much easier to scale network services.

- Rolling Updates and Rollbacks

Kubernetes supports rolling updates, allowing applications to be updated incrementally without downtime. Older versions of Pods are gradually replaced with updated versions, minimizing the risk of service disruption. Similarly, rollbacks to previous application versions can be performed seamlessly.
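The gradual replacement described under rolling updates can be pictured with a toy simulation. A Deployment's rolling update is tuned with the `maxUnavailable` and `maxSurge` settings; this sketch models only a `max_unavailable` budget, assumes no surge pods, and assumes every replacement pod becomes ready immediately, so treat it as a simplified picture and not as how the Deployment controller is implemented.

```python
from typing import List, Tuple


def rolling_update(replicas: int, max_unavailable: int = 1) -> List[Tuple[int, int]]:
    """Return (old_pods, new_pods) after each step of a simplified rollout."""
    if replicas < 0 or max_unavailable < 1:
        raise ValueError("replicas must be >= 0 and max_unavailable >= 1")
    old, new = replicas, 0
    history = [(old, new)]
    while old > 0:
        batch = min(max_unavailable, old)
        old -= batch   # take down a small batch of old pods
        new += batch   # replacements start and (by assumption) become ready
        history.append((old, new))
    return history


for old, new in rolling_update(replicas=4, max_unavailable=1):
    print(f"old={old} new={new}")
```

At every recorded step the total number of pods stays at four, and no more than one pod is being replaced at a time, which is why the application keeps serving traffic throughout.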

How does the Kubernetes Cluster work?

Understanding how Kubernetes works at a high level can simplify its complexity:

Declaration of Workload State

Workload states, including container images for pods, are declared in plaintext YAML files.
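As a sketch of what such a declaration contains, the snippet below builds a small Deployment as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The name `web`, the `app: web` label, the replica count, and the image tag are all illustrative assumptions.

```python
import json

# Desired state: three replicas of one container image.
# Names, labels, and the image tag are example values.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,
        # The selector must match the labels in the pod template below.
        "selector": {"matchLabels": {"app": "web"}},
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {
                "containers": [
                    {"name": "web", "image": "nginx:1.25"}
                ]
            },
        },
    },
}

print(json.dumps(deployment, indent=2))
```

The file states what should exist (three pods running this image), not the steps to get there; Kubernetes works out the steps.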

Container Image Deployment

Kubernetes fetches container images from a registry and deploys them across nodes, efficiently allocating resources while abstracting away network and computing resource allocation to pods.

Autonomous State Restoration

If changes occur, such as pods becoming unhealthy, the control plane automatically restores the workload’s desired state. This continuous loop abstracts away the intricacies of container orchestration.
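The continuous loop can be sketched as a single reconciliation step: compare the observed pods with the desired count, then delete and create pods until they match. This is a toy model under stated assumptions; the pod records, the `web-N` naming scheme, and the `reconcile` function are invented for illustration and do not mirror the actual controller code.

```python
import itertools
from typing import Iterator, List, Tuple


def reconcile(desired: int, pods: List[dict],
              name_counter: Iterator[int]) -> Tuple[List[dict], List[str]]:
    """One pass of a toy control loop: returns the new pod list and the actions taken."""
    actions = []
    kept = []
    # Remove pods that are no longer healthy.
    for pod in pods:
        if pod["healthy"]:
            kept.append(pod)
        else:
            actions.append(f"delete {pod['name']}")
    # Create replacements until the desired count is reached.
    while len(kept) < desired:
        name = f"web-{next(name_counter)}"
        kept.append({"name": name, "healthy": True})
        actions.append(f"create {name}")
    # Scale down if there are more pods than desired.
    while len(kept) > desired:
        removed = kept.pop()
        actions.append(f"delete {removed['name']}")
    return kept, actions


counter = itertools.count(start=3)
observed = [
    {"name": "web-0", "healthy": True},
    {"name": "web-1", "healthy": False},
    {"name": "web-2", "healthy": True},
]
pods, actions = reconcile(3, observed, counter)
print(actions)  # ['delete web-1', 'create web-3']

# Running the loop again on a healthy state changes nothing.
pods, actions_again = reconcile(3, pods, counter)
print(actions_again)  # []
```

The second call shows the key property of the loop: once the observed state matches the desired state, there is nothing left to do.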

Kubernetes clusters also facilitate automatic rolling updates and scaling configurations based on demand. There are various approaches to creating Kubernetes clusters, depending on your deployment environment. Azure and AWS offer user-friendly options, such as wizard-based cluster creation on Azure and Amazon EKS on AWS, to reduce deployment complexity.

Benefits of using Kubernetes Cluster

Kubernetes offers a multitude of significant benefits for modern workloads.

Easy Deployment and Updates

While setting up Kubernetes from scratch can be complex, various deployment approaches, such as managed Kubernetes services or deployment platforms like Rancher and OpenShift, simplify the process. Once configured, deploying and updating applications is straightforward using YAML code, streamlining management tasks.

App Stability and Availability in a Cloud Environment

Kubernetes excels in maintaining application stability and availability, automatically deploying and scaling applications to ensure optimal performance. Although it can’t recover from total infrastructure failures, it helps maintain availability and performance levels, especially in dynamic cloud environments.

Multi-Cloud Capability

Kubernetes is versatile, running across public clouds, private clouds, and on-premises servers. Despite the challenges of managing multi-cloud Kubernetes clusters, it offers a consistent approach to managing and scaling workloads across multiple clouds.

Portability Across Cloud Providers

Kubernetes enables workload portability across different cloud providers, reducing vendor lock-in. While adjustments may be necessary, Kubernetes offers portability unmatched by alternative container orchestration platforms.

Cost-Effectiveness

As an open-source solution, Kubernetes is cost-effective, with minimal fees for managed services. Its efficient resource management capabilities optimize infrastructure utilization, reducing idle resources and maximizing cost-effectiveness.

Increased DevOps Efficiency for Microservices Architecture

Kubernetes streamlines DevOps workflows by enabling the build, test, and deployment of microservices within the same platform. This integration enhances efficiency and reduces risks associated with platform transitions during the development lifecycle.

What are Kubernetes Clusters used for?

Kubernetes Cluster architecture serves various common use cases, especially when available as a managed service, offering tailored solutions to address specific needs.

Increasing Development Velocity

By enabling the creation of cloud-native microservices-based applications and supporting the containerization of existing apps, Kubernetes accelerates application development. It is the cornerstone of application modernization efforts, empowering developers to build and deploy apps more rapidly.

Deploying Applications Anywhere

With Kubernetes designed for universal deployment, you can seamlessly deploy applications across diverse environments, including on-site deployments, public clouds, and hybrid setups. This flexibility ensures that applications can be run wherever needed, facilitating agility and adaptability.

Running Efficient Services

Kubernetes automates the adjustment of cluster size to accommodate service requirements. This dynamic scalability enables automatic scaling of applications based on demand, optimizing resource utilization and ensuring efficient operation of services.

How can you learn Kubernetes Cluster?

If you are new to the Kubernetes Cluster and wondering how to begin your journey in the domain, the best way is to go for a Kubernetes Cluster certification.

The Certified Kubernetes Administrator certification, or the CKA exam, aims to impart a holistic understanding of Kubernetes administration. It builds your ability to install, configure, and manage Kubernetes clusters at a production-grade level.

The CKA exam also helps you gain an in-depth knowledge of Kubernetes Cluster deployment, maintenance, architecture, and operation. It will also help you learn critical Kubernetes concepts like:

1. Cluster Setup

Demonstrating proficiency in installing and configuring Kubernetes clusters using different methods.

2. Maintenance

Skills in ongoing cluster maintenance, including upgrades, troubleshooting, and security measures, are tested.

3. Workload Management

The ability to deploy, manage, and scale workloads on Kubernetes clusters is evaluated.

4. Storage

Configuring and managing storage solutions, including persistent volumes and storage classes.

5. Monitoring and Logging

Monitoring cluster health and resource utilization and configuring logging for cluster components and workloads.

6. Security

Implementing security best practices, including securing cluster components and managing authentication and authorization.

In addition, the best part of the Certified Kubernetes Administrator certification is that you don’t need any prior experience to be eligible for the exam. However, preliminary knowledge of concepts like YAML or JSON, Linux administration, shell scripting, DevOps methodologies, and containers or Docker will help you ace the exam.

Summary

I hope this blog helps you understand the Kubernetes Cluster in depth. We have discussed its features, benefits, how it works, and how to learn the domain. While Kubernetes has numerous alternatives, many of them offer less in core areas such as scalability and orchestration, which matter more and more as your workloads and data grow.

To delve into these nuances of the Kubernetes Cluster and pass the CKA exam with deep expertise, Whizlabs brings you a comprehensive training program on Kubernetes administration. The course helps you prepare for the exam and gives you deeper and updated knowledge through practice papers and thoughtfully created video lectures.

You can also sharpen your practical skills with Whizlabs Kubernetes hands-on labs.

Want to learn more about Kubernetes or related certifications? Talk to us today.
