Are you preparing for the Microsoft AZ-305 exam? This article helps you prepare for the Designing Microsoft Azure Infrastructure Solutions [AZ-305] certification exam. We provide 25 free questions on various exam domains to give you an overview of the actual exam.

By trying out these free questions and answers with detailed explanations on the AZ-305 certification exam, you will be able to face the actual exam with full confidence. Let us try exploring these AZ-305 exam questions!

Domain : Design data storage solutions

Q1 : A company needs a data store created in Azure for an application. Below are the key requirements for the data store.

- Ability to store JSON based items

- Ability to use SQL like queries on the data store

- Ability to provide low latency access to data items

Which of the following would you consider as the data store?

A. Azure BLOB storage

B. Azure CosmosDB

C. Azure HDInsight

D. Azure Redis

Correct Answer: B

Explanation

Azure CosmosDB provides low-latency access to data, and its SQL API stores JSON-based items that you can query with SQL-like syntax. The Microsoft documentation mentions the following.

Option A is incorrect since this is used for object level storage.

Option C is incorrect since this is used for open source analytics.

Option D is incorrect since this is used for storing data in an in-memory cache.

For more information on how to use SQL queries, please visit the below URL: https://docs.microsoft.com/en-us/azure/cosmos-db/how-to-sql-query
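As a rough illustration of what "SQL-like queries on JSON items" means for Q1, the sketch below builds a parameterized query in the shape the Cosmos DB SQL API accepts: query text plus a list of name/value parameters. The field names (`category`, `price`) and the helper `build_query` are hypothetical, and nothing here connects to Azure.

```python
import json


def build_query(category, max_price):
    """Return a parameterized SQL-style query spec for JSON items.

    The field names (category, price) are illustrative assumptions,
    not part of the original question.
    """
    return {
        "query": (
            "SELECT c.id, c.price FROM c "
            "WHERE c.category = @category AND c.price <= @maxPrice"
        ),
        "parameters": [
            {"name": "@category", "value": category},
            {"name": "@maxPrice", "value": max_price},
        ],
    }


spec = build_query("books", 20)
print(json.dumps(spec, indent=2))
```

With the `azure-cosmos` Python package, a spec like this would typically be passed to a container client's `query_items` method through its `query` and `parameters` arguments. Check the SDK documentation for the options available in your version.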

Domain : Design business continuity solutions

Q2 : A company has set up a storage account in Azure. They have the following storage requirements.

- Ensure that administrators can recover any BLOB data if it has been accidentally deleted.

- Have the ability to recover data over a period of 14 days after the deletion has occurred.

Which of the following features of Azure storage could be used for this requirement?

A. CORS

B. Static web site

C. Azure CDN

D. Soft Delete

Correct Answer: D

Explanation

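You have to use the Soft Delete feature. When it is enabled with a retention period (14 days in this case), deleted BLOB data can be recovered until that period expires. The Microsoft documentation mentions the following.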

Since this is clearly mentioned in the documentation, all other options are incorrect.

For more information on Azure BLOB soft delete, please visit the below URL: https://docs.microsoft.com/en-us/azure/storage/blobs/storage-blob-soft-delete

Domain : Design business continuity solutions

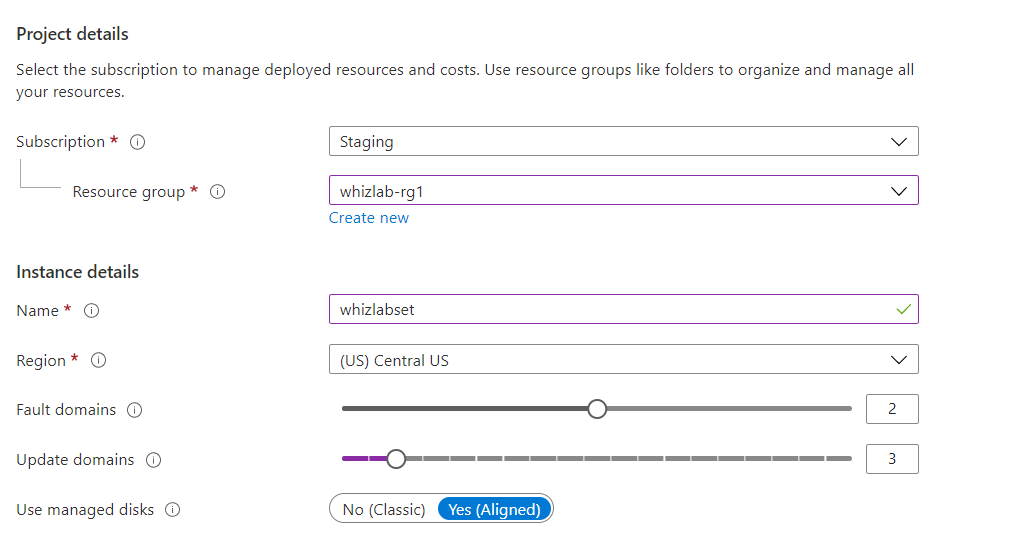

Q3 : You are going to create an Availability set with the following details

A total of 10 virtual machines are going to be deployed to the availability set

During a planned maintenance, what is the least number of virtual machines that would be available?

A. 4

B. 5

C. 6

D. 8

Correct Answer: C

Explanation

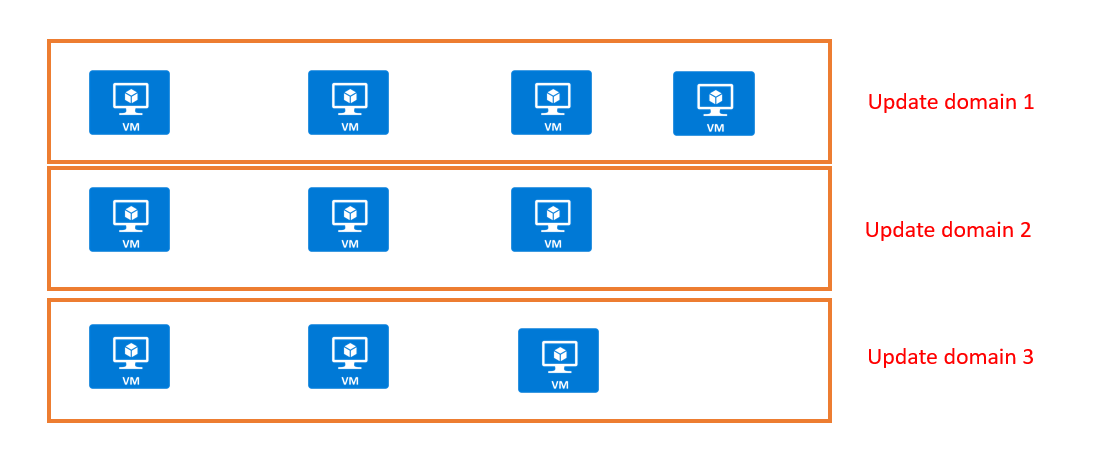

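Here we need to focus on update domains, because planned maintenance reboots only one update domain at a time. Below is the representation of 10 virtual machines across 3 update domains.

The virtual machines are spread as 4, 3, and 3 across the three update domains. So, even if the largest update domain (4 virtual machines) goes down, we would have at least 6 virtual machines running.

Since this is clear from the representation, all other options are incorrect.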
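The arithmetic behind this answer can be sketched in a few lines. This is a minimal model that assumes virtual machines are assigned to update domains in round-robin order and that only one update domain is taken down at a time. The function names are made up for illustration.

```python
def vms_per_update_domain(vm_count, update_domains):
    """Distribute VMs across update domains round-robin and return the counts."""
    counts = [0] * update_domains
    for i in range(vm_count):
        counts[i % update_domains] += 1
    return counts


def min_available_during_maintenance(vm_count, update_domains):
    """Worst case: the update domain holding the most VMs is the one rebooted."""
    counts = vms_per_update_domain(vm_count, update_domains)
    return vm_count - max(counts)


print(vms_per_update_domain(10, 3))             # [4, 3, 3]
print(min_available_during_maintenance(10, 3))  # 6
```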

For more information on availability sets, one can visit the following URL: https://docs.microsoft.com/en-us/azure/virtual-machines/manage-availability

Domain : Design data storage solutions

Q4 : A company uses Azure SQL Managed Instance for the application data.

What two parameters would you set up to ensure that the instance will scale to meet the workload demands?

A. Define the maximum of CPU cores

B. Define the maximum of the allocated storage

C. Define the maximum of the resources per database

D. Define the maximum resource limit per group of databases

Correct Answers: A and B

Explanation

Azure provides dynamic scalability for Azure SQL Database and Azure SQL Managed Instance.

The Azure SQL Database service offers two purchasing models: DTU-based and vCore-based. The Azure SQL Managed Instance service is based only on the vCore purchasing model. This model allows you to select two scalability parameters for a managed instance: the maximum CPU cores and the maximum allocated storage.

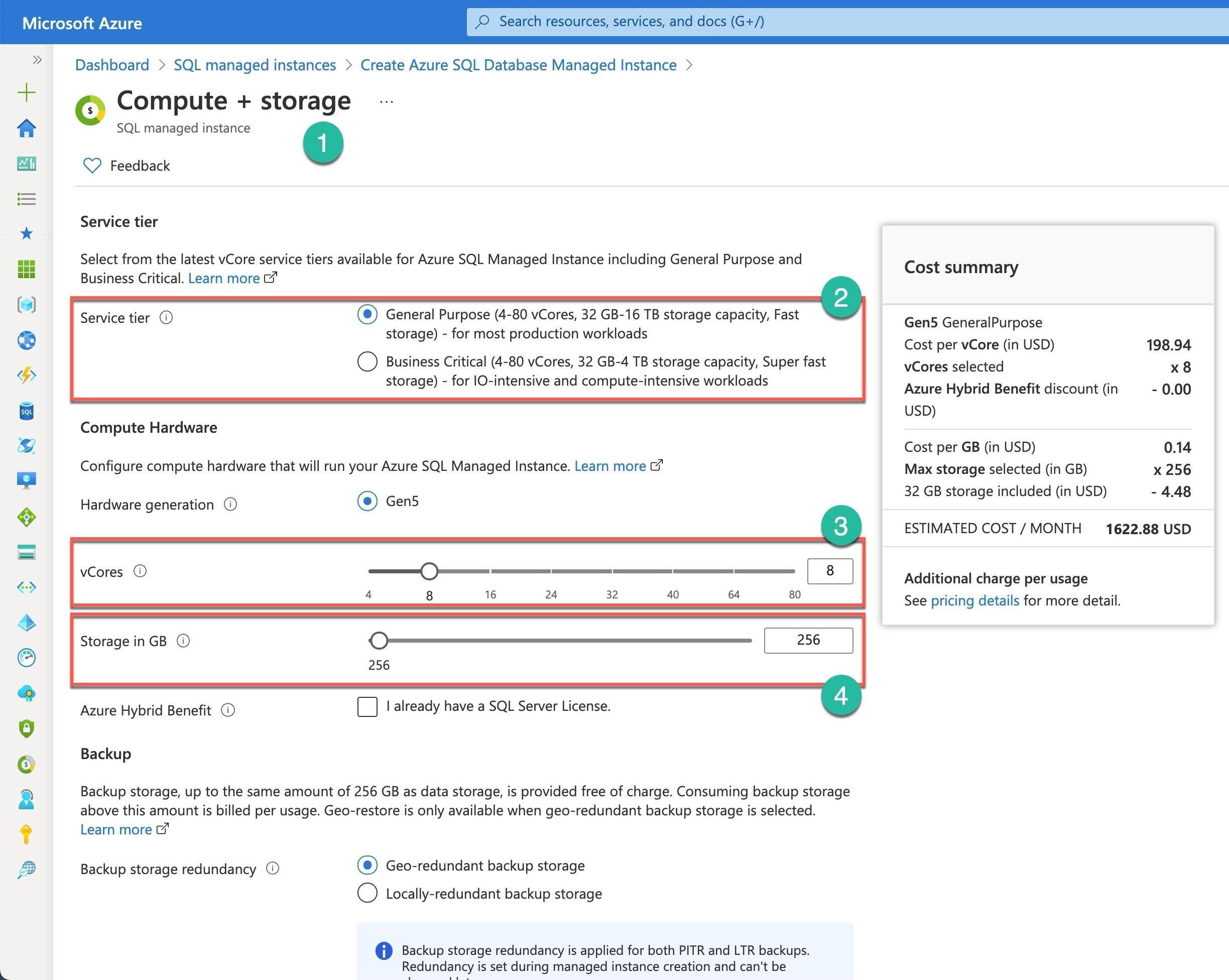

(Number 1): When you create a SQL Managed instance or change the resources for an existing instance, you use the Compute + storage panel.

(Number 2): Because Azure SQL Managed Instance uses only the vCore model, you need to select one of the model’s Service tier options: General Purpose or Business Critical. These tiers define the storage latency: fast or super fast.

Then, using sliders, you set or change the instance resources: the number of vCores (Number 3) and the storage size (Number 4).

The default values are 8 CPU cores and 256 GB of storage. Azure SQL Managed Instance service will dynamically scale within these parameters to meet the workload demands. All databases in the Azure SQL Managed instance will share the assigned resources.

Option C is incorrect because defining the maximum resources per database is how you dynamically scale Azure SQL Database (in both purchasing models), not Azure SQL Managed Instance.

Option D is incorrect because defining a resource limit per group of databases is how you dynamically scale Azure SQL Database elastic pools, not Azure SQL Managed Instance.

For more information about solutions for database scalability, please visit the below URLs: https://docs.microsoft.com/en-us/azure/azure-sql/database/scale-resources, https://docs.microsoft.com/en-us/azure/azure-sql/managed-instance/sql-managed-instance-paas-overview, https://docs.microsoft.com/en-us/azure/azure-sql/managed-instance/service-tiers-managed-instance-vcore?tabs=azure-portal

Domain : Design business continuity solutions

Q5 : A company has container-based workloads and asks you to advise how to protect the multi-region AKS deployments from regional outages.

What two services would you recommend for the company to implement?

A. Azure Backup

B. Azure VM Scale sets

C. Azure Traffic Manager

D. Azure App Service

E. Azure Load Balancer

Correct Answers: C and E

Explanation

Azure provides several disaster recovery tools for container-based workloads. To protect the multi-region Azure Kubernetes Service deployments from the regional outages, you need to implement Azure Traffic Manager. Azure Traffic Manager is a global load balancing service based on DNS.

It provides high availability and disaster recovery for container-based workloads. If one region fails, Azure Traffic Manager will direct the traffic to the secondary region. Traffic Manager routes any protocol (not only HTTP/HTTPS as Azure Front Door or Azure Application Gateway does) to the service endpoint’s public IP address based on the routing method. Using geographic routing, it will direct the traffic to the closest AKS cluster and application instance. Then, the Load Balancer will take care of data delivery to the AKS.

Option A is incorrect because protecting multi-region AKS deployments from regional outages requires the Traffic Manager and Load Balancer services, not the Azure Backup service. Azure Backup protects the application data instead: if your container-hosted application uses Azure Storage disks or file shares, Azure Backup is an appropriate data recovery service.

Option B is incorrect because protecting multi-region AKS deployments from regional outages requires the Traffic Manager and Load Balancer services, not Azure VM Scale sets. However, Azure Kubernetes Service does use VM Scale sets to protect the nodes in case of node failures.

Option D is incorrect because protecting multi-region AKS deployments from regional outages requires the Traffic Manager and Load Balancer services, not the Azure App Service. Azure App Service is a fully managed web application hosting platform.

For more information about the recovery solutions for the containers, please visit the below URLs: https://docs.microsoft.com/en-us/azure/aks/operator-best-practices-multi-region#use-azure-traffic-manager-to-route-traffic, https://docs.microsoft.com/en-us/azure/traffic-manager/traffic-manager-configure-geographic-routing-method

Domain : Design identity, governance, and monitoring solutions

Q6 : A company is planning on deploying an application onto Azure. The application will be built on .NET Core and hosted using Azure Web apps. Below are some of the requirements for the application

- Gives the ability for the testing team to view the different components of an application and see the calls being made between the different application components

- Helps the business analyze how many users actually return to the application

- Ensuring IT administrators get alerts based on critical conditions being met in the application

Which of the following services would be best suited for fulfilling the requirement of “Ensuring IT administrators get alerts based on critical conditions being met in the application”?

A. Application Insights

B. Azure Monitor

C. Azure Advisor

D. Azure Policies

Correct Answer: B

Explanation

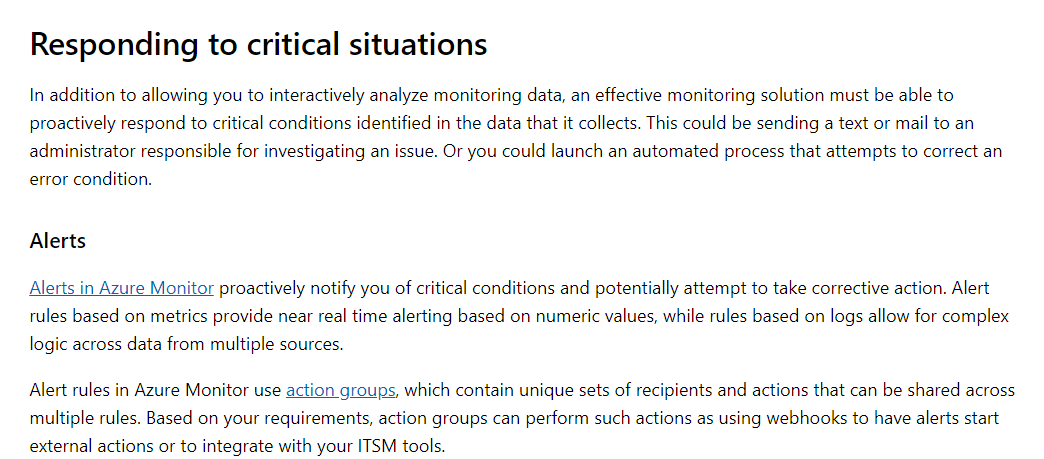

Azure Monitor provides an Alerts feature that can notify IT administrators when critical conditions are met in the application. This is also mentioned in the Microsoft documentation.

Since this is clearly mentioned in the documentation, all other options are incorrect

For more information on Azure Monitor, please visit the below URL: https://docs.microsoft.com/en-us/azure/azure-monitor/overview

Domain : Design infrastructure solutions

Q7 : A company is planning on deploying a set of applications onto a set of Azure Kubernetes clusters. The clusters would be distributed across various Azure regions. You have to recommend a storage solution for the application container images. The updated container images need to be automatically replicated across all AKS clusters. Which of the following would you implement for this requirement?

A. Geo-redundant storage account

B. Azure Cache for Redis

C. Azure Content Delivery Network

D. Azure Container Registry – Premium SKU

Correct Answer: D

Explanation

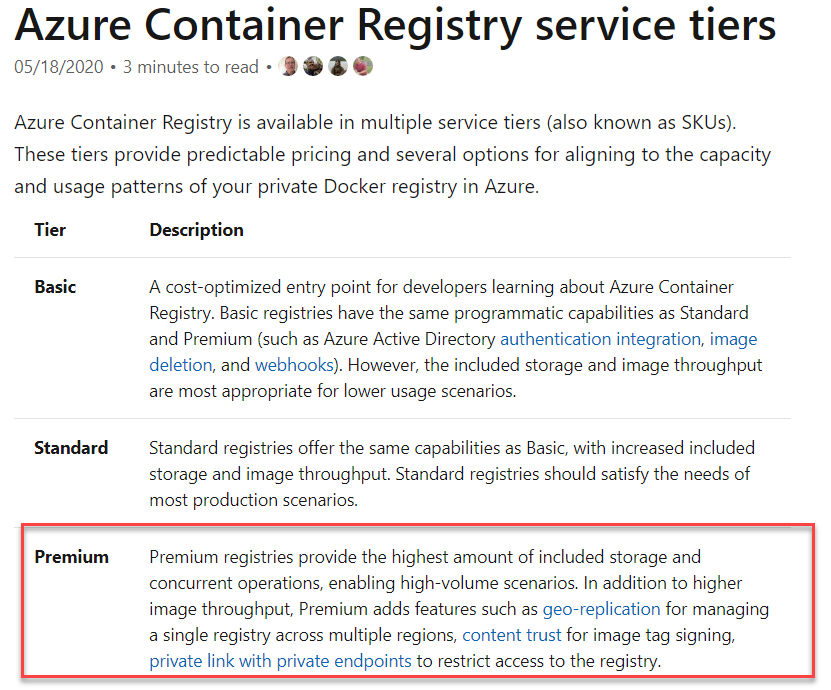

For this requirement, you can use Azure Container Registry with the Premium SKU. It supports geo-replication, which automatically distributes images across regions.

The Microsoft documentation mentions the following

Since this is clearly given in the Microsoft documentation, all other options are incorrect

For more information on Azure Container registry SKUs , you can visit the below link: https://docs.microsoft.com/en-us/azure/container-registry/container-registry-skus

Domain : Design infrastructure solutions

Q8 : A company currently has the following systems running on their on-premise environment

- An ASP.Net application running on Internet Information Services

- A MongoDB database

The company wants to migrate the systems onto Azure. They want to use managed services to reduce the administrative overhead. They also want to minimize the migration time and reduce costs wherever possible.

Which of the following Azure services would you use for the MongoDB database?

A. CosmosDB

B. Azure SQL Database

C. Virtual Machines

D. Azure SQL Data warehouse

Correct Answer: A

Explanation

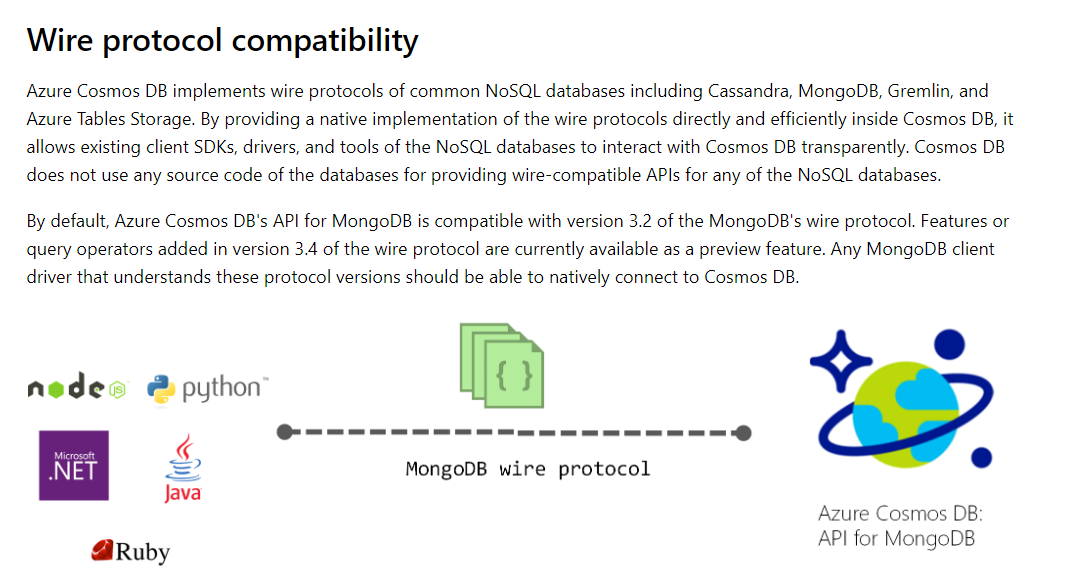

You can use the MongoDB API which is available as part of CosmosDB

The Microsoft documentation mentions the following

Options B and D are incorrect since these are SQL based data stores

Option C is incorrect since this would be less cost-effective and is not a managed service

For more information on CosmosDB and the MongoDB API, please go ahead and visit the below URL: https://docs.microsoft.com/en-us/azure/cosmos-db/mongodb-introduction

Domain : Design data storage solutions

Q9 : A company stores web access logs for an application in Azure Blob storage. At the end of each month, the log data must be automatically sent to an Azure SQL database for report generation.

Which of the following would you implement for this requirement?

A. Azure Data Factory

B. Data Migration Assistant

C. Microsoft SQL Server Migration Assistant (SSMA)

D. AzCopy

Correct Answer: A

Explanation

Azure Data Factory is a managed cloud service for extract-transform-load (ETL), extract-load-transform (ELT), and data integration operations. With Azure Data Factory, you can create a data pipeline. A data pipeline is a logical group of activities (steps) that perform a unit of work. Each activity consists of tasks. You can implement the data pipeline to transfer data from Azure Blob storage to an Azure SQL database. The pipeline can also run on a schedule, which covers the end-of-month requirement.

Option B is incorrect because Data Migration Assistant helps assess compatibility issues when upgrading your data store to a newer version of SQL Server or Azure SQL database.

Option C is incorrect because Microsoft SQL Server Migration Assistant (SSMA) helps automate the database migration to SQL Server from Microsoft Access, DB2, MySQL, Oracle, and SAP ASE.

Option D is incorrect because AzCopy helps to copy data between Azure storage accounts.

For more information about Azure Data Factory, please visit the following URL: https://docs.microsoft.com/en-us/azure/data-factory/introduction

Domain : Design identity, governance, and monitoring solutions

Q10 : Your organization has multiple Azure Cosmos DB accounts. You need to recommend which API to use for the applications’ functionality.

Which of the following APIs would you use to host graph-based data?

A. SQL

B. Table

C. Gremlin

D. Cassandra

E. MongoDB

Correct Answer: C

Explanation

Azure Cosmos DB is a multi-model, globally distributed NoSQL database. Cosmos DB stores data in atom-record-sequence (ARS) format. It unites several data management systems under one roof and exposes them as APIs. You can select between the Core (SQL) API and MongoDB API (document model), Cassandra API (column-oriented model), Gremlin API (graph model), and Table API (key-value model). For new projects, you should select the default Cosmos DB API, Core (SQL). If you have an existing database in a format that a Cosmos DB API supports and do not want to deal with application migration, the best way is to bring the data to Cosmos DB and use the matching API in your application. For example, suppose you have a MongoDB database with purchase orders in different formats that suit your customers. In that case, you can bring the data to Cosmos DB with native MongoDB tools, like mongodump and mongorestore, and keep using your MongoDB queries in your apps to access the data now stored in Cosmos DB. But if the business logic of your application is better served by a different data representation, for example, a graph, you should use the Gremlin API in your applications instead of the Core (SQL) API.

The graph model presents the data as vertices (individual database items) and edges (connections between items). To query the data, the Gremlin API uses Apache TinkerPop’s Gremlin language. The data model is useful for e-commerce or fraud detection when you need to track the relations between different types of information like customers, billings, delivery addresses, payments, order history, etc.

For more information on Cosmos DB – Gremlin API, please visit the below URLs: https://docs.microsoft.com/en-us/azure/cosmos-db/choose-api, https://docs.microsoft.com/en-us/azure/cosmos-db/graph-introduction, https://docs.microsoft.com/en-us/learn/modules/choose-api-for-cosmos-db/5-use-the-gremlin-graph-api-as-a-recommendation-engine

Domain : Design identity, governance, and monitoring solutions

Q11 : A company has a set of 10 Virtual Machines created in their Azure subscription.

There is a requirement to ensure that an IT administrator gets an email whenever the following operations are performed on the Virtual Machine.

- Restart of the machine

- Whenever the machine is deallocated

- Whenever the machine is powered off

You need to decide on the minimum number of rules and action groups required in Azure Monitor for this requirement. Choose two answers from the options given below.

A. Three rules

B. One rule

C. One action group

D. Three action groups

Correct Answers: A and C

Explanation

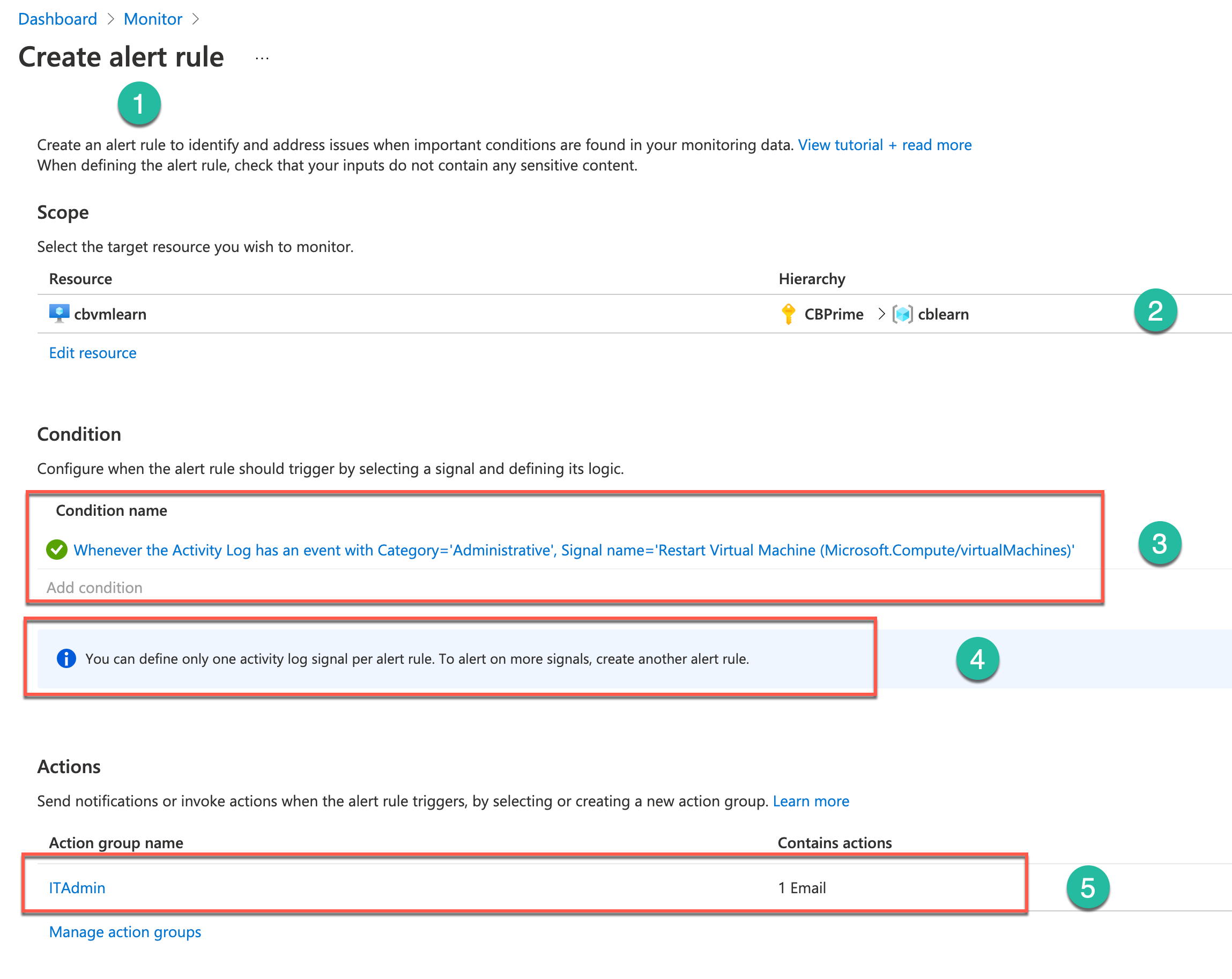

To monitor the three events in Azure Monitor, you need to create three separate rules, one for each operation. When you open the Alerts screen from the Azure Monitor blade and select the “New Alert Rule” link, the Azure portal opens the “Create alert rule” screen (Number 1). You need to fill in the sections on this panel. First, you select a scope for the monitoring (Number 2): your VM, subscription, and resource group. Then, under the Condition section, you choose the Activity Log event (signal) that triggers the alert (Number 3). Each alert rule can cover only one event (Number 4). Next, you add an Action group that will send a notification email (Number 5), provide a rule name, and create the rule.

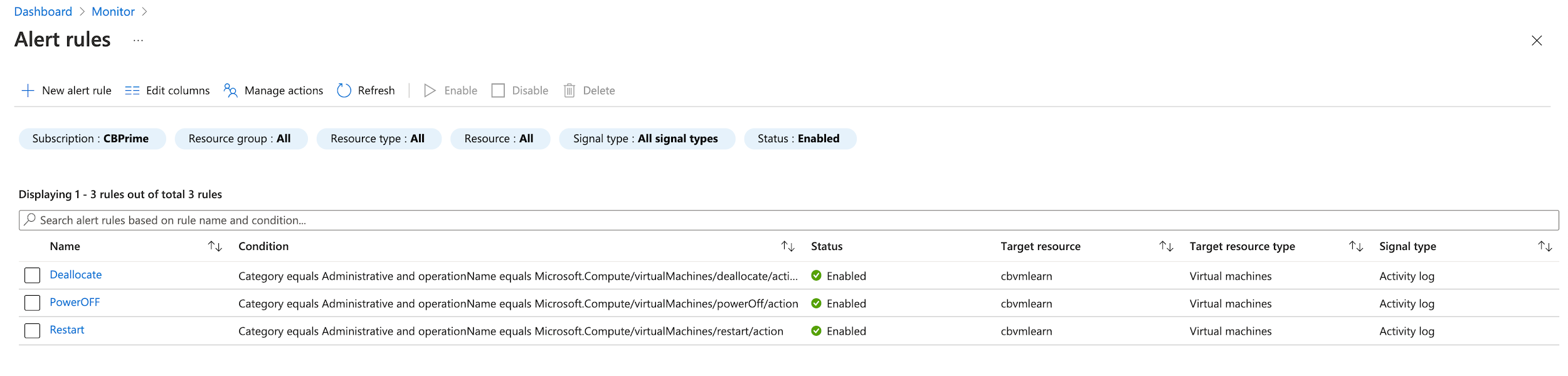

After creating three alert rules, you can review them on the “Alert rules” screen.

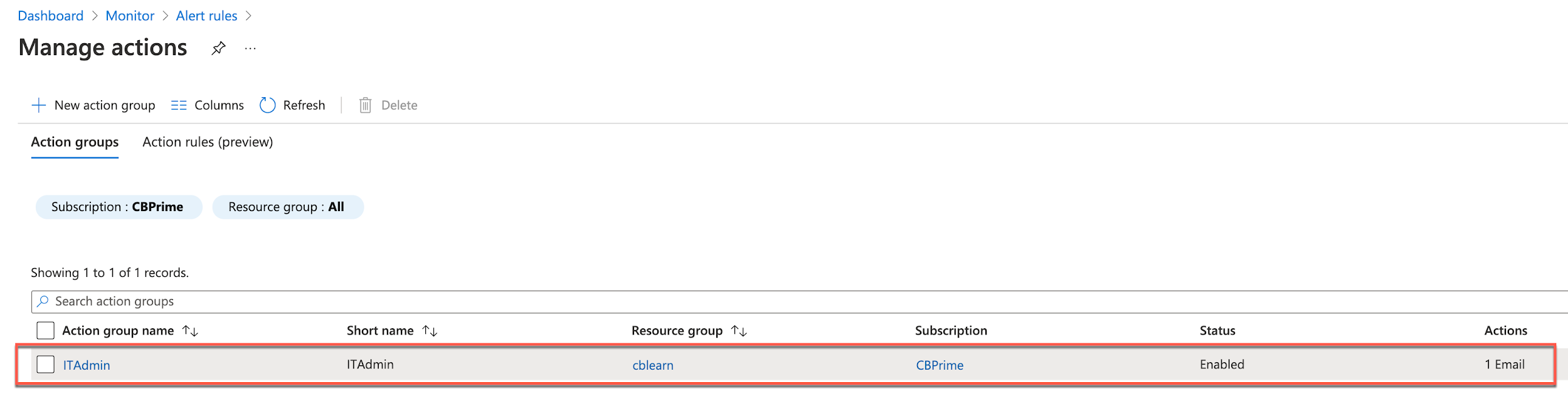

And you can review the Action group on the “Manage actions” screen as well.

In the end, you have three rules and one action group.
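The resulting layout can be modeled as plain data: one alert rule per Activity Log operation, all pointing at a single shared action group. The sketch below is only a model and does not call Azure. The rule names, action group name, and email address are hypothetical, and the operation names are assumed to be the `Microsoft.Compute` operations that correspond to the three events.

```python
# Activity Log operation names for the three VM events (assumed to be the
# matching Microsoft.Compute resource provider operations).
OPERATIONS = {
    "vm-restarted": "Microsoft.Compute/virtualMachines/restart/action",
    "vm-deallocated": "Microsoft.Compute/virtualMachines/deallocate/action",
    "vm-powered-off": "Microsoft.Compute/virtualMachines/powerOff/action",
}

# One shared action group that emails the administrator (hypothetical values).
action_group = {"name": "ag-it-admin", "email_receivers": ["admin@example.com"]}

# One alert rule per operation; every rule references the same action group.
alert_rules = [
    {"name": rule_name, "operation": operation, "action_group": action_group["name"]}
    for rule_name, operation in OPERATIONS.items()
]

distinct_groups = {rule["action_group"] for rule in alert_rules}
print(len(alert_rules), "rules,", len(distinct_groups), "action group")
```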

All other options are incorrect.

For more information on alerts in Azure Monitor, please go ahead and visit the below URL: https://docs.microsoft.com/en-us/azure/azure-monitor/platform/alerts-overview

Domain : Design infrastructure solutions

Q12 : Your company wants to deploy a new Azure web application. The web application will use Azure blob storage for storage of static content. The Web application uses a large number of JavaScript files along with cascading style sheets. The users of the web application are located across the world. You have to ensure the time to load individual pages is minimized. Which of the following would you recommend for this requirement?

A. Make use of Azure Redis Cache

B. Make use of Azure Content Delivery Network

C. Make use of the Azure Load Balancer

D. Make use of the Azure Application Gateway

Correct Answer: B

Explanation

To distribute static web content to users across the world, you should use the Azure CDN service. Azure Content Delivery Network (CDN) caches web content on edge servers in point-of-presence (POP) locations distributed around the world. The network cuts content delivery time by serving cached data to end users from the closest POP instead of the origin server. CDN delivers static web content and, by leveraging the POPs, also accelerates dynamic content that cannot be cached.

All other options are incorrect.

For more information on Azure CDN, please visit the below URL: https://docs.microsoft.com/en-us/azure/cdn/cdn-overview

Domain : Design infrastructure solutions

Q14 : A company wants to migrate workloads from on-premises to the cloud.

What are the three main migration effort phases that you would advise the company to prepare for?

A. Release workloads

B. Load workloads

C. Deploy workloads

D. Assess workloads

E. Test workloads

Correct Answers: A, C and D

Explanation

Migration efforts based on the Azure Cloud Adoption Framework take an incremental approach to the workloads. Each migration iteration is a batch of migration waves, each being the smallest workload that produces tangible results. Usually, an iteration consists of three phases:

- Assess workloads: evaluate costs, architecture, and deployment tools.

- Deploy workloads: replicate the current functionality in the cloud using lift and shift or lift and optimize approaches.

- Release workloads: test, optimize, document, and release the migrated workloads.

All other options are incorrect.

For more information about Cloud Adoption Framework and Migration effort, please visit the below URLs: https://docs.microsoft.com/en-us/azure/cloud-adoption-framework/migrate/, https://docs.microsoft.com/en-us/azure/cloud-adoption-framework/migrate/#migration-effort

Domain : Design infrastructure solutions

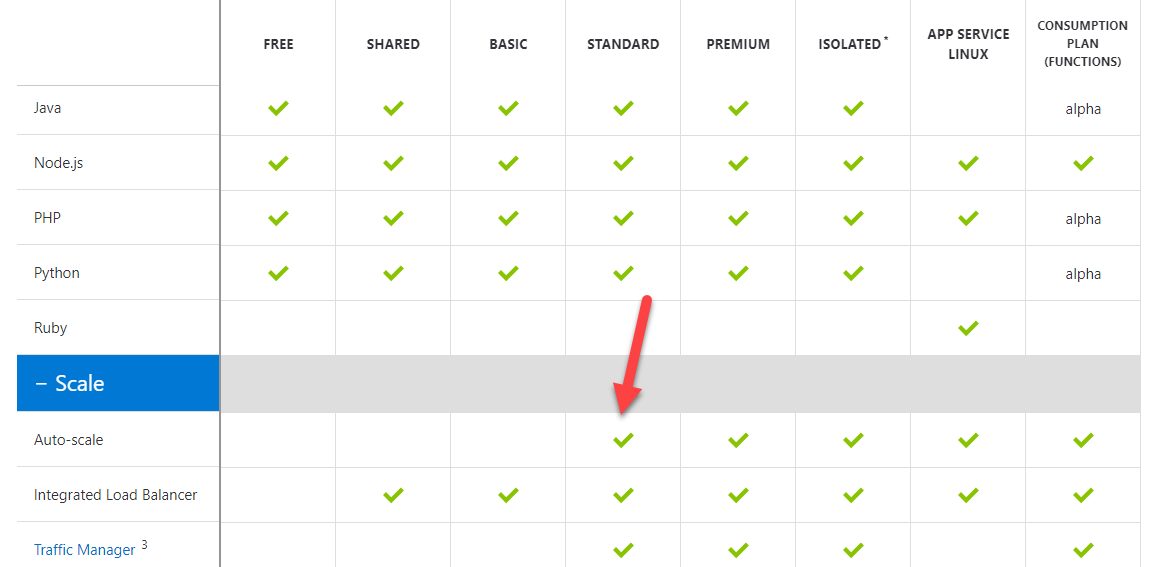

Q15 : A company plans to host a web application using the Azure Web App service. The service must provide an auto-scale option for the web application based on demand with minimal costs.

You decide to allocate the Azure Web App to a Standard App Service Plan.

Would this solution fulfill the requirement?

A. Yes

B. No

Correct Answer: A

Explanation

Yes, the Standard App service plan does support Autoscaling and would be the most cost-effective App service plan for this purpose.

For more information on Azure App Service Plans, please visit the below URL: https://azure.microsoft.com/en-us/pricing/details/app-service/plans/

Domain : Design infrastructure solutions

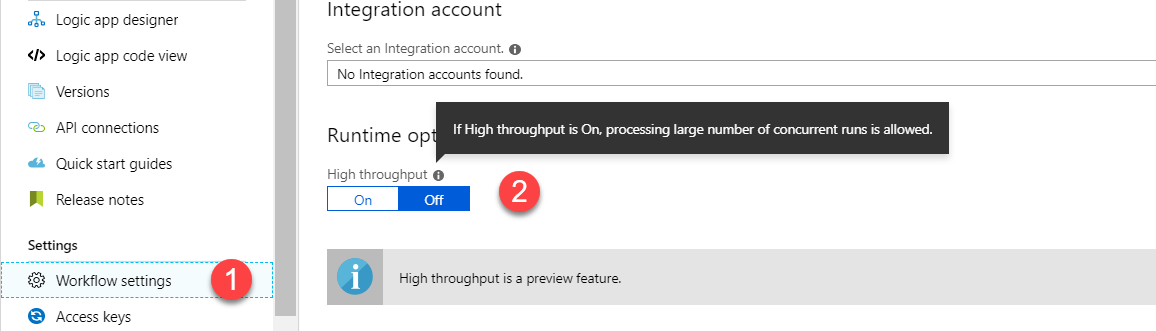

Q16 : A company has created a Logic App and named it whizlabs-logicapp. The application is configured to provide a response when an HTTP POST or HTTP GET request is received. The application should have the capability to receive up to 200,000 requests in a 5-minute period during peak loads.

Which of the following should you configure to ensure that the application can handle the expected load?

A. Workflow settings

B. API connections

C. Access control (IAM)

D. Access keys

Correct Answer: A

Explanation

As shown below, for the Azure Logic App, you have to go to the Workflow settings and enable High Throughput.

Since this is clearly evident in the implementation, all other options are incorrect.
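The sizing reasoning behind this answer can be sketched as a simple comparison against throughput limits. The two limit values below are assumptions based on the multitenant Logic Apps limits page (action executions per 5-minute rolling interval). Verify them against the current documentation before relying on them. The function name and the fallback label are made up for illustration.

```python
# Assumed limits for multitenant Logic Apps (action executions per
# 5-minute rolling interval); verify against the current limits page.
DEFAULT_LIMIT = 100_000
HIGH_THROUGHPUT_LIMIT = 300_000


def required_mode(executions_per_5_min):
    """Return which workflow setting would cover the expected load."""
    if executions_per_5_min <= DEFAULT_LIMIT:
        return "default"
    if executions_per_5_min <= HIGH_THROUGHPUT_LIMIT:
        return "high throughput"
    return "split across multiple logic apps"


print(required_mode(200_000))
```

Under these assumed limits, a peak of 200,000 requests in 5 minutes exceeds the default setting but fits within the high throughput mode, which is why the Workflow settings option applies.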

For more information on logic apps limits and configuration, please visit the below URL: https://docs.microsoft.com/en-us/azure/logic-apps/logic-apps-limits-and-config

Domain : Design infrastructure solutions

Q17 : Your company has an Azure Kubernetes Service and an Azure Container Registry. You have to perform continuous deployments of a containerized application to the cluster. The deployments need to be made as soon as image updates are made to the registry.

Which of the following needs to be implemented for this requirement?

A. Use an Azure Automation Runbook

B. Use an Azure Pipelines release pipeline

C. Use Azure Resource Manager templates

D. Use a CRON job

Correct Answer: B

Explanation

In Azure Pipelines, you can define build and release pipelines. You can use the pipelines to build images and push them to the Azure Container Registry. A release pipeline can then deploy the updated images as containers to the Azure Kubernetes service as soon as they reach the registry.

Option A is incorrect because this is normally used to automate jobs on your Azure infrastructure.

Option C is incorrect because this is used to automate the deployment of resources onto Azure.

Option D is incorrect because CRON jobs would not be effective for automating the deployment of newer container images.

For more information on deploying to Azure Kubernetes, one can visit the following URL: https://docs.microsoft.com/en-us/azure/devops/pipelines/ecosystems/kubernetes/aks-template?view=azure-devops

Domain : Design identity, governance, and monitoring solutions

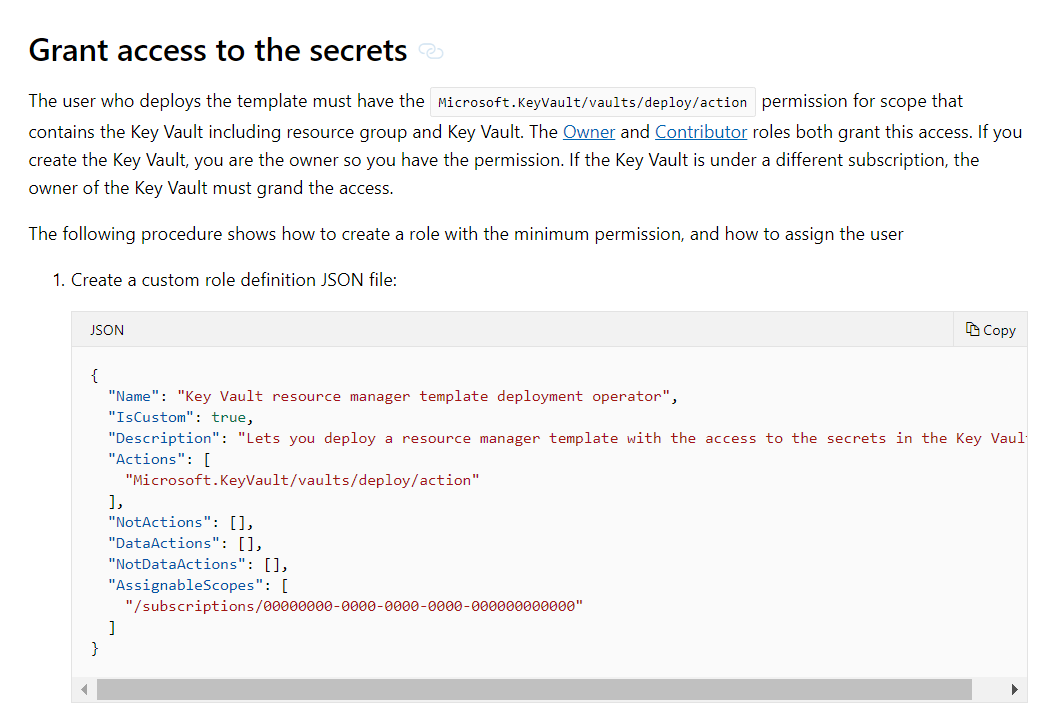

Q20 : A team is planning on deploying Azure resources by using Resource Manager templates. The templates need to reference secrets that are stored in Azure Key vault. You need to ensure deployments can be made accordingly.

Which of the following would you use to restrict access to the secrets in the key vault?

A. Access policies for the Key vault

B. An Azure policy

C. Role Based access

D. Advanced access policy for the Key vault

Correct Answer: C

Explanation

The Microsoft documentation clearly gives the steps for this. One of them is to ensure that the identity deploying the template has the right permissions on the key vault. This can be done with role-based access control.

Since this is clearly given in the documentation, all other options are invalid.

For more information on accessing secrets from Resource Manager templates, please visit the below URL: https://docs.microsoft.com/en-us/azure/azure-resource-manager/resource-manager-keyvault-parameter
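To make the scenario concrete, the sketch below generates a Resource Manager parameter file in which a parameter points at a Key Vault secret rather than containing the secret value. The helper name, parameter name (`adminPassword`), and all resource identifiers are placeholders chosen for illustration.

```python
import json


def key_vault_parameter(subscription_id, resource_group, vault_name, secret_name):
    """Build a parameter-file entry that references a Key Vault secret
    instead of embedding the secret value."""
    vault_id = (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.KeyVault/vaults/{vault_name}"
    )
    return {"reference": {"keyVault": {"id": vault_id}, "secretName": secret_name}}


# All identifiers below are placeholders, not real resources.
parameters_file = {
    "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#",
    "contentVersion": "1.0.0.0",
    "parameters": {
        "adminPassword": key_vault_parameter(
            "00000000-0000-0000-0000-000000000000",
            "example-rg",
            "example-vault",
            "ExamplePassword",
        )
    },
}

print(json.dumps(parameters_file, indent=2))
```

For a reference like this to resolve, the vault has to be enabled for template deployment, and the deploying identity needs a role that includes the `Microsoft.KeyVault/vaults/deploy/action` permission. That is where role-based access comes in.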

Domain : Design business continuity solutions

Q21 : A company needs to host a set of applications on Azure virtual machines. There are different requirements for each of the applications

- Maintain reliable performance on a set of virtual machines

- Ensure application is running in the event of a data center failure

Which of the following services can you recommend for the “Maintain reliable performance on a set of virtual machines” requirement?

A. Azure Availability Zones

B. Azure Application Gateway

C. Azure Scale Sets

D. Azure Traffic Manager

Correct Answer: C

Explanation

To ensure the reliable performance of the applications running on the set of virtual machines, you need to use Azure Scale Sets. Azure Virtual Machine Scale Sets service helps create and manage a group of VMs behind a load balancer. The configuration of these machines must be the same, and they should run on the same base OS image. The VM scale sets can automatically increase or decrease VM instances depending on the scaling rules and resource demand. Scale sets provide high availability for your applications. You can use scale sets for large-scale services, like compute, big data, and containers.

All other options are incorrect.

For more information about Azure Virtual Machine Scale Sets, please visit the following URL: https://docs.microsoft.com/en-us/azure/virtual-machine-scale-sets/overview

Domain : Design infrastructure solutions

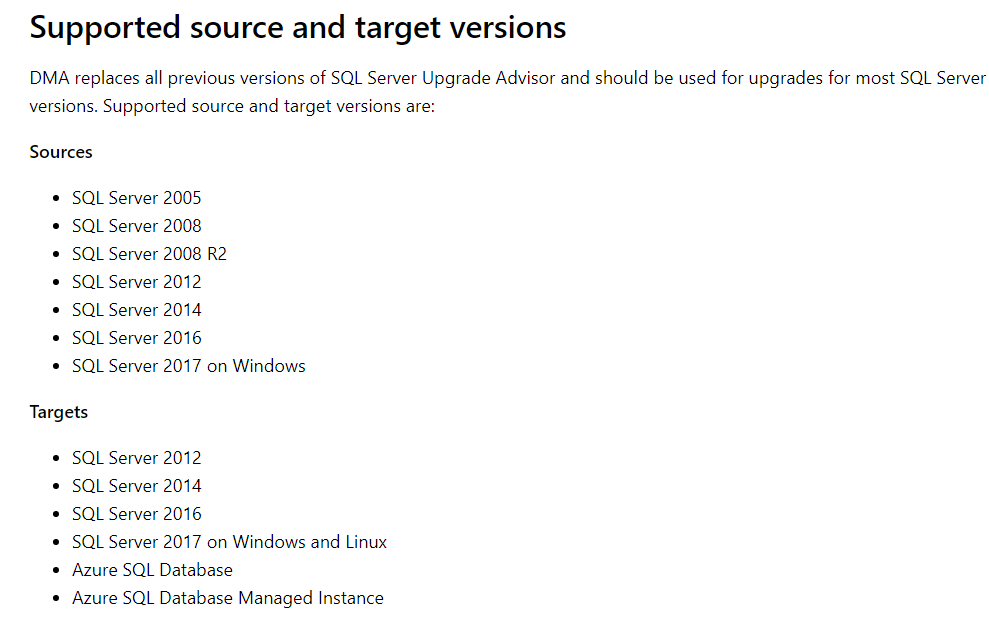

Q22 : A company has the following on-premise data stores

- A Microsoft SQL Server 2012 database

- A Microsoft SQL Server 2008 database

The data needs to be migrated to Azure.

- Requirement 1 – The data in the Microsoft SQL Server 2012 database needs to be migrated to an Azure SQL database

- Requirement 2 – The data in a table in the Microsoft SQL Server 2008 database needs to be migrated to an Azure CosmosDB account that uses the SQL API

Which of the following should be used to accomplish Requirement 1?

A. AzCopy

B. Azure CosmosDB Data Migration tool

C. Data Management Gateway

D. Data Migration Assistant

Correct Answer: D

Explanation

The Data Migration Assistant can be used to migrate the data. It supports various versions of Microsoft SQL Server, as shown below

Option A is incorrect since this works with data in Azure storage accounts

Option B is incorrect since this is used for migration of data to CosmosDB

Option C is incorrect since this is used for building a gateway with the on-premise infrastructure

For more information on the data migration assistant, please visit the below URL: https://docs.microsoft.com/en-us/sql/dma/dma-overview?view=sql-server-2017

Domain : Design business continuity solutions

Q25 : A company wants to migrate its relational data to Azure CosmosDB. The management is worried about CosmosDB high availability.

What are the two primary ways in which Azure CosmosDB provides high availability?

A. Replicates data across regions

B. Uses Azure scale sets

C. Uses Azure Traffic Manager

D. Replicates data four times in a region

E. Replicates data six times in a region

Correct Answers: A and D

Explanation

Azure Cosmos DB is a multi-model globally distributed NoSQL database. Cosmos DB stores data in atom-record-sequence (ARS) format. It unites under one roof several data management systems and exposes them as APIs. You can select between the Core (SQL) API and MongoDB API (document model), Cassandra API (column-oriented model), Gremlin API (graph model), and Table API (key-value model).

Azure CosmosDB provides high availability in two primary ways: replicating data across regions and storing four copies of the data within each region. Azure CosmosDB is available in all Azure regions, and you can change the number of regions associated with your CosmosDB account. Suppose you associate five Azure regions with your data. Every region will have four copies of the data, so there will be twenty copies of your data available to use.
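The copy count in this example is simple multiplication, sketched below. The region names are hypothetical, and the replica count per region is the figure stated in the explanation above.

```python
REPLICAS_PER_REGION = 4  # figure stated in the explanation above


def total_copies(regions):
    """Total copies of the data for an account associated with `regions`."""
    return len(regions) * REPLICAS_PER_REGION


# Hypothetical region selection for illustration.
regions = ["East US", "West US", "North Europe", "West Europe", "Southeast Asia"]
print(total_copies(regions))  # 20
```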

All other options are incorrect.

For more information about Azure CosmosDB high availability, please visit the below URL: https://docs.microsoft.com/en-us/azure/cosmos-db/high-availability

Summary

We hope you have enjoyed this article and tried out the free questions on the AZ-305 exam. These sample questions should have given you a clear outline of the actual certification exam. We recommend preparing further with practice tests, which are sets of mock questions that help identify your skill gaps on the AZ-305 exam. Until you are fully confident to face the real exam, keep learning!

Whizlabs has Practice tests, Video Courses, Hands-on Labs & Azure Sandbox for the AZ-305 exam.
