Previously we’ve covered free AWS Solutions Architect Associate exam questions to help you in your AWS Certified Solutions Architect exam preparation. Now, extending this series, here we bring the FREE AWS Developer Associate exam questions to help you with your AWS Certified Developer Associate exam preparation and make you ready for the exam.

The AWS Certified Developer Associate exam (DVA-C02) is intended for candidates in a developer role who have at least one year of hands-on experience developing and maintaining applications on AWS.

The AWS Certified Developer Associate exam validates a candidate's ability to:

- Develop, debug, and deploy cloud applications on the AWS platform

- Understand core AWS services, basic AWS architecture, and AWS best practices

We have launched 100+ hands-on labs for this exam. These guided labs help you understand the concepts in real time. You can also explore the Whizlabs AWS Playground.

Latest Updates

The AWS Certified Developer Associate exam changed on February 28, 2023. The last date to take the previous version of the exam (DVA-C01) was February 27, 2023. The new exam places more emphasis on deployment and management and less on development and refactoring. The new exam code is DVA-C02.

Note: Since January 1, 2023, all AWS Certification exams have been delivered through Pearson VUE. The last day for candidates to complete an exam appointment through PSI was December 31, 2022, at 11:59 pm UTC.

Exam Domain for AWS Developer Associate Exam (DVA-C02)

Following is the skillset for the AWS Certified Developer Associate exam:

| Domain | Weightage |
|---|---|
| Development with AWS Services | 32% |
| Security | 26% |
| Deployment | 24% |
| Troubleshooting and Optimization | 18% |

Prepare with Free AWS Developer Associate Practice Exam (DVA-C02) Questions

While preparing for the AWS Certified Developer Associate exam, you will find many preparation resources, so it is important to choose the right preparation guide. A good guide walks you through the preparation steps in the right sequence and points you to good study materials such as books, whitepapers, documentation, online courses, and sample questions.

Every preparation step matters, and you can easily find resources for each one. But practice is the most important part. Working through AWS Developer Associate exam questions helps you check your preparation level and builds the confidence you need to pass the certification exam.

So, our team of subject matter experts and certified professionals has put together some important AWS Developer Associate exam questions for you, free of charge. These questions follow the same pattern as the actual exam, and each one comes with a complete explanation and links to the related AWS documentation. Try these questions now.

1. What is used in S3 to enable client web applications that are loaded in one domain to interact with resources in a different domain? Choose the correct answer from the options below

A. CORS Configuration

B. Public Object Permissions

C. Public ACL Permissions

D. None of the above

Answer: A

Explanation: Cross-origin resource sharing (CORS) defines a way for client web applications that are loaded in one domain to interact with resources in a different domain. With CORS support in Amazon S3, you can build client-side web applications with Amazon S3 and selectively allow cross-origin access to your S3 resources.

For more information on S3 CORS configuration, please visit the link:

http://docs.aws.amazon.com/AmazonS3/latest/dev/cors.html
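As a minimal sketch, the Python snippet below builds a CORS configuration in the JSON shape that S3's PutBucketCors operation accepts (a `CORSRules` list). It also performs a simplified local check of whether a browser request from a given origin and method would match a rule. The origin `http://www.example.com` and the helper names are hypothetical, and the matching logic is only an approximation of S3's behavior, not its exact algorithm.

```python
import fnmatch
import json

def build_cors_configuration(origins, methods, max_age_seconds=3000):
    """Return a CORS configuration in the shape used by S3's PutBucketCors."""
    return {
        "CORSRules": [
            {
                "AllowedOrigins": list(origins),   # domains allowed to call the bucket
                "AllowedMethods": list(methods),   # GET, PUT, POST, DELETE, HEAD
                "AllowedHeaders": ["*"],
                "MaxAgeSeconds": max_age_seconds,  # how long browsers may cache the preflight
            }
        ]
    }

def request_allowed(config, origin, method):
    """Simplified check: does any rule match this origin (with * wildcards) and method?"""
    for rule in config["CORSRules"]:
        origin_ok = any(fnmatch.fnmatchcase(origin, p) for p in rule["AllowedOrigins"])
        if origin_ok and method in rule["AllowedMethods"]:
            return True
    return False

cors = build_cors_configuration(["http://www.example.com"], ["GET", "PUT"])
print(json.dumps(cors, indent=2))  # save this output as cors.json
```

The saved file can then be applied with `aws s3api put-bucket-cors --bucket mybucket --cors-configuration file://cors.json`, where `mybucket` stands in for your bucket name.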

2. Which of the descriptions below best describes what the following bucket policy does?

```json
{
  "Version": "2012-10-17",
  "Id": "Statement1",
  "Statement": [
    {
      "Sid": "Statement2",
      "Effect": "Allow",
      "Principal": "*",
      "Action": [
        "s3:GetObject",
        "s3:PutObject"
      ],
      "Resource": "arn:aws:s3:::mybucket/*",
      "Condition": {
        "StringLike": {
          "aws:Referer": [
            "http://www.example.com/*",
            "http://www.demo.com/*"
          ]
        }
      }
    }
  ]
}
```

Choose the correct answer from the options below

A. It allows read or write actions on the bucket ‘mybucket’

B. It allows read access to the bucket ‘mybucket’ but only if it is accessed from example.com or demo.com

C. It allows unlimited access to the bucket ‘mybucket’

D. It allows read or write access to the bucket ‘mybucket’ but only if it is accessed from example.com or demo.com

Answer: D

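Explanation: The policy allows any principal ("*") to perform s3:GetObject (read) and s3:PutObject (write) on objects in mybucket, but only when the request's aws:Referer header matches http://www.example.com/* or http://www.demo.com/*. Be aware that the Referer header is easy to spoof, so AWS advises caution when relying on aws:Referer to restrict access.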

For more information on S3 bucket policy examples, please visit the link:

http://docs.aws.amazon.com/AmazonS3/latest/dev/example-bucket-policies.html
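To make the condition concrete, here is a simplified local model of how the single Allow statement in the bucket policy above treats a request. It implements StringLike matching, which is case-sensitive and supports `*` (any run of characters) and `?` (one character). It ignores everything else IAM evaluates, and the function names are hypothetical.

```python
import re
from typing import Optional

# Values copied from the bucket policy in the question.
ALLOWED_ACTIONS = {"s3:GetObject", "s3:PutObject"}
REFERER_PATTERNS = ["http://www.example.com/*", "http://www.demo.com/*"]

def string_like(pattern: str, value: str) -> bool:
    """Case-sensitive StringLike match: * is any run of characters, ? is one character."""
    regex = "".join(
        ".*" if ch == "*" else "." if ch == "?" else re.escape(ch) for ch in pattern
    )
    return re.fullmatch(regex, value, flags=re.DOTALL) is not None

def policy_allows(action: str, referer: Optional[str]) -> bool:
    """True if the Allow statement matches; otherwise the request is implicitly denied."""
    if action not in ALLOWED_ACTIONS or referer is None:
        return False
    return any(string_like(p, referer) for p in REFERER_PATTERNS)
```

Reads and writes from either site match, while other actions, requests without a Referer, and an `https://` Referer fall through to the default deny.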

3. Is the default visibility timeout for an SQS queue 1 minute?

A. True

B. False

Answer: B

Explanation: The default visibility timeout for a queue is 30 seconds. You can change this setting for the entire queue. Typically, you should set the visibility timeout to the maximum time it takes your application to process and delete a message from the queue. When receiving messages, you can also set a specific visibility timeout for the returned messages without changing the overall queue timeout.

For more information on SQS please visit the below link:

https://aws.amazon.com/sqs/faqs/
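The toy in-memory queue below is a sketch of the visibility timeout idea, not the SQS service or its API. A received message stays hidden for the queue's default of 30 seconds, or for a per-receive override. If the consumer does not delete it in time, it becomes visible again. Time is passed in explicitly so the behavior is easy to follow. The class and method names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

DEFAULT_VISIBILITY_TIMEOUT = 30  # seconds; the SQS default

@dataclass
class ToyQueue:
    """In-memory model of SQS visibility timeouts (not the real service)."""
    visibility_timeout: int = DEFAULT_VISIBILITY_TIMEOUT
    # message id -> [body, time at which the message becomes visible again]
    _messages: Dict[str, List] = field(default_factory=dict)

    def send(self, msg_id: str, body: str, now: float = 0.0) -> None:
        self._messages[msg_id] = [body, now]

    def receive(self, now: float, visibility_timeout: Optional[int] = None) -> Optional[Tuple[str, str]]:
        # A per-receive timeout overrides the queue default for this message only.
        timeout = self.visibility_timeout if visibility_timeout is None else visibility_timeout
        for msg_id, entry in self._messages.items():
            if entry[1] <= now:           # message is currently visible
                entry[1] = now + timeout  # hide it from other consumers
                return msg_id, entry[0]
        return None

    def delete(self, msg_id: str) -> None:
        self._messages.pop(msg_id, None)
```

A message received at t=0 is invisible until t=30. After that it can be received again unless the consumer deleted it.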

4. When a failure occurs during stack creation in CloudFormation, does a rollback occur?

A. True

B. False

Answer: A

Explanation: By default, the "automatic rollback on error" feature is enabled. It causes AWS CloudFormation to delete all the AWS resources it created successfully for a stack up to the point where the error occurred. This is useful when, for example, you accidentally exceed the default limit for Elastic IP addresses, or you don't have access to an EC2 AMI you're trying to run.

This feature lets you rely on stacks being either fully created or not created at all, which simplifies system administration and layered solutions built on top of AWS CloudFormation.

For more information on CloudFormation, please refer to the below link:

https://aws.amazon.com/cloudformation/faqs/
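The all-or-nothing behavior can be pictured with the toy model below. This is not how CloudFormation is implemented. It creates hypothetical resources in order, and if one step fails with rollback enabled, it deletes the resources already created in reverse order. The status strings mirror real stack statuses. With the AWS CLI, the same choice is made through `aws cloudformation create-stack --on-failure` (ROLLBACK, DELETE, or DO_NOTHING).

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], None]]

def create_stack(steps: List[Step], rollback_on_failure: bool = True) -> Tuple[str, List[str], List[str]]:
    """Return (status, resources left in place, resources deleted during rollback)."""
    created: List[str] = []
    for name, create in steps:
        try:
            create()
        except Exception:
            if not rollback_on_failure:
                return "CREATE_FAILED", created, []  # partial stack is kept for debugging
            deleted = list(reversed(created))        # delete in reverse creation order
            return "ROLLBACK_COMPLETE", [], deleted
        created.append(name)
    return "CREATE_COMPLETE", created, []
```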

Preparing for an AWS interview? Read these top 50 AWS Interview Questions and Answers and get ready to ace the interview.

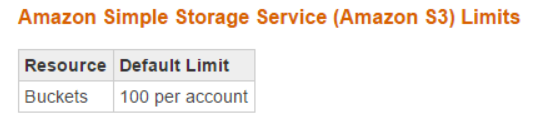

5. An administrator gets an error while trying to create a new bucket in S3, and you suspect that the bucket limit has been exceeded. What is the bucket limit per account in AWS? Choose the correct answer from the options below

A. 100

B. 50

C. 1000

D. 150

Answer: A

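Explanation: At the time this question was written, the AWS service limits documentation listed a default limit of 100 buckets per AWS account, which could be raised by requesting a limit increase. AWS has since raised the default bucket quota, so check the current Amazon S3 service quotas before relying on this number.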

For more information on AWS service limitations, please visit the link:

http://docs.aws.amazon.com/general/latest/gr/aws_service_limits.html

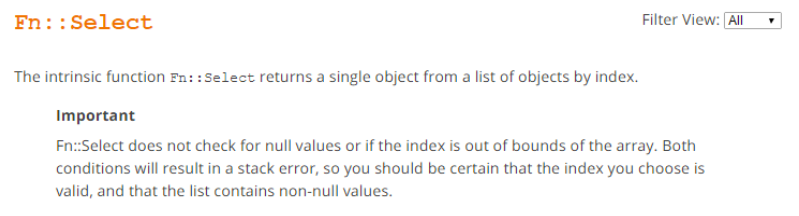

6. Which of the below functions is used in CloudFormation to return a single object from a list of objects? Choose an answer from the options below

A. Fn::GetAtt

B. Fn::Combine

C. Fn::Join

D. Fn::Select

Answer: D

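Explanation: The intrinsic function Fn::Select returns a single object from a list of objects by its index. Fn::Combine is not a CloudFormation intrinsic function.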

For more information on the function please refer to the below link:

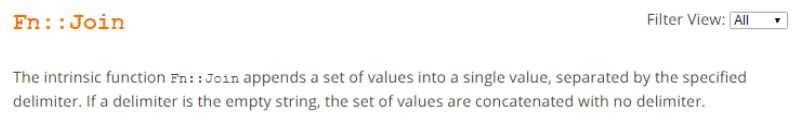
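To show what Fn::Select does, here is a small Python function that mimics it locally. The index is zero-based, and CloudFormation also accepts it as a string. The fragment mirrors the fruit-list example used in the CloudFormation documentation. The function name is hypothetical, and CloudFormation itself resolves the function at deploy time.

```python
from typing import Any, List, Union

def fn_select(index: Union[int, str], values: List[Any]) -> Any:
    """Mimic Fn::Select: return the element at a zero-based index (string indexes allowed)."""
    i = int(index)
    if not 0 <= i < len(values):
        raise IndexError("Fn::Select index must be between 0 and N-1")
    return values[i]

# Template fragment: { "Fn::Select" : [ "1", [ "apples", "grapes", "oranges", "mangoes" ] ] }
fragment = {"Fn::Select": ["1", ["apples", "grapes", "oranges", "mangoes"]]}
print(fn_select(*fragment["Fn::Select"]))
```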

7. Which of the below functions is used in CloudFormation to append a set of values into a single value? Choose an answer from the options below

A. Fn::GetAtt

B. Fn::Combine

C. Fn::Join

D. Fn::Select

Answer: C

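Explanation: The intrinsic function Fn::Join appends a set of values into a single value, separated by the specified delimiter. If the delimiter is an empty string, the values are concatenated with no delimiter.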

For more information on the function please refer to the below link:

http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/intrinsic-function-reference-join.html
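The local stand-in below for Fn::Join resolves simple `{"Ref": ...}` entries and then joins the values with the delimiter. The pseudo-parameter values in `refs` are hypothetical placeholders; in a real stack, CloudFormation supplies them. The second call follows the ARN-building pattern shown in the Fn::Join documentation.

```python
from typing import Dict, List, Union

Value = Union[str, Dict[str, str]]

def fn_join(delimiter: str, values: List[Value], refs: Dict[str, str]) -> str:
    """Mimic Fn::Join: resolve {"Ref": name} entries from refs, then join with the delimiter."""
    resolved = [refs[v["Ref"]] if isinstance(v, dict) else v for v in values]
    return delimiter.join(resolved)

# Hypothetical pseudo-parameter values, for illustration only.
refs = {"AWS::Partition": "aws", "AWS::AccountId": "123456789012"}

print(fn_join(":", ["a", "b", "c"], refs))
arn = fn_join("", ["arn:", {"Ref": "AWS::Partition"}, ":s3:::elasticbeanstalk-*-",
                   {"Ref": "AWS::AccountId"}], refs)
print(arn)
```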

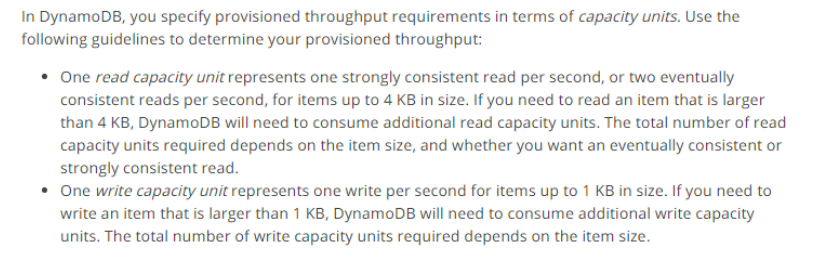

8. When you create a table or index in Amazon DynamoDB, you must specify the capacity requirements for read and write activity. What is the maximum size of an item that corresponds to a single write capacity unit?

Choose an answer from the options below.

A. 1 KB

B. 4 KB

C. 2 KB

D. 8 KB

Answer: A

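Explanation: One write capacity unit represents one write per second for an item up to 1 KB in size. Writing a larger item consumes additional write capacity units, because the item size is rounded up to the next 1 KB.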
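The rounding rule above can be written as a short calculation. This is a sketch: the function name is hypothetical, and it assumes standard (non-transactional) writes unless `transactional=True`, in which case the units double.

```python
import math

WCU_ITEM_SIZE = 1024  # bytes covered by one write capacity unit

def write_capacity_units(item_size_bytes: int, writes_per_second: int = 1,
                         transactional: bool = False) -> int:
    """WCUs needed: item size rounded up to the next 1 KB, per write; doubled for transactions."""
    units_per_write = math.ceil(item_size_bytes / WCU_ITEM_SIZE)
    multiplier = 2 if transactional else 1
    return units_per_write * writes_per_second * multiplier

print(write_capacity_units(1024))  # a 1 KB item fits in one WCU
print(write_capacity_units(3584))  # a 3.5 KB item is rounded up to 4 KB
```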

Good preparation always brings good results. Follow this comprehensive guide for your AWS Developer Associate Exam Preparation and give your preparation a new edge.

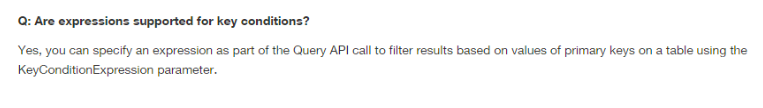

9. What can be used in DynamoDB as part of the Query API call to filter results based on the values of primary keys? Choose an answer from the options below

A. Expressions

B. Conditions

C. Query API

D. Scan API

Answer: A

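Explanation: In a Query call, you use expressions. A key condition expression (KeyConditionExpression) specifies the partition key value and, optionally, a condition on the sort key, so only items with matching primary key values are read. A filter expression can further narrow the results after they are read.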

For more information on DynamoDB please refer to the below link:

https://aws.amazon.com/dynamodb/faqs/
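As an illustration, the snippet below builds low-level Query parameters that filter on primary key values through a KeyConditionExpression. It also includes a local stand-in showing which items such a key condition would select. The `Music` table, with partition key `Artist` and sort key `SongTitle`, is a hypothetical example. With boto3, the parameters could be passed as `boto3.client("dynamodb").query(**params)`.

```python
from typing import Any, Dict, List

def build_query_params(table: str, artist: str, title_prefix: str) -> Dict[str, Any]:
    """Query request that selects items by partition key value and sort key prefix."""
    return {
        "TableName": table,
        "KeyConditionExpression": "Artist = :a AND begins_with(SongTitle, :t)",
        "ExpressionAttributeValues": {":a": {"S": artist}, ":t": {"S": title_prefix}},
    }

def local_key_condition(items: List[Dict[str, str]], artist: str,
                        title_prefix: str) -> List[Dict[str, str]]:
    """Local stand-in for what the key condition selects (equality + sort key prefix)."""
    return [i for i in items
            if i["Artist"] == artist and i["SongTitle"].startswith(title_prefix)]
```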

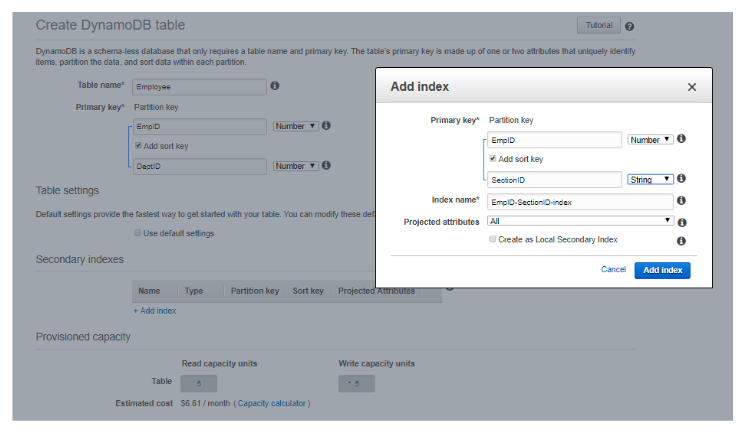

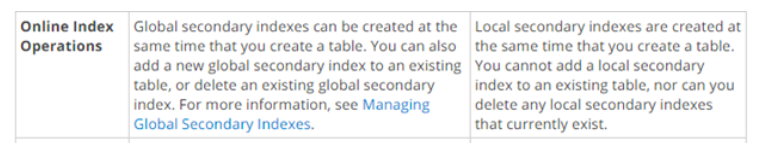

10. Can a global secondary index be created at the same time as the table?

A. True

B. False

Answer: A

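Explanation: Yes. You can define global secondary indexes when you create a table, and you can also add them to an existing table later. Local secondary indexes, by contrast, can only be created at the same time as the table.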

For more information on DynamoDB Indexes, please visit the link:

http://docs.aws.amazon.com/amazondynamodb/latest/developerguide/SecondaryIndexes.html
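Here is a sketch of CreateTable parameters that define a GSI in the same call that creates the table. The `GameScores` table, its attributes, and the index name are hypothetical. The helper collects every key attribute so you can check that each one is declared in AttributeDefinitions, which DynamoDB requires. With boto3, the parameters could be passed as `boto3.client("dynamodb").create_table(**params)`.

```python
from typing import Any, Dict, Set

def game_scores_table_params() -> Dict[str, Any]:
    """CreateTable parameters for a hypothetical table with a GSI defined up front."""
    return {
        "TableName": "GameScores",
        "AttributeDefinitions": [
            {"AttributeName": "UserId", "AttributeType": "S"},
            {"AttributeName": "GameTitle", "AttributeType": "S"},
            {"AttributeName": "TopScore", "AttributeType": "N"},
        ],
        "KeySchema": [
            {"AttributeName": "UserId", "KeyType": "HASH"},      # partition key
            {"AttributeName": "GameTitle", "KeyType": "RANGE"},  # sort key
        ],
        "GlobalSecondaryIndexes": [
            {
                "IndexName": "GameTitleIndex",
                "KeySchema": [
                    {"AttributeName": "GameTitle", "KeyType": "HASH"},
                    {"AttributeName": "TopScore", "KeyType": "RANGE"},
                ],
                "Projection": {"ProjectionType": "ALL"},
            }
        ],
        "BillingMode": "PAY_PER_REQUEST",  # on-demand, so no ProvisionedThroughput blocks
    }

def key_attribute_names(params: Dict[str, Any]) -> Set[str]:
    """All attributes used by the table's and the GSIs' key schemas."""
    names = {k["AttributeName"] for k in params["KeySchema"]}
    for gsi in params.get("GlobalSecondaryIndexes", []):
        names |= {k["AttributeName"] for k in gsi["KeySchema"]}
    return names
```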

11. A Global Secondary Index can have a different partition key and sort key from those of its base table.

A. True

B. False

Answer: A

Explanation: A GSI can have a partition key and sort key that are different from those of its base table, but they don't have to be; a GSI can also use the same partition key as the base table. The AWS documentation says that a GSI's keys can be different, not that they must be.

AWS Console test: creating a table whose GSI uses the same partition key as the base table succeeds, which shows that a GSI can share the base table's partition key.

- Global secondary index: an index with a partition key and sort key that can be different from those of the base table. It is called "global" because queries on the index can span all of the data in the base table, across all partitions.

- Local secondary index: an index that has the same partition key as the base table but a different sort key. It is called "local" because every partition of the index is scoped to a base table partition that has the same partition key value.

For more information on DynamoDB Indexes, please visit the link:

http://docs.aws.amazon.com/amazondynamodb/latest/developerguide/SecondaryIndexes.html
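The index definitions above can be encoded as a small, simplified check: a GSI may use any key schema, including the base table's partition key, while an LSI must reuse the base partition key with a different sort key. The function and attribute names are hypothetical, and the check ignores other limits such as the number of indexes per table.

```python
from typing import Dict, Optional

def allowed_index_types(base_pk: str, base_sk: Optional[str],
                        index_pk: str, index_sk: Optional[str]) -> Dict[str, bool]:
    """Which index types could use this key schema (simplified from the definitions)."""
    return {
        # A GSI may use any partition key, including the base table's own.
        "global": True,
        # An LSI must reuse the base table's partition key and have a different sort key.
        "local": index_pk == base_pk and index_sk is not None and index_sk != base_sk,
    }

# Base table keyed on (UserId, GameTitle); an index on (UserId, TopScore) can be either type.
print(allowed_index_types("UserId", "GameTitle", "UserId", "TopScore"))
```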

12. What in AWS can be used to restrict access to SWF?

A. ACL

B. SWF Roles

C. IAM

D. None of the above

Answer: C

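Explanation: Access to Amazon SWF is controlled with AWS Identity and Access Management (IAM). IAM policies let you grant or deny users and roles permission to perform SWF actions on specific resources, such as SWF domains.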

For more information on SWF, please visit the link:

https://aws.amazon.com/swf/faqs/

Also Read: Top AWS Developer Interview Questions

13. An IT admin wants to enable long polling on an SQS queue. What must be done to enable long polling in SQS? Choose the correct answer from the options below

A. Create a dead letter queue

B. Set the message size to 256KB

C. Set the ReceiveMessageWaitTimeSeconds property of the queue to 0 seconds

D. Set the ReceiveMessageWaitTimeSeconds property of the queue to 20 seconds

Answer: D

Explanation: Amazon SQS long polling is a way to retrieve messages from SQS queues. Short polling returns a response immediately, even if the queue is empty. Long polling doesn't return a response until a message arrives in the queue or the long poll times out.

Long polling makes it inexpensive to retrieve messages from your Amazon SQS queue as soon as they become available, and it can reduce the cost of using SQS by reducing the number of empty receives. Long polling is enabled when ReceiveMessageWaitTimeSeconds is set to a value greater than 0 (the maximum is 20 seconds). A value of 0 means short polling, which is why option C is incorrect.

For more information on Long polling, please refer to the link:

http://docs.aws.amazon.com/AWSSimpleQueueService/latest/SQSDeveloperGuide/sqs-long-polling.html
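Here is a minimal helper, with hypothetical names, that produces the queue attribute for long polling and reports the resulting polling mode. SQS queue attribute values are passed as strings. With boto3, the attributes could be applied through `boto3.client("sqs").set_queue_attributes(QueueUrl=queue_url, Attributes=long_polling_attributes(20))`.

```python
from typing import Dict

MAX_WAIT_SECONDS = 20  # upper limit for ReceiveMessageWaitTimeSeconds

def long_polling_attributes(wait_seconds: int) -> Dict[str, str]:
    """Queue attributes for SetQueueAttributes; SQS attribute values are strings."""
    if not 0 <= wait_seconds <= MAX_WAIT_SECONDS:
        raise ValueError("ReceiveMessageWaitTimeSeconds must be between 0 and 20")
    return {"ReceiveMessageWaitTimeSeconds": str(wait_seconds)}

def polling_mode(attributes: Dict[str, str]) -> str:
    """0 means short polling; any value from 1 to 20 enables long polling."""
    return "long" if int(attributes["ReceiveMessageWaitTimeSeconds"]) > 0 else "short"
```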

14. According to the IAM policy evaluation logic, what is the starting point when AWS evaluates access permissions for any resource? Choose the correct answer from the options below

A. A default deny

B. An explicit deny

C. An allow

D. An explicit allow

Answer: A

Explanation: The diagram below shows the evaluation logic of IAM policies. Every request starts with a default deny (an implicit deny). An explicit allow in a policy overrides this default, and an explicit deny in any policy overrides any allow.

For more information on the IAM policy evaluation logic, please refer to the link:

http://docs.aws.amazon.com/IAM/latest/UserGuide/reference_policies_evaluation-logic.html
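The core of that logic is sketched below for a flat list of statements. This is a deliberate simplification that ignores SCPs, permissions boundaries, session policies, resources, and conditions. Each request starts as an implicit deny, any matching explicit Deny wins, and otherwise a matching Allow grants access. Action matching is case-insensitive with `*` wildcards. The function name is hypothetical.

```python
import fnmatch
from typing import Dict, Iterable

def evaluate(statements: Iterable[Dict[str, str]], action: str) -> str:
    """Simplified IAM evaluation over a flat list of statements."""
    decision = "ImplicitDeny"  # every request starts as a default deny
    for st in statements:
        if not fnmatch.fnmatchcase(action.lower(), st["Action"].lower()):
            continue
        if st["Effect"] == "Deny":
            return "ExplicitDeny"  # an explicit deny always wins
        if st["Effect"] == "Allow":
            decision = "Allow"     # overrides the default deny unless a Deny also matches
    return decision
```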

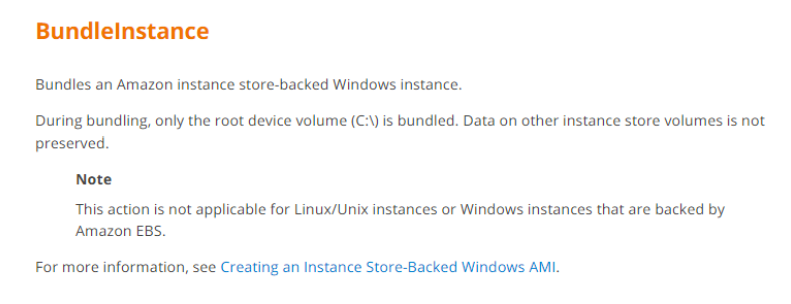

15. Which API call is used to bundle an Amazon instance store-backed Windows instance? Choose the correct answer from the options below

A. AllocateInstance

B. CreateImage

C. BundleInstance

D. ami-register-image

Answer: C

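Explanation: BundleInstance bundles an instance store-backed Windows instance. During bundling, only the root device volume (C:\) is bundled; data on other instance store volumes is not preserved. CreateImage, by contrast, creates an AMI from an Amazon EBS-backed instance.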

For more information on BundleInstance, please refer to the below link:

http://docs.aws.amazon.com/AWSEC2/latest/APIReference/API_BundleInstance.html

Domain: Development with AWS Services

16. A developer is building an application that needs access to an S3 bucket. An IAM role has been created with the required permissions to access the S3 bucket. Which API call should the developer use in the application so that the code can access the S3 bucket?

A. IAM: AccessRole

B. STS: GetSessionToken

C. IAM:GetRoleAccess

D. STS:AssumeRole

Correct Answer: D

Explanation

This is given in the AWS Documentation.

A role specifies a set of permissions that you can use to access AWS resources. In that sense, it is similar to an IAM user. An application assumes a role to receive permissions to carry out required tasks and interact with AWS resources. The role can be in your own account or any other AWS account. For more information about roles, their benefits, and how to create and configure them, see IAM Roles, and Creating IAM Roles. To learn about the different methods that you can use to assume a role, see Using IAM Roles.

Important

The permissions of your IAM user and any roles that you assume are not cumulative. Only one set of permissions is active at a time. When you assume a role, you temporarily give up your previous user or role permissions and work with the permissions assigned to the role. When you exit the role, your user permissions are automatically restored.

To assume a role, an application calls the AWS STS AssumeRole API operation and passes the ARN of the role to use. When you call AssumeRole, you can optionally pass a JSON policy. This allows you to restrict permissions for the role’s temporary credentials. This is useful when you need to give the temporary credentials to someone else. They can use the role’s temporary credentials in subsequent AWS API calls to access resources in the account that owns the role. You cannot use the passed policy to grant permissions that are in excess of those allowed by the permissions policy of the role that is being assumed. To learn more about how AWS determines the effective permissions of a role, see Policy Evaluation Logic.

Option A is incorrect because IAM does not have this API.

Option B is incorrect because STS: GetSessionToken is used if you want to use MFA to protect programmatic calls to specific AWS API operations like Amazon EC2 StopInstances. MFA-enabled IAM users would need to call GetSessionToken and submit an MFA code associated with their MFA device.

Option C is incorrect because IAM does not have this API.

For more information on switching roles, please refer to the below Link: https://docs.aws.amazon.com/IAM/latest/UserGuide/id_roles_use_switch-role-api.html
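The sketch below shows the shape of that flow. It builds the AssumeRole arguments, then maps the temporary credentials from the response into the keyword arguments that `boto3.client` accepts. The role ARN, session name, and bucket name in the commented usage are hypothetical. The boto3 calls are shown only as comments, so the snippet runs without AWS access.

```python
from typing import Any, Dict

def assume_role_params(role_arn: str, session_name: str,
                       duration_seconds: int = 3600) -> Dict[str, Any]:
    """Keyword arguments for STS AssumeRole (3600 seconds is also the service default)."""
    return {"RoleArn": role_arn, "RoleSessionName": session_name,
            "DurationSeconds": duration_seconds}

def client_kwargs_from(response: Dict[str, Any]) -> Dict[str, str]:
    """Map the temporary credentials in an AssumeRole response to boto3 client kwargs."""
    creds = response["Credentials"]
    return {
        "aws_access_key_id": creds["AccessKeyId"],
        "aws_secret_access_key": creds["SecretAccessKey"],
        "aws_session_token": creds["SessionToken"],
    }

# Usage with boto3 (not executed here; names are hypothetical):
# import boto3
# sts = boto3.client("sts")
# resp = sts.assume_role(**assume_role_params(
#     "arn:aws:iam::123456789012:role/S3AccessRole", "thumbnail-app"))
# s3 = boto3.client("s3", **client_kwargs_from(resp))
# s3.list_objects_v2(Bucket="my-bucket")
```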

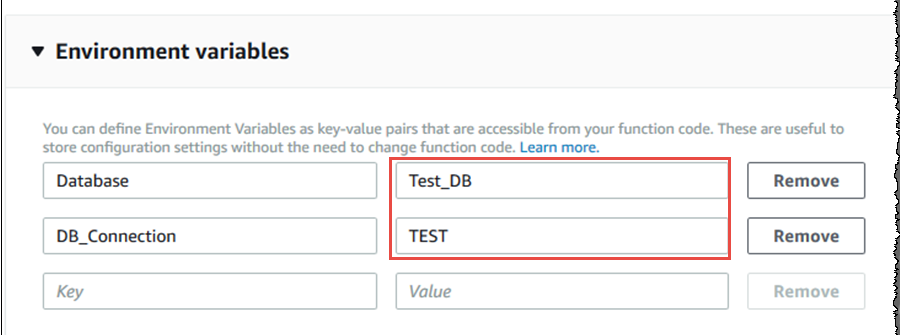

17. A company is writing a Lambda function that will run in multiple stages, such as dev, test, and production. The function is dependent upon several external services, and it must call different endpoints for these services based on the function's deployment stage.

What Lambda feature will enable the developer to ensure that the code references the correct endpoints when running in each stage?

A. Tagging

B. Concurrency

C. Aliases

D. Environment variables

Correct Answer: D

Explanation

You can create different environment variables in the Lambda function and use them to point to the right service endpoints for each stage. For example, the AWS documentation shows how environment variables can hold database connection settings.

Option A is invalid since this can only be used to add metadata for the function.

Option B is invalid since this is used for managing the concurrency of execution.

Option C is invalid since this is used for managing the different versions of your Lambda function.

For more information on AWS Lambda environment variables, please refer to the below Link: https://docs.aws.amazon.com/lambda/latest/dg/env_variables.html
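A minimal Python handler along these lines reads its stage-specific endpoint from environment variables. The variable names `STAGE` and `PAYMENTS_API_URL` are hypothetical; each stage's function configuration would set its own values, so the same code runs unchanged in dev, test, and production.

```python
import os

def lambda_handler(event, context):
    """Read stage-specific settings from environment variables set on each deployment."""
    # Hypothetical variable names; each stage's configuration sets its own values.
    stage = os.environ.get("STAGE", "dev")
    endpoint = os.environ["PAYMENTS_API_URL"]  # fails fast if the stage forgot to set it
    return {"stage": stage, "endpoint": endpoint}
```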

Domain: Deployment

18. You are using AWS SAM to define a Lambda function and have configured CodeDeploy to manage deployment patterns. The new version of the Lambda function is working as expected. Which of the following will shift traffic from the original Lambda function to the new Lambda function in the shortest time frame?

A. Canary10Percent5Minutes

B. Linear10PercentEvery10Minutes

C. Canary10Percent15Minutes

D. Linear10PercentEvery5Minute

Correct Answer: A

Explanation

With a Canary deployment preference type, traffic is shifted in two increments. With Canary10Percent5Minutes, 10 percent of traffic is shifted in the first increment, and the remaining 90 percent is shifted 5 minutes later.

Option B is incorrect as Linear10PercentEvery10Minutes will add 10 percent traffic linearly to a new version every 10 minutes. So, after 100 minutes all traffic will be shifted to the new version.

Option C is incorrect as Canary10Percent15Minutes will send 10 percent traffic to the new version and 15 minutes later complete deployment by sending all traffic to the new version.

Option D is incorrect as Linear10PercentEvery5Minute will add 10 percent traffic linearly to the new version every 5 minutes. So, after 50 minutes all traffic will be shifted to the new version.

For more information on Deployment Preference Type for AWS SAM templates, refer to the following URL: https://docs.aws.amazon.com/serverless-application-model/latest/developerguide/automating-updates-to-serverless-apps.html
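To compare the four options mechanically, the sketch below parses each preference name and estimates the minutes until all traffic is shifted. A canary shifts everything after one interval. For linear types, it uses the same counting as the explanations above, one interval per increment, which is an upper bound. The parser and function names are hypothetical, and the regular expression is written only for names shaped like these options.

```python
import math
import re

PATTERN = re.compile(r"(Canary|Linear)(\d+)Percent(?:Every)?(\d+)Minutes?")

def minutes_to_full_shift(preference: str) -> int:
    """Canary: one interval. Linear: upper bound of one interval per increment."""
    match = PATTERN.fullmatch(preference)
    if match is None:
        raise ValueError(f"unrecognized preference: {preference}")
    kind, percent, interval = match.group(1), int(match.group(2)), int(match.group(3))
    if kind == "Canary":
        return interval
    return math.ceil(100 / percent) * interval

options = ["Canary10Percent5Minutes", "Linear10PercentEvery10Minutes",
           "Canary10Percent15Minutes", "Linear10PercentEvery5Minute"]
print(min(options, key=minutes_to_full_shift))
```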

Domain: Development with AWS Services

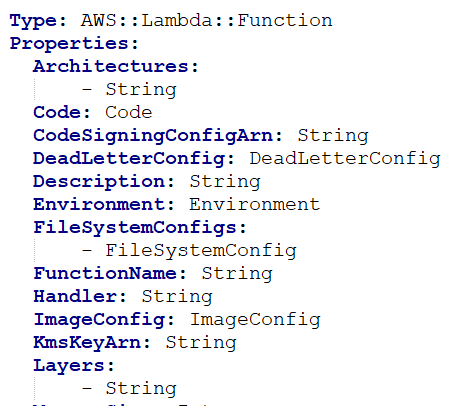

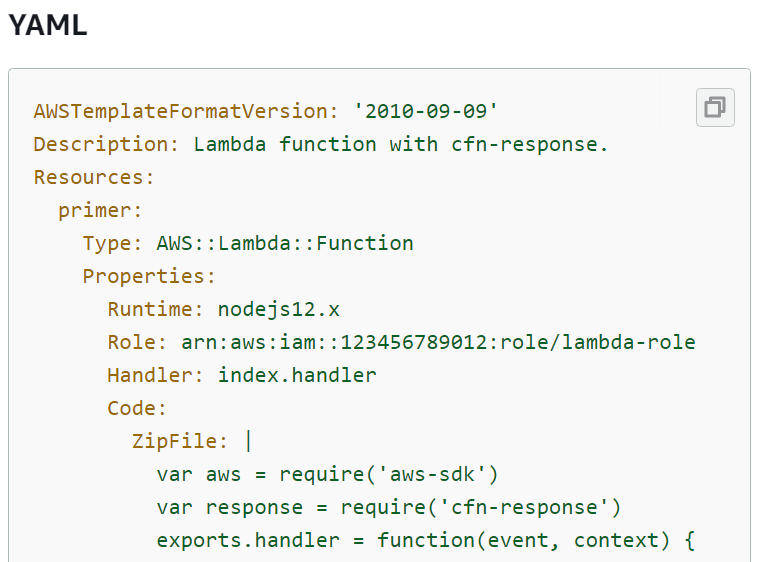

19. You are using S3 buckets to store images. These S3 buckets invoke a Lambda function on upload. The Lambda function creates thumbnails of the images and stores them in another S3 bucket. An AWS CloudFormation template is used to create the Lambda function with the resource type "AWS::Lambda::Function". Which of the following properties specifies the name of the method that Lambda calls to execute the function?

Sample CloudFormation template:

A. Function Name

B. Layers

C. Environment

D. Handler

Correct Answer: D

Explanation

The handler is the name of the method within your code that Lambda calls to execute the function.

Option A is incorrect as FunctionName is the name of the Lambda function itself, not the method within the code that Lambda calls.

Option B is incorrect as it’s a list of function layers added to the Lambda function execution environment.

Option C is incorrect as these are variables that are accessible during Lambda function execution.

For more information on declaring Lambda Function in AWS CloudFormation Template, refer to the following URL: https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/aws-resource-lambda-function.html
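For a Python function, the Handler value takes the form `module.function`. The simplified model below, which is not the actual Lambda runtime, splits that string and resolves the function with `importlib`. The properties dict with `thumbnail.lambda_handler` is hypothetical; `python3.12` is a Lambda runtime identifier.

```python
import importlib
from typing import Callable, Tuple

def split_handler(handler: str) -> Tuple[str, str]:
    """'module.function' -> ('module', 'function'), as written in the Handler property."""
    module_name, _, function_name = handler.rpartition(".")
    if not module_name or not function_name:
        raise ValueError("Handler must look like 'module.function'")
    return module_name, function_name

def resolve_handler(handler: str) -> Callable:
    """Import the module and return the named function (simplified runtime model)."""
    module_name, function_name = split_handler(handler)
    return getattr(importlib.import_module(module_name), function_name)

# Hypothetical CloudFormation properties for the thumbnail function.
properties = {"Handler": "thumbnail.lambda_handler", "Runtime": "python3.12"}
print(split_handler(properties["Handler"]))
```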

Domain: Monitoring and Troubleshooting

20. Before deploying an application to production, the developer team needs to test the application latency locally using the X-Ray daemon. For this testing, they want to skip checking Amazon EC2 instance metadata.

Which of the following daemon options should they use?

A. ~/xray-daemon$ ./xray -r

B. ~/xray-daemon$ ./xray -t

C. ~/xray-daemon$ ./xray -b

D. ~/xray-daemon$ ./xray -o

Correct Answer: D

Explanation

Running the daemon as ~/xray-daemon$ ./xray -o (local mode) is intended for running the X-Ray daemon locally rather than on an Amazon EC2 instance. This option skips checking Amazon EC2 instance metadata.

Option A is incorrect as -r assumes the specified IAM role so that the daemon can upload segments to a different account.

Option B is incorrect as this command can be used to bind a different TCP port for the X-Ray service.

Option C is incorrect as this command can be used to bind a different UDP port for the X-Ray service.

For more information on configuring AWS X-Ray daemon, refer to the following URL: https://docs.aws.amazon.com/xray/latest/devguide/xray-daemon-configuration.html
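For local latency tests, an application can send segments straight to the daemon over UDP. The daemon listens on 127.0.0.1:2000 by default, and each payload is a JSON header line followed by the segment document. The sketch below builds such a payload. The segment name is hypothetical, and the send helper is defined but not called, so nothing is sent unless you run the daemon (for example, with `./xray -o`) and call it yourself.

```python
import json
import secrets
import socket
from typing import Dict

DAEMON_ADDRESS = ("127.0.0.1", 2000)  # daemon's default UDP listener
HEADER = {"format": "json", "version": 1}

def new_trace_id(start: float) -> str:
    """Trace ID: version 1, 8 hex digits of epoch seconds, 24 random hex digits."""
    return f"1-{int(start):08x}-{secrets.token_hex(12)}"

def build_segment(name: str, start: float, end: float) -> Dict[str, object]:
    return {
        "name": name,
        "id": secrets.token_hex(8),  # 16 hex digits
        "trace_id": new_trace_id(start),
        "start_time": start,
        "end_time": end,
    }

def encode_for_daemon(segment: Dict[str, object]) -> bytes:
    """UDP payload: JSON header, newline, then the segment document."""
    return (json.dumps(HEADER) + "\n" + json.dumps(segment)).encode("utf-8")

def send_to_daemon(payload: bytes) -> None:
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, DAEMON_ADDRESS)
```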

Domain: Security

21. Your company currently stores its objects in S3. The current request rate is around 11,000 GET requests per second. There is now a mandate for objects to be encrypted at rest, so you enable server-side encryption using AWS KMS (SSE-KMS). Now performance issues are occurring. What could be the main reason behind this?

A. Amazon S3 will now throttle the requests since they are now being encrypted using KMS

B. You need to also enable versioning to ensure optimal performance

C. You are now exceeding the throttle limits for KMS API calls

D. You need to also enable CORS to ensure optimal performance

Correct Answer: C

Explanation

This is also mentioned in the AWS Documentation.

You can make API requests directly or by using an integrated AWS service that makes API requests to AWS KMS on your behalf. The limit applies to both kinds of requests.

For example, you might store data in Amazon S3 using server-side encryption with AWS KMS (SSE-KMS). Each time you upload or download an S3 object that's encrypted with SSE-KMS, Amazon S3 makes a GenerateDataKey (for uploads) or Decrypt (for downloads) request to AWS KMS on your behalf. These requests count toward your limit, so AWS KMS throttles the requests if you exceed a combined total of 5,500 (or 10,000 or more, depending on the Region) uploads or downloads per second of S3 objects encrypted with SSE-KMS. At around 11,000 GET requests per second, the application can exceed this shared limit.

Option A is incorrect because S3 does not throttle requests just because encryption is enabled.

Options B and D are incorrect because neither versioning nor CORS improves performance.

For more information on AWS KMS limits, please refer to the below URL: https://docs.aws.amazon.com/kms/latest/developerguide/limits.html
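The arithmetic behind this answer fits in a few lines. Assuming S3 Bucket Keys are not enabled, every SSE-KMS download costs one KMS Decrypt call and every upload costs one GenerateDataKey call. Comparing that total with the shared quota shows why 11,000 GETs per second get throttled. The quota values are the example figures from the explanation, and the function names are hypothetical.

```python
def kms_requests_per_second(gets_per_second: int, puts_per_second: int = 0) -> int:
    """One Decrypt per SSE-KMS download, one GenerateDataKey per upload (no S3 Bucket Key)."""
    return gets_per_second + puts_per_second

def exceeds_kms_quota(gets_per_second: int, quota_per_second: int,
                      puts_per_second: int = 0) -> bool:
    """True if the S3 traffic needs more KMS requests than the shared quota allows."""
    return kms_requests_per_second(gets_per_second, puts_per_second) > quota_per_second

for quota in (5_500, 10_000):  # example quota values; check your Region's actual quota
    print(quota, exceeds_kms_quota(11_000, quota))
```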

Domain: Deployment

22. Your company has asked you to maintain an application using Elastic Beanstalk. They require that the infrastructure maintain its full capacity while updates are deployed to the application. Which of the following deployment methods should you use for this requirement?

A. All at once

B. Rolling

C. Immutable

D. Rolling with additional batch

Correct Answers: C and D

Explanation

Since the only requirement is that the infrastructure maintains its full capacity, both C and D are correct.

You can now use an immutable deployment policy when updating your application or environment configuration on Elastic Beanstalk. This policy is well suited for updates in production environments where you want to minimize downtime and reduce the risk from failed deployments. It ensures that the impact of a failed deployment is limited to a single instance and allows your application to serve traffic at full capacity throughout the update.

You can now also use a rolling with additional batch policy when updating your application. This policy ensures that the impact of a failed deployment is limited to a single batch of instances and allows your application to serve traffic at full capacity throughout the update.

Option A is incorrect because All at once is used to deploy the new version to all instances simultaneously. All instances in your environment are out of service for a short time while the deployment occurs.

Option B is incorrect because Rolling is used to deploy the new version in batches. Each batch is taken out of service during the deployment phase, reducing your environment’s capacity by the number of instances in a batch.

Please refer to the following links for more information: https://docs.aws.amazon.com/elasticbeanstalk/latest/dg/using-features.rolling-version-deploy.html#environments-cfg-rollingdeployments-method, https://docs.aws.amazon.com/elasticbeanstalk/latest/dg/environmentmgmt-updates-imm
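The capacity argument can be summarized with a simplified model of the minimum number of instances serving traffic during a deployment. It assumes a fixed fleet size and a single batch size. The policy strings match Elastic Beanstalk's DeploymentPolicy values, while the function name is hypothetical.

```python
def min_in_service(policy: str, instances: int, batch_size: int) -> int:
    """Lowest number of instances serving traffic during a deployment (simplified)."""
    if policy == "AllAtOnce":
        return 0                       # every instance is updated at the same time
    if policy == "Rolling":
        return instances - batch_size  # one batch is out of service at a time
    if policy in ("RollingWithAdditionalBatch", "Immutable"):
        return instances               # new instances launch first, so capacity never drops
    raise ValueError(f"unknown policy: {policy}")

policies = ["AllAtOnce", "Rolling", "RollingWithAdditionalBatch", "Immutable"]
print([p for p in policies if min_in_service(p, 4, 1) == 4])
```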

Domain: Refactoring

23. How many read request units of the DynamoDB table are required for an item up to 8 KB using one strongly consistent read request?

A. 1

B. 2

C. 4

D. 8

Correct Answer: B

Explanation

One read request unit represents one strongly consistent read request, or two eventually consistent read requests for an item up to 4 KB in size.

Because the item in the question is up to 8 KB, a strongly consistent read needs 8 KB / 4 KB = 2 read request units.
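The same rule can be written as a small calculation: the item size is rounded up to the next 4 KB, eventually consistent reads cost half, and transactional reads cost double. The function name is hypothetical.

```python
import math

RCU_ITEM_SIZE = 4 * 1024  # bytes covered by one read request unit

def read_units(item_size_bytes: int, consistency: str = "strong") -> float:
    """Read units for one item read: size rounded up to the next 4 KB, scaled by consistency."""
    blocks = math.ceil(item_size_bytes / RCU_ITEM_SIZE)
    factor = {"eventual": 0.5, "strong": 1.0, "transactional": 2.0}[consistency]
    return blocks * factor

print(read_units(8 * 1024))  # the 8 KB item from the question, strongly consistent
```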

Domain: Development with AWS Services

24. Your application is developed to pick up metrics from several servers and push them to CloudWatch. At times, the application gets HTTP 429 client errors. Which of the following can be done from the programming side to resolve such errors?

A. Use the AWS CLI instead of the SDK to push the metrics

B. Ensure that all metrics have a timestamp before sending them across

C. Use exponential backoff in your requests

D. Enable encryption for the requests

Correct Answer: C

Explanation

These errors occur because the API calls are being throttled when too many requests are sent. The best way to mitigate this is to space out the API calls, for example by retrying throttled requests with progressively longer waits.

This is also given in the AWS Documentation.

In addition to simple retries, each AWS SDK implements an exponential backoff algorithm for better flow control. The idea behind exponential backoff is to use progressively longer waits between retries for consecutive error responses. You should implement a maximum delay interval, as well as a maximum number of retries. The maximum delay interval and maximum number of retries are not necessarily fixed values and should be set based on the operation being performed and other local factors, such as network latency.

Option A is invalid because the AWS CLI makes the same API calls as the SDK; the problem is the number of requests, not the tool used to send them.

Option B is invalid because adding timestamps to the metrics does not reduce the request rate, so it does not address throttling.

Option D is invalid because encrypting the requests has no effect on throttling.

For more information on API retries, please refer to the below URL: https://docs.aws.amazon.com/general/latest/gr/api-retries.html
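AWS SDKs already retry throttled calls for you; in boto3, for example, the retry mode and attempt count can be tuned through botocore's `Config(retries={...})`. The sketch below only illustrates the idea of exponential backoff with "full jitter" and a capped delay. `ThrottledError` is a hypothetical stand-in for a 429 response, and the sleep function is injectable so the behavior can be checked without waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

class ThrottledError(Exception):
    """Hypothetical stand-in for an HTTP 429 / throttling response."""

def call_with_backoff(operation: Callable[[], T], max_retries: int = 5,
                      base_delay: float = 0.1, max_delay: float = 5.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry on throttling with exponential backoff and full jitter, capped at max_delay."""
    for attempt in range(max_retries + 1):
        try:
            return operation()
        except ThrottledError:
            if attempt == max_retries:
                raise  # give up after the maximum number of retries
            # Wait a random time up to base * 2^attempt, never more than max_delay.
            sleep(random.uniform(0, min(max_delay, base_delay * 2 ** attempt)))
    raise RuntimeError("unreachable")
```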

Domain: Refactoring

25. You've defined a DynamoDB table with a read capacity of 5 and a write capacity of 5. Which of the following statements are TRUE?

A. Strongly consistent reads of a maximum of 20 KB per second

B. Eventually consistent reads of a maximum of 20 KB per second

C. Strongly consistent reads of a maximum of 40 KB per second

D. Eventually consistent reads of a maximum of 40 KB per second

E. Maximum writes of 5 KB per second

Correct Answers: A, D and E

Explanation

This is also given in the AWS Documentation.

For example, suppose that you create a table with 5 read capacity units and 5 write capacity units. With these settings, your application could:

- Perform strongly consistent reads of up to 20 KB per second (4 KB × 5 read capacity units).

- Perform eventually consistent reads of up to 40 KB per second (twice as much read throughput).

- Write up to 5 KB per second (1 KB × 5 write capacity units).

Based on the documentation, all other options are incorrect.

For more information on provisioned throughput, please refer to the below URL: https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/HowItWorks.ProvisionedThroughput.html
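The three figures in the documentation example follow directly from the unit sizes: 4 KB per RCU, with eventually consistent reads giving twice the throughput, and 1 KB per WCU. The function name below is hypothetical.

```python
from typing import Tuple

RCU_BLOCK_KB = 4  # one RCU = one strongly consistent read/second of up to 4 KB
WCU_BLOCK_KB = 1  # one WCU = one write/second of up to 1 KB

def max_throughput_kb_per_second(rcu: int, wcu: int) -> Tuple[int, int, int]:
    """Return (strongly consistent reads, eventually consistent reads, writes) in KB/s."""
    strong = rcu * RCU_BLOCK_KB
    eventual = strong * 2  # eventually consistent reads cost half as much
    writes = wcu * WCU_BLOCK_KB
    return strong, eventual, writes

print(max_throughput_kb_per_second(5, 5))
```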

Domain: Monitoring and Troubleshooting

26. When calling the RunInstances API operation on Amazon EC2, the following error message was returned.

```
A client error (UnauthorizedOperation) occurred when calling the RunInstances operation:
You are not authorized to perform this operation. Encoded authorization failure message:
oGsbAaIV7wlfj8zUqebHUANHzFbmkzILlxyj__y9xwhIHk99U_cUq1FIeZnskWDjQ1wSHStVfdCEyZILGoccGpC
iCIhORceWF9rRwFTnEcRJ3N9iTrPAE1WHveC5Z54ALPaWlEjHlLg8wCaB8d8lCKmxQuylCm0r1Bf2fHJRU
jAYopMVmga8olFmKAl9yn_Z5rI120Q9p5ZIMX28zYM4dTu1cJQUQjosgrEejfiIMYDda8l7Ooko9H6VmGJX
S62KfkRa5l7yE6hhh2bIwA6tpyCJy2LWFRTe4bafqAyoqkarhPA4mGiZyWn4gSqbO8oSIvWYPwea
KGkampa0arcFR4gBD7Ph097WYBkzX9hVjGppLMy4jpXRvjeA5o7TembBR-Jvowq6mNim0
```

Which of the following can be used to get a human-readable error message?

A. Use the command aws sts decode-authorization-message

B. Use the command aws get authorization-message

C. Use the IAM Policy simulator, enter the error message to get the human readable format

D. Use the command aws set authorization-message

Correct Answer: A

Explanation

This is mentioned in the AWS Documentation.

Decodes additional information about the authorization status of a request from an encoded message returned in response to an AWS request.

For example, if a user is not authorized to perform an action that he or she has requested, the request returns a Client.UnauthorizedOperation response (an HTTP 403 response). Some AWS actions additionally return an encoded message that can provide details about this authorization failure.

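Options B and D are incorrect because these commands do not exist in the AWS CLI. Option C is incorrect because the IAM policy simulator tests the effect of policies; it does not decode authorization failure messages. Note that the caller needs permission for the sts:DecodeAuthorizationMessage action.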

For more information on the command, please refer to the below URL: https://docs.aws.amazon.com/cli/latest/reference/sts/decode-authorization-message.html
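Running `aws sts decode-authorization-message --encoded-message <message>` returns JSON with a `DecodedMessage` field, and that field is itself a JSON string. The helper below parses both layers and pulls out a few fields that, as far as we understand the decoded format, describe the decision and the request context. The sample output is hypothetical and heavily trimmed, and missing fields simply come back as `None`.

```python
import json
from typing import Any, Dict

def summarize_decoded(cli_output: str) -> Dict[str, Any]:
    """Extract key fields from `aws sts decode-authorization-message` JSON output."""
    decoded = json.loads(json.loads(cli_output)["DecodedMessage"])  # nested JSON string
    context = decoded.get("context", {})
    return {
        "allowed": decoded.get("allowed"),
        "explicitDeny": decoded.get("explicitDeny"),
        "action": context.get("action"),
        "resource": context.get("resource"),
    }

# Hypothetical, trimmed CLI output for illustration only.
sample = json.dumps({"DecodedMessage": json.dumps({
    "allowed": False,
    "explicitDeny": False,
    "context": {"action": "ec2:RunInstances",
                "resource": "arn:aws:ec2:us-east-1:123456789012:instance/*"},
})})
print(summarize_decoded(sample))
```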

Domain: Deployment

27. You have created an Amazon DynamoDB table with a Global Secondary Index. Which of the following can be used to get the latest results quickly with the least impact on RCU (Read Capacity Unit)?

A. Query with ConsistentRead

B. Scan with ConsistentRead

C. Query with EventualRead

D. Scan with EventualRead

Correct Answer: C

Explanation

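Global secondary indexes support only eventually consistent reads; strongly consistent reads are not supported. A Query reads only the items that match its key condition, whereas a Scan reads every item in the table or index, so a Query with an eventually consistent read returns the latest available results quickly with the least RCU consumption. On a base table, a Query with a strongly consistent read would return the most up-to-date data without scanning the whole table.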

Option A is incorrect as Global Secondary Index does not support Consistent read.

Option B is incorrect as Scan will impact performance as it will scan the whole table.

Option D is incorrect as Scan will impact performance as it will scan the whole table.

For more information for Query with DynamoDB, refer to the following URL: https://docs.aws.amazon.com/amazondynamodb/latest/APIReference/API_Query.html
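The RCU difference between Query and Scan can be estimated with a short calculation. Both operations sum the size of the items they read and round up to the next 4 KB, with eventually consistent reads costing half. A Scan, however, reads every item. The item count, item size, and number of matching items are hypothetical.

```python
import math

def consumed_rcu(total_bytes_read: int, strongly_consistent: bool = False) -> float:
    """Query/Scan cost: total size of items read, rounded up to 4 KB, halved if eventual."""
    units = math.ceil(total_bytes_read / 4096)
    return units if strongly_consistent else units / 2

ITEM_SIZE = 1024      # hypothetical: every item is 1 KB
TABLE_ITEMS = 1000    # hypothetical number of items in the index
MATCHING_ITEMS = 10   # hypothetical items matching the key condition

query_cost = consumed_rcu(MATCHING_ITEMS * ITEM_SIZE)  # Query reads only matching items
scan_cost = consumed_rcu(TABLE_ITEMS * ITEM_SIZE)      # Scan reads every item
print(query_cost, scan_cost)
```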

Domain: Security

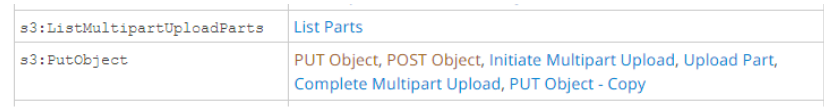

28. All the objects stored in the Amazon S3 bucket need to be encrypted at rest. You are creating a bucket policy for the same.

Which header needs to be included in the bucket policy to enforce server-side encryption with SSE-S3 for a specific bucket?

A. Set “x-amz-server-side-encryption-customer-algorithm” as AES256 request header

B. Set “x-amz-server-side-encryption-bucket” as AES256 request header

C. Set “x-amz-server-side-encryption-context” as AES256 request header

D. Set “x-amz-server-side-encryption” as AES256 request header

Correct Answer: D

Explanation

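To enforce SSE-S3 for all objects uploaded to a bucket, each upload request must include the "x-amz-server-side-encryption" header with the value AES256. A bucket policy can then deny any upload request that doesn't include it. (Since January 2023, Amazon S3 also applies SSE-S3 to new objects by default when no other encryption is specified.)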

Option A is incorrect as "x-amz-server-side-encryption-customer-algorithm" is used with customer-provided keys (SSE-C), not SSE-S3.

Option B is incorrect as "x-amz-server-side-encryption-bucket" is not a valid S3 encryption header.

Option C is incorrect as "x-amz-server-side-encryption-context" is used to pass an encryption context with SSE-KMS, not SSE-S3.

For more information on Amazon S3-managed encryption keys (SSE-S3), refer to the following URL: https://docs.aws.amazon.com/AmazonS3/latest/userguide/UsingServerSideEncryption.html
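Below is a sketch of such a bucket policy, built as a Python dict so it can be printed as JSON. One Deny statement rejects uploads whose `s3:x-amz-server-side-encryption` value is not AES256, and a second rejects uploads where the header is missing. The bucket name is hypothetical. `upload_denied` is only a local approximation of how the two statements treat a PutObject request's headers.

```python
import json
from typing import Dict

BUCKET = "mybucket"  # hypothetical bucket name

POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyIncorrectEncryptionHeader",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:PutObject",
            "Resource": f"arn:aws:s3:::{BUCKET}/*",
            "Condition": {"StringNotEquals": {"s3:x-amz-server-side-encryption": "AES256"}},
        },
        {
            "Sid": "DenyUnencryptedObjectUploads",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:PutObject",
            "Resource": f"arn:aws:s3:::{BUCKET}/*",
            "Condition": {"Null": {"s3:x-amz-server-side-encryption": "true"}},
        },
    ],
}

def upload_denied(headers: Dict[str, str]) -> bool:
    """Approximate the two Deny statements: header missing, or not AES256."""
    value = headers.get("x-amz-server-side-encryption")
    return value is None or value != "AES256"

print(json.dumps(POLICY, indent=2))
```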

Domain: Refactoring

29. You are using Amazon DynamoDB to store all product details for an online furniture store. Which of the following expressions can be used to return only the Colour and Size attributes of the items during query operations?

A. Update Expressions

B. Condition Expressions

C. Projection Expressions

D. Expression Attribute Names

Correct Answer: C

Explanation

A projection expression identifies the attributes to retrieve from an item, so that a GetItem, Query, or Scan operation returns only those attributes instead of all of them. In this case, a projection expression listing Colour and Size returns just those two attributes for each matching item.
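A sketch of the low-level Query parameters with a projection expression, built as a plain dict. The table name "FurnitureProducts" and partition key "Category" are hypothetical. Because "Size" is a DynamoDB reserved word, the sketch refers to it through an expression attribute name placeholder, which is where option D's feature actually helps. Note that a projection expression limits the data returned, not the read capacity consumed.

```python
def build_product_query(table_name: str, category: str) -> dict:
    """Build low-level DynamoDB Query parameters that return only Colour and Size."""
    return {
        "TableName": table_name,
        # Hypothetical partition key "Category"; adjust to the real table schema.
        "KeyConditionExpression": "#cat = :cat",
        # "Size" is a reserved word, so every attribute is aliased with a placeholder.
        "ExpressionAttributeNames": {"#cat": "Category", "#c": "Colour", "#s": "Size"},
        "ExpressionAttributeValues": {":cat": {"S": category}},
        # Only these two attributes are returned for each matching item.
        "ProjectionExpression": "#c, #s",
    }


params = build_product_query("FurnitureProducts", "Chairs")
print(params["ProjectionExpression"])  # #c, #s
# With boto3, these could be sent as: boto3.client("dynamodb").query(**params)
```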

Option A is incorrect because an update expression specifies how UpdateItem modifies an item’s attributes. In this case, there is no need to modify any attributes.

Option B is incorrect because condition expressions specify a condition that must be met for a write operation to modify an item’s attributes.

Option D is incorrect because expression attribute names are placeholders used in an expression in place of an actual attribute name (for example, when the name is a reserved word); on their own, they do not select which attributes are returned.

For more information on Projection expression for DynamoDB, refer to the following URL: https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/Expressions.ProjectionExpressions.html

Domain: Deployment

30. Which of the following is true of strongly consistent read requests sent from an application to DynamoDB through a DAX cluster?

A. All requests are forwarded to DynamoDB & results are cached

B. All requests are forwarded to DynamoDB & results are stored in Item Cache before passing to application

C. All requests are forwarded to DynamoDB & results are stored in Query Cache before passing to application

D. All requests are forwarded to DynamoDB & results are not cached

Correct Answer: D

Explanation

For strongly consistent read requests from an application, the DAX cluster passes all requests through to DynamoDB and does not cache the results.

Option A is incorrect because it is only partly correct: DAX passes strongly consistent read requests through to DynamoDB, but it does not cache the results.

Option B is incorrect because DAX stores data in the item cache only for eventually consistent GetItem and BatchGetItem requests.

Option C is incorrect because DAX stores data in the query cache only for eventually consistent Query and Scan requests.
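To make the pass-through behavior concrete, here is a toy model of the DAX item cache for GetItem-style reads. It is a hypothetical illustration, not the DAX client: strongly consistent reads always go to the backend and are never cached, while eventually consistent reads populate the cache. TTLs and eviction are deliberately ignored.

```python
from typing import Callable, Dict, Optional


class ToyDaxItemCache:
    """Toy model of DAX item-cache behavior (hypothetical; not the real DAX client)."""

    def __init__(self, backend: Callable[[str], Optional[dict]]):
        self._backend = backend  # stands in for DynamoDB
        self._item_cache: Dict[str, Optional[dict]] = {}
        self.backend_calls = 0

    def get_item(self, key: str, consistent_read: bool = False) -> Optional[dict]:
        if consistent_read:
            # Strongly consistent reads are passed through and never cached.
            self.backend_calls += 1
            return self._backend(key)
        if key not in self._item_cache:
            # Eventually consistent cache miss: read from the backend, then cache.
            self.backend_calls += 1
            self._item_cache[key] = self._backend(key)
        return self._item_cache[key]

    def cached_keys(self) -> list:
        return sorted(self._item_cache)


table = {"p1": {"name": "chair"}}
dax = ToyDaxItemCache(table.get)
dax.get_item("p1", consistent_read=True)
print(dax.cached_keys())  # []
```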

For more information on DAX for DynamoDB, refer to the following URL: https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/DAX.concepts.html#DAX.concepts.item-cache

Domain: Development with AWS Services

31. You are a developer for your company, working on AWS CloudFormation templates for different environments. You want the creation of each environment to depend on values passed to the template at runtime. How can you achieve this?

A. Specify an Outputs section

B. Specify a Parameters section

C. Specify a Metadata section

D. Specify a Transform section

Correct Answer: B

Explanation

You can use the Parameters section to take in values at runtime. You can then use the values of those parameters to define how the template gets executed.

The AWS Documentation also mentions the following.

Parameters (optional)

“Values to pass to your template at runtime (when you create or update a stack). You can refer to parameters from the Resources and Outputs sections of the template”.
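A minimal sketch of a template whose behavior depends on a runtime parameter, written as a Python dict and printed as JSON. The parameter name EnvironmentType, its allowed values, and the bucket resource are hypothetical; the condition enables bucket versioning only when the stack is created with EnvironmentType=prod.

```python
import json


def environment_template() -> dict:
    """CloudFormation template (as a Python dict) driven by a runtime parameter."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Parameters": {
            "EnvironmentType": {
                "Type": "String",
                "AllowedValues": ["dev", "test", "prod"],
                "Default": "dev",
                "Description": "Environment to create; supplied at stack create/update time.",
            }
        },
        "Conditions": {
            # True only when the caller passes EnvironmentType=prod.
            "IsProd": {"Fn::Equals": [{"Ref": "EnvironmentType"}, "prod"]}
        },
        "Resources": {
            "AppBucket": {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    # Enable versioning only in prod; omit the property elsewhere.
                    "VersioningConfiguration": {
                        "Fn::If": ["IsProd", {"Status": "Enabled"}, {"Ref": "AWS::NoValue"}]
                    },
                    "Tags": [{"Key": "Environment", "Value": {"Ref": "EnvironmentType"}}],
                },
            }
        },
        "Outputs": {"BucketName": {"Value": {"Ref": "AppBucket"}}},
    }


print(json.dumps(environment_template(), indent=2))
```

Saved as template.json, the value would be supplied at runtime with something like `aws cloudformation create-stack --stack-name app-prod --template-body file://template.json --parameters ParameterKey=EnvironmentType,ParameterValue=prod`.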

Option A is invalid since the Outputs section describes the values that are returned whenever you view your stack’s properties.

Option C is invalid since the Metadata section specifies objects that provide additional information about the template.

Option D is invalid since the Transform section specifies macros that CloudFormation uses to process the template, such as the AWS::Serverless transform for the AWS SAM model.

For more information on the anatomy of CloudFormation templates, please refer to the below URL: https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/template-anatomy.html

Domain: Deployment

32. As an API developer, you have just configured an API with Amazon API Gateway. While testing the API, you get the following response whenever a request is made to an undefined API resource.

{ "message": "Missing Authentication Token" }

You want to customize the error response and make it more user-readable. How can you achieve this?

A. By setting up the appropriate method in API Gateway

B. By setting up the appropriate method integration request in API Gateway

C. By setting up the appropriate gateway response in API Gateway

D. By setting up the appropriate gateway request in API Gateway

Correct Answer: C

Explanation

This is mentioned in the AWS Documentation.

Set up Gateway Responses to Customize Error Responses

If API Gateway fails to process an incoming request, it returns the client an error response without forwarding the request to the integration backend. By default, the error response contains a short descriptive error message. For example, if you attempt to call an operation on an undefined API resource, you receive an error response with the { "message": "Missing Authentication Token" } message. If you are new to API Gateway, you may find it difficult to understand what actually went wrong.

For some of the error responses, API Gateway allows customization by API developers to return the responses in different formats. For the Missing Authentication Token example, you can add a hint to the original response payload with the possible cause, as in this example: {"message":"Missing Authentication Token", "hint":"The HTTP method or resources may not be supported."}.
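A sketch of how that hint might be configured with the OpenAPI extension for gateway responses, plus a toy local renderer. The 403 status code mirrors the default for MISSING_AUTHENTICATION_TOKEN. The `render_locally` helper is hypothetical: it only substitutes `$context.error.messageString` (the quoted error message) to show what a client would receive, and is not API Gateway's template engine.

```python
import json

HINT = "The HTTP method or resources may not be supported."

# OpenAPI extension customizing the MISSING_AUTHENTICATION_TOKEN gateway response.
# $context.error.messageString is the quoted error message supplied by API Gateway.
gateway_responses = {
    "x-amazon-apigateway-gateway-responses": {
        "MISSING_AUTHENTICATION_TOKEN": {
            "statusCode": 403,
            "responseTemplates": {
                "application/json": (
                    '{"message": $context.error.messageString, "hint": "' + HINT + '"}'
                )
            },
        }
    }
}


def render_locally(template: str, message: str) -> dict:
    """Hypothetical stand-in for API Gateway rendering: substitutes one variable only."""
    return json.loads(template.replace("$context.error.messageString", json.dumps(message)))


template = gateway_responses["x-amazon-apigateway-gateway-responses"][
    "MISSING_AUTHENTICATION_TOKEN"]["responseTemplates"]["application/json"]
print(render_locally(template, "Missing Authentication Token"))
```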

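Gateway responses are the documented mechanism for customizing these errors. A request to an undefined resource never reaches a method or its integration request, so options A and B cannot change this response, and option D describes a feature that API Gateway does not offer.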

For more information on the gateway response, please refer to the below URL: https://docs.aws.amazon.com/apigateway/latest/developerguide/customize-gateway-responses.html

Domain: Deployment

33. Your team has currently developed an application using Docker containers. As the development lead, you now need to host this application in AWS. You also need to ensure that the AWS service has orchestration services built-in. Which of the following can be used for this purpose?

A. Consider building a Kubernetes cluster on EC2 Instances

B. Consider building a Kubernetes cluster on your on-premises infrastructure

C. Consider using the Elastic Container Service

D. Consider using the Simple Storage service to store your docker containers

Correct Answer: C

Explanation

The AWS Documentation mentions the following.

Amazon Elastic Container Service (Amazon ECS) is a highly scalable, fast, container management service that makes it easy to run, stop, and manage Docker containers on a cluster. You can host your cluster on a serverless infrastructure that Amazon ECS manages by launching your services or tasks using the Fargate launch type. You can host your tasks on a cluster of Amazon Elastic Compute Cloud (Amazon EC2) instances that you manage by using the EC2 launch type for more control.
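A minimal sketch of an ECS task definition for a single Docker container on the Fargate launch type, built as a dict. The family name and image URI are hypothetical. If the image lived in a private Amazon ECR repository, the task definition would also need an executionRoleArn.

```python
import json


def fargate_task_definition(family: str, image: str, port: int = 80) -> dict:
    """Minimal ECS task definition for one container on Fargate."""
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",  # Fargate tasks must use the awsvpc network mode
        "cpu": "256",             # task-level CPU units (0.25 vCPU)
        "memory": "512",          # task-level memory in MiB
        "containerDefinitions": [
            {
                "name": family,
                "image": image,
                "essential": True,
                "portMappings": [{"containerPort": port, "protocol": "tcp"}],
            }
        ],
    }


# Hypothetical family name and public image URI.
print(json.dumps(fargate_task_definition("web-app", "public.ecr.aws/example/web-app:1.0.0"), indent=2))
```

Saved as taskdef.json, it could be registered with `aws ecs register-task-definition --cli-input-json file://taskdef.json`, after which ECS schedules and manages the containers.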

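Options A and B are invalid since a self-managed Kubernetes cluster, whether on EC2 instances or on-premises servers, involves additional installation and maintenance work, and option B does not host the application in AWS at all.

Option D is incorrect since Amazon S3 is object storage; it can store files but cannot run or orchestrate containers.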

For more information on the Elastic Container service, please refer to the below URL: https://docs.aws.amazon.com/AmazonECS/latest/developerguide/Welcome.html

Domain: Monitoring and Troubleshooting

34. You are developing an application that works with a DynamoDB table. During development, you want to know how much read capacity the application’s queries consume. How can this be achieved?

A. Queries sent by the program return the consumed capacity as part of the result by default

B. Set ReturnConsumedCapacity in the query request to TRUE

C. Set ReturnConsumedCapacity in the query request to TOTAL

D. Use the Scan operation instead of the query operation

Correct Answer: C

Explanation

The AWS Documentation mentions the following.

By default, a Query operation does not return any data on how much read capacity it consumes. However, you can specify the ReturnConsumedCapacity parameter in a Query request to obtain this information. The following are the valid settings for ReturnConsumedCapacity.

- NONE—no consumed capacity data is returned. (This is the default).

- TOTAL—the response includes the aggregate number of read capacity units consumed.

- INDEXES—the response shows the aggregate number of read capacity units consumed, together with the consumed capacity for each table and index that was accessed.
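A sketch that builds Query parameters requesting capacity data and reads the aggregate units from a response dict. The table and key names are hypothetical; the validation mirrors the three valid settings listed above, so a value such as TRUE is rejected.

```python
from typing import Optional

VALID_SETTINGS = {"NONE", "TOTAL", "INDEXES"}


def query_with_capacity(table_name: str, key_name: str, key_value: str,
                        return_consumed_capacity: str = "TOTAL") -> dict:
    """Build low-level Query parameters that ask DynamoDB to report consumed capacity."""
    if return_consumed_capacity not in VALID_SETTINGS:
        # e.g. "TRUE" (option B) is rejected: only NONE, TOTAL, INDEXES are valid.
        raise ValueError(f"invalid ReturnConsumedCapacity: {return_consumed_capacity!r}")
    return {
        "TableName": table_name,
        "KeyConditionExpression": "#k = :v",
        "ExpressionAttributeNames": {"#k": key_name},
        "ExpressionAttributeValues": {":v": {"S": key_value}},
        "ReturnConsumedCapacity": return_consumed_capacity,
    }


def consumed_units(response: dict) -> Optional[float]:
    """Read the aggregate capacity units from a Query response, if present."""
    return response.get("ConsumedCapacity", {}).get("CapacityUnits")
```

With boto3, the parameters could be passed as `boto3.client("dynamodb").query(**params)` and the result handed to `consumed_units`.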

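Option A is incorrect because the default setting is NONE, option B is incorrect because TRUE is not a valid value, and option D is incorrect because switching to Scan does not by itself return capacity data and reads the entire table.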

For more information on the Query operation, please refer to the below URL: https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/Query.html

Domain: Development with AWS Services

35. A new Lambda function has been developed using AWS CloudFormation templates. Which of the following commands can be used to test the new function by shifting 5% of traffic to the new version?

A. aws lambda create-alias --name alias-name --function-name function-name --routing-config AdditionalVersionWeights={"2"=0.05}

B. aws lambda create-alias --name alias-name --function-name function-name --routing-config AdditionalVersionWeights={"2"=5}

C. aws lambda create-alias --name alias-name --function-name function-name --routing-config AdditionalVersionWeights={"2"=0.5}

D. aws lambda create-alias --name alias-name --function-name function-name --routing-config AdditionalVersionWeights={"2"=5%}

Correct Answer: A

Explanation

The routing-config parameter of a Lambda alias lets the alias point to two different versions of a function and determines what share of incoming traffic each version receives. The weight for the additional version is a fraction between 0.0 and 1.0, so 5% is expressed as 0.05. In this case, the new version receives 5% of the traffic, while the original version handles the remaining 95%.
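A sketch that converts a percentage into the fractional weight and builds the boto3 `create_alias` parameters. The function name, alias name, and version numbers are hypothetical. The stable version goes in FunctionVersion; from the CLI, `create-alias` likewise needs `--function-version` for the version that receives the remaining traffic.

```python
def weighted_alias_request(function_name: str, alias_name: str,
                           stable_version: str, new_version: str,
                           percent_to_new: float) -> dict:
    """Build create_alias parameters sending percent_to_new % of traffic to new_version."""
    weight = percent_to_new / 100  # Lambda expects a fraction, e.g. 5% -> 0.05
    if not 0.0 <= weight <= 1.0:
        raise ValueError("percent_to_new must be between 0 and 100")
    return {
        "FunctionName": function_name,
        "Name": alias_name,
        "FunctionVersion": stable_version,  # receives the remaining traffic
        "RoutingConfig": {"AdditionalVersionWeights": {new_version: weight}},
    }


params = weighted_alias_request("my-function", "live", "1", "2", 5)
print(params["RoutingConfig"])
# With boto3: boto3.client("lambda").create_alias(**params)
```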

Option B is incorrect because a weight of 5 is outside the valid range of 0.0 to 1.0; shifting 5% of traffic requires a weight of 0.05.

Option C is incorrect because a weight of 0.5 would shift 50% of traffic, not 5%.

Option D is incorrect because 5% is not a numeric weight; shifting 5% of traffic requires a weight of 0.05.

For more information on shifting traffic between Lambda function versions with aliases, refer to the following URL: https://docs.aws.amazon.com/lambda/latest/dg/lambda-traffic-shifting-using-aliases.html

Domain: Deployment

36. You are part of a development team that is in charge of creating AWS CloudFormation templates. Stacks based on these templates need to be deployed across multiple accounts with the least amount of effort. Which of the following would help accomplish this?

A. Creating CloudFormation ChangeSets

B. Creating CloudFormation StackSets

C. Make use of nested stacks

D. Use CloudFormation artifacts

Correct Answer: B

Explanation

The AWS Documentation mentions the following.

AWS CloudFormation StackSets extends the functionality of stacks by enabling you to create, update, or delete stacks across multiple accounts and regions with a single operation. Using an administrator account, you define and manage an AWS CloudFormation template and use the template as the basis for provisioning stacks into selected target accounts across specified regions.
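A small sketch of what "a single operation across multiple accounts and regions" means in practice: one CreateStackInstances request fans out to every account and Region pair. The stack set name, account IDs, and Regions are hypothetical, and `plan_stack_instances` is a hypothetical helper that only enumerates the targets locally.

```python
from itertools import product


def plan_stack_instances(accounts: list, regions: list) -> list:
    """List the (account, region) stack instances a single StackSets call would target."""
    return [(account, region) for account, region in product(accounts, regions)]


def create_stack_instances_params(stack_set_name: str, accounts: list, regions: list) -> dict:
    """Parameters for CloudFormation's CreateStackInstances operation (boto3 style)."""
    return {"StackSetName": stack_set_name, "Accounts": accounts, "Regions": regions}


accounts = ["111111111111", "222222222222"]  # hypothetical target account IDs
regions = ["us-east-1", "eu-west-1"]
print(len(plan_stack_instances(accounts, regions)))  # 4
# With boto3: boto3.client("cloudformation").create_stack_instances(
#     **create_stack_instances_params("baseline", accounts, regions))
```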

Option A is incorrect since change sets let you preview how proposed changes will affect the running resources in a single stack before you apply them.

Option C is incorrect since nested stacks are stacks created as part of other stacks; they do not deploy stacks across accounts.

Option D is incorrect since artifacts are used in conjunction with AWS CodePipeline.

For more information on StackSets, please refer to the below URL: https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/what-is-cfnstacksets.html

Domain: Monitoring and Troubleshooting

37. Your team has started configuring AWS CodeBuild to run builds in AWS. The source code is stored in an S3 bucket. When a build runs, you get the following error:

Error: “The bucket you are attempting to access must be addressed using the specified endpoint…”

Which of the following could be the cause of the error?

A. The bucket is not in the same Region as the CodeBuild project.

B. Code should ideally be stored on EBS Volumes.

C. Versioning is enabled for the bucket.

D. MFA is enabled on the bucket.

Correct Answer: A

Explanation

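The CodeBuild troubleshooting guide lists this error: it occurs when the S3 bucket that holds the source code is in a different AWS Region from the CodeBuild build project. The fix is to point the build project at a bucket in the same Region.

Options B, C, and D are incorrect because the type of storage, bucket versioning, and MFA settings do not cause a Region endpoint mismatch.

For more information on troubleshooting CodeBuild, please refer to the below URL: https://docs.aws.amazon.com/codebuild/latest/userguide/troubleshooting.html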

Domain: Security

38. Your team is deploying a set of applications onto AWS. These applications work with multiple databases. You need to ensure that the database passwords are stored securely. Which of the following is the ideal way to store the database passwords?

A. Store them in separate Lambda functions which can be invoked via HTTPS

B. Store them as secrets in AWS Secrets Manager

C. Store them in separate DynamoDB tables

D. Store them in separate S3 buckets

Correct Answer: B

Explanation

This is mentioned in the AWS Documentation.

AWS Secrets Manager is an AWS service that makes it easier for you to manage secrets. Secrets can be database credentials, passwords, third-party API keys, and even arbitrary text. You can store and control access to these secrets centrally by using the Secrets Manager console, the Secrets Manager command-line interface (CLI), or the Secrets Manager API and SDKs.
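A minimal sketch of reading database credentials from Secrets Manager at runtime. It assumes the secret is stored as a JSON string with "username" and "password" keys (the layout RDS-style database secrets use), and it takes the client as an argument so it can be exercised without AWS; in production, `client` would be `boto3.client("secretsmanager")`. The secret name is hypothetical.

```python
import json
from typing import Any, Dict, Tuple


def get_db_credentials(client: Any, secret_id: str) -> Tuple[str, str]:
    """Fetch a JSON secret and return (username, password).

    `client` is expected to behave like boto3.client("secretsmanager").
    """
    response: Dict[str, Any] = client.get_secret_value(SecretId=secret_id)
    # Assumes the secret value is a JSON document with username/password keys.
    secret = json.loads(response["SecretString"])
    return secret["username"], secret["password"]
```

Usage might look like `user, password = get_db_credentials(boto3.client("secretsmanager"), "prod/orders-db")`, so no password ever appears in code or configuration files.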

Option A is incorrect because the Lambda function is a compute service and not used for storing credentials.

Option C is incorrect because DynamoDB is a NoSQL database service and not suitable for storing credentials.

Option D is incorrect because S3 buckets are designed to store objects, not credentials; keeping passwords in objects risks accidental exposure and offers none of the built-in secret rotation that Secrets Manager provides.

For more information on the Secrets Manager, please refer to the below URL: https://docs.aws.amazon.com/secretsmanager/latest/userguide/intro.html

Domain: Deployment

39. The development team at New Epic Games is working on a new version of its top-rated car game. All of its services run on AWS, so the management team has decided to use AWS CodeStar along with GitHub and AWS CodeDeploy to launch the new version.

While testing, the application keeps breaking, even though the dependencies in the package.json file of the AWS CodeStar project point to the latest versions. Which of the following solutions is required to resolve the problem?

A. Change the repository to AWS CodeCommit since AWS CodeStar does not support GitHub.

B. Set the dependencies to point to a specific version to avoid application breaks.

C. Change the deployment tool to Jenkins since it is well-supported by AWS CodeStar.

D. The package.json file needs to be updated with the relevant read and write permissions through AWS IAM.

Correct Answer: B

Explanation

As a best practice, AWS recommends setting your dependencies to point to a specific version. This is known as pinning the version. In fact, AWS does not recommend that you set the version to the latest because that can introduce changes that might break your application without notice.
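A sketch of a hypothetical pre-deployment check that flags unpinned dependencies in a package.json file. For simplicity it treats only plain MAJOR.MINOR.PATCH strings as pinned, so ranges such as "^", "~", "*", and "latest" are reported (pre-release tags would be flagged too).

```python
import json
import re

# Exact versions such as "4.18.2"; ranges like "^4.18.2", "~4.18.2", "*" or "latest" float.
EXACT_VERSION = re.compile(r"^\d+\.\d+\.\d+$")


def unpinned_dependencies(package_json_text: str) -> dict:
    """Return dependencies whose version spec is not an exact version."""
    manifest = json.loads(package_json_text)
    deps = {**manifest.get("dependencies", {}), **manifest.get("devDependencies", {})}
    return {name: spec for name, spec in deps.items() if not EXACT_VERSION.match(spec)}


sample = '{"dependencies": {"express": "latest", "lodash": "4.17.21", "aws-sdk": "^2.1000.0"}}'
print(unpinned_dependencies(sample))
```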

https://docs.aws.amazon.com/codestar/latest/userguide/best-practices.html

Option A is incorrect because changing the repository will not fix the application breaks; moreover, AWS CodeStar supports GitHub as well as AWS CodeCommit.

Option C is incorrect because changing the deployment tool will not fix the application breaks. The code is being deployed successfully, so the problem lies elsewhere, namely in how the dependency versions are specified.

Option D is incorrect because the issue has nothing to do with permissions. The application is deployed successfully but breaks at runtime.

Domain: Deployment

40. Mary is a Docker expert and has deployed multiple projects using AWS Cloud9 as the preferred IDE along with AWS CodeStar to streamline the CI/CD pipelines.

She is currently struggling to open a new environment for a new project that involves Docker workloads. She is unable to connect to the EC2 environment in the project VPC, which has been set up with the IPv4 CIDR block 172.17.0.0/16.

Which of the following solutions can be used to resolve the problem?

A. Enable enhanced networking for the EC2 instance used by AWS Cloud9

B. Configure a new VPC for the instance backing the EC2 environment, using 192.168.0.0/16 as the CIDR block.

C. Upgrade the EC2 instance backing the environment from t2.micro to t3.large family type and then try reconnecting.

D. Change the IP address range of the existing VPC to 172.17.0.0/18.

Correct Answer: B

Explanation

Docker uses a link layer device called a bridge network that enables containers that are connected to the same bridge network to communicate.

AWS Cloud9 creates containers that use a default bridge for container communication. The default bridge typically uses the 172.17.0.0/16 subnet for container networking.

If the VPC subnet for your environment’s instance uses the same address range that’s already used by Docker, an IP address conflict might occur. So, when AWS Cloud9 tries to connect to its instance, that connection is routed by the gateway route table to the Docker bridge and this prevents AWS Cloud9 from connecting to the EC2 instance that backs the development environment.
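The conflict can be checked with Python's standard `ipaddress` module. This sketch compares candidate VPC CIDR blocks against Docker's default bridge subnet (172.17.0.0/16, as stated above); it also shows why option D would not help, since 172.17.0.0/18 sits inside the bridge range.

```python
import ipaddress

DOCKER_DEFAULT_BRIDGE = ipaddress.ip_network("172.17.0.0/16")


def conflicts_with_docker_bridge(vpc_cidr: str) -> bool:
    """True if the VPC CIDR block overlaps Docker's default bridge subnet."""
    return ipaddress.ip_network(vpc_cidr).overlaps(DOCKER_DEFAULT_BRIDGE)


for cidr in ("172.17.0.0/16", "172.17.0.0/18", "192.168.0.0/16"):
    print(cidr, conflicts_with_docker_bridge(cidr))
```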

Hence, moving the environment to a VPC whose CIDR block does not overlap the Docker bridge range, such as 192.168.0.0/16, resolves the issue.

Option A is incorrect because enhanced networking provides higher bandwidth and higher packet-per-second performance with lower latencies. It does not resolve an IP address conflict.

Option C is incorrect because upgrading the instance type will not help. This is a connectivity issue, not a performance issue.

Option D is incorrect because you can’t change the primary CIDR block of an existing VPC, and 172.17.0.0/18 would still overlap Docker’s 172.17.0.0/16 bridge range.

Domain: Deployment

41. A leading online learning platform provider with 500+ courses distributed across 300+ microservices is experiencing high CPU usage during the JSON deserialization used for communication between applications, along with bottlenecks that cause server errors due to timeouts.

Manual code reviews are turning out to be time-consuming and too laborious.

Select the right option from the list below to resolve the application communication latencies, high CPU usage, and performance degradation.

A. Migrate the entire workload to Amazon Elastic Kubernetes Service (Amazon EKS) for increased performance, automatic scaling, and application availability.

B. Move the code to a test environment and integrate Amazon CodeGuru Profiler to evaluate the performance of the JSON code and native object serialization.

C. Replace the Application Load Balancer with a Network Load Balancer and add more listeners.

D. Upgrade the backend EC2 Instance family to the latest Amazon EC2 M5 type of instances providing 25 Gbps of network bandwidth using enhanced networking.

Correct Answer: B

Explanation

Because the same issues appear across many applications, the code itself needs to be examined. Amazon CodeGuru Profiler provides application insights, helps troubleshoot performance issues in the code, such as expensive deserialization, and is easy to maintain and scale.
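CodeGuru Profiler is a managed service, so as a local analogy only, this sketch uses Python's built-in `cProfile` to show the kind of evidence a profiler gives: which functions consume CPU time during repeated JSON deserialization. The payload shape is hypothetical.

```python
import cProfile
import io
import json
import pstats


def profile_json_deserialization(payload: str, iterations: int = 1000) -> str:
    """Profile repeated json.loads calls and return the top entries by cumulative time."""
    profiler = cProfile.Profile()
    profiler.enable()
    for _ in range(iterations):
        json.loads(payload)
    profiler.disable()
    report = io.StringIO()
    pstats.Stats(profiler, stream=report).sort_stats("cumulative").print_stats(5)
    return report.getvalue()


# Hypothetical message resembling a course catalog exchanged between services.
payload = json.dumps({"courses": [{"id": i, "title": f"Course {i}"} for i in range(500)]})
print(profile_json_deserialization(payload, iterations=200))
```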

Reference:

https://aws.amazon.com/blogs/devops/coursera-codeguru-profiler/

Option A is incorrect because the problem lies in the application communication code, not in the hosting platform; the question gives no details that point to the existing infrastructure.

Option C is incorrect because a Network Load Balancer does nothing to reduce the cost of JSON deserialization.

Option D is incorrect because the bottleneck is CPU time spent in the code, not the underlying infrastructure or network bandwidth.

Domain: Deployment

42. The team at Wonder Web Studios is working on different versions of a new-age serverless photo app called newagephoto.com, which is based on the modern Next.js framework. The team consists of frontend, backend, and mobile developers who use AWS Amplify Studio to build the application quickly and easily.

One of the users gets the following error when deleting the old version of the app in the console:

User: arn:aws:iam::123456789012:user/User9 is not authorized to perform: amplifybackend:RemoveAllBackends on resource: ver1photo-amplify-app

Please help this user in rectifying the problem by selecting the right option from the list below.

A. The policy for User9 needs to be updated to allow him to access the ver1photo-amplify-app resource using the amplifybackend:RemoveAllBackends action.

B. Add User9 to the administrative group so the user has full access to the project and set of permissions.

C. Use the Amplify Command Line Interface (CLI) to delete the resource and reinitialize the project.

D. Move the app to a new environment, integrate it with Amazon Cognito, and set up new authentication-level permissions to delete the app.

Correct Answer: A

Explanation

Deleting an app or a backend in AWS Amplify Studio requires amplify & amplifybackend permissions. If the user’s IAM policy provides only amplify permissions, he/she will get a permission error while trying to delete the app.
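A sketch of a least-privilege identity policy granting both kinds of permission, built as a dict. The resource ARN is deliberately a hypothetical placeholder; substitute the ARN(s) of the app being deleted rather than widening the policy to every resource.

```python
import json
from typing import List


def amplify_delete_policy(resource_arns: List[str]) -> dict:
    """Identity policy letting a user delete an Amplify app and its backends."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Both the amplify and amplifybackend permissions are needed.
                "Action": ["amplify:DeleteApp", "amplifybackend:RemoveAllBackends"],
                "Resource": resource_arns,
            }
        ],
    }


# Hypothetical placeholder: replace with the ARN(s) of the app being deleted.
print(json.dumps(amplify_delete_policy(["REPLACE_WITH_APP_ARN"]), indent=2))
```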

Reference:

https://docs.aws.amazon.com/amplify/latest/userguide/security_iam_troubleshoot.html

Option B is incorrect because adding the user to the administrative group grants unnecessary privileges and violates the principle of least privilege.

Option C is incorrect because this is a permissions issue and the policy needs to be updated. There is no need to reinitialize the project.

Option D is incorrect because Amazon Cognito handles authentication and will not resolve a permissions issue. Only the user’s IAM policy needs to be updated.

Domain: Troubleshooting and Optimization

43. A leading automobile dealer that is expanding globally is struggling to keep the provisioning and maintenance of its environments in a consistent state.

Its current architecture rolls out Kubernetes jobs on Amazon EKS using Spot Instances to create new microservices for each new environment a user requests. However, Spot Instance interruptions terminate the underlying nodes, killing the jobs and disrupting the entire environment-creation chain for different business units.

From the solutions proposed below, select the options that resolve the issue. (Select TWO)

A. Replace Spot Instances with Reserved Instances, which will ensure that the underlying infrastructure does not get terminated.

B. Integrate Amazon API Gateway to trigger Lambda functions that spin up new instances.

C. Integrate Amazon SNS with Amazon SQS and a dead-letter queue, which will ensure that job requests are managed, stored, and processed seamlessly. The dead-letter queue further improves overall consistency.

D. Integrate CloudWatch monitoring with Lambda to spin up new instances when nodes get terminated.

Correct Answer: B and C

Explanation

Services like Amazon SQS and Amazon SNS isolate and decouple the components of the application. Even if some messages are not consumed successfully, the dead-letter queue handles the lifecycle of these unconsumed messages.
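A sketch of the two pieces involved: the RedrivePolicy queue attribute that attaches a dead-letter queue, and a toy in-memory simulation (hypothetical, not SQS itself) showing that a job which keeps failing ends up in the dead-letter queue for inspection instead of being lost. The DLQ ARN and job names are hypothetical.

```python
import json
from collections import deque


def redrive_attributes(dlq_arn: str, max_receive_count: int = 3) -> dict:
    """SQS queue attributes that move a message to the DLQ after repeated failed receives."""
    return {"RedrivePolicy": json.dumps(
        {"deadLetterTargetArn": dlq_arn, "maxReceiveCount": str(max_receive_count)})}


def simulate(jobs, handler, max_receive_count: int = 3):
    """Toy simulation: a job failing max_receive_count times is moved to the DLQ."""
    queue = deque((job, 0) for job in jobs)
    done, dead_letter = [], []
    while queue:
        job, receives = queue.popleft()
        receives += 1
        if handler(job):
            done.append(job)
        elif receives >= max_receive_count:
            dead_letter.append(job)        # kept for inspection instead of being lost
        else:
            queue.append((job, receives))  # becomes visible again for a retry
    return done, dead_letter


# "env-b" always fails, e.g. because its worker node keeps being interrupted.
print(simulate(["env-a", "env-b"], lambda job: job != "env-b"))
```

With boto3, the attributes could be applied via `boto3.client("sqs").create_queue(QueueName=..., Attributes=redrive_attributes(dlq_arn))`.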


Option A is incorrect since Reserved Instances only change pricing; they do not bring any advantage to the job workflow.

Option D is incorrect since CloudWatch and Lambda only act after the nodes have failed. They cannot prevent the failures or bring consistency to the flow.

Domain: Development with AWS Services

44. The engineers at EpicONE Games are working out the cache memory and node sizing for their Amazon ElastiCache cluster. They need high throughput, low latency, and support for about 35 to 45 million users per session for their upcoming war game. They have been advised to spread the memory over more nodes with smaller capacities rather than use fewer high-capacity nodes. They are looking at 35 GB of cache memory for the cluster.

One of the engineers has proposed the following options. Work with the team and help them choose the right sizing by selecting the correct option.

A. Use 11 cache.t2.medium nodes, each with 3.22 GiB of memory and 2 cores, giving 35.42 GiB in total and meeting the requirement.

B. Start with one cache.m5.large node. Monitor memory usage, CPU utilization, and cache hit rate with the ElastiCache metrics published to CloudWatch.

These metrics will enable monitoring of your clusters in the form of CPU usage and cache gets and cache misses. These metrics will be measured and published for each Cache node in 60-second intervals.

Based on the results, node & memory sizing can then be worked upon.

C. The node size does not impact the performance and fault tolerance at all. You could start with the t2 type of instances keeping the cost to a minimum and then add the nodes as the requirement increases.

D. The number of nodes in the cluster is not a key factor when it comes to cluster availability.

Correct Answer: A

Explanation

An Amazon ElastiCache cluster contains one or more nodes, with the cluster’s data partitioned across them. Because of this, the cluster’s memory needs and each node’s memory are related.

The total memory capacity of the cluster is calculated by multiplying the number of nodes in the cluster by the RAM capacity of the node after deducting the system overhead.
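The arithmetic behind option A, as a small sketch. It assumes the 3.22 GiB per cache.t2.medium node from option A is the usable figure, i.e. overhead has already been deducted; if a larger share were reserved per node, the count would grow accordingly.

```python
import math


def nodes_needed(required_gib: float, node_memory_gib: float) -> int:
    """Smallest node count whose combined memory covers the requirement."""
    return math.ceil(required_gib / node_memory_gib)


node_memory = 3.22  # cache.t2.medium, GiB per node (from option A)
count = nodes_needed(35, node_memory)
print(count, round(count * node_memory, 2))  # 11 35.42
```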


Option B is incorrect because this method is used when you are not sure how much capacity is needed: you estimate, run trials, and work out the configuration from the results.

Option C is incorrect because node size does affect your cluster’s cost, performance, and fault tolerance.

Option D is incorrect because the number of nodes is an important factor: to reduce the impact of a node failure on availability, spread the memory and compute capacity over more nodes with smaller capacity rather than fewer high-capacity nodes.

Summary

So, here we’ve covered 35+ free AWS Developer Associate exam questions. This set of AWS CDA (DVA-C02) practice exam questions will prove to be a valuable resource as you prepare for the real certification exam. With the aim of helping you pass the AWS Developer Associate exam (DVA-C02) on the first attempt, we’ve prepared this exclusive set of AWS Developer Associate exam questions. Many low-value DVA-C02 dumps are available online; avoid them and keep practicing with reliable resources.

Along with this, we provide a free AWS Developer Associate test with free practice questions. You can also try our full-length AWS Developer Associate practice tests to check your current level of preparation. Working through a number of practice questions makes you confident enough to pass the exam on the first attempt. We also provide 121+ videos and 86 AWS hands-on labs where you can apply your learning in a live AWS environment.

If you are looking for help choosing the best AWS certification, read our blog post on which AWS certification exams are suitable for you.

What are you thinking now? Give your AWS Developer Associate exam preparation a new edge with these AWS CDA practice exam questions.
