Google Kubernetes Engine (GKE) provides a managed environment for deploying, scaling, and managing containerized applications on Google Cloud infrastructure.

For a Google Cloud Certified Professional Cloud Architect, expertise in Google Kubernetes Engine (GKE) is essential.

In this blog, you’ll learn about Google Kubernetes Engine, its features, its modes of operation, and how to create a simple application using GKE in a hands-on lab setting.

Let’s look at it in more detail!

What is Google Kubernetes Engine?

Google Kubernetes Engine is a feature-rich managed Kubernetes platform that facilitates the deployment, setup, and orchestration of containers on Google Cloud infrastructure. A GKE environment typically consists of several Google Compute Engine instances grouped together to form a Kubernetes cluster.

GKE relies on Google Compute Engine (GCE) to offer an adaptable and flexible framework for orchestrating containers in Kubernetes clusters. By letting cluster administrators select from a variety of Kubernetes releases, GKE enables them to optimize operations while maintaining stability and performance.

Why Choose Google Kubernetes Engine?

One major reason for using Kubernetes is the flexibility it offers. It is designed so that it can be installed and used anywhere, in private, public, or hybrid cloud environments. It helps companies improve security, reliability, and availability.

Here are some core benefits of using Kubernetes:

- Open Source

- Increased Productivity

- Multi-Cloud Capability

- Portability & Flexibility

To manage containerized applications on Google Cloud, we turn to Google Kubernetes Engine. Here are some more reasons for adopting it:

- The first and perhaps most apparent factor is the team behind it. Since Kubernetes originated at Google and GKE is a Google product, GKE provides seamless integration with various Google services. Additionally, newly introduced features or tools are likely to be available on GKE before other platforms.

- Furthermore, GKE offers built-in node auto-scaling, which adds or removes nodes as workload demand changes.

- Lastly, Google Kubernetes Engine is often regarded as one of the most cost-effective managed Kubernetes services among the top three vendors.

These considerations collectively highlight why GKE would be the preferred option for individuals working with containers.

Features of GKE

Some of the distinct features of Kubernetes Engine are:

- Pod and Cluster Autoscaling: GKE offers horizontal pod autoscaling based on CPU utilization and other metrics, and vertical pod autoscaling based on CPU and memory usage.

- Kubernetes Applications: Google offers prebuilt applications with additional features such as portability, licensing, and billing. Using such applications can save setup work and improve user productivity.

- Integrated logging and monitoring: GKE provides logging and monitoring features with simple checkbox configuration, which makes it easier to gain insight into how the application is running.

- Fully Managed: GKE clusters are fully managed by Google Site Reliability Engineers (SREs), who ensure that the cluster stays available and up to date.
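To make the horizontal pod autoscaling idea concrete, here is a minimal sketch of the core scaling rule documented for the Kubernetes Horizontal Pod Autoscaler. It is a simplification: the real autoscaler also applies a tolerance, minimum and maximum replica bounds, and stabilization windows. The function name and sample numbers are illustrative assumptions, not part of GKE.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Simplified HPA rule: ceil(currentReplicas * currentMetric / targetMetric)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    return math.ceil(current_replicas * (current_metric / target_metric))


# 4 pods averaging 90% CPU against a 60% target scale out to 6 pods.
print(desired_replicas(4, 90, 60))
# 3 pods averaging 30% CPU against a 60% target scale in to 2 pods.
print(desired_replicas(3, 30, 60))
```

The same rule covers both scale-out and scale-in, which is why a single target value is enough to configure it.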

Modes of Operation in Google Kubernetes Engine

GKE works with containerized applications, that is, applications packaged into platform-independent, isolated user-space instances with the help of tools such as Docker.

In both GKE and Kubernetes, these containers, whether for applications or batch jobs, are collectively referred to as workloads.

Before migrating the workload into the GKE cluster, users need to package the workload into a container.

When creating a cluster on Google Kubernetes Engine, users have the option to choose from two operational modes outlined below:

1.) Standard mode

This mode, the original mode introduced with GKE, is still in use today. It provides users with flexibility in node configuration and complete control over managing clusters and node infrastructure. It is well-suited for those who seek comprehensive control over every aspect of their GKE experience.

2.) Autopilot mode

In this mode, Google handles the entire management of node and cluster infrastructure, offering a more hands-off approach for users. However, it comes with certain limitations, such as a restricted choice of operating system, currently limited to two options, and the availability of most features exclusively through the CLI.

Google Kubernetes Engine Architecture

Let’s explore the foundational architecture of Google Kubernetes Engine (GKE) with a focus on key components that facilitate its seamless operation.

Control Plane

The control plane runs processes such as the Kubernetes API server, scheduler, and core resource controllers. GKE manages the control plane directly, based on the configured cluster settings.

Clusters

Clusters, as previously discussed, are groups of machines that work together. Kubernetes coordinates all the machines within the cluster.

Nodes

Nodes are the building blocks of a cluster. Each node is a single machine, and a cluster can contain one node or many nodes working together to execute containerized applications. Each node runs the essential services that support the containers scheduled on it. Nodes with identical configurations form a Node Pool.

Pods

Pods are the smallest deployable computing units managed by Kubernetes. A cluster may host many pods, and each pod contains one or more containers that run the necessary applications.

Containers

Containers provide a form of operating system virtualization. GKE dynamically handles the distribution and scheduling of containers across the nodes of a cluster, optimizing efficiency. Containers can hold anything from microservices to larger applications, each running in an isolated environment.
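The containment relationship described above (clusters hold node pools, node pools hold nodes, nodes run pods, pods hold containers) can be sketched as a small data model. This is only an illustration of the hierarchy, not how GKE represents these objects internally; all class names, the `e2-medium` machine type, and the image name are assumptions chosen for the example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Container:
    name: str
    image: str


@dataclass
class Pod:
    name: str
    containers: List[Container]  # a pod holds one or more containers


@dataclass
class Node:
    name: str
    pods: List[Pod] = field(default_factory=list)


@dataclass
class NodePool:
    name: str
    machine_type: str  # nodes in one pool share the same configuration
    nodes: List[Node] = field(default_factory=list)


@dataclass
class Cluster:
    name: str
    node_pools: List[NodePool] = field(default_factory=list)

    def container_images(self) -> List[str]:
        """Walk the hierarchy and list every container image in the cluster."""
        return [
            container.image
            for pool in self.node_pools
            for node in pool.nodes
            for pod in node.pods
            for container in pod.containers
        ]


# Hypothetical two-node cluster with a single pod scheduled on the first node.
web = Pod("web", [Container("app", "gcr.io/my-project-id/gke-app:v1")])
pool = NodePool("default-pool", "e2-medium", [Node("node-1", [web]), Node("node-2")])
cluster = Cluster("demo", [pool])
print(cluster.container_images())
```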

Virtual Private Cloud

The Virtual Private Cloud (VPC) is responsible for enforcing cluster isolation and enables the setup of routing and network policies. GKE clusters are formed within a subnet in a Google Cloud Platform (GCP) virtual private cloud. This VPC allocates IP addresses to pods based on native routing rules. Communication between clusters in different VPCs is achieved through VPC network peering. Additionally, GKE clusters can connect with external on-premises clusters or third-party cloud platforms using Cloud Interconnect or Cloud VPN routers.

Cluster Master

The Cluster Master is the managed instance that runs GKE control plane components, including the API server, resource controllers, and the scheduler. These components collectively manage storage, compute, and network resources for workloads within the GKE cluster. The GKE control plane oversees various aspects of the containerized application’s lifecycle, including scheduling, scaling, and upgrades.

Getting Started with Google Kubernetes Engine

In the Whizlabs hands-on labs, type “Getting Started with Google Kubernetes Engine” in the search bar. Once you find the desired lab page, follow the steps outlined in the lab task.

Sign in to the GCP console

- To sign in, paste your email address into the Google Sign-In page, then click “Next.”

- After entering your password, click “Next.”

- Click the “I Understand” button to accept the Google Workspace Terms of Service.

- Review the Google Cloud Terms of Service and click the “Agree and Continue” button to accept them.

- Click the dropdown menu to choose the project from the top bar.

- Click on the project.

Creating a Simple Application using GKE: A Step-by-Step Guide

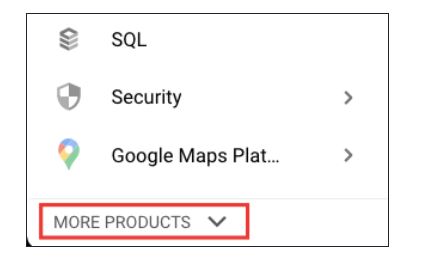

To view the collection of frequently used GCP services, click the hamburger button in the upper left corner. You can also scroll down and click More Products to view more services.

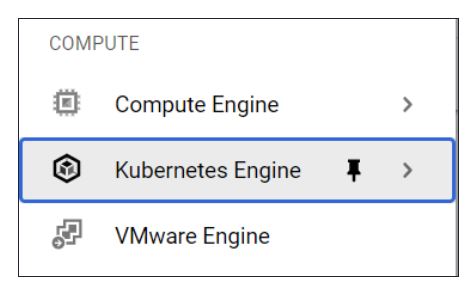

Click on Kubernetes Engine.

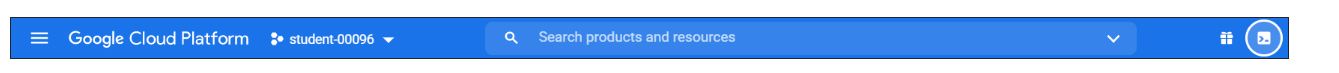

Click on the Cloud Shell icon in the top right corner as shown below.

Your screen will load with the Cloud Shell Window at the bottom.

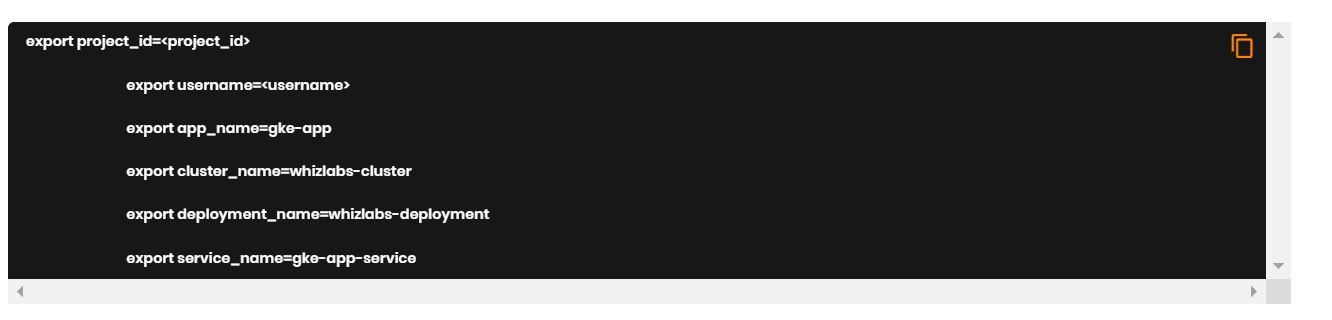

- Rather than retyping the project ID each time, set a project ID variable to the provided project ID so that later commands can reuse it.

- Don’t include @whizlabs.in; use only the user name stated in the lab credentials section.

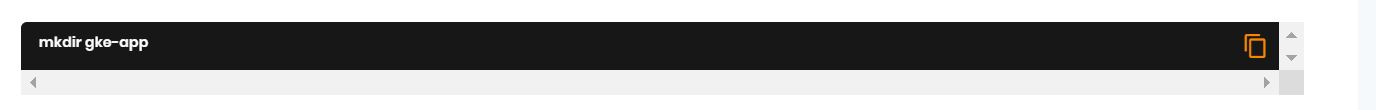

Enter the following command to create a directory named gke-app.

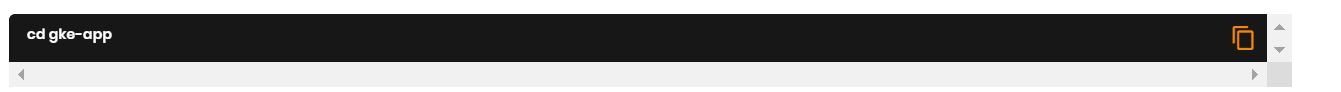

Enter the command to change into the new directory.

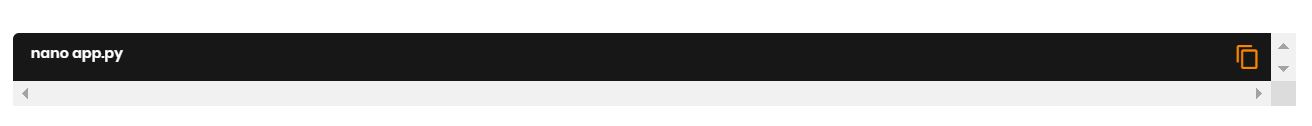

Enter the command below to create a file named app.py and open it in a text editor.

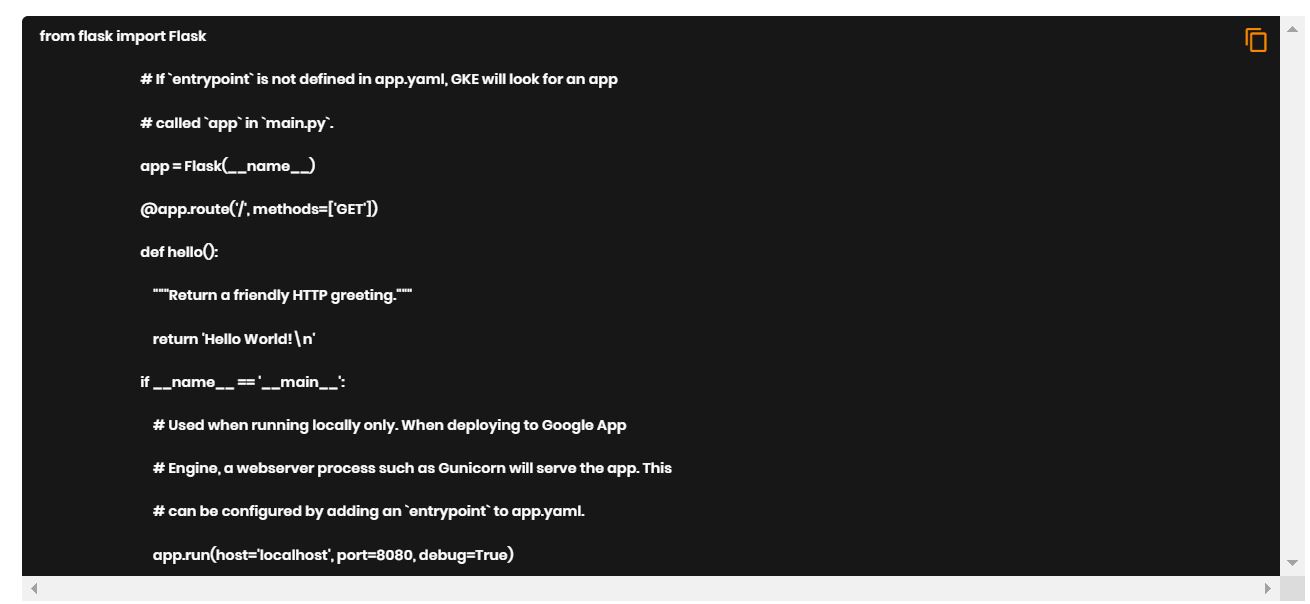

Enter the given code, which will print Hello World. After writing the code, press CTRL + O to save, then press Enter. Press CTRL + X to exit the editor.

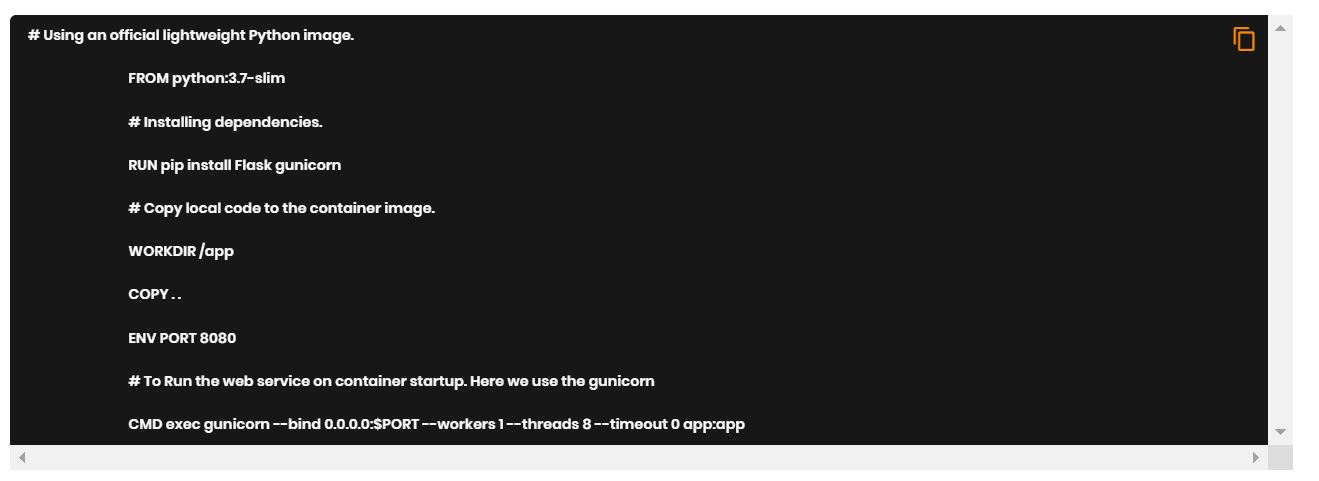
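The lab's exact code is only visible in the screenshot, so the following is a minimal sketch of what an app.py that answers every request with Hello World could look like. It uses only the Python standard library (the lab may well use a web framework instead), and the port 8080 and the `serve` helper are assumptions made for this example.

```python
from wsgiref.simple_server import make_server


def app(environ, start_response):
    """WSGI application that answers every request with plain-text Hello World."""
    body = b"Hello World"
    headers = [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ]
    start_response("200 OK", headers)
    return [body]


def serve(port: int = 8080) -> None:
    """Listen on all interfaces so the container can receive external traffic."""
    with make_server("0.0.0.0", port, app) as httpd:
        httpd.serve_forever()


# To run this file as the container's entry point, call serve() at the bottom.
```

Binding to 0.0.0.0 rather than localhost matters inside a container, because traffic arrives from outside the container's own network namespace.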

Execute the following command to create a file named Dockerfile and open it in a text editor:

Input the provided code into the Dockerfile. Once you have entered the code, press CTRL + O to save and then press Enter. To exit the editor, press CTRL + X.

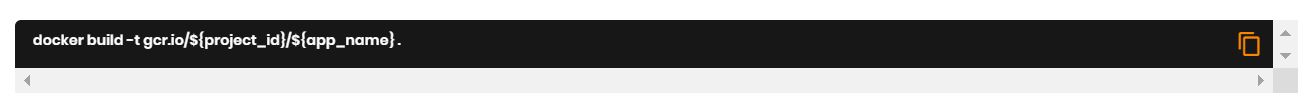

To create your Docker image, enter the given command (the current directory is indicated by a dot at the end of the command).

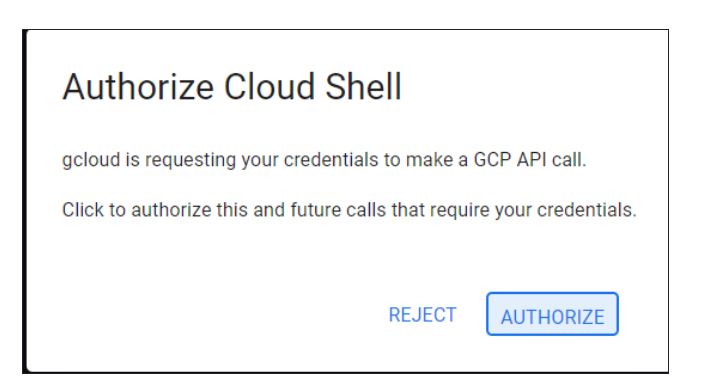

Now click on Authorize.

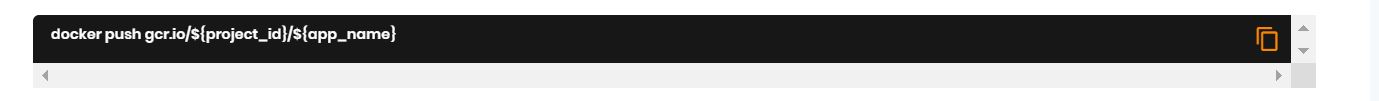

Enter the given command to push the Docker image to the Container Registry.

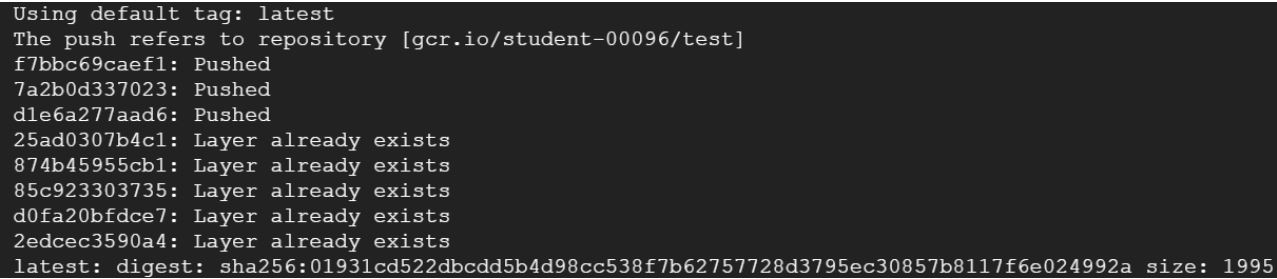
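The build and push commands themselves appear only in the screenshots. As a rough sketch, the helper below assembles commands of the usual shape, assuming the conventional Container Registry image path `gcr.io/<project-id>/<image>:<tag>`. The project ID `my-project-id`, the image name `gke-app`, and the tag `v1` are placeholders, not values from the lab.

```python
import shlex
from typing import List


def image_uri(project_id: str, name: str = "gke-app", tag: str = "v1") -> str:
    """Container Registry path for an image (name and tag are placeholders)."""
    return f"gcr.io/{project_id}/{name}:{tag}"


def docker_commands(project_id: str, context_dir: str = ".") -> List[List[str]]:
    """Build the image from context_dir, then push it to the registry."""
    uri = image_uri(project_id)
    return [
        ["docker", "build", "-t", uri, context_dir],
        ["docker", "push", uri],
    ]


# Print the commands instead of running them, so they can be reviewed first.
for cmd in docker_commands("my-project-id"):
    print(shlex.join(cmd))
```

The trailing dot in the build command is the build context, which is the current directory mentioned in the build step.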

The output should look like the following:

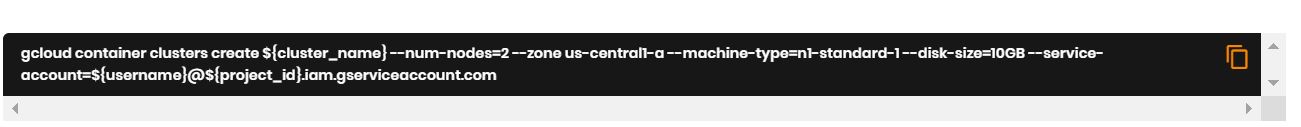

To set up a cluster with two nodes and a 10 GB boot disk, use the command below. A service account with the necessary permissions has already been set up for this lab.

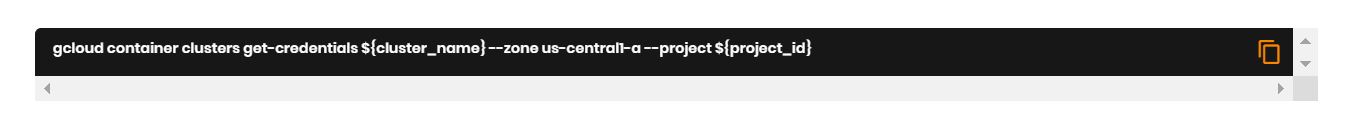

Enter the following command to connect to the cluster you created.

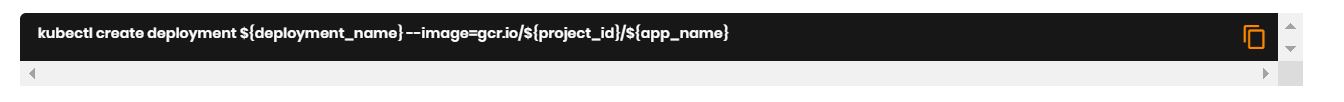
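For readers without access to the screenshots, here is a hedged sketch of how the cluster creation and connection commands are typically assembled with the `gcloud container clusters` command group. The cluster name `hello-cluster` and zone `us-central1-a` are placeholders, and the lab's service account setting is omitted because its value is not given in the text.

```python
import shlex
from typing import List


def create_cluster_cmd(name: str, zone: str, num_nodes: int = 2, disk_size_gb: int = 10) -> List[str]:
    """Command for a cluster with the node count and boot disk size from the lab."""
    return [
        "gcloud", "container", "clusters", "create", name,
        f"--zone={zone}",
        f"--num-nodes={num_nodes}",
        f"--disk-size={disk_size_gb}",
    ]


def get_credentials_cmd(name: str, zone: str) -> List[str]:
    """Command that configures kubectl to talk to the new cluster."""
    return ["gcloud", "container", "clusters", "get-credentials", name, f"--zone={zone}"]


# Placeholder cluster name and zone; substitute the values from your lab.
print(shlex.join(create_cluster_cmd("hello-cluster", "us-central1-a")))
print(shlex.join(get_credentials_cmd("hello-cluster", "us-central1-a")))
```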

Enter the command below to deploy the image.

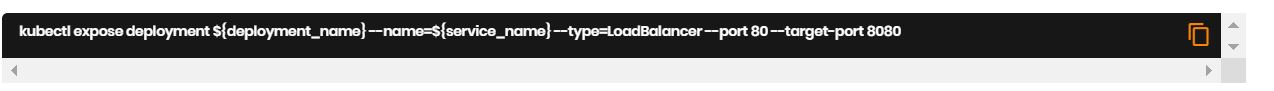

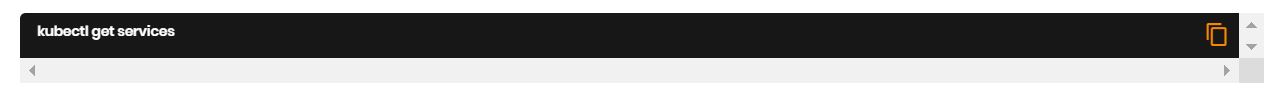

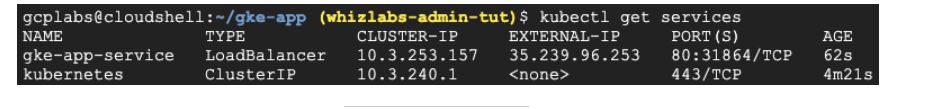
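The lab deploys the image with commands shown in the screenshots. As a declarative alternative (not the lab's own method), the sketch below generates a Deployment and a LoadBalancer Service as a JSON manifest that could be saved to a file and passed to `kubectl apply -f`. The application name, image path, and the ports 80 and 8080 are assumptions for illustration.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 1, container_port: int = 8080) -> dict:
    """Deployment that runs `replicas` pods, each with one container from `image`."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": container_port}]}
                    ]
                },
            },
        },
    }


def service_manifest(name: str, port: int = 80, target_port: int = 8080) -> dict:
    """LoadBalancer Service that exposes the pods on an external IP address."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


# Placeholder name and image; a List bundles both objects into one manifest.
bundle = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [
        deployment_manifest("gke-app", "gcr.io/my-project-id/gke-app:v1"),
        service_manifest("gke-app"),
    ],
}
print(json.dumps(bundle, indent=2))
```

The Service selector must match the pod labels in the Deployment template, which is why both functions derive the `app` label from the same name.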

Enter the command below to list the services.

The output shows the external IP address, for example 35.239.96.253. Enter this address in your browser’s address bar.

The final output, Hello World, is visible.

Refresh the Google Cloud Console page to confirm that the GKE cluster was created and is running.

Use Cases of Google Kubernetes Engine

Here are the common use cases of Google Kubernetes Engine:

1.) Continuous Delivery Pipeline

GKE makes it simple to install, update, and manage apps and services, which facilitates quick application creation and iteration.

To automatically build, test, and deploy an application, users configure GKE, Cloud Build, Cloud Source Repositories, and Spinnaker for Google Cloud. When the code changes, the continuous delivery pipeline automatically rebuilds, retests, and redeploys the updated version of the app.

2.) Migrate a 2-tier application to GKE

Users can move workloads and convert them directly into containers in GKE using Migrate for Anthos. For example, a two-tier LAMP stack application, including its application and database virtual machines, can be moved from VMware to Google Kubernetes Engine.

By restricting database access to the application container and preventing access from outside the cluster, customers can increase security. Use kubectl to obtain authenticated shell access instead of SSH.

FAQs

Is Google Kubernetes Engine free?

GKE has a free tier, but its features and resources are restricted. Additional features are available on a pay-per-use basis.

Does using GKE require any prior knowledge?

Basic familiarity with containers helps, but deep expertise is not required. With Autopilot mode, users no longer have to micromanage every aspect of their clusters because Google takes care of the infrastructure for them.

Is Kubernetes a container?

No. Kubernetes is a platform for orchestrating and managing application containers, not a container itself.

How much does the Google Kubernetes Engine cost?

Google Kubernetes Engine pricing depends on usage.

Clusters on GKE operating in standard mode incur a cluster management fee of $0.10 per hour per cluster, in addition to the resources consumed by the workload. The free tier provides monthly credits that can offset this fee for a single zonal or Autopilot cluster per billing account.

For Autopilot clusters, the cluster management fee is also $0.10 per hour per cluster. Beyond that, Autopilot charges are based on the CPU, memory, and storage resources that the running pods request rather than on the number of nodes.
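To get a feel for the $0.10 per hour per cluster figure quoted above, the following sketch turns it into a monthly amount. It covers only the per-cluster fee, not node or workload resources, and the 730-hour average month is an assumption used for estimation. Check the official pricing page for current rates.

```python
from decimal import Decimal

# USD per cluster per hour, as quoted above.
HOURLY_CLUSTER_FEE = Decimal("0.10")


def management_fee(clusters: int, hours: int) -> Decimal:
    """Per-cluster fee only; compute, storage, and network are billed separately."""
    return HOURLY_CLUSTER_FEE * clusters * hours


# One cluster for an average month of about 730 hours.
print(management_fee(1, 730))
# Three clusters for a 31-day month (744 hours).
print(management_fee(3, 744))
```

Using `Decimal` instead of floating point keeps the cents exact, which is a sensible habit for any billing arithmetic.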

Conclusion

By following the steps and insights outlined in this blog, users can gain a solid foundation for effectively utilizing GKE to manage and deploy containerized applications on the Google Cloud platform.

Utilizing hands-on labs and sandboxes is indeed an excellent approach to practical learning.

This approach not only helps in mastering GKE but also provides a broader understanding of the Google Cloud ecosystem.

- User’s Guide to Getting Started with Google Kubernetes Engine - March 1, 2024