Snowpipe is the Continuous Data Ingestion service offered by Snowflake.

Snowpipe initiates Data Loading from files the moment they are available in a stage. This allows you to load data from files in micro-batches rather than manually executing COPY statements on a schedule to enable large load batches.

Snowflake supports loading data from any of the three cloud storage service providers, i.e. Amazon S3, Microsoft Azure, and Google Cloud Storage irrespective of the cloud platform.

How does Snowpipe works?

Snowpipe loads data once it is available in Stage as per the COPY statement defined in the referenced pipe.

A Pipe is a named, first class Snowflake object that is used by Snowpipe and carries a COPY statement. The COPY statement recognizes the source location of the target table and data files. Snowpipe supports all data types including semi-structured types like Avro and JSON.

Snowflake follows one of these methods for detecting staged files:

- Calling Snowpipe REST endpoints: In this, the client application makes a call to a public REST endpoint using a pipe object name and a data filename list. If new data files that match the list are found in the stage referenced by the piped object, they get added in the loading queue. Thus, data is loaded from the queue using Snowflake-provided compute resources into a Snowflake table on the basis of parameters defined in the queue.

- Automating Snowpipe with Cloud Messaging: Here, automated data loads enable event notifications for Cloud Storage that informs Snowflake about the arrival of new data files for loading. Snowpipe then copies these files into a queue, from where they get loaded into the target table in a continuous and serverless way on the basis of parameters defined in a specified pipe object.

Snowflake supports the use of standard SQL for querying data files located in the internal stage, i.e. Snowflake, and named external stages which could be Google Cloud Storage, Microsoft Azure, and Amazon S3.

All you need to know about Snowflake Certifications

Features that Snowpipe offer

- Data Duplication: It prevents the reloading of same files and data duplication in tables, and does not load files with the same name even if they are modified later.

- Serverless Compute Model: It provides a pipeline and enables loading of fresh data in micro-batches the moment it is available.

- Single Queue Data Loading: For every pipe object, Snowflake creates a single queue for the sequencing of data files that are waiting to load.

For supporting the creation and management of pipes, Snowflake offers special DDL commands which are,

- CREATE PIPE

- DESCRIBE PIPE

- ALTER PIPE

- DROP PIPE

- SHOW PIPES

Differences between Snowpipe and Bulk Loading

| Snowpipe | Bulk Loading | |

| Authentication | Requires key pair with JWT | Client supported security options |

| Transactions | Loads are split or combined into single or multiple transactions | Single Transaction Loading |

| Cost | Billed as per the compute resources used in Snowpipe Warehouse during file loading | Billed for the time a Virtual Warehouse is active |

| Compute Resources | Uses Snowflake-supplied Compute Resources | Needs User-specified Warehouse |

| Load History | Stored in the metadata of the pipe for 14 days | Stored in the metadata of the target table for 64 days |

Data in Snowflake is maintained in databases. Each database consists of one or more schemas. Schemas are logical groupings of Database objects including views and tables. Databases carry Schemas and Schemas further comprise objects. Snowflake does not place any restrictions on the number of databases, schemas, or objects that could be created.

Tables store data in the form of columns and rows. Databases carry permanent tables that are persistent and stay in memory till the time you drop them.

Snowflake allows the usage of permanent tables as the default table type when creating tables. However, tables can also be defined as either temporary or transient in Snowflake. These tables prove extremely useful when you need to store data that is not required to be maintained for a long period of time, that is, Transitory Data.

Similar to relational databases, Snowflake supports the creation of Temporary tables that are able to hold the data that is not permanent. At multiple times, we require the data only for the current session and so temporary tables are needed. A temporary table is only visible in the current session, that is they exist only in the session that they have been created and are purged once the session ends. The data thus created cannot be recovered later. Like other table types, temporary tables also belong to a specific database and schema.

Even though you have a permanent table existing, you are allowed to create a temporary table with the same name since it is only session specific.

Restrictions that come along

Whenever you wish to view the existing tables using the ‘show tables’ statement, you will only get to see the existing permanent tables and no temporary tables.

- Cloning is not allowed

- No fail safe period, i.e. the moment session ends, the table and data vanishes

Transient Tables in Snowflake

Snowflake also provides Transient tables which remain until dropped explicitly and stay available for the users as per the privileges that they hold. These tables are created for the transactory data that is required even after the current session.

Temporary Tables vs Transient Tables

| Temporary Table | Transient Table |

| Created using the keyword ‘temporary’ | Created using the keyword ‘transient’ |

| Used for storing non-permanent, transitory data such as ETL data and session-specific data | Used for the storage of transitory data that is required beyond a session |

| Could carry the same name as the permanent table | Gives error, cannot be of the same name as the permanent table |

| Session-bound | Not bound to a session |

| Not visible using ‘show tables’ | Visible just like permanent tables when using ‘show tables’ |

| Cloning is not allowed | Cloning is not allowed |

| No fail safe period, Data cannot be recovered once the session has ended | No fail safe period, Data cannot be recovered after the time travel retention period has passed |

| Gets deleted once the session ends | Has to be dropped explicitly when the session is finished |

The fail-safe period for a permanent table is 7 days.

Data Security in Snowflake

When we think of migrating our data to the Cloud, the first thing that comes to our mind is Data Security. This is a major concern when you are new to Cloud and do not carry an experienced technical team that knows the whereabouts of Data Protection in Cloud.

When you are using Cloud Storage, Cloud Computing and multiple other Cloud Technologies which run on public networks, it becomes extremely critical to secure and protect data.

Snowflake offers multiple approaches to deal with Data Security. They are,

- Data Encryption: Snowflake provides end-to-end encryption which ensures that only those users are allowed to see data that are allowed through sufficient permissions. When a customer stages its documents into Snowflake’s internal stage, Snowflake encrypts the data dynamically. It follows AES 256 bit encryption with a hierarchical key scheme. This model has keys on four levels,

- Root Key

- Account Master Keys

- Table Master Keys, and

- File keys

This system includes ‘wrapping’ wherein a parent key(higher layer) encrypts all child keys(keys in the layer below).

The Snowflake Provider, command line users, drivers, and the Web UI interact through http connections secured at rest with TLS 1.2.

When a master key is 30 years old, it gets rotated automatically. When you have Periodic Rekeying enabled, Snowflake generates a new encryption key and re-encrypts all the data after a year using this key.

E2EE prevents data from being read at-rest or in-transit by third parties.

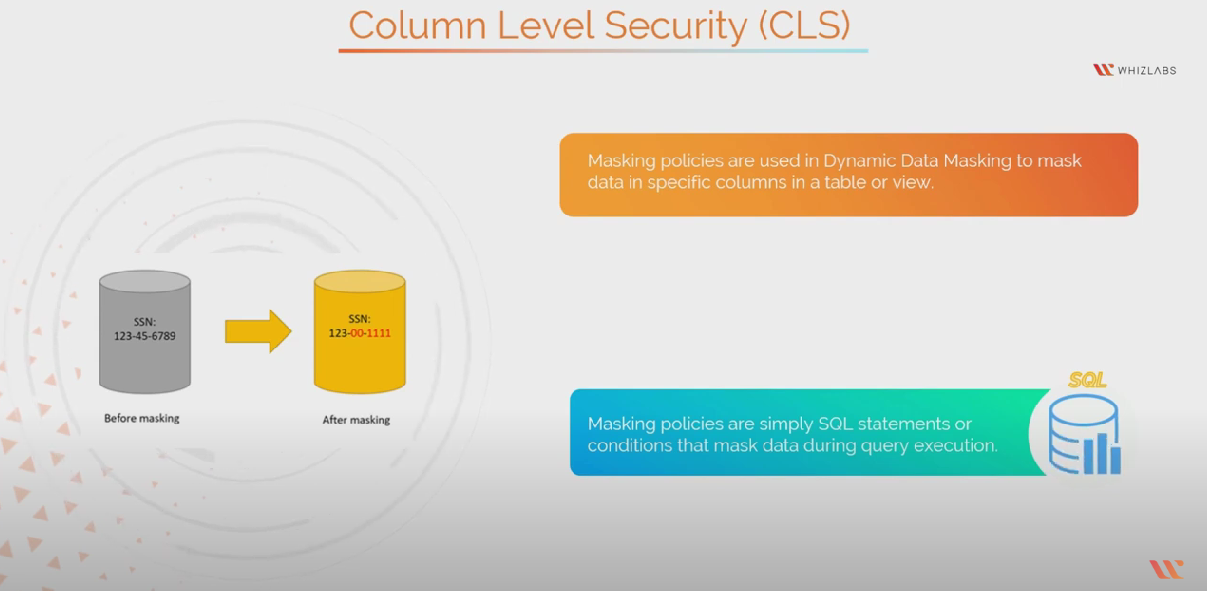

- CLS-Column Level Security or Data Masking: Snowflake’s Column Level Security allows you to mask the columns holding sensitive data including Bank Account no.s, SSN no.s, among others. Snowflake offers data masking through,

-

- External Tokenization: This enables the tokenization of sensitive data before it is loaded into Snowflake. It dynamically detokenizes the data while executing queries by making use of masking policies with External Functions.

- Dynamic Data Masking: Here, masking policies are used to mask the data in specific columns in a view or table. These policies are simple SQL statements/Conditions that mask the data while executing the query. This allows the real data in the table to be the same so no static masking applies. Masking policies can be applied on the basis of User Role/Group enabling masked data for unauthorized users and real data for users with permissions.

- RLS-Row Level Security: Row level security is implemented through Row access policies by deciding which rows should be visible in the query result. A row access policy is a schema-level object that decides which row should be returned as a result of SELECT, UPDATE, MERGE, and DELETE statements.

Snowflake makes use of Views/Secure Views for providing Row Level Security. Data Security can also be controlled by assigning Access Privileged Roles on database objects to users. Information is shared and hidden from the users through views. Data can be concealed or filtered using SQL statements in the definition of View.

Secure Views allows you to hide the SQL statement used in generating the view. A secure view can be constructed using the ‘SECURE’ keyword before the name of the view.

Summary

On a final note, you can clearly understand what is Snowpipe and it’s feature in snowflake that allows you to continuously load data into your snowflake account. This is especially useful if you have a lot of data that is constantly changing, as it allows you to keep your data up-to-date without having to manually load it each time.

There are a few different ways to implement Snowpipe, so be sure to select the best method for your architecture. And, be sure to test the workflow on a small dataset first. This will allow you to accurately assess the cost-benefit of using Snowpipe.

We hope this blog helps in your snowflake data journey. Feel free to comment us if you have queries.

- 25 Free Questions on MS-101: Microsoft 365 Mobility and Security - November 13, 2022

- What is Snowpipe & how does it works? - October 7, 2022

- Preparation Guide on DP-420 Designing and Implementing Cloud-Native Applications Using Microsoft Azure Cosmos DB Certification - September 12, 2022

- Preparation guide on MB-910: Microsoft Dynamics 365 Fundamentals (CRM) Certification Exam - August 5, 2022

- Snowflake Certifications – Which snowflake certification is best for you? - July 11, 2022

- All you need to know about Certified Ethical Hacker Certification - June 16, 2022

- What are Hands-On Labs? A beginner’s guide to Hands-on Labs - May 17, 2022

- 25 Free Question on Salesforce Administrator Certification - April 26, 2022