Came here and looking for DP-203 exam questions? You have certainly landed on the right page. Whizlabs free practice questions not only give you an evaluation of the exam but going through these help you revise the exam-ready concepts.

What do Azure Data Engineers do?

Azure Data Engineers enable stakeholders in the understanding of Data via exploration. Using different tools and techniques, they enable the development and maintenance of compliant and secure Data Processing Pipelines. They further help with the storage and production of cleansed and enhanced datasets for analysis, using multiple Azure Data Services and Languages.

For Who this exam is intended for?

This exam has been tailor-built for candidates who possess a strong knowledge of Data Processing Languages, like Python, Scala, and SQL. They must have a clear understanding of Data Architecture Patterns and Parallel Processing.

The candidates appearing for the DP-203 exam are assumed to be having subject level expertise on the integration, consolidation and transformation of data coming through multiple unstructured and structured data systems, to be formed into a structure capable of building analytics solutions.

What does this exam comprise of?

The DP-203 exam evaluates a candidate on his ability to implement certain technical tasks including,

- Designing and implementation of Data Storage

- Monitoring and optimization of Data Storage and Data Processing.

- Designing and development of Data Processing

- Designing and implementation of Data Security

Ok. Let’s start learning these DP-203 exam questions now!

Domain : Design and implement data storage

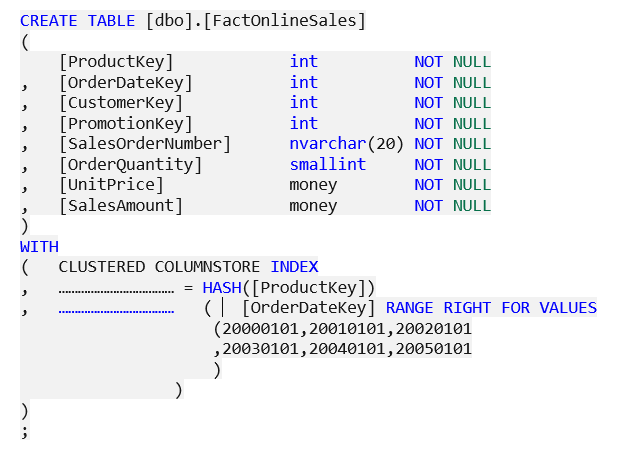

Q1 : You have been assigned the task of partitioning the FactOnlineSales table on the OrderDateKey column in the dedicated SQL pool. For this purpose, you decide to use the CREATE TABLE statement.

Complete the statement by filling the blanks with the right words.

A. Distribution and Partition

B. DistributionTable and PartitionTable

C. Distribution and Collate

D. Partition and Distribution

Correct Answer: A

Explanation:

DISTRIBUTION = HASH ( distribution_column_name ) is the distribution method that assigns every row to one distribution by hashing the value present in distribution_column_name. The right syntax to use partition method is PARTITION ( partition_column_name RANGE [ LEFT | RIGHT ] FOR VALUES ([boundary_value [,…n]])).

Option A is correct. Distribution and Partition are the right options to be used to complete the given Create Table statement.

Option B is incorrect. The right syntax is to use only Distribution and Partition, not DistributionTable and PartitionTable.

Option C is incorrect. The partition should be used instead of Collate.

Option D is incorrect. Distribution and Partition are the right options to use.

References: To know more about partitioning the tables, please visit the below-given link:

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-tables-partition

https://docs.microsoft.com/en-us/sql/t-sql/statements/create-table-azure-sql-data-warehouse?view=aps-pdw-2016-au7

Domain : Design and implement data storage

Q2 : You are working on Azure Data Lake Store Gen1. Suddenly, you realize the need to know the schema of the external data. Which of the following plug-in would you use to know the external data schema?

A. Ipv4_lookup

B. Mysql_request

C. Pivot

D. Narrow

E. infer_storage_schema

Correct Answer: E

Explanation:

infer_storage_schema is the plug-in that helps infer the schema based on the external file contents; when the external data schema is unknown.

Option A is incorrect. The ipv4_lookup plugin checks for an IPv4 value in a lookup table and returns the matched rows.

Option B is incorrect. The mysql_request plugin transfers a SQL query to a MySQL Server network endpoint and returns the 1st row set in the result.

Option C is incorrect. Pivot plug-in is used to rotate a table by changing the unique values from 1 column in the input table into a number of different columns in the output table and perform aggregations wherever needed on any remaining column values that are desired in the final output.

Option D is incorrect. This plug-in is used to unpivot a wide table into a table with only three columns.

Option E is correct. infer_storage_schema plug-in can be used to infer the schema of external data and return it as a CSL schema string.

References: To know more about the external tables and plug-in, please visit the below-given link:

https://docs.microsoft.com/en-us/azure/data-explorer/kusto/management/external-tables-azurestorage-azuredatalake

https://docs.microsoft.com/en-us/azure/data-explorer/kusto/query/inferstorageschemaplugin

Domain : Design and implement data security

Q3 : You work in Azure Synapse Analytics dedicated SQL pool that has a table titled Pilots. Now you want to restrict the user access in such a way that users in ‘IndianAnalyst’ role can see only the pilots from India. Which of the following would you add to the solution?

A. Table partitions

B. Encryption

C. Column-Level security

D. Row-level security

E. Data Masking

Correct Answer: D

Explanation:

Row-level security is applicable on databases to allow fine-grained access to the rows in a database table for restricted control upon who could access which type of data.

Option A is incorrect. Table partitions are generally used to group similar data.

Option B is incorrect. Encryption is used for security purposes.

Option C is incorrect. Column level security is used to restrict data access at the column level. In the given scenario, we need to restrict access at the row level.

Option D is correct. In this scenario, we need to restrict access on a row basis, i.e only for the pilots from India, there Row-level security is the right solution.

Option E is incorrect. Sensitive data exposure can be limited by masking it to unauthorized users using SQL Database dynamic data masking.

References: To know more about Row-level security, please visit the below-given links:

https://azure.microsoft.com/en-in/resources/videos/row-level-security-in-azure-sql-database/

https://techcommunity.microsoft.com/t5/azure-synapse-analytics/how-to-implement-row-level-security-in-serverless-sql-pools/ba-p/2354759

Domain : Design and implement data storage

Q4 : While working on the project, you realize that the delta table is not correct. One of your friends suggests deleting the whole directory of the table and creating a new table on the same path. Would you follow the suggested solution?

A. Yes

B. No

Correct Answer: B

Explanation:

Deleting the whole directory of a Delta table and creating a new table on the same path is not a recommended solution as:

A directory may consist of very large files and deleting the directory can consume days or even hours. Therefore, it is not an efficient solution.

All the content of the deleted files is lost and if by mistake you delete a wrong file, it is very hard to recover it.

Deleting the directory is not atomic. While table deletion is in progress, a concurrent query reading the table can view a partial table or even fail.

Reference: To know more about best practices while using Delta Lake, please visit the below-given link: https://docs.microsoft.com/en-us/azure/databricks/delta/best-practices

Domain : Design and implement data storage

Q5 : The partition specifies how Azure storage load balances entities, messages, and blobs across servers to achieve the traffic requirements of these objects. Which of the following represents the partition key for a blob?

A. Account name + Table Name + blob name

B. Account name + container name + blob name

C. Account name + Queue name + blob name

D. Account Name + Table Name + Partition Key

E. Account Name + Queue Name

Correct Answer: B

Explanation:

For a blob, the partition key consists of account name + container name + blob name. Data is partitioned into ranges using these partition keys and these ranges are load balanced throughout the system.

Option A is incorrect. For a blob, the partition key includes account name + container name + blob name.

Option B is correct. For a blob, the partition key includes account name + container name + blob name.

Option C is incorrect. Account name + Queue name + blob name is not the right partition key for a blob.

Option D is incorrect. For an entity in a table, the partition key includes the table name and the partition key.

Option E is incorrect. For a message in a queue, the queue name is the partition key itself.

Reference: To know more about Partitioning Azure Blob Storage, please visit the below-given link: https://docs.microsoft.com/en-us/azure/architecture/best-practices/data-partitioning-strategies

Domain : Design and develop data processing

Q6 : Data Cleansing in Data Quality Services (DQS) includes a 2-step process for data cleansing: computer-assisted and interactive cleansing. Depending upon the computer-assisted cleansing process, during the interactive cleansing, DQS gives the data steward with information that is needed to make a decision about modifying or changing the data. For this purpose, DQS classifies the data into 5 tabs. From the below-given option, choose the tab that is not among these tabs.

A. Invalid

B. Valid

C. Suggested

D. New

E. Correct

F. Corrected

Correct Answer: B

Explanation:

During the interactive cleansing, Data Quality Services (DQS) classifies the data in these 5 tabs: Suggested, New, Invalid, Corrected, and Correct.

Option A is incorrect. Invalid tab has the values that were specified as invalid in the domain in the knowledge base or values that failed reference data or a domain rule.

Option B is correct. There is no such tab with the name valid.

Option C is incorrect. The suggested tab consists of the values having a confidence level greater than the auto-suggestion threshold value but lesser than the auto-correction threshold value for which Data Quality Services (DQS) found suggestions.

Option D is incorrect. The new tab consists of the valid values for which Data Quality Services (DQS) doesn’t have sufficient information (suggestion), and hence can’t be mapped to any other tab.

Option E is incorrect. The correct tab is for the values which were found correct.

Option F is incorrect. The corrected tab is for the values that are corrected by Data Quality Services (DQS) during the automated cleansing.

Reference: To know more about data cleansing, please visit the below-given link: https://docs.microsoft.com/en-us/sql/data-quality-services/data-cleansing?view=sql-server-ver15

Domain : Design and implement data security

Q7 : You need to design an enterprise data warehouse in Azure SQL Database with a table titled customers. You need to ensure that the customer supportive staff can identify the customers by matching the few characters of their email addresses but the full email addresses of the customers should not be visible to them. Which of the following would you include in the solution?

A. Row-level security

B. Encryption

C. Column Level Security

D. Dynamic Data Masking

E. Any of the above can be used

Correct Answer: D

Explanation:

Dynamic data masking is helpful in preventing unauthorized access to sensitive data by empowering the clients to specify how much of the sensitive data to disclose with minimum impact on the application layer. In this policy-based security feature, the sensitive data is hidden in the output of a query over specified database fields, but there is no change in the data in the database.

For example: *******abc@gmail.com

Option A is incorrect. Row-level security is used to enable the restricted access i.e who can access what type of data.

Option B is incorrect. Encryption is not the right solution.

Option C is incorrect. Column level security won’t help in limiting the exposure of sensitive data.

Option D is correct. In the given scenario, there is a need to use Dynamic data masking to limit the sensitive data exposure to non-privileged users.

Option E is incorrect. Dynamic Data Masking is the right answer.

Reference: To know more about dynamic data masking, please visit the below-given link: https://docs.microsoft.com/en-us/azure/azure-sql/database/dynamic-data-masking-overview

Domain : Design and implement data storage

Q8 : You need to create a quick object in a test environment and therefore you decide to create a temporal table with an “anonymous” history table. From the given below statement/remarks about the history table in this context, choose the statement(s) that is/are true.

A. You need to manually create the anonymous history table and provide its specific schema

B. The history table is created as a rowstore table

C. The history table is created as a columnstore table

D. A default clustered index is developed for the history table

E. A history table is always uncompressed. No compression is ever applied on the history table

Correct Answers: B and D

Explanation:

An anonymous history table is automatically built in the same schema as the temporal or current table. The history table is built as a rowstore table. If possible, page compression is applied on the history table otherwise the table remains uncompressed. For example, few table configurations, like SPARSE columns, don’t allow compression.

Option A is incorrect. An anonymous history table is automatically built in the same schema as the temporal or current table.

Option B is correct. It is true that the history table is built as a rowstore table.

Option C is incorrect. The history table is created as a rowstore table, not columnstore table.

Option D is correct. A default clustered index is developed for the history table with an auto-generated name with the format IX_<history_table_name>. This index has the PERIOD columns (end, start).

Option E is incorrect. It is not true that the history table always remains uncompressed. If possible, page compression is applied on the history table otherwise the table remains uncompressed. For example, few table configurations, like SPARSE columns, don’t allow compression.

Reference: To know more about creating a system-versioned temporal table, please visit the below-given link: https://docs.microsoft.com/en-us/sql/relational-databases/tables/creating-a-system-versioned-temporal-table?view=sql-server-ver15

Domain : Design and develop data processing

Q9 : There are a number of various analytical data stores that use different languages, models, and provide different capabilities. Which of the following is a low-latency NoSQL data store that provides a high-performance and flexible option to query structured and semi-structured data?

A. Azure Synapse Analytics

B. HBase

C. Spark SQL

D. Hive

E. None of these

Correct Answer: B

Explanation:

HBase is a low-latency NoSQL data store that provides a high-performance and flexible option to query structured and semi-structured data. The primary data model used by HBase is the Wide column store.

Option A is incorrect. Azure Synapse is a managed service depending upon the SQL Server database technologies and is optimized for supporting large-scale data warehousing workloads.

Option B is correct. HBase is a low-latency NoSQL data store that provides a high-performance and flexible option to query structured and semi-structured data.

Option C is incorrect. Spark SQL is an API developed on Spark that enables the creation of data frames and tables which are possible to be queried using SQL syntax.

Option D is incorrect. It is HBase, not Hive that is a low-latency NoSQL data store that provides a high-performance and flexible option to query structured and semi-structured data.

Option E is incorrect. HBase is the right answer.

Reference: To know more about batch processing, please visit the below-given link: https://docs.microsoft.com/en-us/azure/architecture/data-guide/big-data/batch-processing

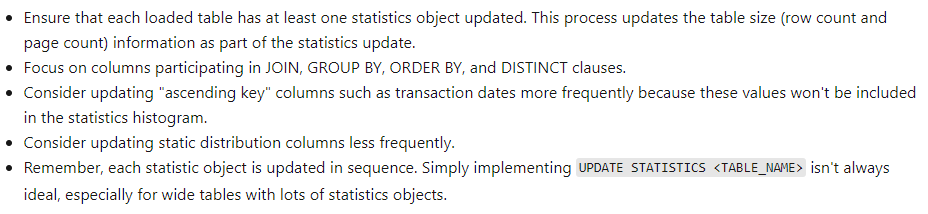

Domain : Monitor and optimize data storage and data processing

Q10 : You work in an Azure Transformational Logics (ATL) company and you have been given the responsibility to create and update query-optimization statistics utilizing the Synapse SQL resources in a dedicated SQL pool. The following are the guiding principles recommended for updating the statistics during the load process. Which of the following is/are not true?

A. Ensure that every loaded table is having at least 1 statistics object updated

B. Focus on the columns participating in ORDER BY, GROUP BY, JOIN and DISTINCT clauses

C. Update “ascending key” columns like order dates more frequently as these values are not considered/included in the statistics histogram

D. Update static distribution columns more frequently

E. None of these

Correct Answer: D

Explanation:

The below-given guiding principles are recommended to update the statistics during the load process:

Option A is incorrect. It is true that you should ensure that every loaded table is having at least 1 statistics object updated.

Option B is incorrect. The given one is also a guiding principle to update the statistics during the load process.

Option C is incorrect. The given one is also a guiding principle to update the statistics during the load process.

Option D is correct. Rather than more frequently, you need to update static distribution columns less frequently.

Option E is incorrect. Option D describes the wrong principle.

Reference: To know more about Statistics in Synapse SQL, please visit the below-given link: https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/develop-tables-statistics#update-statistics

Domain : Design and implement data storage

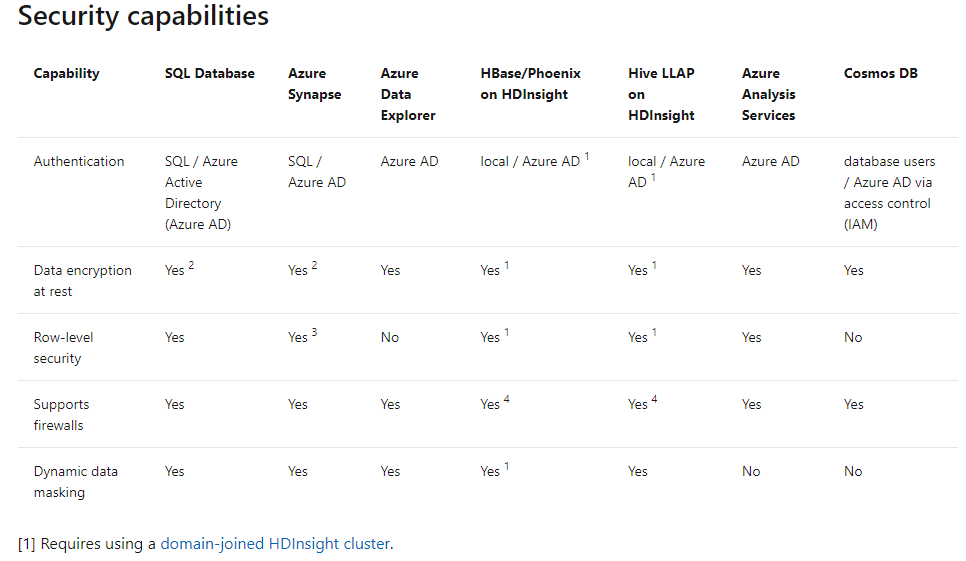

Q11 : There are a number of different options for data serving storage in Azure. These options vary based on the capability they offer. Which of the below-given options don’t offer Row-Level security?

A. SQL Database

B. Azure Data Explorer

C. HBase/Phoenix on HDInsight

D. Hive LLAP on HDInsight

E. Azure Analysis Services

F. Cosmos DB

Correct Answers: B and F

Explanation:

The below table mentions the various security capabilities offered by different data serving storage options.

Option A is incorrect. SQL Database offers Row-level security.

Option B is correct. Azure Data Explorer doesn’t provide Row-level security.

Option C is incorrect. HBase/Phoenix on HDInsight offers Row-level security with domain-joined HDInsight clusters.

Option D is incorrect. Hive LLAP on HDInsight offers Row level security with domain-joined HDInsight clusters.

Option E is incorrect. Azure Analysis Services offers Row-level security.

Option F is correct. Cosmos DB doesn’t provide Row-level security.

Reference: To know more about analytical data stores in Azure, please visit the below-given link: https://docs.microsoft.com/en-us/azure/architecture/data-guide/technology-choices/analytical-data-stores

Domain : Design and develop data processing

Q12 : When you implement the Clean Missing Data module to a set of data, the Minimum missing value ratio and Maximum missing value ratio are two important factors in replacing the missing values. If the Maximum missing value is set to 1, what does it mean?

A. missing values are cleaned only when 100% of the values in the column are missing

B. missing values are cleaned even if there is only one missing value

C. missing values are cleaned only when there is only one missing value

D. missing values won’t be cleaned

E. missing values are cleaned even if 100% of the values in the column are missing

Correct Answer: E

Explanation:

Maximum missing value ratio is specified as the maximum number of missing values that can be present for the operation that is to be executed. By default, the Maximum missing value ratio is set to 1 which indicates that missing values will be cleaned even if 100% of the values in the column are missing.

Option A is incorrect. The use of the word “Only When” does not rightly state the meaning.

Option B is incorrect. Setting Minimum missing value ratio property to 0 actually means that missing values are cleaned even if there is only one missing value.

Option C is incorrect. Minimum and Maximum missing value ratios talk only about minimum and maximum ratios, not a specific number.

Option D is incorrect. The given statement is not right.

Option E is correct. Setting the Maximum missing value ratio to 1 indicates that missing values will be cleaned even if 100% of the values in the column are missing.

Reference: To know more about the clean missing Data Module, please visit the below-given link: https://docs.microsoft.com/en-us/azure/machine-learning/algorithm-module-reference/clean-missing-data

Domain : Design and develop data processing

Q13 : On each file upload, Batch writes 2 log files to the compute node. These log files can be examined to know more about a specific failure. These two files are:

A. fileuploadin.txt and fileuploaderr.txt

B. fileuploadout.txt and fileuploadin.txt

C. fileuploadout.txt and fileuploaderr.txt

D. fileuploadout.JSON and fileuploaderr.JSON

E. fileupload.txt and fileuploadout.txt

Correct Answer: C

Explanation:

When you upload a file, 2 log files are written by Batch to the compute node, named – fileuploadout.txt and fileuploaderr.txt. These log files help to get information about a specific failure. The scenarios where file upload is not done, these fileuploadout.txt and fileuploaderr.txt log files don’t exist.

Option A is incorrect. fileuploadin.txt and fileuploaderr.txt are not the right files.

Option B is incorrect.txt and fileuploadin.txt are not the right log files.

Option C is correct. On each file upload, Batch writes 2 log files to the compute node. These files are fileuploadout.txt and fileuploaderr.txt.

Option D is incorrect.JSON and fileuploaderr.JSON are not the right log files.

Option E is incorrect. fileupload.txt and fileuploadout.txt are not the right files.

Reference: To know more about job and task error checking, please visit the below given link: https://docs.microsoft.com/en-us/azure/batch/batch-job-task-error-checking

Domain : Design and implement data storage

Q14 : You have been assigned the task to manage the storage of consumers profiles and Sales data. A general request is to create a list of “the top 100 consumers including name, account number and sales around for a specific time period” or “who are the consumers within a particular geographic region?”

Is Azure Blob storage a recommended choice for this data?

A. Yes

B. No

Correct Answer: B

Explanation:

Blob is not a recommended choice for structured data that needs to be queried regularly. Blobs have higher latency than memory and local disk and also do not have the indexing feature that increases the databases’ efficiency at running queries.

Reference: To know more about blobs, please visit the below-given link: https://docs.microsoft.com/en-us/azure/storage/blobs/storage-blobs-introduction

Domain : Design and implement data security

Q15 : A famous online payment gateway provider is creating a new product where the users can pay their credit card bills and earn reward coins. As part of compliance, they need to ensure that all the data, including credit card details and PIIs, are securely kept. This product is backed by a dedicated SQL pool in azure Synapse analytics. The major concern is that the database team that performs maintenance should not be able to view the customer’s info. Which of the following can be the best solution?

A. Implement Transparent data encryption

B. Use Azure Defender for SQL

C. Use Dynamic data masking (DDM)

D. Assign only SQL security manager role to maintenance team members

Correct Answer: C

Explanation:

Here there is a lot of critical data and personal information involved. Dynamic data masking is the best solution for this. Consider the case of credit card numbers; using DDM, we can actually hide the numbers in that particular column. For example, if the credit card number is 1234 5678 then the displayed value will be like XXXX XX78. Similarly, we can use masking for other data in other columns where PII is present. The maintenance team with limited permissions will only see the covered data and thus, the data is safe from exploitation.

Option A incorrect: Transparent data encryption is a method used by Azure in its relational database services for encrypting data at rest. This will not be the best solution here.

Option B is incorrect: Azure defender is mainly used to mitigate potential DB vulnerabilities and detect anomalous activities.

Option C is correct: DDM can hide the data columns as required.

Option D is incorrect: Assigning Azure security manager role will grant them access to security features configuration, including the ability to enable or disable DDM. This is exactly the opposite of what is required here.

Reference: To know more about DDM, please refer to the doc below: https://docs.microsoft.com/en-us/azure/azure-sql/database/dynamic-data-masking-overview

Domain : Monitor and optimize data storage and data processing

Q16 : You have a Serverless SQL pool development assigned by your company. This should follow the best practices and optimized solutions. Which of the following solutions will help you increase the performance?

A. Use the same region for Azure Storage account and serverless SQL pool

B. Convert CSV to Parquet

C. Use CETAS

D. Use azure storage throttling

Correct Answers: A, B and C

Explanation:

When Azure Storage account and serverless SQL pool are co-located, the latency of loading will be reduced. Thus, there will be an increase in total performance. In situations where these are in different regions, data has to travel more, increasing the latency.

Parquet is columnar formats and compressed and have a smaller size than CSV. So, the time to read it will be less.

CETAs are parallel operations that create external table metadata and export the result of the SELECT query to a set of files in your storage account. We can enhance the query performances.

Options A, B and C are correct: These are the best practices followed to improve the performance of the Serverless SQL pool.

Option D is incorrect: Storage throttling detection will slow down the SQL pool, and thus the performance will be decreased.

Reference: To know more about Serverless SQL pool development best practices, please refer to the doc below: https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/develop-best-practices#serverless-sql-pool-development-best-practices

Domain : Design and implement data storage

Q17 : You have a traditional data warehouse storage with a snowflake schema with row-oriented storage that takes considerable time and low performance during queries. You plan to use clustered columnstore indexing. Will it improve query performance?

A. Yes

B. No

Correct Answer: A

Explanation:

Most of the traditional data warehouses use row-oriented storage. But columnstore indexes are used in modern data warehouses as the standard for storage and query in big data warehousing fact tables.

There are two advantages of using this while comparing with a traditional row-oriented Data warehouse.

10x performance in query performance

10 x data compression

Option A is correct: Using clustered columnstore will increase the query performance.

Reference: For more details on columnstore indexes, please refer to the following document: https://docs.microsoft.com/en-us/sql/relational-databases/indexes/columnstore-indexes-overview?view=sql-server-ver15

Domain : Design and implement data security

Q18 : A famous fintech startup is setting up its data solution using Azure Synapse analytics. As part of compliance, the company has decided that only the finance managers should be able to see the Bank Account Number and not anyone else. Which of the following is best suited in this scenario?

A. Firewall rules to block IP

B. Row-level security

C. Column-level security

D. Azure RBAC role

Correct Answer: C

Explanation:

Column-level security will control the access to particular columns based on the user membership. In the case of sensitive data, we can decide which user or group can access a particular column. In this question, the restriction should be given to Bank account numbers. So, ideally column-level security can be used.

Option A is incorrect: Firewall block will completely block access to the database.

Option B is incorrect: Row-level security will prevent access to row and is not required.

Option C is correct: It will be the best solution.

Option D is incorrect: Azure RBAC cannot control access to a particular column.

Reference: To know more, please refer to the docs below: https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/column-level-security

Domain : Design and implement data storage

Q19 : A famous IOT devices company collects the metadata about its sensors in the field in reference data. Which of the following services can be used as input for this type of data?

A. Azure SQL

B. Blob Storage

C. Azure Event Hub

D. Azure IOT hub

Correct Answers: A and B

Explanation:

Reference data is a fixed data set that is static or, in some cases, changes slowly. Here the metadata values of sensors are slowly changing and thus can be considered as reference data as the question tells. Azure blob storage can ingest this type of data, in which the data is modeled to be a sequence of blobs in ascending order of the date/time specified in the blob name. Similarly, Azure SQL also can intake reference data. In this case, the data is retrieved by Job in stream analytics and is stored with snapshot memory for further processing.

Options A and B correct: Azure SQL and Blob storage are supported input services for reference data.

Options C and D incorrect: Azure Event Hub and IoT hub are not supported.

Reference: To know more, please refer to the docs below: https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-use-reference-data

Domain : Design and develop data processing

Q20 : You have an Azure Data Factory with Self Hosted Runtime Integration Runtime (SHIR) set up on Azure VM. During a regional failure/disaster, what is your best option for Data redundancy?

A. Utilize Microsoft managed Regional Failover by Azure Data factory

B. Use Azure Site Recovery for VM failover

C. Data is by default replicated to the paired region for Azure VM and does automatic failover

D. Utilize automatic Regional Failover for Azure VM

Correct Answer: B

Explanation:

Data redundancy is essential in the case of critical workloads. Azure Data Factory with default Azure managed Integration runtime environment has an option for automatic / Microsoft managed failover. So, if a disaster or any other thing is causing a region failure, Microsoft managed failover occurs in the paired region. Then you will be able to access the Azure Data Factory resources after that.

But in this case, the Integration runtime is SHIR. And it is using Azure VMs for its infrastructure.

In this case, the best option will be to configure Azure Site Recovery for Azure VMs and create automatic/ manual cutover to the failover region.

Option A is incorrect: This will not work for SHIR and only works when the runtime is Azure integrated runtime for the Azure Data Factory.

Option B is correct: Site recovery for Azure VM is the best option. When a region failure occurs, we can quickly do a failover to the next region.

Option C is incorrect: Azure will not replicate data of Azure VMs by default to paired regions or do an automatic failover. We have to set up an Azure Site Recovery service.

Option D is incorrect: Similar to option C, automatic failover is not an option for Azure VM, unless it is configured by Site Recovery. So, it is not the best answer.

Reference: To know more, please refer to the docs below: https://docs.microsoft.com/en-us/azure/data-factory/concepts-data-redundancy

Domain : Design and implement data storage

Q21 : Which one of the following T-SQL commands is useful to check the disk space usage, data skew used for tables in the SQL data warehouse database?

A. DBCC PDW_SHOWSPACEUSED

B. DBCC PDW_SHOWPARTITIONSTATS

C. DBCC PDW_SHOWEXECUTIONPLAN

D. None of the above

Correct Answer: A

Explanation:

Option A is correct because the DBCC PDW_SHOWSPACEUSED T-SQL command is used to display the number of rows, disk space used for tables in SQL DW DBs.

Option B is incorrect because DBCC PDW_SHOW PARTITIONSTATS T-SQL command is to display the number of rows & size of each partition in the SQL DW table.

Option C is incorrect because DBCC PDW_SHOWEXECUTIONPLAN T-SQL command is used to provide SQL server query execution plans in Synapse analytics / PDW.

References:

https://docs.microsoft.com/en-us/sql/t-sql/database-console-commands/dbcc-pdw showexecutionplan-transact-sql?view=azure-sqldw-latest

https://docs.microsoft.com/en-us/sql/t-sql/database-console-commands/dbcc-pdw-showpartitionstats transact-sql?view=azure-sqldw-latest

https://docs.microsoft.com/en-us/sql/t-sql/database-console-commands/dbcc-pdw-showspaceused transact-sql?toc=/azure/synapse-analytics/sql-data-warehouse/toc.json&bc=/azure/synapse analytics/sql-data-warehouse/breadcrumb/toc.json&view=azure-sqldw-latest&preserve-view=true

Domain : Design and develop data processing

Q22 : Bryan is executing an init script which is required to run a bootstrap script during the Databricks Spark driver or worker node startup.

Which kind of init script can he choose?

A. Global

B. Job

C. Cluster-scoped

D. None of the above

Correct Answer: C

Explanation:

Option A is incorrect because the global type of init script can’t execute on model serving clusters, and it only works on clusters on the same workspace.

Option B is incorrect because there’s no init script type as Job in Databricks.

Option C is correct because the Cluster-scoped init script type works for every Databricks cluster configured.

Reference: https://docs.microsoft.com/en-us/azure/databricks/clusters/init-scripts

Domain : Design and develop data processing

Q23 : The Complex event processing streaming solution which Jeffrey is working on the IoT platform, is a hybrid cloud platform where few data sources are transformed into on-premises Big Data platform. The on-premises data center and Azure services are connected via a virtual network gateway.

What kind of resources can he choose for this on-premises Big data platform connected to Azure via virtual network gateway, complex data processing and execution UDF jobs on java?

A. Spark Structured Streaming / Apache Storm

B. Apache Ignite

C. Apache Airflow

D. Apache Kafka

E. None of the above

Correct Answer: A

Explanation:

Option A is correct because Spark Structure Streaming/ Apache Storm can be used for complex event processing for real-time data streams on-premises.

Option B is incorrect because Apache ignite can’t help with real-time event processing with UDF jobs processing.

Option C is incorrect because Apache Airflow is a platform for programmatically authoring, monitoring workflows.

Option D is incorrect because Apache Kafka is used for publishing and consuming event streams in pub-sub scenarios.

Reference: https://docs.microsoft.com/en-us/azure/stream-analytics/streaming-technologies

Domain : Design and implement data storage

Q24 : Nicole is working on migrating on-premises SQL Server databases to Azure SQL data warehouse (Synapse dedicated SQL pools) tables. The tables of the dedicated SQL pools of Synapse Analytics require partition. She’s designing SQL table partitions for this data migration to the Azure Synapse. The partition of the tables already contains the data, and she’s looking for the most efficient method to split the partitions in the dedicated SQL pool tables.

What T-SQL statement can she use for splitting partitions that contain data?

A. CTAS

B. CETAS

C. OPENROWSET

D. Clustered Columnstore Indexes

Correct Answer: A

Explanation:

Option A is correct because the CTAS statement can be used as the most efficient method while splitting the partitions that contain data.

Option B is incorrect because CETAS is used in the dedicated SQL pool of Synapse for creating External table and data export operations in parallel for Hadoop, Azure Blob Storage, and ADLS Gen2.

Option C is incorrect because the OPENROWSET function in Synapse SQL reads the content of the file(s) from a data source and returns the content as a set of rows.

Option D is incorrect because Clustered column store tables offer both the highest level of data compression and the best overall query performance. It doesn’t help in partition splitting.

Domain : Monitor and optimize data storage and data processing

Q25 : Which of the following five kinds of log types can Phil select for storing Databricks Diagnostic logs?

A. Secrets

B. RDP

C. Network

D. DBFS

E. Keys

F. SQL permissions

G. Accounts

H. Spark

I. Ssh

J. Ambari

Correct Answers: A, D, F, G and I

Explanation:

Option A is correct because the Secrets log can be stored as Diagnostics logs for Databricks.

Option B is incorrect because RDP can’t be used as a diagnostic log type for Databricks.

Option C is incorrect because network flow logs can’t be stored as diagnostics log types for Databricks.

Option D is correct because DBFS logs can be stored as databricks diagnostics logs.

Option E is incorrect because Keys can’t be stored as Databricks diagnostics logs.

Option F is correct because SQL permissions can be stored as Databricks diagnostic log type.

Option G is correct because databricks accounts logs can be stored as diagnostic logs.

Option I is correct because ssh logs can also be stored as Databricks diagnostic log type.

Option J is incorrect because Ambari logs can’t be stored as Databricks diagnostics log type.

Domain: Develop Data Processing

Q26 : A Company has received a new project for migrating data from Amazon S3 to Azure Data Lake Storage Gen2. There is a requirement to create a pipeline with approximate data is less than 10 TB. Which of the following is the more efficient and easier-to-use tool to perform the migration?

A. Copy Data Tool

B. Configure SSIS

C. Copy Data using Data Flows

D. None of the above

Correct Answer: A

Option A is Correct. If you want to copy a small data volume from AWS S3 to Azure (for example, less than 10 TB), the Azure Data Factory Copy Data tool is more efficient and easier to use.

Option B is incorrect. Because SSIS is not used t copy data from AWS s3 to Azure, it’s used to migrate data from on-premises to the cloud.

Option C is incorrect. Copy data using Data Flows are used for transformation. It is not used for copying/migrating the data from AWS S3 to Azure.

References:

- Migrate data from Amazon S3 to Azure Data Lake Storage Gen2 – Azure Data Factory | Microsoft Learn

- Copy Data tool – Azure Data Factory & Azure Synapse | Microsoft Learn

Domain: Develop Data Processing

Q27 : Arrange the basic steps for implementing and Designing polyBase ETL for a dedicated SQL pool in the correct order.

A. Load the data into dedicated SQL pool staging tables using PolyBase

B. Extract the source data into text files

C. Insert the data into production tables

D. Transform the data

E. Prepare the data for loading

F. Land the data into Azure Blob storage or Azure Data Lake Store

Correct Answer: B, F, E, A, D and C

Polybase is a technology that accesses external data stored in Azure Blob Storage or Azure Data Lake Storage via T-SQL language. Extract, Load, and Transform (ELT) is a process by which data is extracted from a source system, loaded into a data warehouse, and then transformed.

The basic steps for implementing a PolyBase ELT for a dedicated SQL pool are:

B. Extract the source data into text files.

F. Land the data into Azure Blob storage or Azure Data Lake Store.

E. Prepare the data for loading.

A. Load the data into dedicated SQL pool staging tables using PolyBase.

D. Transform the data.

C. Insert the data into production tables.

Reference:

Domain: Secure, Monitor, and Optimize Data Storage and Data Processing

Q28: You monitor Azure Stream Analytics Job. You need to ensure that the job is having enough streaming units provisioned. You configure monitoring of the Streaming Unit (SU) memory% utilization metrics. Which additional metrics you should monitor?

A. Function events

B. Late Input Events

C. Backlogged Input Events

D. Watermark Delay

Correct Answers: C and D

Option C is correct. Backlogged Input Events. A number of input events are backlogged.

Option D is Correct. Watermark Delay. Maximum watermark delay across all partitions of all outputs in the job.

The SU memory% utilization metric, which ranges from 0% to 100%, describes the memory consumption of your workload. For a streaming job with a minimal footprint, this metric is usually between 10% to 20%. If SU% utilization is high (above 80%), or input events get backlogged (even with a low SU% utilization since it doesn’t show CPU usage), your workload likely requires more compute resources, which requires you to increase the number of SUs. It’s best to keep the SU metric below 80% to account for occasional spikes.

To react to increased workloads and increase streaming units, consider setting an alert of 80% on the SU Utilization metric. Also, you can use watermark delay and backlogged events metrics to see if there’s an impact.

Option A is Incorrect. Function Events are not used to monitor SU memory %, It’s used to find a number of failed Azure Machine Learning function calls.

Option B is Incorrect. Late Inout Events is not used for monitoring SU memory%, it’s used to monitor events that arrived later than the configured tolerance window for late arrivals.

References:

https://learn.microsoft.com/en-us/azure/stream-analytics/stream-analytics-time-handling

Azure Stream Analytics job metrics | Microsoft Learn

Domian: Design and Implement Data storage

Q29: Moving the data from a single input dataset to a single output dataset, with a process in between is called Data Lineage for 1:1 operation. [State True or False]

A. True

B. False

Correct Answer: A

The common pattern for capturing data lineage is moving data from a single input dataset to a single output dataset, with a process in between.

- source/input: Customer (SQL Table)

- sink/output: Customer1.csv (Azure Blob)

- process: CopyCustomerInfo1#Customer1.csv (Data Factory Copy activity)

Reference:

Connect to Azure Data Factory – Microsoft Purview | Microsoft Learn

Domain: Secure, Monitor, and Optimize Data Storage and Data Processing

Q30: The company want to design an E-commerce data solution, in which you need to prevent unauthorized access to sensitive data information of the customer, which is credit card number and phone number information.

You are asked to recommend a solution that must prevent viewing full information of the sensitive information of the customer. For example, credit card should be displayed as xxxx-xxxx-xxxx-9876. What of the following method is used to protect viewing sensitive data?

A. Table partitions

B. Column Encryption

C. Dynamic data masking

D. Sensitive classifications

Correct Answer: C

Option C is correct. Dynamic data masking, It’s a policy-based security feature that hides the sensitive data in the result set of a query over designated database fields, while the data in the database is not changed.

Option A is incorrect. Table partitions enable you to divide your data into smaller groups of data. It cannot protect sensitive data.

Option B is incorrect. Column Encryption is also preventing access to sensitive data, but we cannot view the data as masking the data.

Option D is incorrect. Sensitive classifications are used to apply security controls and access policies.

Reference:

Dynamic data masking – Azure SQL Database | Microsoft Learn

Summary

We are hopeful that you are able to understand an outline of the DP-203 exam with this free test. To get a Bird’s eye view on the DP-203 exam, go through Whizlabs Practice tests on our official page, and learn the concepts with elaborate explanations. Preparation is always the key to success. Spend more time on learning through DP-203 free questions and practice tests before attempting the real exams. Keep learning!

- Top 10 Highest Paying Cloud Certifications in 2024 - March 1, 2023

- 12 AWS Certifications – Which One Should I Choose? - February 22, 2023

- 11 Kubernetes Security Best Practices you should follow in 2024 - May 30, 2022

- How to run Kubernetes on AWS – A detailed Guide! - May 30, 2022

- Free questions on CompTIA Network+ (N10-008) Certification Exam - April 13, 2022

- 30 Free Questions on Microsoft Azure AI Fundamentals (AI-900) - March 25, 2022

- How to Integrate Jenkins with GitHub? - March 22, 2022

- How to Create CI/CD Pipeline Inside Jenkins ? - March 22, 2022