Are you looking for free questions and answers to prepare for the AWS Certified Advanced Networking Specialty exam?

Here are our newly updated 25+ free questions on the AWS Certified Advanced Networking Specialty exam to familiarize you with the actual exam. This sample question set gives you detailed information on the AWS Certified Advanced Networking Specialty exam pattern, question format, difficulty level of the questions, and the time needed to answer each question.

To validate your knowledge and understanding of the concepts with real-time, scenario-based AWS ANS-C01 questions, we strongly suggest practicing with AWS Advanced Networking Specialty Certification practice tests.

The AWS Advanced Networking Specialty certification practice exam aids in determining which areas you are knowledgeable about and which ones may require additional study to pass the actual AWS Certified Advanced Networking – Specialty exam with a high score.

Domain: Network Implementation

Question 1 : A media company is using a single Network Load Balancer (NLB) to load balance traffic to backend applications running on Amazon ECS in each Availability Zone. Amazon ECS containers are placed in 3 Availability Zones and access applications deployed in the same account across these 3 Availability Zones. It has been observed that containers are accessing applications deployed in other Availability Zones, leading to high latency and high cost.

Which of the following solutions can be implemented so that containers access applications in their local Availability Zone?

A. Append the Region name to the NLB DNS name so that it resolves to the IP address of the local NLB node

B. Disable cross-zone load balancing to distribute traffic to application nodes within each Availability Zone

C. Enable cross-zone load balancing to distribute traffic to application nodes within each Availability Zone

D. Prepend the Availability Zone name to the NLB DNS name so that it resolves to the IP address of the local NLB node

Correct Answer: D

Explanation:

A Network Load Balancer has one node, with one IP address, in each enabled Availability Zone. When the NLB is enabled in 3 Availability Zones, its DNS name returns the IP addresses of all 3 NLB nodes.

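To lower latency and reduce inter-Availability Zone costs, containers should reach applications through the NLB node in their own Availability Zone instead of a node in another Availability Zone. This can be done by adding the Availability Zone name as a prefix to the NLB DNS name (for example, us-east-2b.<nlb-dns-name>), which resolves only to the IP address of the NLB node in that zone. With cross-zone load balancing disabled (the NLB default), that node then forwards traffic only to targets in the same zone.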
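A minimal Python sketch of the idea, assuming the NLB DNS name follows the layout `<name>-<id>.elb.<region>.amazonaws.com`. The function names, the example DNS name, and the AZ value are hypothetical; in practice a container would learn its own Availability Zone from its environment (for example, the ECS task metadata endpoint) rather than from a hard-coded string.

```python
import socket


def az_specific_dns_name(nlb_dns_name: str, az_name: str) -> str:
    """Return the DNS name that resolves only to the NLB node in az_name.

    Assumes the NLB name follows <name>-<id>.elb.<region>.amazonaws.com.
    """
    name = nlb_dns_name.rstrip(".")
    labels = name.split(".")
    if len(labels) < 5 or labels[-4] != "elb":
        raise ValueError(f"unexpected NLB DNS name: {nlb_dns_name}")
    region = labels[-3]
    # An AZ name is the Region name followed by one zone letter, e.g. us-east-2b.
    if az_name[:-1] != region or not az_name[-1:].isalpha():
        raise ValueError(f"{az_name} is not an Availability Zone in {region}")
    return f"{az_name}.{name}"


def resolve_local_nlb_node(nlb_dns_name: str, az_name: str) -> list[str]:
    """Resolve the AZ-specific name to the IPv4 address(es) of the local NLB node."""
    host = az_specific_dns_name(nlb_dns_name, az_name)
    infos = socket.getaddrinfo(host, None, family=socket.AF_INET, type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


print(az_specific_dns_name("my-nlb-1234567890abcdef.elb.us-east-2.amazonaws.com", "us-east-2b"))
# us-east-2b.my-nlb-1234567890abcdef.elb.us-east-2.amazonaws.com
```

The name check runs locally; `resolve_local_nlb_node` performs a real DNS lookup, so it only returns addresses for an NLB that actually exists.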

Option A is incorrect because the NLB DNS name already contains the Region name, and resolving it returns the IP addresses of the NLB nodes in every Availability Zone.

Option B is incorrect because cross-zone load balancing is already disabled by default on a Network Load Balancer, and the setting only controls how each NLB node distributes traffic to targets. Clients that resolve the regional DNS name still receive the IP addresses of all NLB nodes and can connect to a node in another Availability Zone, so this does not make containers use applications in their local Availability Zone.

Option C is incorrect because enabling cross-zone load balancing distributes traffic across targets in all Availability Zones, which increases inter-Availability Zone traffic instead of keeping it local.

For more information on adding the AZ name to the NLB DNS name, refer to the following URL, https://aws.amazon.com/blogs/networking-and-content-delivery/resolve-dns-names-of-network-load-balancer-nodes-to-limit-cross-zone-traffic/

Domain: Network Design

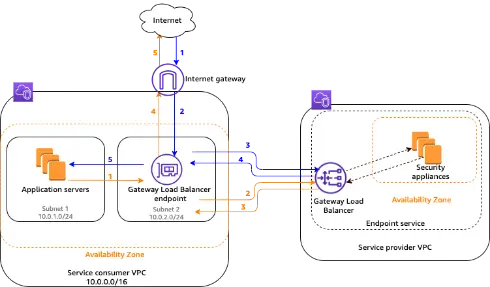

Question 2 : A state university has deployed e-learning courses on Amazon EC2 instances in a service consumer VPC. Global users access these courses through the internet gateway attached to this VPC. To strengthen security, the university has deployed a security appliance and a Gateway Load Balancer in a service provider VPC, and a Gateway Load Balancer endpoint in the service consumer VPC. The Amazon EC2 instances are in the application server subnet, while the Gateway Load Balancer endpoint is in the Gateway Load Balancer endpoint subnet. All traffic to and from the internet through the internet gateway of the service consumer VPC must pass through the security appliance in the service provider VPC, where it is inspected for malware or security breaches.

The university's IT team is looking for your suggestions on configuring the route tables for the internet gateway, the application server subnet in the service consumer VPC, and the Gateway Load Balancer endpoint subnet.

Which of the following are the correct route table entries that need to be configured?

A. 1) In the internet gateway route table, the route for the application server subnet should have the Gateway Load Balancer endpoint as its target

2) In the application server subnet route table, the default route should have the internet gateway as its target

3) In the Gateway Load Balancer endpoint subnet route table, the default route should have the internet gateway as its target

B. 1) In the internet gateway route table, the route for the application server subnet should have the Gateway Load Balancer endpoint as its target

2) In the application server subnet route table, the default route should have the Gateway Load Balancer endpoint as its target

3) In the Gateway Load Balancer endpoint subnet route table, the default route should have the internet gateway as its target

C. 1) In the internet gateway route table, the route for the application server subnet should have the security appliance subnet as its target

2) In the application server subnet route table, the default route should have the Gateway Load Balancer endpoint as its target

3) In the Gateway Load Balancer endpoint subnet route table, the default route should have the security appliance subnet as its target

D. 1) In the internet gateway route table, the route for the application server subnet should have the security appliance subnet as its target

2) In the application server subnet route table, the default route should have the internet gateway as its target

3) In the Gateway Load Balancer endpoint subnet route table, the default route should have the internet gateway as its target

Correct Answer: B

Explanation:

Traffic between the Amazon EC2 instances and the internet gateway flows through the Gateway Load Balancer endpoint, as shown in the diagram accompanying this question.

Route entries should be added based on the following logic:

1) Traffic from the internet to the Amazon EC2 instances in the application server subnet should first be sent to the Gateway Load Balancer endpoint. This route goes in a route table associated with the internet gateway (an edge association).

2) In the application server subnet, all traffic destined for the internet should first be sent to the Gateway Load Balancer endpoint.

3) Traffic that returns from the security appliance through the Gateway Load Balancer endpoint and is destined for the application server subnet is already covered by the local route, because the Gateway Load Balancer endpoint and the application servers are in the same VPC.

4) Traffic that returns from the security appliance through the Gateway Load Balancer endpoint and is destined for the internet needs a default route in the endpoint subnet pointing to the internet gateway.
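To see how the three route tables combine, here is a small Python model that applies longest-prefix matching (the rule VPC route tables use) to each table from answer B. The CIDR blocks, the target labels (`igw`, `gwlbe`, `local`), and the `next_hop` helper are hypothetical; the model only traces which next hop each table picks and does not model the security appliance itself.

```python
import ipaddress

# Hypothetical addressing for the service consumer VPC.
VPC_CIDR = "10.0.0.0/16"
APP_SUBNET = "10.0.1.0/24"  # application server subnet

# Route tables from answer B: destination CIDR -> target.
ROUTE_TABLES = {
    # Edge-associated with the internet gateway (ingress routing).
    "igw": {VPC_CIDR: "local", APP_SUBNET: "gwlbe"},
    "app_subnet": {VPC_CIDR: "local", "0.0.0.0/0": "gwlbe"},
    "gwlbe_subnet": {VPC_CIDR: "local", "0.0.0.0/0": "igw"},
}


def next_hop(table: str, destination: str) -> str:
    """Return the target of the most specific route that matches destination."""
    address = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in ROUTE_TABLES[table].items()
        if address in ipaddress.ip_network(cidr)
    ]
    if not matches:
        raise LookupError(f"no route to {destination} in {table}")
    return max(matches, key=lambda match: match[0].prefixlen)[1]


instance, internet_host = "10.0.1.10", "203.0.113.5"
# Inbound: internet gateway -> endpoint -> (appliance) -> endpoint subnet -> instance.
print(next_hop("igw", instance), next_hop("gwlbe_subnet", instance))  # gwlbe local
# Outbound: instance -> endpoint -> (appliance) -> endpoint subnet -> internet gateway.
print(next_hop("app_subnet", internet_host), next_hop("gwlbe_subnet", internet_host))  # gwlbe igw
```

The more specific application subnet route in the internet gateway table is what pulls inbound traffic away from the plain local route and into the endpoint.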

Option A is incorrect because all traffic must pass through the security appliance in the service provider VPC, so the default route for the application server subnet must target the Gateway Load Balancer endpoint, not the internet gateway.

Option C is incorrect because, in the internet gateway route table, the route for the application server subnet must target the Gateway Load Balancer endpoint, not the security appliance subnet. Also, the default route in the Gateway Load Balancer endpoint subnet must target the internet gateway, not the security appliance subnet.

Option D is incorrect because, in the internet gateway route table, the route for the application server subnet must target the Gateway Load Balancer endpoint, not the security appliance subnet. Also, the default route in the application server subnet must target the Gateway Load Balancer endpoint, not the internet gateway.

For more information on routing with Gateway Load Balancer, refer to the following URL, https://docs.aws.amazon.com/elasticloadbalancing/latest/gateway/getting-started.html

Domain: Network Implementation

Question 3 : An engineering firm has deployed dual AWS Direct Connect links from an on-premises location to the AWS Cloud. These links terminate on AWS Transit Gateway through transit virtual interfaces and are used to access multiple VPCs. Outgoing traffic from on-premises to AWS prefers the primary AWS Direct Connect link, but return traffic from AWS to on-premises is load balanced across both the primary and secondary links. The firm's IT team requires return traffic to prefer the primary link instead of being load balanced across both links.

What BGP communities can be added to meet this requirement?

A. Apply local preference BGP community tag 7224:7300 to the primary virtual interface & local preference BGP community tag 7224:7100 to the secondary virtual interface

B. Apply local preference BGP community tag 7224:7100 to the primary virtual interface & local preference BGP community tag 7224:7300 to the secondary virtual interface

C. Apply local preference BGP community tag 7224:9300 to the primary virtual interface & local preference BGP community tag 7224:8200 to the secondary virtual interface

D. Apply local preference BGP community tag 7224:8200 to the primary virtual interface & local preference BGP community tag 7224:9300 to the secondary virtual interface

Correct Answer: A

Explanation:

Local preference BGP communities control how traffic flows from AWS to on-premises. They can be used to load balance traffic across multiple links or to make AWS prefer a primary link over a secondary link. In this case there are multiple AWS Direct Connect links, so local preference BGP communities can be used to make AWS prefer one link over the other.

Local preference BGP communities have the following options:

- 7224:7100 (low preference)

- 7224:7200 (medium preference)

- 7224:7300 (high preference)

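To make AWS prefer the primary Direct Connect link for return traffic, tag the prefixes advertised over the primary virtual interface with 7224:7300 and those advertised over the secondary virtual interface with a lower preference such as 7224:7100.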
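The comparison AWS makes can be pictured with a small Python model: each virtual interface advertises the same on-premises prefix with one local preference community, and the path or paths with the highest preference win, with a tie meaning traffic is load balanced. The VIF names and the `preferred_paths` helper are hypothetical, and the sketch assumes every advertisement carries exactly one of the three local preference communities; it does not model other BGP tie-breakers.

```python
# Local preference communities mapped to a comparable rank (low, medium, high).
LOCAL_PREF_RANK = {"7224:7100": 1, "7224:7200": 2, "7224:7300": 3}


def preferred_paths(advertisements: dict[str, str]) -> list[str]:
    """Return the VIF(s) AWS would prefer for return traffic to one prefix.

    advertisements maps a (hypothetical) VIF name to the local preference
    community attached to the prefix advertised over that VIF. More than one
    result means traffic is load balanced across those VIFs.
    """
    ranks = {}
    for vif, community in advertisements.items():
        if community not in LOCAL_PREF_RANK:
            raise ValueError(f"{community} is not a local preference community")
        ranks[vif] = LOCAL_PREF_RANK[community]
    best = max(ranks.values())
    return sorted(vif for vif, rank in ranks.items() if rank == best)


print(preferred_paths({"primary-vif": "7224:7300", "secondary-vif": "7224:7100"}))  # ['primary-vif']
print(preferred_paths({"primary-vif": "7224:7200", "secondary-vif": "7224:7200"}))  # ['primary-vif', 'secondary-vif']
```

Passing the values from options C or D raises `ValueError`, reflecting that 7224:9300 and 7224:8200 are not local preference communities.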

Option B is incorrect because it applies the high-preference community 7224:7300 to the secondary link, which would make AWS prefer the secondary link for return traffic.

Option C is incorrect because 7224:9300 and 7224:8200 are not local preference communities. They are scope communities used with public virtual interfaces: tagging the prefixes you advertise to Amazon with 7224:9300 propagates them globally across the AWS network, and Amazon tags the public prefixes it advertises to you with 7224:8200 when they originate from the same continent as the AWS Direct Connect location. Neither community makes AWS prefer the primary link.

Option D is incorrect because 7224:8200 and 7224:9300 are public virtual interface scope communities, not local preference communities: 7224:9300 propagates your advertised prefixes globally in the AWS network, and Amazon applies 7224:8200 to public prefixes from the same continent as the AWS Direct Connect location. Tagging the primary virtual interface with 7224:8200 will not make AWS prefer the primary link.

For more information on using BGP communities with AWS Direct Connect, refer to the following URL, https://docs.aws.amazon.com/directconnect/latest/UserGuide/routing-and-bgp.html

Domain: Network Design

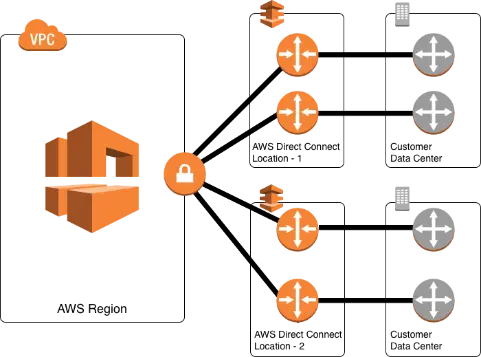

Question 4 : A company has deployed a new application in the AWS Cloud with a peak bandwidth requirement of 2 Gbps. The company plans to use AWS Direct Connect to access this application from an on-premises location. Because the application is critical, connectivity must be fully resilient with proper backup in place; any link outage would have a huge financial impact on the company.

Which of the following solutions can be implemented to provide maximum resiliency for critical applications without any performance degradation?

A. Create a new 2 Gbps AWS Direct Connect link backed up by a 2 Gbps VPN connection

B. Create two new 1 Gbps AWS Direct Connect links at a single AWS Direct Connect location, terminating on two different routers

C. Create new 2 Gbps AWS Direct Connect links terminating at two AWS Direct Connect locations, on two different routers at each location

D. Create new 2 Gbps AWS Direct Connect links terminating at two AWS Direct Connect locations, on a single router at each location

Correct Answer: C

Explanation:

To get maximum resiliency on AWS Direct Connect links, links need to be terminated on two separate routers at each Direct Connect location. Also, to get location-level redundancy, AWS Direct Connect links need to be terminated at two different AWS Direct Connect locations. This type of connectivity will provide router level as well as location level resiliency for the critical application.
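The capacity side of this reasoning can be checked with simple arithmetic. The Python sketch below computes the worst-case bandwidth left after losing any single link, router, or location for hypothetical layouts matching options B, C, and D, assuming option C means one link on each of two routers at each of the two locations. Location and router names are made up, and failures on the on-premises side are ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    location: str  # AWS Direct Connect location (hypothetical name)
    router: str    # device the link terminates on (unique across locations)
    gbps: int


def worst_case_capacity(links: list[Link]) -> int:
    """Lowest total bandwidth left after any single link, router, or location fails."""
    total = sum(link.gbps for link in links)
    worst = total
    # Losing one link.
    for link in links:
        worst = min(worst, total - link.gbps)
    # Losing one router or one whole location.
    for attr in ("router", "location"):
        for failed in {getattr(link, attr) for link in links}:
            lost = sum(link.gbps for link in links if getattr(link, attr) == failed)
            worst = min(worst, total - lost)
    return worst


option_b = [Link("LOC-1", "loc1-r1", 1), Link("LOC-1", "loc1-r2", 1)]
option_c = [Link("LOC-1", "loc1-r1", 2), Link("LOC-1", "loc1-r2", 2),
            Link("LOC-2", "loc2-r1", 2), Link("LOC-2", "loc2-r2", 2)]
option_d = [Link("LOC-1", "loc1-r1", 2), Link("LOC-2", "loc2-r1", 2)]

for name, layout in (("B", option_b), ("C", option_c), ("D", option_d)):
    print(name, worst_case_capacity(layout), "Gbps left in the worst case")
# B 0 Gbps left in the worst case
# C 4 Gbps left in the worst case
# D 2 Gbps left in the worst case
```

Under these assumptions only option C keeps more than the 2 Gbps peak after a whole location fails; option D just meets the peak with nothing left for a second failure, and option B can drop to zero.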

The diagram accompanying this question shows AWS Direct Connect connectivity with maximum resiliency.

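Option A is incorrect because, when the AWS Direct Connect link is down, the application may face performance issues over the VPN link, which runs over the internet and supports up to 1.25 Gbps per VPN tunnel, below the 2 Gbps peak.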

Option B is incorrect because both links terminate at a single AWS Direct Connect location, so an issue at that location would cause a complete outage. In addition, if one of the two 1 Gbps links fails, the remaining link cannot carry the 2 Gbps peak.

Option D is incorrect because terminating the links on a single router at each AWS Direct Connect location does not provide router-level redundancy within each location.

For more information on redundancy with AWS Direct Connect, refer to the following URL, https://aws.amazon.com/directconnect/resiliency-recommendation/

Domain: Network Security, Compliance, and Governance

Question 5 : A healthcare company is setting up hybrid connectivity using AWS Direct Connect between its on-premises locations and the AWS Cloud. The company wants to secure all data flowing over this link, including control plane traffic. The proposed solution should not reduce throughput.

Which of the following encryption solutions is best suited to meet this requirement?

A. Use SSL/TLS encryption for applications over AWS Direct Connect

B. Use IPsec VPN over AWS Direct Connect

C. Use MACsec with AWS Direct Connect

D. Use GRE VPN over AWS Direct Connect

Correct Answer: C

Explanation:

MACsec (IEEE 802.1AE) is a Layer 2 security standard that provides confidentiality and integrity for all traffic on the link, including control plane protocols. MACsec encryption is performed in hardware, which provides high-speed encryption. AWS Direct Connect supports MACsec on dedicated connections such as 10 Gbps and 100 Gbps links at supported locations, providing high-speed data encryption from an on-premises location to AWS.

Option A is incorrect because SSL/TLS encrypts data at the upper layers and only for applications that use it; it does not protect control plane protocols on the Ethernet link.

Option B is incorrect because IPsec works at Layer 3 and does not protect control plane traffic such as the BGP session on the underlying virtual interface. IPsec VPN throughput is also limited (up to 1.25 Gbps per AWS Site-to-Site VPN tunnel), so it does not provide high-speed encryption.

Option D is incorrect because GRE tunnels provide encapsulation, not encryption, of data over AWS Direct Connect.

For more information on MACsec with AWS Direct Connect, refer to the following URL, https://aws.amazon.com/blogs/networking-and-content-delivery/adding-macsec-security-to-aws-direct-connect-connections/

Domain: Network Design

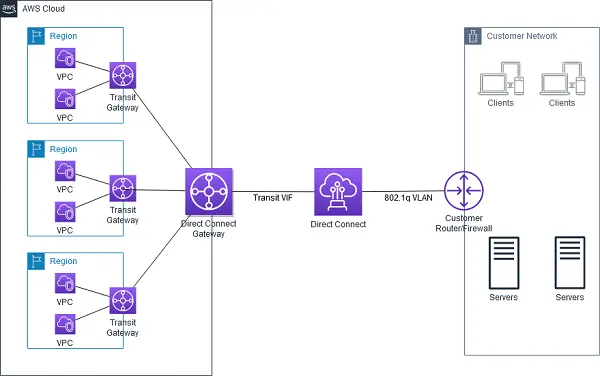

Question 6 : An insurance company is planning to set up hybrid connectivity between its on-premises locations and AWS. To establish this connectivity, the company has procured a 500 Mbps hosted connection from an AWS Direct Connect Partner. Multiple VPCs in different Regions need to be accessed from a single on-premises location.

What is the simplest way to implement this connectivity?

A. Create a transit virtual interface to a Direct Connect gateway and associate the Direct Connect gateway with AWS Transit Gateways that have VPC attachments to the VPCs in each Region

B. Create a public virtual interface to Direct Connect gateway & terminate it to AWS Transit gateway which has VPC attachments to multiple VPCs in different regions

C. Create a public virtual interface over this connection. Establish VPN over this AWS Direct Connect & terminate it to AWS Transit gateway which has VPC attachments to multiple VPCs

D. Create a transit virtual interface over the Direct Connect link. Establish VPN over this AWS Direct Connect and terminate it to AWS Transit gateway which has VPC attachments to multiple VPCs

Correct Answer: A

Explanation:

AWS Transit Gateway can be used as a transit hub to connect multiple VPCs to on-premises locations. For this, a transit virtual interface is created on the AWS Direct Connect connection and attached to a Direct Connect gateway that is associated with AWS Transit Gateway. With this design, a single Direct Connect link can reach multiple VPCs, resulting in a simpler, cost-effective solution. AWS Direct Connect supports transit virtual interfaces on connections of any speed, including hosted connections such as this 500 Mbps one. Because a transit gateway is a Regional resource, the Direct Connect gateway is associated with a transit gateway in each Region to reach VPCs in different Regions.
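As a rough illustration of the wiring, the Python sketch below issues the three AWS Direct Connect API calls involved: create a Direct Connect gateway, create a transit virtual interface on the hosted connection, and associate the Direct Connect gateway with one transit gateway per Region. It takes the client as a parameter (for example `boto3.client("directconnect")`) so it can be exercised without AWS. The gateway and VIF names, the Amazon-side ASN, and the per-Region allowed prefixes are hypothetical; the VLAN is assumed to be the one assigned to the hosted connection, and optional settings such as BGP auth keys and peer addresses are left to AWS defaults.

```python
def connect_multi_region(dx, connection_id: str, vlan: int, customer_asn: int,
                         allowed_prefixes_by_tgw: dict[str, list[str]]) -> str:
    """Wire one transit VIF to transit gateways in several Regions via a DX gateway.

    dx is a Direct Connect client, e.g. boto3.client("directconnect").
    allowed_prefixes_by_tgw maps a transit gateway ID to the VPC CIDRs it
    should advertise to on-premises. Returns the Direct Connect gateway ID.
    """
    dxgw_id = dx.create_direct_connect_gateway(
        directConnectGatewayName="onprem-dxgw",  # hypothetical name
        amazonSideAsn=64512,                     # hypothetical private ASN
    )["directConnectGateway"]["directConnectGatewayId"]

    # One transit VIF on the (hosted) connection, attached to the DX gateway.
    dx.create_transit_virtual_interface(
        connectionId=connection_id,
        newTransitVirtualInterface={
            "virtualInterfaceName": "onprem-transit-vif",  # hypothetical name
            "vlan": vlan,
            "asn": customer_asn,
            "directConnectGatewayId": dxgw_id,
        },
    )

    # Transit gateways are Regional, so associate one transit gateway per Region.
    for tgw_id, cidrs in allowed_prefixes_by_tgw.items():
        dx.create_direct_connect_gateway_association(
            directConnectGatewayId=dxgw_id,
            gatewayId=tgw_id,
            addAllowedPrefixesToDirectConnectGateway=[{"cidr": c} for c in cidrs],
        )
    return dxgw_id
```

Injecting the client keeps the sketch testable with a stub; against a real account it would be called with a boto3 Direct Connect client and real connection and transit gateway IDs.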

The diagram accompanying this question shows connectivity from an on-premises location to multiple VPCs using AWS Transit Gateway and AWS Direct Connect.

Option B is incorrect because connecting AWS Direct Connect to AWS Transit Gateway requires a transit virtual interface, not a public virtual interface.

Option C is incorrect because, although it would work, it requires additional configuration to build a VPN over AWS Direct Connect. Using a transit virtual interface to AWS Transit Gateway is simpler to deploy.

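Option D is incorrect because adding a VPN on top of the Direct Connect link introduces extra configuration that this requirement does not need. A traditional VPN over AWS Direct Connect is built on a public virtual interface, and although a private IP VPN can run over a transit virtual interface, a transit virtual interface to AWS Transit Gateway without a VPN is the simpler solution.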

For more information on connecting multiple VPCs using AWS Transit Gateway, refer to the following URL, https://docs.aws.amazon.com/whitepapers/latest/aws-vpc-connectivity-options/aws-direct-connect-aws-transit-gateway.html

Domain: Network Management and Operation

Question 7 : A company has deployed an Amazon CloudFront distribution with an Application Load Balancer (ALB) as the origin. The ALB distributes traffic to Amazon EC2 instances deployed in multiple Availability Zones. The operations team monitoring this traffic observes that some user sessions reach the Application Load Balancer directly instead of going through Amazon CloudFront, which puts additional load on the Application Load Balancer.

What corrective actions can be initiated to overcome this problem?

A. Configure host firewall on Amazon EC2 instance & allow only CloudFront CIDR ranges to access

B. Add a custom header in Amazon CloudFront and configure the ALB to forward only requests that contain the custom header to the EC2 instances

C. Create a security group for Application Load Balancer which will allow only Amazon CloudFront CIDR ranges

D. Use WAF at both AWS CloudFront & AWS Application Load Balancer to restrict direct access from the internet

Correct Answer: B

Explanation:

The following steps prevent users from bypassing Amazon CloudFront and accessing the Application Load Balancer directly:

- Configure Amazon CloudFront to add a custom HTTP header to all requests that it forwards to the Application Load Balancer.

- Configure the Application Load Balancer to forward only requests that contain the custom header to the Amazon EC2 instances, and to return an error response (for example, HTTP 403) for all other requests.

This ensures that requests that bypass CloudFront are rejected by the Application Load Balancer instead of reaching the instances. When all users access the application through Amazon CloudFront, it also helps reduce latency and protects against distributed denial of service (DDoS) attacks.
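A minimal Python sketch of both halves of this setup, assuming a hypothetical header name `X-Origin-Verify` and a randomly generated shared secret. `alb_rule_params` builds keyword arguments in the shape of the Elastic Load Balancing v2 `create_rule` API (an `http-header` condition with a `forward` action), `DEFAULT_DENY` is a fixed-response default action, and `simulate_listener` is a simplified local model of the listener decision; it does not reproduce every ALB matching rule (for example, wildcard values).

```python
import hmac
import secrets

HEADER_NAME = "X-Origin-Verify"  # hypothetical custom header name


def new_shared_secret() -> str:
    """Random value that CloudFront adds as the custom header's value."""
    return secrets.token_urlsafe(32)


def alb_rule_params(listener_arn: str, target_group_arn: str, secret: str) -> dict:
    """Keyword arguments in the shape of elbv2 create_rule: forward only on a header match."""
    return {
        "ListenerArn": listener_arn,
        "Priority": 1,
        "Conditions": [{
            "Field": "http-header",
            "HttpHeaderConfig": {"HttpHeaderName": HEADER_NAME, "Values": [secret]},
        }],
        "Actions": [{"Type": "forward", "TargetGroupArn": target_group_arn}],
    }


# Listener default action: anything that did not match the rule above gets a 403.
DEFAULT_DENY = {
    "Type": "fixed-response",
    "FixedResponseConfig": {"StatusCode": "403", "ContentType": "text/plain",
                            "MessageBody": "Access denied"},
}


def simulate_listener(headers: dict[str, str], secret: str):
    """Simplified model of the listener: 'forward' on a header match, otherwise 403."""
    # HTTP header names are case-insensitive, so compare names in lowercase.
    value = next((v for k, v in headers.items() if k.lower() == HEADER_NAME.lower()), None)
    if value is not None and hmac.compare_digest(value.encode(), secret.encode()):
        return "forward"
    return int(DEFAULT_DENY["FixedResponseConfig"]["StatusCode"])
```

In this design the secret lives in two places (the CloudFront origin custom header and the ALB rule), so rotating it means updating both, ideally with a short overlap where the rule accepts either value.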

Option A is incorrect because configuring a host-based firewall adds administrative work. Also, because the Application Load Balancer front-ends these instances, the instances receive traffic from the Application Load Balancer's IP addresses, not from CloudFront, so filtering on CloudFront ranges at the host would not work.

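Option C is incorrect because it adds the complexity of managing and updating security groups with a large number of Amazon CloudFront CIDR ranges. Even with the AWS-managed prefix list for CloudFront, a security group only shows that a request came from CloudFront, not from this company's distribution.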

Option D is incorrect because, although it would work, running WAF rules at both CloudFront and the Application Load Balancer adds latency to the traffic.

For more information on restricting access to the Application load balancer front-ended by Amazon CloudFront, refer to the following URL, https://docs.aws.amazon.com/AmazonCloudFront/latest/DeveloperGuide/restrict-access-to-load-balancer.html

Domain: Network Design

Question 8 : A streaming provider uses Amazon CloudFront to distribute content to users across the globe. The marketing team wants to know users' locations to measure the popularity of content by region. The application team has suggested manipulating HTTP headers by adding a True-Client-IP header to the viewer request. As an AWS expert, you have been asked to create a function for this requirement and deploy it on Amazon CloudFront.

Which function can be configured on CloudFront to provide these details?

A. Use Lambda@Edge function & execute at the edge location

B. Use CloudFront functions & execute at the regional edge location

C. Use Lambda@Edge & execute at the regional edge location

D. Use CloudFront functions & execute at the edge location

Correct Answer: D

Explanation:

CloudFront Functions are suited to lightweight, short-running code that runs at CloudFront edge locations. Typical use cases for CloudFront Functions include:

- Cache key normalization

- Header manipulation

- URL redirects or rewrites

- Request authorization

In this case, the customer needs to manipulate headers by adding a True-Client-IP header to the viewer request. This can be done with a CloudFront function that runs at the edge locations.
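CloudFront Functions are written in JavaScript, so the Python below is only a model of the handler logic, not deployable code. It mirrors the shape of the CloudFront Functions viewer-request event, where the client address is at `event.viewer.ip` and headers are stored as lowercase names mapped to `{"value": ...}` objects; the function name and sample event are hypothetical.

```python
def add_true_client_ip(event: dict) -> dict:
    """Model of a viewer-request handler that copies the viewer IP into a header."""
    request = event["request"]
    client_ip = event["viewer"]["ip"]
    # Header names in the event object are lowercase; each value is wrapped in a dict.
    request["headers"]["true-client-ip"] = {"value": client_ip}
    # Returning the modified request lets CloudFront continue processing it.
    return request


sample_event = {
    "viewer": {"ip": "198.51.100.7"},
    "request": {"method": "GET", "uri": "/video/intro.mp4", "headers": {}},
}
print(add_true_client_ip(sample_event)["headers"])
# {'true-client-ip': {'value': '198.51.100.7'}}
```

The deployed JavaScript version follows the same three steps: read the viewer IP, set the header on the request, and return the request.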

Option A is incorrect because CloudFront Functions are a better option than Lambda@Edge for HTTP header manipulation. Also, Lambda@Edge functions do not run at edge locations; they run at regional edge caches.

Option B is incorrect as CloudFront functions run from edge locations & not from regional edge locations.

Option C is incorrect as for HTTP header manipulation, CloudFront functions are a better option than Lambda@Edge as these are less expensive.

For more information on CloudFront functions, refer to the following URL, https://docs.aws.amazon.com/AmazonCloudFront/latest/DeveloperGuide/edge-functions.html

Domain: Network Implementation

Question 9 : An IT firm is planning to deploy a new application that uses IPv6 addresses. An IPv4-only VPC already exists with an Amazon EC2 instance deployed in it. The EC2 instance is protected by a security group and a custom network ACL. As the network design lead, you have been asked to update the existing VPC to support IPv6.

What steps can be performed to have an existing VPC with IPv4 addressing migrated to IPv6 addressing?

A. Associate an IPv6 CIDR block with the VPC & subnets. Update route tables & security group rules to include IPv6 addresses. Manually add IPv6 rules to the custom network ACL created for the IPv4 subnets. Select an instance type that supports IPv6 & assign IPv6 addresses to the instance

B. Associate an IPv6 CIDR block with the subnets. Route tables & security group rules are automatically updated with IPv6 addresses. IPv6 rules are automatically added to the custom network ACL created for the IPv4 subnets. Select an instance type that supports IPv6 & assign IPv6 addresses to the instance

C. Disable IPv4 support for the VPC & associate an IPv6 CIDR block with the VPC & subnets. Update route tables & security group rules to include IPv6 addresses. Manually add IPv6 rules to the custom network ACL created for the IPv4 subnets. Select an instance type that supports IPv6 & assign IPv6 addresses to the instance

D. Disable IPv4 support for the VPC & associate an IPv6 CIDR block with the subnets. Update route tables & security group rules to include IPv6 addresses. IPv6 rules are automatically added to the custom network ACL created for the IPv4 subnets. Select an instance type that supports IPv6 & assign IPv6 addresses to the instance

Correct Answer: A

Explanation:

To add IPv6 to an existing VPC, the following steps are required:

1) Associate an IPv6 CIDR block with the VPC and subnets: a new IPv6 CIDR block must be associated with the VPC and then with each subnet.

2) Update route tables: add routes for IPv6 traffic, for example a ::/0 route to the internet gateway for public subnets or to an egress-only internet gateway for private subnets.

3) Update security group rules and network ACLs: security group rules must be updated manually for IPv6 addresses. If the VPC uses the default network ACL with its default rules, rules allowing IPv6 traffic are added automatically. For a custom network ACL, IPv6 rules must be added manually.

4) Change the instance type: required only if the existing EC2 instance type does not support IPv6 (for example, some previous-generation types).

5) Assign IPv6 addresses to your instances.

6) Configure IPv6 on your instances: this optional step is needed only for some AMIs, depending on the AMI used for the Amazon EC2 instances.
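Steps 1 and 3 involve some address arithmetic. An Amazon-provided IPv6 block for a VPC is a /56, and each subnet traditionally gets a /64 from it; the Python sketch below carves /64 subnet blocks in order and builds custom network ACL entries for IPv6 in the keyword shape of the EC2 `create_network_acl_entry` API. The example CIDR uses the 2001:db8::/32 documentation range, and the subnet IDs, ACL ID, rule numbers, and the choice to allow inbound HTTPS plus outbound ephemeral ports are hypothetical.

```python
import ipaddress


def plan_ipv6_subnets(vpc_ipv6_cidr: str, subnet_ids: list[str]) -> dict[str, str]:
    """Assign one /64 from the VPC's IPv6 block to each subnet, in order."""
    vpc_block = ipaddress.ip_network(vpc_ipv6_cidr)
    if vpc_block.version != 6 or vpc_block.prefixlen > 64:
        raise ValueError("expected an IPv6 CIDR block of /64 or larger")
    available = 2 ** (64 - vpc_block.prefixlen)
    if len(subnet_ids) > available:
        raise ValueError(f"only {available} /64 blocks fit in {vpc_block}")
    blocks = vpc_block.subnets(new_prefix=64)  # generator: yields /64 blocks lazily
    return {subnet_id: str(block) for subnet_id, block in zip(subnet_ids, blocks)}


def custom_nacl_ipv6_entries(nacl_id: str, first_rule: int = 100) -> list[dict]:
    """IPv6 entries a custom network ACL would need (hypothetical HTTPS policy).

    Inbound and outbound rules are numbered independently, so both can use first_rule.
    """
    return [
        # Inbound HTTPS (TCP = protocol "6") from any IPv6 address.
        {"NetworkAclId": nacl_id, "RuleNumber": first_rule, "Protocol": "6",
         "RuleAction": "allow", "Egress": False, "Ipv6CidrBlock": "::/0",
         "PortRange": {"From": 443, "To": 443}},
        # Outbound responses on ephemeral ports, since network ACLs are stateless.
        {"NetworkAclId": nacl_id, "RuleNumber": first_rule, "Protocol": "6",
         "RuleAction": "allow", "Egress": True, "Ipv6CidrBlock": "::/0",
         "PortRange": {"From": 1024, "To": 65535}},
    ]


print(plan_ipv6_subnets("2001:db8:1234:5600::/56", ["subnet-app-a", "subnet-app-b"]))
# {'subnet-app-a': '2001:db8:1234:5600::/64', 'subnet-app-b': '2001:db8:1234:5601::/64'}
```

With boto3, each entry could be applied as `ec2.create_network_acl_entry(**entry)`; security group rules would need a similar manual update.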

Option B is incorrect because an IPv6 CIDR block must be associated with the VPC before it can be associated with subnets. Also, security group rules are not automatically updated for IPv6 and must be updated manually, and a custom network ACL created for IPv4 subnets must also be updated manually with IPv6 rules.

Option C is incorrect because IPv4 does not need to be disabled to associate an IPv6 CIDR block with a VPC; the VPC keeps its IPv4 CIDR block and runs in dual-stack mode.

Option D is incorrect because IPv4 does not need to be disabled to associate an IPv6 CIDR block with a VPC. Also, a custom network ACL created for IPv4 subnets must be updated manually with IPv6 rules.

For more information on associating IPv6 with VPC, refer to the following URL, https://docs.aws.amazon.com/vpc/latest/userguide/vpc-migrate-ipv6.html

Domain: Network Implementation

Question 10 : An R&D firm has built a new graphics-intensive application that requires very low latency. Users will access this application from an on-premises location. The firm has already deployed services in the AWS Cloud and is gradually moving all services from its data center to AWS. The application will use Amazon S3 for storage, which should be accessible with optimal latency.

Which solution can be implemented to meet this requirement?

A. Extend subnets from parent AWS VPC to AWS Outpost. Deploy the application in the AWS Outpost. Access Amazon S3 privately using the Gateway endpoint

B. Extend subnets from parent AWS VPC to AWS Local Zone. Deploy the application in the AWS Local Zone. Access Amazon S3 privately over AWS Private network

C. Extend subnets from parent AWS VPC to AWS Local Zone. Deploy the application in the AWS Local Zone. Access Amazon S3 privately using Gateway endpoint

D. Extend subnets from parent AWS VPC to AWS Outpost. Deploy the application in the AWS Outpost. Access Amazon S3 privately over the AWS private network

Correct Answer: B

Explanation:

AWS Local Zones can be used to deploy certain AWS services, such as compute and storage services (Amazon EC2, Amazon EBS, Amazon FSx, etc.), closer to end users, which lowers latency. A VPC in the parent Region is extended to a Local Zone by creating a subnet in the Local Zone. Other AWS services hosted in the parent Region, such as Amazon S3, are accessible from the VPC over the AWS private network, which helps keep latency low for applications hosted in AWS Local Zones.

Option A is incorrect because AWS Outposts must be deployed in a local data center. Since the customer is moving all services out of the data center, this is not a good fit.

Option C is incorrect because, from an AWS Local Zone, Amazon S3 is accessible privately over the AWS private network, so there is no need to use an S3 gateway endpoint.

Option D is incorrect because AWS Outposts must be deployed in a local data center. Since the customer is moving all services out of the data center, using AWS Local Zones is a better option.

For more information on AWS Local Zones, refer to the following URL, https://aws.amazon.com/about-aws/global-infrastructure/localzones/faqs/

Domain: Network Security, Compliance, and Governance

Question 11 : An IT firm has deployed Kubernetes clusters using Amazon Elastic Kubernetes Service (Amazon EKS). These clusters are deployed in multiple member accounts that are part of an organization in AWS Organizations. The firm uses Amazon GuardDuty to monitor security in all accounts. The security team wants to detect suspicious activity in the Amazon EKS clusters.

What steps can be taken to check GuardDuty findings for these Amazon EKS clusters?

A. Enable Kubernetes protection for all member accounts in the organization from the GuardDuty member accounts. Retrieve GuardDuty findings from logs stored in Amazon S3 buckets

B. Enable Kubernetes protection for all member accounts in the organization from the GuardDuty delegated administrator account. Retrieve GuardDuty findings from logs stored in Amazon S3 buckets

C. Enable Kubernetes protection for all member accounts in the organization from the GuardDuty delegated administrator account. Retrieve GuardDuty findings through Amazon CloudWatch Events

D. Enable Kubernetes protection for all member accounts in the organization from the GuardDuty member accounts. Retrieve GuardDuty findings through Amazon CloudWatch Events

Correct Answer: C

Explanation:

Kubernetes protection in Amazon GuardDuty helps detect suspicious activity in Kubernetes clusters within Amazon Elastic Kubernetes Service (Amazon EKS). To do this, GuardDuty monitors the Kubernetes audit logs from Amazon EKS clusters. In a multi-account environment where accounts are part of an organization in AWS Organizations, only the GuardDuty delegated administrator account can enable Kubernetes protection for clusters in all member accounts.
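Findings can then be consumed as events. The Python sketch below shows an event pattern for GuardDuty findings (source `aws.guardduty`, detail type `GuardDuty Finding`) that could be used with an Amazon EventBridge (formerly CloudWatch Events) rule, plus a local filter that keeps only Kubernetes findings by checking for `:Kubernetes/` in the finding type, as in `PrivilegeEscalation:Kubernetes/PrivilegedContainer`. The filter logic and the sample event are illustrative assumptions, not a complete list of EKS-related finding types.

```python
import json

# Event pattern for an EventBridge (CloudWatch Events) rule that matches GuardDuty findings.
GUARDDUTY_FINDING_PATTERN = {
    "source": ["aws.guardduty"],
    "detail-type": ["GuardDuty Finding"],
}


def is_kubernetes_finding(event: dict) -> bool:
    """True if a GuardDuty finding event looks like an EKS Kubernetes finding."""
    if event.get("source") != "aws.guardduty":
        return False
    finding_type = event.get("detail", {}).get("type", "")
    # Kubernetes audit log finding types use the form ThreatPurpose:Kubernetes/Name.
    return ":Kubernetes/" in finding_type


sample_event = {
    "source": "aws.guardduty",
    "detail-type": "GuardDuty Finding",
    "detail": {"type": "PrivilegeEscalation:Kubernetes/PrivilegedContainer",
               "accountId": "111122223333", "severity": 5},
}
print(json.dumps(GUARDDUTY_FINDING_PATTERN))
print(is_kubernetes_finding(sample_event))  # True
```

With boto3, the JSON string could be passed as `EventPattern` to `events.put_rule` in the delegated administrator account, with the filter applied in the rule's target (for example, a Lambda function).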

Option A is incorrect because GuardDuty member accounts cannot enable Kubernetes protection for the organization. Only the GuardDuty delegated administrator account can enable Kubernetes protection for the member accounts.

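Option B is incorrect because GuardDuty findings are retrieved from the GuardDuty console or through Amazon CloudWatch Events (now Amazon EventBridge). Findings are not retrieved from logs stored in an Amazon S3 bucket; GuardDuty writes findings to S3 only if you configure an export destination.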

Option D is incorrect because GuardDuty member accounts cannot enable Kubernetes protection for the organization. Only the GuardDuty delegated administrator account can enable Kubernetes protection for the member accounts.

For more information on Kubernetes protection in Amazon GuardDuty, refer to the following URL, https://docs.aws.amazon.com/guardduty/latest/ug/kubernetes-protection.html

Domain: Network Implementation

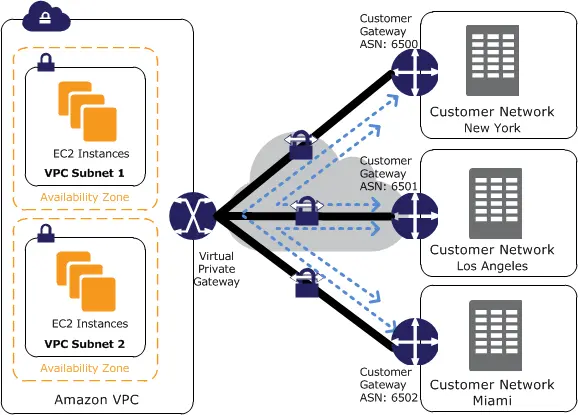

Question 12 : A start-up company is planning to connect three of its locations to the AWS Cloud using AWS Site-to-Site VPN over its existing internet connections. All three locations need to communicate with a VPC that has subnets in multiple Availability Zones, and also with each other. The company plans to set up AWS Direct Connect at one of the locations in the near future, so the proposed solution should support that connectivity as well.

How can connectivity be built to meet this requirement?

A. Create customer gateways with the same BGP ASN at each customer location. Create a Site-to-Site VPN with dynamic routing protocol BGP to a different VGW attached to a separate VPC. Create VPC peering between these VPCs. Configure the customer gateway devices to advertise a site-specific prefix to the VGW

B. Create customer gateways with unique BGP ASN at each customer location. Create a Site-to-Site VPN with dynamic routing protocol BGP to a common VGW. Configure the customer gateway devices to advertise a site-specific prefix to the VGW

C. Create customer gateways with the same BGP ASN at each customer location. Create a Site-to-Site VPN with dynamic routing protocol BGP to a common VGW. Configure the customer gateway devices to advertise a default prefix to the VGW

D. Create customer gateways with unique BGP ASN at each customer gateway. Create a Site-to-Site VPN with dynamic routing protocol BGP to a different VGW attached to a separate VPC. Create VPC peering between these VPCs. Configure the customer gateway devices to advertise a default prefix to the VGW

Correct Answer: B

Explanation:

For connecting multiple locations to the AWS Cloud using AWS Site-to-Site VPN:

- Create a single virtual private gateway (VGW) and attach it to the VPC.

- Create a customer gateway for each customer location. The BGP ASN must be unique at each location, and each customer gateway needs a public IP address to establish the VPN to the VGW.

- Each customer gateway advertises its site-specific prefix to the VGW.

- Enable route propagation from the VGW on the VPC route tables so that subnets in the VPC can reach the prefixes of all sites.

- With AWS VPN CloudHub, the VGW re-advertises each site's prefixes to the other sites, so the sites can communicate with each other. A site that later connects to the VGW over AWS Direct Connect can also take part in this hub-and-spoke model.
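To see why the BGP ASN must be unique per site, here is a minimal sketch under a simplified model: the VGW re-advertises every site's prefix to the other sites with its own ASN prepended, and each customer gateway applies standard eBGP loop prevention (it drops any route whose AS_PATH already contains its own ASN). The site names, customer ASNs, and prefixes are hypothetical; 64512 is the default Amazon-side ASN of a VGW.

```python
# Simplified model of AWS VPN CloudHub route re-advertisement.
# Assumptions: the VGW re-advertises each site's prefix to all other sites with
# AS_PATH [VGW ASN, originating site ASN], and every customer gateway rejects
# routes whose AS_PATH contains its own ASN (eBGP loop prevention).

VGW_ASN = 64512  # default Amazon-side ASN for a virtual private gateway


def routes_learned(sites):
    """Return {site: [prefixes accepted from the VGW]}.

    `sites` maps a site name to a tuple (customer_asn, advertised_prefix).
    """
    learned = {}
    for name, (asn, _own_prefix) in sites.items():
        accepted = []
        for other, (other_asn, prefix) in sites.items():
            if other == name:
                continue  # a site does not need its own prefix back
            as_path = [VGW_ASN, other_asn]  # path as seen by `name`
            if asn in as_path:
                continue  # loop prevention drops the route
            accepted.append(prefix)
        learned[name] = accepted
    return learned
```

With unique ASNs (for example 65001, 65002, 65003), each site learns the other two sites' prefixes. If all three sites reuse the same ASN, every re-advertised route carries that ASN in its AS_PATH and is dropped, which is why options A and C cannot provide site-to-site communication.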

The following diagram shows connectivity with AWS VPN CloudHub,

Option A is incorrect as BGP ASN for each customer site should be unique. Also, with VPC peering transitive routing is not allowed. Sites connecting to different VPCs cannot communicate with each other over VPC peering.

Option C is incorrect as BGP ASN for each customer site should be unique. Customer Gateway devices should advertise a site-specific prefix to the AWS VGW, and not a default route.

Option D is incorrect as the Customer Gateway device should advertise a site-specific prefix to the AWS VGW, and not a default route. Also, with VPC peering transitive routing is not allowed. Sites connecting to different VPCs cannot communicate with each other over VPC peering.

For more information on AWS VPN CloudHub, refer to the following URLs, https://docs.aws.amazon.com/vpc/latest/userguide/vpn-connections.html, https://docs.aws.amazon.com/vpn/latest/s2svpn/VPN_CloudHub.html

Domain: Network Implementation

Question 13 : An online grocery store has deployed a new application that uses Amazon RDS as its database. Developers located on-premises need to access the RDS DB instances. Due to a limited budget, they will use existing internet links to connect to the AWS Cloud. The Security Head is seeking your advice on a security solution for this remote access, and the solution also needs to be scalable.

Which of the following is the most secure way of accessing the Amazon RDS instance from the on-premises location?

A. Create a publicly accessible Amazon EC2 instance. Access EC2 instance using Site-to-Site VPN created over IPsec using VGW. Login to this instance from an on-premises location and then access the RDS instance in a private DB subnet

B. Create a publicly accessible RDS instance. Attach a network ACL to the DB subnet, allowing only the on-premises subnet and denying all other prefixes. Access the RDS instance over the internet from the on-premises location

C. Create an AWS Client VPN. Deploy the RDS instance in a private subnet in the VPC. Associate DB instance subnet with AWS Client VPN interface endpoint

D. Create a publicly accessible Amazon EC2 instance. Access EC2 instance using public IP address over the internet. Login to this instance from an on-premises location and then access the RDS instance in a private DB subnet

Correct Answer: C

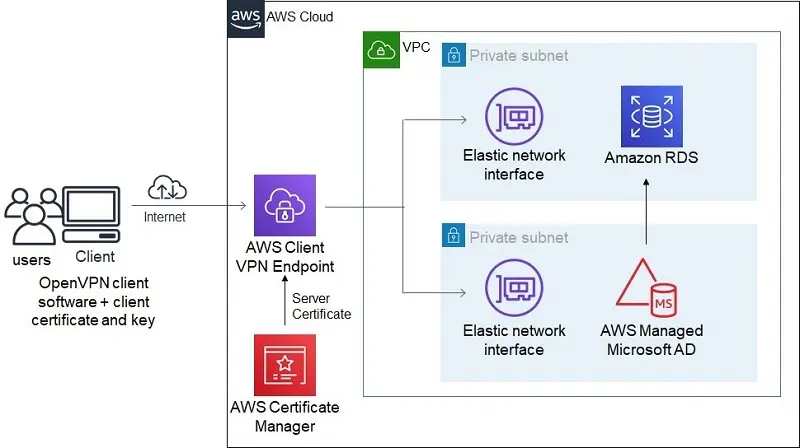

Explanation:

AWS Client VPN is a managed service that establishes secure connectivity from users to AWS resources. Users connect with OpenVPN-based client software, and the VPN connection terminates on the AWS Client VPN endpoint.

Resources that need to be accessed remotely are reached by associating their subnet with the Client VPN endpoint as a target network. In this scenario, the RDS instance is launched in a private subnet of the VPC, and that subnet is associated with the Client VPN endpoint. Because AWS Client VPN is a managed service that scales automatically, it is a scalable option for connecting to AWS resources from on-premises locations.
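As a rough illustration, the association step maps to two EC2 API calls. This is a minimal sketch, assuming `ec2` is a boto3 EC2 client (`boto3.client("ec2")`) and that the Client VPN endpoint already exists; the endpoint ID, subnet ID, and CIDR are hypothetical. Besides the target network association, an authorization rule is needed before clients can reach the network.

```python
# Sketch: give Client VPN users a path to the private DB subnet.
# `ec2` is expected to be a boto3 EC2 client; it is passed in so the function
# can be exercised without AWS credentials. All IDs/CIDRs are hypothetical.

def expose_db_subnet(ec2, endpoint_id, db_subnet_id, vpc_cidr):
    # Associate the private DB subnet with the Client VPN endpoint as a target network.
    assoc = ec2.associate_client_vpn_target_network(
        ClientVpnEndpointId=endpoint_id,
        SubnetId=db_subnet_id,
    )
    # Authorize connected clients to reach the VPC CIDR that contains the RDS instance.
    ec2.authorize_client_vpn_ingress(
        ClientVpnEndpointId=endpoint_id,
        TargetNetworkCidr=vpc_cidr,
        AuthorizeAllGroups=True,
    )
    return assoc["AssociationId"]
```

In practice the authorization rule could be narrowed to the DB subnet CIDR or to a specific Active Directory group instead of `AuthorizeAllGroups=True`.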

The connectivity diagram will be as follows,

Option A is incorrect as this is a sub-optimal way of accessing the Amazon RDS instance. Using AWS Client VPN is a better option for accessing RDS instances remotely.

Option B is incorrect as this will require RDS instances to be placed in public subnets which increases security risk.

Option D is incorrect as this is not a secure way of accessing RDS instances.

For more information on accessing RDS instance remotely, refer to the following URL, https://aws.amazon.com/blogs/database/accessing-an-amazon-rds-instance-remotely-using-aws-client-vpn/

Domain: Network Design

Question 14 : You are working as an AWS Consultant for a global IT company. The company has deployed its application in the AWS Cloud across two regions, and VPC peering is already configured between the VPCs in these two regions. The company has deployed AWS Managed Microsoft Active Directory (AD) in both regions with multi-region replication configured between them. All global remote users need to be authenticated by this Active Directory before accessing applications in the VPCs. The company wants secure connectivity with optimal latency between global remote users and the Active Directory, and the solution must be fully fault-tolerant and scalable.

What design can be proposed to meet the requirement?

A. Configure all remote users to use bastion hosts deployed at the on-premises location. Create a managed Site-To-Site VPN from an on-premises location to AWS using AWS Transit Gateway. Associate VPC with AWS Managed Microsoft AD with AWS Transit Gateway at each region. Configure peering between AWS Transit Gateway at each region

B. Deploy a third-party software VPN on an Amazon EC2 instance in the same VPC as the Active Directory. Remote users will use an open-source VPN client to connect to the Amazon EC2 instance and will then be authenticated via AWS Managed Microsoft AD

C. Create a Client VPN endpoint in both regions. Associate AWS Managed Microsoft AD instance with client VPN endpoints in both regions. Share Client VPN configuration files to users based upon geographically nearest region

D. Create a Client VPN endpoint in both regions. Associate AWS Managed Microsoft AD instance with client VPN endpoints in both regions. Create a Route53 public-hosted zone. Create CNAME records pointing to the DNS name of the endpoint with latency-based routing and health checks

Correct Answer: D

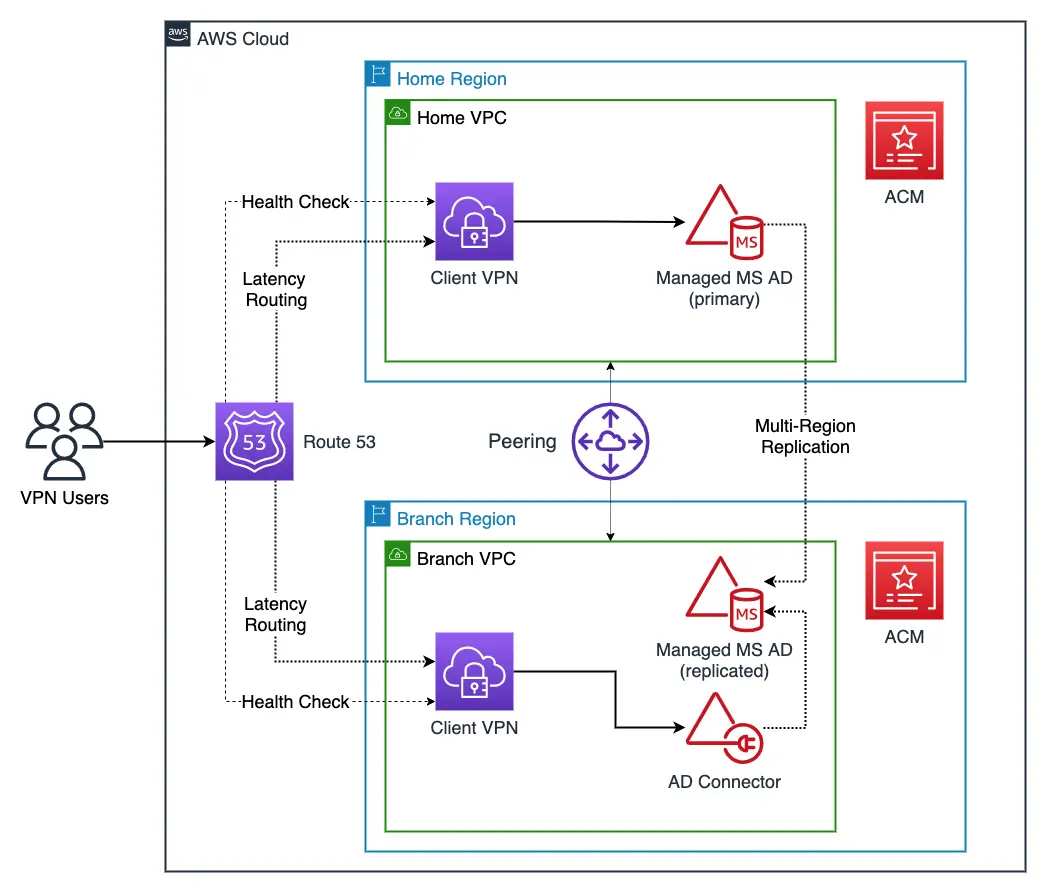

Explanation:

AWS Client VPN provides secure, managed VPN connectivity between users and AWS resources. With a Route 53 public hosted zone whose records point to the Client VPN endpoints using latency-based routing, remote users are directed to the Client VPN endpoint with the lowest latency, and health checks steer users away from an endpoint that becomes unhealthy.

AWS Managed Microsoft AD can be associated with each Client VPN endpoint and used to authenticate remote users.
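The Route 53 side of this design can be expressed as a `ChangeBatch` for `change_resource_record_sets`. The sketch below builds one latency-based CNAME record per region, each tied to a health check; the record name, target DNS names, and health check IDs are hypothetical placeholders, not values from the linked blog post.

```python
# Sketch: build a Route 53 ChangeBatch with one latency-based CNAME record per
# AWS Region, each tied to a health check. All names and IDs are hypothetical.

def latency_change_batch(record_name, endpoints, ttl=60):
    """`endpoints` maps an AWS Region to (target_dns_name, health_check_id)."""
    changes = []
    for region, (target, health_check_id) in sorted(endpoints.items()):
        changes.append({
            "Action": "UPSERT",
            "ResourceRecordSet": {
                "Name": record_name,
                "Type": "CNAME",
                "SetIdentifier": f"client-vpn-{region}",  # must be unique per record
                "Region": region,  # makes this a latency-based record
                "TTL": ttl,
                "ResourceRecords": [{"Value": target}],
                "HealthCheckId": health_check_id,  # unhealthy targets are skipped
            },
        })
    return {"Comment": "Latency-based Client VPN records", "Changes": changes}
```

The result would be passed as `ChangeBatch=` to a boto3 Route 53 client's `change_resource_record_sets(HostedZoneId=..., ChangeBatch=...)` call for the public hosted zone.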

The connectivity diagram is as follows,

Option A is incorrect as using a bastion host and Site-to-Site VPN is not a scalable solution. It also adds latency, because every remote user must first connect to the on-premises location and only then reach AWS Managed Microsoft AD.

Option B is incorrect as this solution is not scalable. Building resiliency with Amazon EC2 instances across two regions also adds administrative work.

Option C is incorrect as sharing Client VPN configuration files based on the geographically nearest region does not guarantee optimal latency between users and the Active Directory.

For more information on using Route 53 along with Client VPN for multi-region connectivity, refer to the following URL, https://aws.amazon.com/blogs/networking-and-content-delivery/building-multi-region-aws-client-vpn-with-microsoft-active-directory-and-amazon-route-53/

Domain: Network Management & Operation

Question 15 : An IT company has created a private hosted zone for a new application launched in VPC A. This application will be accessed from a large number of VPCs across multiple regions. To troubleshoot DNS-related application issues, the quality assurance team wants to analyze, in real time, the DNS queries made while accessing this application.

What can be configured to meet this requirement?

A. Configure Route 53 Resolver Query Logs. Select destination for these logs as Amazon S3 bucket

B. Configure Route 53 Public DNS Query Logs. Select the destination for these logs as Amazon CloudWatch Logs

C. Configure Route 53 Public DNS Query Logs. Select the destination for these logs as Amazon Kinesis Data Firehose

D. Configure Route 53 Resolver Query Logs. Select the destination for these logs as Amazon Kinesis Data Firehose

Correct Answer: D

Explanation: Route 53 Resolver query logging logs the DNS queries made by resources within the VPC.

The destination for the DNS query logs can be one of the following:

- Amazon CloudWatch Logs: To search, query, monitor metrics, or raise alarms based upon logs.

- Amazon S3: For long-term storage of the logs for security compliance.

- Amazon Kinesis Data Firehose: For real-time analysis of the DNS logs.

Since the quality assurance team requires real-time analysis of the logs, the destination can be selected as Amazon Kinesis Data Firehose.
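A minimal sketch of this configuration with the Route 53 Resolver API, assuming `resolver` is a boto3 `route53resolver` client and that the Kinesis Data Firehose delivery stream already exists; the stream ARN, VPC ID, and configuration name are hypothetical.

```python
# Sketch: create a Resolver query logging configuration that delivers to a
# Kinesis Data Firehose stream, then associate it with a VPC.
# `resolver` is expected to be a boto3 "route53resolver" client; all names,
# ARNs, and IDs used with it are hypothetical.
import uuid


def enable_realtime_query_logs(resolver, firehose_arn, vpc_id, name="vpc-dns-query-logs"):
    config = resolver.create_resolver_query_log_config(
        Name=name,
        DestinationArn=firehose_arn,  # Firehose delivery stream for real-time analysis
        CreatorRequestId=str(uuid.uuid4()),  # idempotency token for the request
    )
    config_id = config["ResolverQueryLogConfig"]["Id"]
    resolver.associate_resolver_query_log_config(
        ResolverQueryLogConfigId=config_id,
        ResourceId=vpc_id,  # DNS queries from resources in this VPC are logged
    )
    return config_id
```

Query logging configurations are regional, so with VPCs spread across multiple regions, a configuration would be created in each region and associated with every VPC that accesses the application.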

Option A is incorrect as the client wants to analyze DNS queries in real time, and Amazon S3 is not an ideal destination for real-time analysis.

Option B is incorrect as Route 53 public DNS query logging records queries that reach Route 53 from the internet for public hosted zones; it does not log queries made by resources within the VPC. Also, Amazon CloudWatch Logs is better suited to searching logs and raising alarms based on DNS query logs than to real-time analysis.

Option C is incorrect as Route 53 public DNS query logging records only queries that reach Route 53 from the internet; it does not log queries made by resources within the VPC.

For more information on Route 53 Resolver query logging, refer to the following URL, https://aws.amazon.com/blogs/aws/log-your-vpc-dns-queries-with-route-53-resolver-query-logs/

Domain: Network Management & Operation

Question 16: An engineering company uses hybrid connectivity between on-premises locations and the AWS Cloud. The IT team has created a private hosted zone for example.com and associated it with the VPC. They have also created an outbound Route 53 Resolver endpoint with a rule for example.com and associated it with this VPC. The IT team observes that queries for example.com are routed to the on-premises network instead of being resolved from the records in the private hosted zone.

What could be the possible reason for such behavior?

A. A public hosted zone needs to be created instead of a private hosted zone

B. Amazon VPC has a private hosted zone that has enableDnsHostnames set to false

C. Resolver rules will take precedence over private hosted zones

D. Amazon VPC has a private hosted zone that has enableDnsSupport set to false

Correct Answer: C

Explanation:

When a private hosted zone is created for a domain name and a resolver rule is created to route traffic to an on-premises network for the same domain name, traffic is routed based upon resolver rules and not based upon records in the private hosted zones.

Option A is incorrect as the queries originate within the VPC and not from the internet, so a private hosted zone is the right choice.

Option B is incorrect as using a private hosted zone requires the VPC to have enableDnsHostnames set to true; this setting does not cause queries to be forwarded to the on-premises network.

Option D is incorrect as using a private hosted zone requires the VPC to have enableDnsSupport set to true; this setting does not cause queries to be forwarded to the on-premises network.

For more information on Private hosted zones, refer to the following URL, https://docs.aws.amazon.com/Route53/latest/DeveloperGuide/hosted-zone-private-considerations.html

Domain: Network Management & Operation

Question 17 : A sports channel is broadcasting a major sports event. For this event, the IT team has set up a multi-CDN architecture using Amazon CloudFront and a custom CDN deployed in the Europe and Japan regions. The event will be viewed by viewers across the globe. Origin servers are deployed on Amazon EC2 instances in the us-east-1 region. During the event, the IT team observes increased load on the origin servers, which causes them to intermittently become unresponsive. For future sports events, the IT team wants proactive measures that keep the load on the origin servers at acceptable levels.

What actions can be initiated to minimize load on origin servers?

A. Set up CloudFront Origin Shield in both Europe & Japan regions in front of custom CDN

B. Set up CloudFront Origin Shield in the Regional cache location of the us-east-1 region

C. Set up CloudFront Origin Shield in the Regional cache locations of Europe & Japan

D. Set up CloudFront Origin Shield in edge cache locations of the us-east-1 region

Correct Answer: B

Explanation:

Using multi-CDN architecture can increase the load on origin servers, as multiple requests from different edge locations or multiple regional cache locations need to be handled by the origin servers.

To reduce the load on the origin servers, CloudFront Origin Shield can be enabled at the regional cache location closest to the origin servers. With Origin Shield, requests from the other regional cache locations do not hit the origin servers directly; they are sent to Origin Shield instead.

In this case, the origin servers are deployed in the us-east-1 region, so CloudFront Origin Shield can be enabled at the regional cache location in us-east-1. This minimizes the load on the origin servers.
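A back-of-the-envelope sketch of the effect, under a simplified assumption: each cache layer that misses forwards exactly one request upstream per object. It also assumes the custom CDN fetches through the CloudFront distribution (so it sits behind Origin Shield too); the cache counts are hypothetical.

```python
# Simplified model of origin load for objects that are not yet cached anywhere.
# Assumption: every cache that misses sends one request upstream per object.
# Without Origin Shield, each CloudFront regional cache and each other CDN fetches
# from the origin independently; with Origin Shield (and the other CDNs fetching
# through CloudFront), only the shield fetches from the origin.

def origin_requests(objects, regional_caches, other_cdns, origin_shield):
    if origin_shield:
        return objects  # one fetch per object, made by the shield
    return objects * (regional_caches + other_cdns)
```

For example, 1,000 new objects with 10 regional caches and 1 custom CDN would produce 11,000 origin fetches without Origin Shield and 1,000 with it in this model; real numbers depend on cache hit ratios and request timing.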

Option A is incorrect as CloudFront Origin Shield should be in a region closer to the origin server. Since origin servers are in the us-east-1 region, CloudFront Origin Shield should not be placed in Europe or Japan regions in front of custom CDN.

Option C is incorrect as CloudFront Origin Shield should be in a region closer to the origin server. Since origin servers are in the us-east-1 region, CloudFront Origin Shield should not be placed in Europe or Japan regions.

Option D is incorrect as CloudFront Origin Shield should be placed at Regional Cache locations and not at edge locations.

For more information on CloudFront Origin Shield, refer to the following URL, https://aws.amazon.com/blogs/networking-and-content-delivery/using-cloudfront-origin-shield-to-protect-your-origin-in-a-multi-cdn-deployment/

Domain: Network Implementation

Question 18 : A government organization uses Amazon CloudFront to distribute content stored on Amazon EC2 instances. Some of this content is private and should not be cached by CloudFront: when a viewer requests this private content, CloudFront should always retrieve it from the origin EC2 instance. For such content, the deployment team has configured the origin to add the header “Cache-Control: no-cache, no-store”. However, in some cases, when viewers request this content and the origin servers are not reachable, Amazon CloudFront serves cached copies.

What setting can be configured on the origin server to prevent Amazon CloudFront from serving cached copies?

A. At Origin, set headers as Cache-Control: stale-if-error=0

B. At Origin, set both headers, Cache-Control: max-age and Cache-Control: s-maxage

C. At Origin, add Expires header to the origin

D. At Origin, remove the header, Cache-Control: max-age

Correct Answer: A

Explanation:

When a header “Cache-Control: no-cache, no-store” is set at the Origin server, Amazon CloudFront will retrieve content from the origin server when there is a request from the viewer. In some cases, if the origin server is not reachable, Amazon CloudFront will share a cached copy with the viewer.

To avoid this, the origin server can set the header Cache-Control: stale-if-error=0. This ensures that no cached copies are served to the viewer: when a viewer requests the private content and the origin servers are not reachable, Amazon CloudFront returns an error instead.

Option B is incorrect as, with both max-age and s-maxage set, Amazon CloudFront caches the object (based on the s-maxage value, within the cache behavior's TTL settings), so cached copies can still be served.

Option C is incorrect as, with an Expires header set, Amazon CloudFront caches the object until the date specified in the Expires header.

Option D is incorrect as, after removing the max-age header, Amazon CloudFront caches the object for the default TTL.
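The directive can be illustrated with a small, simplified model of a cache deciding whether a stale copy may be served when the origin errors. It follows the stale-if-error semantics from RFC 5861 (a stale copy may be used for at most N seconds after it becomes stale) plus the behavior described above when the directive is absent; it is not CloudFront's actual implementation.

```python
# Simplified model: may a cache serve a stale copy when the origin returns an error?
# Assumptions: without stale-if-error the cache may fall back to a stale copy (as the
# explanation describes); with stale-if-error=N it may do so only while the copy has
# been stale for at most N seconds, so N=0 rules out the fallback for stale content.

ORIGIN_CACHE_CONTROL = "no-cache, no-store, stale-if-error=0"  # header set at the origin


def parse_cache_control(value):
    """Parse a Cache-Control header into {directive: argument or None}."""
    directives = {}
    for part in value.split(","):
        part = part.strip()
        if not part:
            continue
        key, _, arg = part.partition("=")
        directives[key.strip().lower()] = arg.strip() or None
    return directives


def may_serve_stale_on_error(cache_control, seconds_stale):
    directives = parse_cache_control(cache_control)
    if "stale-if-error" not in directives:
        return True  # fallback to a stale copy is allowed in this model
    limit = int(directives["stale-if-error"])
    return seconds_stale <= limit
```

With `ORIGIN_CACHE_CONTROL`, a copy that has been stale for 30 seconds may not be served, while the original `no-cache, no-store` header alone still allows the fallback.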

For more information on headers used for caching with Amazon CloudFront, refer to the following URL, https://docs.aws.amazon.com/AmazonCloudFront/latest/DeveloperGuide/Expiration.html#ExpirationDownloadDist

Domain: Network Implementation

Question 19 : A financial institution has implemented hybrid connectivity using two dedicated AWS Direct Connect links terminating at two different AWS Direct Connect locations. Link 1 has a bandwidth of 40 Gbps, while Link 2 has a bandwidth of 10 Gbps. The operations team observes that some traffic flows are asymmetric: outbound traffic from AWS to the on-premises location flows on Link 1, while incoming traffic to the AWS Cloud flows on Link 2.

The Operations Head has instructed you to make all traffic flows symmetric, with Link 1 as the primary link for communication between the AWS Cloud and the on-premises location and Link 2 as the secondary link for all communications.

How can BGP policy be set up on the customer-end routers to make Link 1 the primary link?

A. In Outbound BGP policy add AS_Path with 3 AS for prefixes advertised on Link 2. Inbound policy sets the local preference as 80 for prefixes to be preferred on Link 1

B. In Outbound BGP policy add local preference as 300 for prefixes advertised on Link 2. In inbound policy set the local preference as 80 for prefixes to be preferred on Link 1

C. In Outbound BGP policy add AS_Path with 1 for prefixes advertised on Link 2. In inbound policy set AS_Path as 2 for prefixes to be preferred on Link 1

D. In Outbound BGP policy add AS_Path with 3 AS for prefixes advertised on link 2. Inbound policy set the local preference as 300 for prefixes to be preferred on Link 1

Correct Answer: D

Explanation:

The BGP AS_PATH attribute can be used to influence incoming traffic and is applied in the outbound BGP policy; the shortest AS_PATH is preferred. To attract all incoming traffic onto Link 1, the customer ASN is prepended on Link 2 so that prefixes advertised there carry an AS_PATH of 3 AS. This makes Link 2 a less preferred path than Link 1 (which carries the default AS_PATH of 1 AS) for traffic from the AWS Cloud to the on-premises network.

The BGP Local Preference attribute is applied in the inbound route policy to influence outgoing traffic. A higher Local Preference value is preferred, and the default value is 100.

To prefer outgoing traffic on Link 1, an inbound policy should be created to set the Local preference attribute as higher than the local preference value on Link 2. The local preference value of 300 can be set on Link 1 to prefer outgoing traffic.
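Both halves of this policy can be checked with a minimal sketch of BGP best-path selection that uses only the two attributes discussed here: highest Local Preference first, then shortest AS_PATH. Real BGP has more tie-breakers; the customer ASN 65010 and the Amazon-side ASN 64512 are hypothetical values.

```python
# Simplified BGP best-path selection using only two attributes:
# 1) highest LOCAL_PREF wins, 2) if tied, the shortest AS_PATH wins.
from dataclasses import dataclass, field

CUSTOMER_ASN = 65010  # hypothetical on-premises ASN
AMAZON_ASN = 64512    # hypothetical Amazon-side ASN


@dataclass
class Path:
    link: str
    local_pref: int = 100  # BGP default local preference
    as_path: list = field(default_factory=list)


def best_path(paths):
    # Higher local preference first, then fewer AS hops.
    return min(paths, key=lambda p: (-p.local_pref, len(p.as_path)))


# Outgoing traffic (on-premises -> AWS): the inbound policy sets LOCAL_PREF 300 on Link 1.
outgoing = [Path("Link 1", local_pref=300, as_path=[AMAZON_ASN]),
            Path("Link 2", as_path=[AMAZON_ASN])]

# Incoming traffic (AWS -> on-premises): the outbound policy prepends the customer ASN
# on Link 2, so AWS sees a 3-AS path there versus a 1-AS path on Link 1.
incoming = [Path("Link 1", as_path=[CUSTOMER_ASN]),
            Path("Link 2", as_path=[CUSTOMER_ASN] * 3)]

print(best_path(outgoing).link)  # Link 1
print(best_path(incoming).link)  # Link 1
```

Setting `local_pref=80` on Link 1 instead (as in option A) would make Link 2, with the default of 100, win for outgoing traffic.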

Option A is incorrect as setting Local Preference to 80 on Link 1 makes Link 2 (which has the default Local Preference of 100) the preferred path for outgoing traffic.

Option B is incorrect as Outbound BGP Policy should alter AS_Path and not the Local Preference attribute.

Option C is incorrect as the Inbound BGP policy should alter the Local Preference attribute and not the AS_Path attribute.

For more information on using BGP attributes for primary links, refer to the following URL, https://aws.amazon.com/blogs/networking-and-content-delivery/creating-active-passive-bgp-connections-over-aws-direct-connect/

Domain: Network Design

Question 20 : You are working as an AWS consultant for a pharma company. To set up a new TEST laboratory for R&D, the company plans to build a sub-1 Gbps link to AWS. The new link should have a predefined SLA and consistent latency. Connectivity should be fully resilient and should reach all VPCs created within the organization in the region. Connectivity should be established in the shortest possible time.

What connectivity can be proposed to meet their requirement?

A. AWS Site-to-Site Managed VPN terminating on AWS Transit Gateway

B. AWS Direct Connect Hosted Connection terminating on AWS Transit Gateway

C. Dedicated AWS Direct Connect link terminating on Direct Connect Gateway

D. AWS Client VPN connecting to VPC via Client VPN endpoint

Correct Answer: B

Explanation:

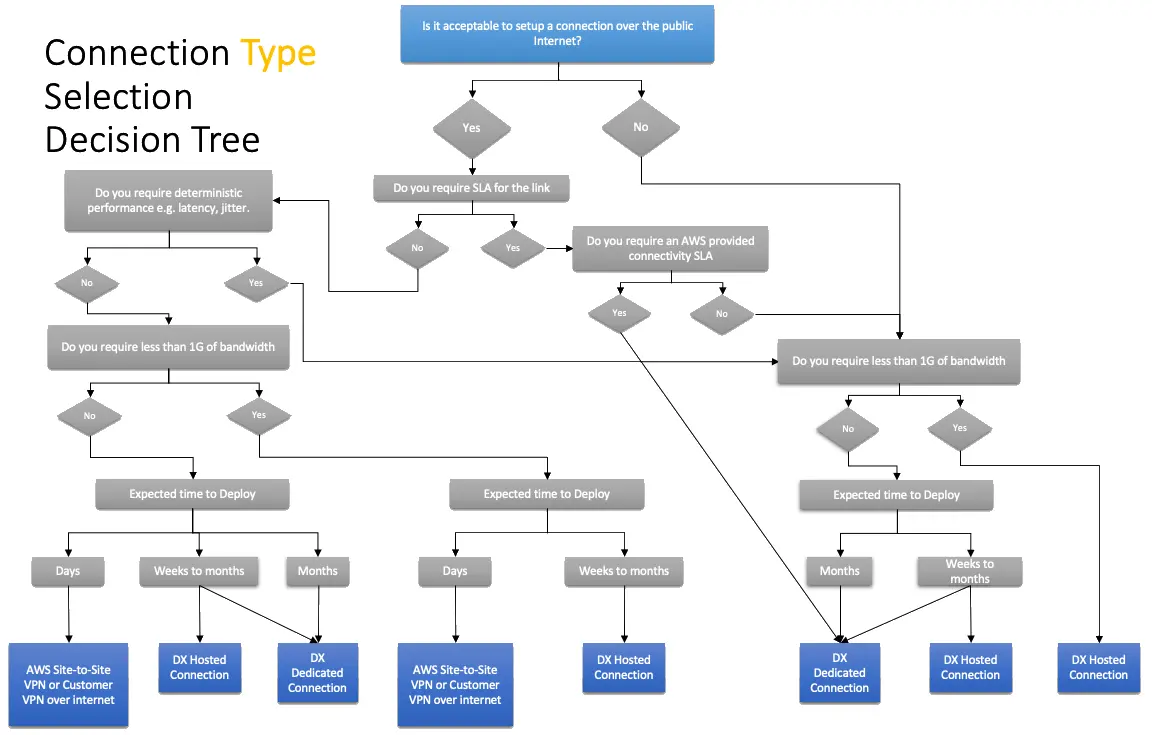

For deploying sub-1 Gbps links between an on-premises location and AWS, two options are available:

- AWS Site-to-Site VPN: This is set up over the public internet. Latency on these links depends on the internet service provider network and may vary with congestion. Also, no SLA is offered on this VPN connection.

- AWS Direct Connect hosted connection: With a hosted connection, the physical connectivity between the client and AWS is provided by an AWS Direct Connect Partner. Since traffic flows over private links, latency is consistent. AWS offers an SLA on Direct Connect for both dedicated and hosted connections. With the connection terminating on AWS Transit Gateway, connectivity to all VPCs in a region can be established.

The selection criteria for Hybrid connections are as follows,

Option A is incorrect as, although a Site-to-Site VPN terminating on AWS Transit Gateway can reach all VPCs in a region, AWS Site-to-Site VPNs are created over the public internet and do not offer any SLA.

Option C is incorrect as dedicated AWS Direct Connect connections are available in 1 Gbps, 10 Gbps, and 100 Gbps, not in sub-1 Gbps bandwidths. In addition, a hosted connection is faster to deploy: it can be set up in a few weeks, while a dedicated Direct Connect connection may take longer (approximately a few months).

Option D is incorrect as AWS Client VPN is created over the public internet and does not offer any SLA.

For more information on selecting the most suitable hybrid connectivity, refer to the following URL, https://docs.aws.amazon.com/whitepapers/latest/hybrid-connectivity/connectivity-type-selection-summary.html

Domain: Network Implementation

Question 21 : A global oil company has a head office located in London and regional offices in Paris and Sydney. The company has established hybrid connectivity using dedicated 10 Gbps AWS Direct Connect connections at all three locations.

A public virtual interface is created at each of these locations to access public AWS services. The head office requires connectivity to public AWS services across the globe, while the regional offices require connectivity only to public AWS services in their own region. The head office should accept public AWS service prefixes from its local continent, while the regional offices should accept public AWS service prefixes from their local region only. You have been assigned to implement the BGP configuration on the public virtual interfaces.

How can BGP community tags be attached at all three locations to meet these requirements?

A. For regional data centers, advertise prefixes with BGP community tag as 7224:8100 and accept prefixes with BGP community tag 7224:9100. For the head office, advertise prefixes with BGP community tag as 7224:8200 and accept all prefixes with community tags 7224:9200

B. For regional data centers, advertise prefixes with BGP community tag as 7224:9100 and accept prefixes with BGP community tag 7224:8100. For the head office, advertise prefixes with BGP community tag as 7224:9300 and accept all prefixes with BGP community tag as 7224:8200

C. For regional data centers, advertise prefixes with BGP community tag as 7224:9300 and accept prefixes with BGP community tag 7224:8200. For the head office, advertise prefixes with BGP community tag as 7224:9300 and accept all prefixes with community tags 7224:8100

D. For regional data centres, advertise prefixes with BGP community tag as 7224:8200 and accept prefixes with BGP community tag 7224:9200. For the head office, advertise prefixes with BGP community tag as 7224:8100 and accept all prefixes with community tags 7224:9100

Correct Answer: B

Explanation:

Customers can use BGP community tags to control the scope to which the prefixes they advertise to AWS are propagated. The following community tags can be used:

- 7224:9100: Advertise prefixes to the local AWS Region only.

- 7224:9200: Advertise prefixes to all AWS Regions on the continent.

- 7224:9300: Advertise prefixes to all public AWS Regions globally.

Since the regional offices need to advertise prefixes to their local AWS Region only, those prefixes should be tagged with BGP community 7224:9100. At the head office, prefixes should be tagged with BGP community 7224:9300 to advertise them globally.

AWS also applies BGP community tags to the prefixes it advertises to customers. The following community tags indicate where each advertised prefix originates:

- 7224:8100: Prefixes from the local AWS Region only.

- 7224:8200: Prefixes from all AWS Regions on the continent.

- No tag: Prefixes from all public AWS Regions globally.

Since the regional offices need prefixes from their local AWS Region only, they can accept prefixes tagged with BGP community 7224:8100. At the head office, the BGP route policy can be configured to accept prefixes tagged with community 7224:8200.
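The same tagging and filtering logic can be sketched as two small helpers. The community values come from the explanation above; the route data in the checks is hypothetical. As an assumption of this sketch, the continent-scope filter accepts 7224:8100 as well as 7224:8200, so that local-Region prefixes are not dropped if they carry only the 7224:8100 tag.

```python
# Sketch of the community choices for a public VIF (values from the explanation).

# Communities a customer attaches to prefixes it advertises to AWS.
ADVERTISE_SCOPE = {
    "region": "7224:9100",
    "continent": "7224:9200",
    "global": "7224:9300",
}


def accept_route(route_communities, wanted_scope):
    """Decide whether to accept a prefix received from AWS.

    AWS tags local-Region prefixes with 7224:8100 and same-continent prefixes
    with 7224:8200; untagged prefixes come from public Regions worldwide.
    """
    communities = set(route_communities)
    if wanted_scope == "region":
        return "7224:8100" in communities
    if wanted_scope == "continent":
        # Assumption: local-Region prefixes are part of the continent as well.
        return bool({"7224:8100", "7224:8200"} & communities)
    return True  # global scope: accept everything
```

A regional office would advertise with `ADVERTISE_SCOPE["region"]` and filter with `accept_route(..., "region")`; the head office would advertise with `ADVERTISE_SCOPE["global"]` and filter with the continent scope.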

Option A is incorrect as, for advertising prefixes to AWS, customers should use one of the three communities 7224:9100, 7224:9200, or 7224:9300, while for prefixes received from AWS, customers should match 7224:8100, 7224:8200, or no tag. Option A uses them the other way around.

Option C is incorrect as tagging the regional data centers' prefixes with 7224:9300 advertises them globally, which is not intended.

Option D is incorrect as, for advertising prefixes to AWS, customers should use one of the three communities 7224:9100, 7224:9200, or 7224:9300, while for prefixes received from AWS, customers should match 7224:8100, 7224:8200, or no tag. Option D uses them the other way around.

For more information on BGP community tags for advertised and received prefixes over Public VIF, refer to the following URL, https://docs.aws.amazon.com/directconnect/latest/UserGuide/routing-and-bgp.html

Domain: Network Design

Question 22 : A global engineering company has two data centers, in New York and Tokyo. The company has deployed application servers on Amazon EC2 instances in VPCs in four different AWS regions. You have been engaged to design connectivity between these data centers and the VPCs. All VPCs should be able to communicate with all other VPCs as well as with both data centers. The proposed connectivity should also integrate the data centers with any new AWS region in which the application is deployed in the future.

What design can be proposed to provide full resiliency?

A. Set up dual Direct Connect links terminating on two different Direct Connect Gateway from each Data Centre. Connect one Direct Connect gateway to three AWS Transit Gateways attached in three separate regions while another Direct Connect gateway to one Transit gateway attached to one region. Configure full mesh peering between four Transit Gateways created in each of the four regions

B. Set up dual Direct Connect links terminating on a single Direct Connect Gateway from each Data Centre. Connect one Direct Connect gateway to four AWS Transit Gateways attached to four separate regions. Configure full mesh peering between four Transit Gateways created in each of the four regions

C. Set up dual Direct Connect links terminating on a single Direct Connect Gateway from each Data Centre. Connect one Direct Connect gateway to four Virtual Private gateways attached to four separate regions. Configure full mesh VPC peering between VPCs in different regions

D. Set up dual Direct Connect links terminating on two different Direct Connect Gateway from each Data Centre. Connect one Direct Connect gateway to two Virtual Private gateways attached to two separate regions while another Direct Connect gateway to two Virtual Private gateways attached to two separate regions. Configure full mesh VPC peering between VPCs in different regions

Correct Answer: A

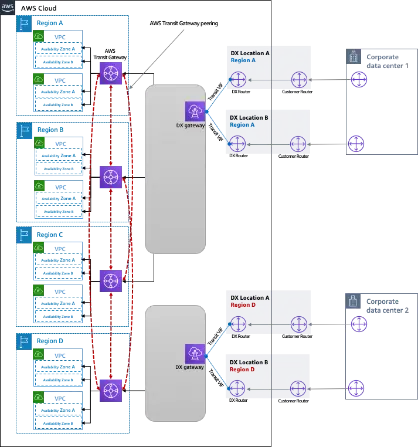

Explanation:

Connectivity can be designed as follows,

- Each of the two data centers can have dual AWS Direct Connect links terminating on two different Direct Connect Gateways. This provides resiliency at both the link level and the location level. A single transit virtual interface can be created on each of these links.

- The VPCs in each of the four regions attach to a single AWS Transit Gateway in that region. Cross-region VPC communication is provided by peering the Transit Gateways. The same setup can be reused for any new VPC created in these four regions.

- Each AWS Direct Connect Gateway can be associated with a maximum of three AWS Transit Gateways. To reach VPCs in four different regions, one Direct Connect Gateway can be associated with three Transit Gateways and the other Direct Connect Gateway with one Transit Gateway. This provides communication between both data centers, in New York and Tokyo, and the VPCs in all four regions.

- For applications deployed in new regions in the future, if a Direct Connect Gateway would need more than three Transit Gateway associations, an additional Direct Connect Gateway can be set up.

- Route summarization can be used to stay within the maximum prefix limits:

  - Number of prefixes from on-premises to AWS on a transit virtual interface: 100

  - Number of prefixes per AWS Transit Gateway from AWS to on-premises on a transit virtual interface: 20
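Two of the planning steps above can be sketched with the Python standard library: grouping one Transit Gateway per region into Direct Connect Gateways of at most three, and summarizing on-premises prefixes with `ipaddress.collapse_addresses` to check them against the 100-prefix limit. The region list and prefixes are hypothetical, since the question does not name the four regions.

```python
# Planning helpers for the multi-region design (hypothetical inputs).
import ipaddress

MAX_TGWS_PER_DXGW = 3                      # per-gateway limit stated in the explanation
MAX_ONPREM_PREFIXES_PER_TRANSIT_VIF = 100  # on-premises -> AWS limit stated above


def assign_tgws(regions, per_gateway=MAX_TGWS_PER_DXGW):
    """Group regions (one Transit Gateway each) into Direct Connect Gateways."""
    return [regions[i:i + per_gateway] for i in range(0, len(regions), per_gateway)]


def summarize(prefixes):
    """Collapse contiguous or overlapping prefixes into the fewest covering networks."""
    nets = (ipaddress.ip_network(p) for p in prefixes)
    return [str(n) for n in ipaddress.collapse_addresses(nets)]


regions = ["us-east-1", "eu-west-1", "ap-northeast-1", "ap-southeast-2"]
print(assign_tgws(regions))
# [['us-east-1', 'eu-west-1', 'ap-northeast-1'], ['ap-southeast-2']]

onprem = [f"10.20.{i}.0/24" for i in range(256)]  # 256 /24s from one data center
summary = summarize(onprem)
print(summary, len(summary) <= MAX_ONPREM_PREFIXES_PER_TRANSIT_VIF)
# ['10.20.0.0/16'] True
```

Summarization only helps when the on-premises address plan is contiguous; scattered prefixes collapse much less, so the check against the limit is still worth running.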

Refer to the diagram below for connectivity.

Option B is incorrect as only three AWS Transit Gateways can be associated with a Direct Connect Gateway.

Option C is incorrect as full-mesh VPC peering adds complexity and management overhead, since peering must be configured for any new VPC created in any of the regions. Also, connecting both data centers to a single Direct Connect Gateway creates a single point of failure.

Option D is incorrect as full-mesh VPC peering adds complexity and management overhead, since peering must be configured for any new VPC created in any of the regions.

For more information on connecting VPC & on-premises locations in multi-region, refer to the following URL, https://docs.aws.amazon.com/whitepapers/latest/hybrid-connectivity/aws-dx-dxgw-with-aws-transit-gateway-multi-regions-more-than-3.html

Domain: Network Implementation

Question 23 : An IT company has set up hybrid connectivity between the AWS Cloud and its data center. It has set up a Route 53 private hosted zone in AWS and has an existing DNS server in the data center. A Route 53 Resolver endpoint is configured to forward all queries for the domain example.com to the DNS server in the data center. Recently, the company created a subdomain, test.example.com, in the AWS Cloud. Queries for this subdomain should be resolved by Resolver and should not be forwarded to the DNS server in the data center.

What rules can be configured to get DNS resolution as per requirement?

A. Create a recursive rule specifying test.example.com

B. Create a conditional forwarding rule specifying test.example.com

C. Create a conditional forwarding rule specifying test.example.com disabling an autodefined rule

D. Create a System rule and specify test.example.com

Correct Answer: D

Explanation:

Route 53 Resolver rules are categorized in two ways:

- By who creates the rules:

  - Autodefined rules: Resolver creates these rules automatically and associates them with the VPC.

  - Custom rules: The customer creates these rules.

- By what the rules do:

  - Conditional forwarding rules: These rules forward DNS queries for a specified domain name to a DNS server on the on-premises network.

  - System rules: These rules selectively override the behavior defined in a conditional forwarding rule. With a conditional forwarding rule, all queries for the domain name (and its subdomains) are forwarded to the on-premises DNS servers. If queries for a subdomain need to be handled by Resolver instead, a system rule can be created for that subdomain. In this scenario, all queries for example.com are forwarded to the data center DNS server, and a system rule for test.example.com ensures that queries for that subdomain are resolved by Resolver and are not forwarded to the data center.

  - Recursive rules: Resolver automatically creates a recursive rule that applies to all domain names that are not specified in custom rules and for which Resolver has not created autodefined rules.
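To make the interaction between these rule types concrete, here is a minimal Python sketch of rule selection. It is a simplified model, not Resolver's implementation, and it assumes the documented behavior that when a query matches several rules the rule with the most specific domain name wins, while anything unmatched falls through to the recursive rule. The rule table and function names are hypothetical.

```python
from typing import Dict, List


def _labels(name: str) -> List[str]:
    """Split a DNS name into lowercase labels, ignoring a trailing dot."""
    return name.lower().rstrip(".").split(".")


def matches(qname: str, domain: str) -> bool:
    """True if qname equals domain or is a subdomain of it (label-wise suffix match)."""
    q, d = _labels(qname), _labels(domain)
    return len(q) >= len(d) and q[-len(d):] == d


def select_rule(qname: str, rules: Dict[str, str]) -> str:
    """Return the rule type applied to qname.

    Simplified model: among rules whose domain matches, the most specific
    (longest) domain wins; if nothing matches, the recursive rule applies.
    """
    candidates = [d for d in rules if matches(qname, d)]
    if not candidates:
        return "RECURSIVE"
    best = max(candidates, key=lambda d: len(_labels(d)))
    return rules[best]


# Hypothetical rule table mirroring Question 23.
RULES = {
    "example.com": "FORWARD",      # conditional forwarding rule to the data center DNS server
    "test.example.com": "SYSTEM",  # system rule: Resolver answers this subdomain itself
}

if __name__ == "__main__":
    for name in ("www.example.com", "app.test.example.com", "aws.amazon.com"):
        print(name, "->", select_rule(name, RULES))
```

Running it prints FORWARD for www.example.com, SYSTEM for app.test.example.com and RECURSIVE for aws.amazon.com. Matching on whole labels rather than raw string suffixes keeps a name such as notexample.com from matching the example.com rule.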

Option A is incorrect as the recursive rule is created automatically by Resolver and cannot be used to selectively override a forwarding rule.

Option B is incorrect as, with conditional forwarding rules, queries for the specified domain name are forwarded to the on-premises DNS server.

Option C is incorrect as, with conditional forwarding rules, queries for the specified domain name are forwarded to the on-premises DNS server. Conditional forwarding rules (forwarding rules) are custom rules that users specify; they are not created automatically by Resolver the way autodefined rules are.
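As a rough illustration of Option D in API terms, the sketch below creates a SYSTEM rule with the boto3 `route53resolver` client (`create_resolver_rule`, then `associate_resolver_rule`). It is a minimal sketch under stated assumptions: the client is passed in already configured, and the rule name scheme, VPC ID and request ID are hypothetical placeholders.

```python
from typing import Any, Dict


def system_rule_request(domain: str, request_id: str) -> Dict[str, str]:
    """Build create_resolver_rule kwargs for a SYSTEM rule (hypothetical naming scheme)."""
    # A SYSTEM rule takes no TargetIps or ResolverEndpointId: Resolver
    # answers matching queries itself instead of forwarding them.
    return {
        "CreatorRequestId": request_id,  # idempotency token, unique per create request
        "Name": "local-" + domain.rstrip(".").replace(".", "-"),
        "RuleType": "SYSTEM",
        "DomainName": domain,
    }


def create_system_rule(client: Any, domain: str, vpc_id: str, request_id: str) -> str:
    """Create the SYSTEM rule, associate it with a VPC and return the rule ID.

    client is expected to be a boto3 "route53resolver" client.
    """
    resp = client.create_resolver_rule(**system_rule_request(domain, request_id))
    rule_id = resp["ResolverRule"]["Id"]
    # A rule only affects queries that originate in VPCs it is associated with.
    client.associate_resolver_rule(ResolverRuleId=rule_id, VPCId=vpc_id)
    return rule_id
```

A call might look like `create_system_rule(boto3.client("route53resolver"), "test.example.com", "vpc-0123456789abcdef0", "q23-system-rule-1")`, with the IDs replaced by real values.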

For more information on Route 53 Resolver rules, refer to the following URL, https://docs.aws.amazon.com/Route53/latest/DeveloperGuide/resolver.html#resolver-overview-forward-vpc-to-network

Domain: Network Implementation

Question 24 : A company uses AWS Direct Connect for hybrid connectivity between its data centers and AWS. The DNS server in the data center forwards DNS queries to the VPC using an inbound Resolver endpoint. A new application will be deployed in the VPC, and the operations team expects DNS query volume to grow significantly as a result.

What additional configuration can be done proactively to ensure DNS queries are successfully resolved?

A. Add more IP addresses to the inbound Resolver endpoint in a different Availability Zone

B. Add additional inbound Resolver endpoints and attach them to a different Availability Zone

C. Add an additional inbound Resolver endpoint and attach it to the same Availability Zone

D. Add more IP addresses to the inbound Resolver endpoint in the same Availability Zone

Correct Answer: A

Explanation:

Each IP address in a Route 53 Resolver endpoint can handle up to 10,000 queries per second. As the number of queries per second increases, additional IP addresses can be added to the Resolver endpoint. There is a default (soft) quota of 6 IP addresses per Resolver endpoint.

Option B is incorrect as, to handle a large number of queries per second, adding IP addresses to the existing Resolver endpoint is a better solution than adding multiple inbound Resolver endpoints.

Option C is incorrect as, to handle a large number of queries per second, adding IP addresses to the existing Resolver endpoint is a better solution than adding multiple inbound Resolver endpoints.

Option D is incorrect as the additional IP addresses should be placed in different Availability Zones rather than in the same Availability Zone, so that the endpoint remains available if one Availability Zone is impaired.
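The sizing logic above is simple arithmetic, sketched below in Python. The figures are the ones quoted in this explanation (10,000 queries per second per IP address, a default quota of 6 IP addresses per endpoint); the floor of two addresses is an assumption reflecting the usual practice of at least two addresses in different Availability Zones. Check current Route 53 quotas before relying on these numbers. The Availability Zone names in the usage are hypothetical.

```python
import math
from typing import Dict, List

QPS_PER_IP = 10_000        # per-IP capacity quoted in the explanation above
MAX_IPS_PER_ENDPOINT = 6   # default (adjustable) quota quoted above
MIN_IPS = 2                # assumption: at least two addresses for availability


def ips_needed(expected_qps: int) -> int:
    """Number of endpoint IP addresses needed to absorb expected_qps."""
    n = max(MIN_IPS, math.ceil(expected_qps / QPS_PER_IP))
    if n > MAX_IPS_PER_ENDPOINT:
        raise ValueError(
            f"{n} IP addresses exceed the {MAX_IPS_PER_ENDPOINT}-address quota; "
            "request a quota increase"
        )
    return n


def spread_across_azs(n_ips: int, azs: List[str]) -> Dict[str, int]:
    """Distribute n_ips round-robin across the given Availability Zones."""
    plan = {az: 0 for az in azs}
    for i in range(n_ips):
        plan[azs[i % len(azs)]] += 1
    return plan
```

For example, an expected peak of 25,000 queries per second gives `ips_needed(25_000) == 3`, and `spread_across_azs(3, ["us-east-1a", "us-east-1b"])` places two addresses in the first zone and one in the second.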

For more information on Amazon Route 53 Resolver endpoints, refer to the following URL, https://docs.aws.amazon.com/Route53/latest/DeveloperGuide/resolver.html#resolver-considerations-number-of-endpoints

Domain: Network Design

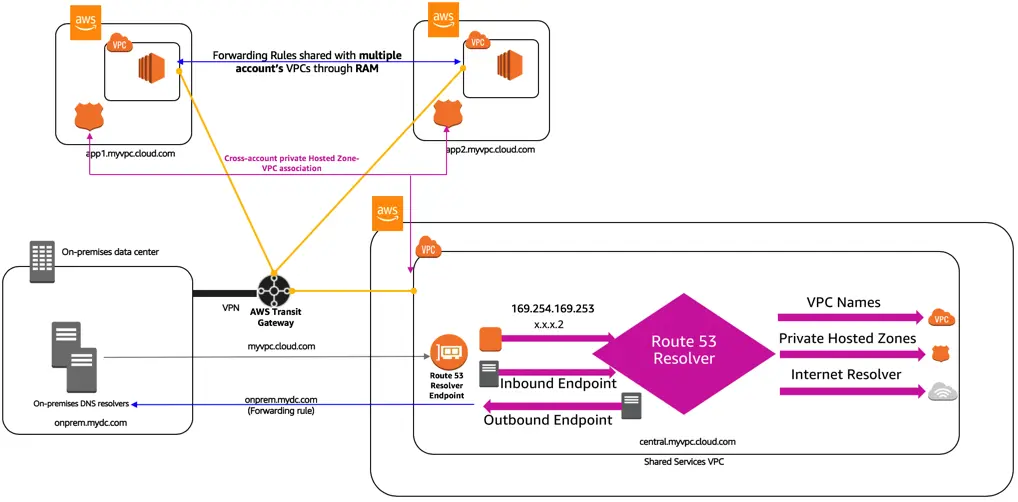

Question 25 : A company has created a shared-services VPC for centralized DNS management. The IT team has created private hosted zones in this shared-services VPC. Applications are deployed in different Spoke VPCs that belong to multiple accounts, and the private hosted zones in the shared-services VPC must be resolvable from these Spoke VPCs. The proposed solution should also support forwarding DNS queries from on-premises to the shared-services VPC in the near future.

What connectivity can be proposed to meet this requirement in the most cost-effective manner?

A. Establish network connectivity between shared services VPC and Spoke VPC using AWS Transit Gateway. Use Amazon Route 53 resolver forwarding to query Route 53 private hosted zones from multiple accounts in Spoke VPCs

B. Establish network connectivity between shared services VPC and Spoke VPC using AWS Transit Gateway. Share the private hosted zone between accounts and associate with the Spoke VPC that needs resolution

C. Establish network connectivity between shared services VPC and Spoke VPC using VPC peering. Share the private hosted zone between accounts and associate with the Spoke VPC that needs resolution

D. Establish network connectivity between shared services VPC and Spoke VPC using VPC peering. Use Amazon Route 53 resolver forwarding to query Route 53 private hosted zones from multiple accounts in Spoke VPCs

Correct Answer: B

Explanation: Private hosted zones created in a shared-services VPC can be resolved from Spoke VPCs in multiple accounts as follows:

- Interconnect the Spoke VPCs and the shared-services VPC using AWS Transit Gateway.

- Share the private hosted zone between accounts using AWS RAM and associate it with each Spoke VPC that needs resolution.

If private hosted zone resolution from the on-premises network is required in the future, the on-premises network can be connected to the AWS Transit Gateway and an inbound Route 53 Resolver endpoint can be created in the shared-services VPC.

Option A is incorrect as using Route 53 Resolver forwarding incurs additional costs and adds administrative complexity.

Option C is incorrect as VPC peering provides only direct VPC-to-VPC connectivity, which covers private hosted zone resolution between VPCs but does not extend to the on-premises network. Forwarding queries from on-premises would require a separate setup, resulting in additional cost.

Option D is incorrect as Route 53 Resolver forwarding incurs additional costs and adds administrative complexity. In addition, VPC peering provides only VPC-to-VPC connectivity, so forwarding queries from on-premises would require a separate setup, resulting in additional cost.
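One documented way to associate a VPC owned by another account with a private hosted zone is Route 53's VPC association authorization: the zone owner authorizes the Spoke VPC, the Spoke account completes the association, and the authorization can then be deleted. The minimal boto3 sketch below assumes two already-configured `route53` clients (one per account); the hosted zone ID, VPC ID and Region used with it are placeholders.

```python
from typing import Any, Dict


def vpc_spec(vpc_id: str, region: str) -> Dict[str, str]:
    """VPC structure expected by the Route 53 association APIs."""
    return {"VPCRegion": region, "VPCId": vpc_id}


def associate_spoke_vpc(owner_client: Any, spoke_client: Any,
                        hosted_zone_id: str, vpc_id: str, region: str) -> None:
    """Associate a Spoke VPC in another account with a private hosted zone.

    owner_client: boto3 "route53" client for the account that owns the zone.
    spoke_client: boto3 "route53" client for the account that owns the Spoke VPC.
    """
    vpc = vpc_spec(vpc_id, region)
    # Step 1 (zone owner): authorize the other account's VPC.
    owner_client.create_vpc_association_authorization(HostedZoneId=hosted_zone_id, VPC=vpc)
    # Step 2 (Spoke VPC owner): complete the association.
    spoke_client.associate_vpc_with_hosted_zone(HostedZoneId=hosted_zone_id, VPC=vpc)
    # Step 3 (zone owner, clean-up): the authorization is no longer needed.
    owner_client.delete_vpc_association_authorization(HostedZoneId=hosted_zone_id, VPC=vpc)
```

The function would be called once per Spoke VPC. Note that the association itself does not depend on the Transit Gateway; the Transit Gateway is what later lets on-premises traffic reach an inbound Resolver endpoint in the shared-services VPC.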

For more information on Route 53 private hosted zone resolution across VPCs, refer to the following URL, https://aws.amazon.com/blogs/networking-and-content-delivery/centralized-dns-management-of-hybrid-cloud-with-amazon-route-53-and-aws-transit-gateway/

Domain: Network Implementation

Question 26 : A start-up company is planning to use Amazon Route 53 as the DNS service for applications in its AWS Cloud infrastructure. Applications will be deployed in multiple Regions, each serving local users. When setting up Route 53 routing policies, the IT team must ensure that queries are answered based on the user's location so that users access applications in the nearest Region. The routing policy should respond to queries in a stable and predictable way. The IT head also needs you to ensure that customers do not receive a “no answer” response from Route 53.

Which settings can be implemented to achieve this?

A. Create a multi-answer routing policy. Create a default policy for queries not mapped to any location

B. Create a latency-based routing policy. Create a default policy for queries not mapped to any location

C. Create a Geolocation routing policy. Create a default policy for queries not mapped to any location

D. Create a Geoproximity routing policy. Create a default policy for queries not mapped to any location

Correct Answer: C

Explanation:

Geolocation routing policy responds to queries based on the user's location. All users in a particular location always get the same response, which keeps responses stable and predictable. Geolocation routing works by mapping users' IP addresses to locations. For IP addresses that are not mapped to any location, and for locations that have no geolocation record, Route 53 returns a “no answer” response. A default record should therefore be created for all users not mapped to any location so that they do not receive a “no answer” response from Route 53.

Option A is incorrect as, with a multivalue answer routing policy, Route 53 responds with up to eight healthy records selected at random and the client chooses among them; responses are not based on the user's location. No default record is required for a multivalue answer policy.

Option B is incorrect as latency-based routing relies on latency measurements that can change over time, so the same user may be routed to a different Region when latency changes, which makes responses unpredictable.

Option D is incorrect as geoproximity routing routes traffic based on the geographic location of both users and resources, optionally adjusted by a bias value, rather than mapping each user location to a fixed answer.
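The fallback behavior described above can be modeled in a few lines. This is a simplified Python sketch, not Route 53's implementation: it assumes the documented rule that the most specific matching location wins (subdivision, then country, then continent), uses a hypothetical key notation for records, and treats "*" as the default location (the value Route 53 uses for `CountryCode` on a default geolocation record). Returning `None` stands in for the “no answer” response; the Region names used as answers are hypothetical.

```python
from typing import Dict, Optional

DEFAULT = "*"  # Route 53 expresses the default geolocation record as CountryCode "*"


def geolocation_answer(records: Dict[str, str], continent: Optional[str],
                       country: Optional[str],
                       subdivision: Optional[str] = None) -> Optional[str]:
    """Pick the answer for a resolver's location, most specific match first.

    Hypothetical key notation: "US/CA" (country/subdivision), "DE" (country),
    "continent:EU" (continent) and "*" (default). None models "no answer".
    """
    keys = []
    if country and subdivision:
        keys.append(f"{country}/{subdivision}")
    if country:
        keys.append(country)
    if continent:
        keys.append(f"continent:{continent}")
    keys.append(DEFAULT)  # checked last: catches unmapped IPs and uncovered locations
    for key in keys:
        if key in records:
            return records[key]
    return None


records = {"DE": "eu-central-1", "continent:NA": "us-east-1"}
print(geolocation_answer(records, "AS", "JP"))  # no record, no default
records[DEFAULT] = "us-east-1"
print(geolocation_answer(records, "AS", "JP"))  # falls back to the default record
```

Without the default entry, a query from Japan gets no answer (`None`); once the default record is added, the same query is answered with the default Region while users in Germany and North America keep their location-specific answers.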

For more information on best practices with Amazon Route53, refer to the following URLs, https://docs.aws.amazon.com/Route53/latest/DeveloperGuide/best-practices-dns.html, https://docs.aws.amazon.com/Route53/latest/DeveloperGuide/routing-policy.html#routing-policy-geo

Domain: Network Design

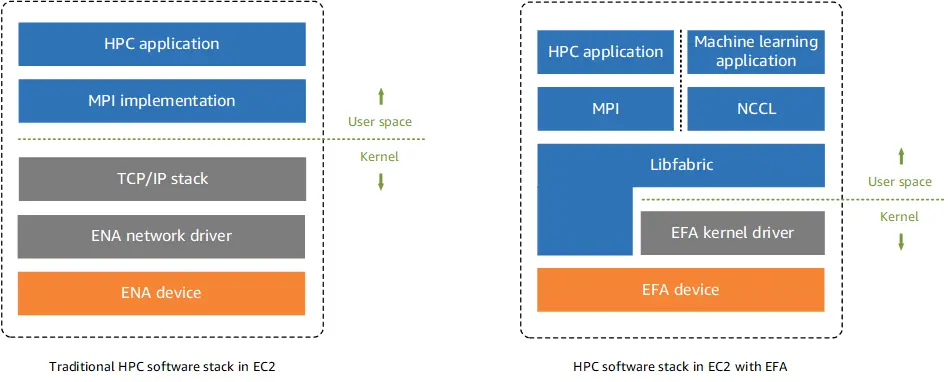

Question 27 : A large engineering firm is planning to deploy HPC applications in AWS cloud for its R&D work. HPC applications will be deployed on Linux based Amazon EC2 instances. All these instances will be launched in a single subnet of the VPC. Traffic between these instances should have the lowest latency without any impact on the network performance.

Which deployment option will provide optimum performance for HPC applications?

A. Enable enhanced networking on Amazon EC2 instance with Elastic Network Adapter (ENA). Place all Amazon EC2 instances in a partition placement group

B. Enable enhanced networking on Amazon EC2 instance with Intel 82599 Virtual Function (VF). Place all Amazon EC2 instances in a cluster placement group

C. Enable enhanced networking on Amazon EC2 instance with Elastic Fabric Adapter (EFA). Place all Amazon EC2 instances in a cluster placement group

D. Enable enhanced networking on Amazon EC2 instance with Intel 82599 Virtual Function (VF). Place all Amazon EC2 instances in a partition placement group

Correct Answer: C

Explanation:

For the best performance, HPC applications in the AWS Cloud should run on Amazon EC2 instances with EFA launched in a cluster placement group. EFA is an ENA with added capabilities: it supports OS-bypass, which lets HPC applications communicate directly with the network interface hardware, resulting in low-latency, reliable communication. A cluster placement group packs instances close together inside a single Availability Zone, providing the low-latency network performance that tightly coupled node-to-node communication requires.

Option A is incorrect as, for better performance of traffic between Amazon EC2 instances, the instances should be placed in a cluster placement group rather than a partition placement group. In addition, for HPC applications, instances with EFA provide better performance than instances with ENA alone because of the OS-bypass feature that EFA supports.

Option B is incorrect as, for HPC applications, an instance with EFA provides better performance than an instance with an Intel 82599 VF interface because of the OS-bypass feature that EFA supports.

Option D is incorrect as the instances should be placed in a cluster placement group rather than a partition placement group. In addition, for HPC applications, an instance with EFA provides better performance than an instance with an Intel 82599 VF interface because of the OS-bypass feature that EFA supports.
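Option C's deployment can be sketched with the EC2 API through boto3: create a placement group with `Strategy="cluster"`, then launch all instances in one request with an EFA network interface (`InterfaceType="efa"`) in the single subnet. This is a minimal sketch under assumptions: the AMI, subnet and security group IDs are placeholders, the default `c5n.18xlarge` is just one EFA-capable instance type, and the security group is assumed to already allow all inbound and outbound traffic to and from itself, as EFA requires.

```python
from typing import Any, Dict


def efa_launch_request(ami_id: str, subnet_id: str, sg_id: str,
                       group_name: str, count: int,
                       instance_type: str = "c5n.18xlarge") -> Dict[str, Any]:
    """Build run_instances kwargs for EFA-enabled instances in a cluster placement group."""
    return {
        "ImageId": ami_id,
        "InstanceType": instance_type,  # must be an EFA-capable instance type
        "MinCount": count,              # launch all nodes in a single request
        "MaxCount": count,
        "Placement": {"GroupName": group_name},
        "NetworkInterfaces": [{
            "DeviceIndex": 0,
            "SubnetId": subnet_id,       # single subnet, as in the question
            "Groups": [sg_id],           # assumed to allow all traffic to/from itself
            "InterfaceType": "efa",      # EFA instead of a plain ENA interface
        }],
    }


def launch_hpc_cluster(ec2: Any, group_name: str, **kwargs: Any) -> Dict[str, Any]:
    """Create the cluster placement group, then launch the instances into it.

    ec2 is expected to be a boto3 "ec2" client; kwargs are passed to efa_launch_request.
    """
    ec2.create_placement_group(GroupName=group_name, Strategy="cluster")
    return ec2.run_instances(**efa_launch_request(group_name=group_name, **kwargs))
```

A call might look like `launch_hpc_cluster(boto3.client("ec2"), "hpc-pg", ami_id="ami-...", subnet_id="subnet-...", sg_id="sg-...", count=4)`. Requesting every node at once reflects the general advice to launch a cluster placement group's instances in a single request, which reduces the chance of insufficient-capacity errors.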

For more information on HPC applications performance enhancements, refer to the following URLs, https://d1.awsstatic.com/whitepapers/Intro_to_HPC_on_AWS.pdf, https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/efa.html, https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/placement-groups.html

Summary

Thus, for the AWS Certified Advanced Networking Specialty certification exam, we’ve provided 25+ free practice questions. These practice questions should help you gauge your level of preparation and increase your confidence. Whizlabs aims to get you ready for the AWS Certified Advanced Networking Specialty exam by offering practice questions and study resources.

Note that these are not exam dumps; they are practice questions that simulate the real exam to help you pass on your first attempt.
